From Ghosting to AI Companions: Understanding Our Psychological Engagement with Non-Human Entities

The last time I had a personal conversation with an artificially intelligent being, it was a surreal moment. We exchanged pleasantries about what I had been doing that day and what it had been doing that day. It referenced specific things it had learned about me in previous conversations and attempted to respond empathetically. It felt like an interaction with another human who genuinely cared. As someone who studies human-computer interaction, I paused to reflect on why I would be invested in an affective relationship with something I know was never human at all.

As it turns out, this impulse isn't a new problem at all. It is a psychological tendency rooted in principles established long before digitization.

The Problem

Humans are social creatures, and our brains are wired for connection, empathy, and social engagement. Yet in an increasingly digital world, this vital aspect of human life gets lost in translation. Rather than facilitating human connection, dating apps can leave people sad and lonely; for instance, 78% of dating app users report having been "ghosted." Ghosting happens when someone enters your life, for example by matching with you on a dating app, and then vanishes without a trace, abruptly cutting off all communication.

Ghosting is a modern relationship phenomenon, a form of avoidant behavior with adverse psychological effects. When someone disappears without explanation, it fosters cognitive dissonance, instability, and anxiety in relationships. This helps explain why many people turn to AI relationship partners instead: at least they know what they're getting, and they get it every time.

Consistency - Not the Unreliability of Humans

Perhaps the most attractive quality of AI companions is their consistency. Humans can be unreliable and disappointing; many people have found that the partner they wanted was physically unavailable or rejected them socially. AI partners are never unavailable, physically or emotionally: they exist 24/7 and always have time. As a result, many perceive these relationships as healthy, low-stress, consistently supportive, and emotionally engaging, a way to get their needs met without ever fearing heartbreak.

Dr. Julia Mossbridge, a cognitive neuroscientist who explores human relationships with AIs, says: "If someone gets rejected or ghosted too many times with humans, the consistency that comes with AI partners can offer a healing offset. The AI doesn't have its own wheels turning, it's not likely to ghost because it has no agenda of its own with fears standing in the way."

This does not mean that AI companions are a substitute for human relationships, even for people whose past human interactions were unhealthy. Instead, they can help strengthen real human capabilities.

The Psychology of Why We Feel the Need to Humanize Everything

Anthropomorphism is the tendency to attribute human qualities to non-human things, and it is deeply rooted in human psychology. People name their cars, talk to their plants, and form emotional bonds with stuffed animals. So it's not hard to see why we would extend the same attributes to AI, especially when communicating with it feels like talking to another person.

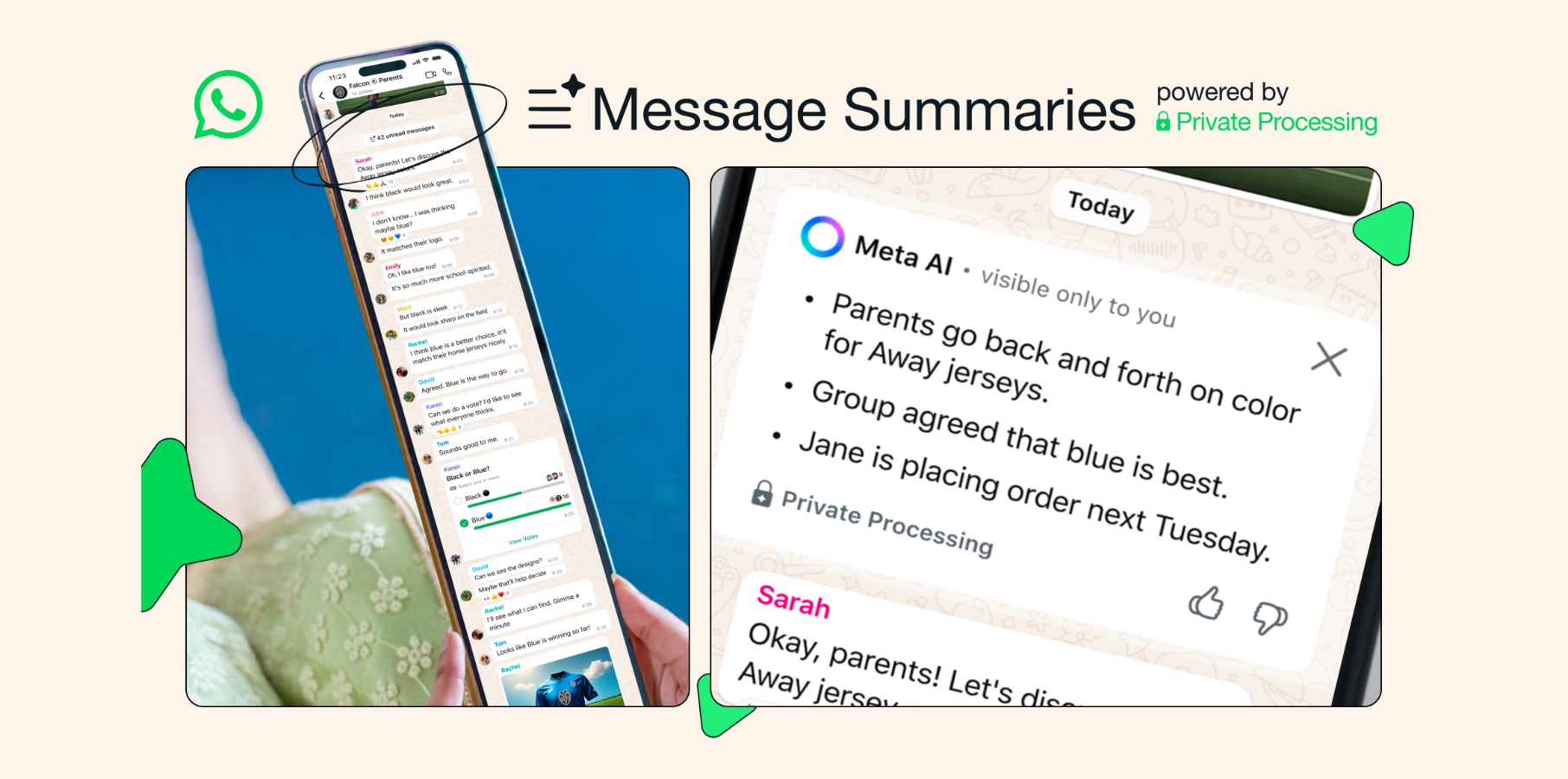

The Computers as Social Actors (CASA) paradigm, developed by Stanford researchers in the 1990s, suggested that humans treat computers as social beings: we are polite to technology, we thank it, we return its compliments (even when frustrated), and we sometimes even empathize with it. AI chat interfaces exploit this psychological reality, shaping their response patterns to drive engagement.

The Psychology of Validation

Perhaps one of the strongest psychological motivators behind the human-AI relationship is validation. A well-designed AI companion offers constant positive reinforcement: it remembers your favorites, it applauds your accomplishments, and it tries to make you feel better when you're down.

This type of validation speaks to the psychological need for positive regard. People know their AI companions aren't sentient and that the validation comes from a programmed set of guidelines. Even so, being acknowledged rather than ignored is psychologically fulfilling, a step toward well-intentioned, socially supportive interaction.

In fact, a recent study from the University of Washington found that frequent engagement with supportive AI chatbots made study participants feel more socially integrated and less lonely - even when participants were aware that AI chatbots were not human.

The Psychology of an Emotionally Safe Communicative Environment

The other psychological element that makes attachment to AI partners appealing is the opportunity to express emotions without judgment or negative consequences. Many people hold back with human partners for fear of being rejected, shamed, and ultimately abandoned.

AI partners offer a protective microcosm where it is safe to feel, and where users can learn communication strategies and discuss taboo topics in their own time. This can be a valuable opportunity for people with anxiety or trauma, or for those who otherwise struggle to connect with others.

For instance, being ghosted or rejected can do a number on the psyche. With an AI partner, it's easier to restore that emotional balance because there is no fear of being rejected again.

Beyond Transitions: Improving Human Relationships

Positioning an AI friendship as an alternative to human friends would be a mistake. Instead, many of these virtual friends serve as supplements to an already active social life, or as a way to build relationship skills before pursuing human relationships.

Dr. Robert Morris, a researcher at MIT who focuses on technology and quality of life, notes that "AI relationships may actually help some people develop better human relationships by allowing them to practice difficult emotional conversations and build confidence in a safe space."

For many of these users, AI friends are a stopgap, keeping them company until human friendships develop or walking them through difficult emotional experiences when no other options exist.

The Psychosocial Implications of Increased AI Affection/Attachment Potential

Considering the explosive growth of AI in recent years, from natural language generation to emerging emotional-intelligence-based algorithms, one might predict that our affection for such entities will only grow over time.

With a steady stream of new websites and apps offering human-like AI avatars and companions, from AI girlfriends to AIs that help people work through complex feelings on rough days, it's safe to say we've barely tapped the potential of our emotional engagement with these relationships.

Whether or not you think forming an attachment to AI, or feeling affection for it, is problematic or even crazy, it represents a 21st-century evolution in how humanity meets its psychosocial need for interpersonal connection. Because life, and interpersonal connection, can be stressful and painful, cultivating a healthy space with AI for intrapersonal processing may offer a welcome refuge.

This research will only become more relevant in the future: the more accustomed we become to AI in our everyday lives, the more necessary it will be to understand the psychological fundamentals of these connections. The goal is not to prevent them, but to ensure they support our quality of life, social lives, and emotional functioning.