Some AI Prompts Generate 50 Times More Carbon Emissions Than Others

Whether you use OpenAI’s ChatGPT, Google’s Gemini, or any other chatbot, every prompt you type triggers a chain of activity behind the scenes. A data center somewhere works a little harder, pulling energy from the grid, giving off heat, and adding to a toll on the planet that often goes unnoticed. It’s a quiet environmental cost built into the convenience of everyday AI use. According to the International Energy Agency (IEA), a simple prompt using ChatGPT consumes 10 times more electricity compared to a Google search. A separate study published in Frontiers in Communication found that certain advanced AI prompts can generate up to 50 times more CO₂ emissions than others, depending on the model used. This happens because AI models like ChatGPT process prompts using billions of parameters, requiring far more computation per token. Each token generated involves multiple layers of neural network operations, making it much more energy-intensive than retrieving search results. The electricity needed to power the world’s data infrastructure is only going in one direction, and that is up. The 2024 Report on U.S. Data Center Energy Use produced by Lawrence Berkeley National Laboratory (LBNL), tracks trends in data center electricity consumption from 2014 through projections for 2028. According to the report, energy demand from data centers has tripled over the past decade and is on course to double, even triple, by 2028. When you type a prompt into an AI, it breaks the text into small pieces called tokens. These tokens are turned into numbers so the system can understand and respond. That process uses a lot of computing power, and a byproduct of this is CO₂ emissions, which are a primary culprit behind global warming, melting ice caps, fueling extreme weather, and pushing the planet toward irreversible climate collapse. To shed light on this, the authors of the Frontiers of Communication study conducted a comparative study of several widely used language models, analyzing the CO₂ emissions generated by each when responding to a standardized set of prompts. The researchers compared 14 large language models, ranging in size from 7 billion to 72 billion parameters, and measured the CO₂ emissions each produced when responding to 1,000 standardized questions. Across the board, the process of generating answers came with a measurable carbon footprint. Reasoning models stood out for producing an average of 543.5 internal tokens per question, while more concise models used just 37.7. These internal or “thinking” tokens represent the behind-the-scenes steps a model takes before presenting a response, with each one carrying a higher energy cost. The subject matter was also a key factor. Researchers found that when a prompt required a longer reasoning process, such as questions about abstract algebra or philosophy, the models produced up to six times more CO₂ emissions compared to simpler topics like high school history. More complex questions led to more tokens, more computation, and ultimately a much larger environmental footprint. "The environmental impact of questioning trained LLMs is strongly determined by their reasoning approach, with explicit reasoning processes significantly driving up energy consumption and carbon emissions," said first author Maximilian Dauner, a researcher at Hochschule München University of Applied Sciences and first author of the study. "We found that reasoning-enabled models produced up to 50 times more CO₂ emissions than concise response models." The study revealed a surprising gap in emissions between two similarly sized AI models. DeepSeek R1, which runs on 70 billion parameters, was estimated to produce the same amount of CO₂ as a round-trip flight from London to New York after answering 600,000 questions. Qwen 2.5, which also uses 72 billion parameters, handled nearly three times as many questions with similar accuracy while generating about the same level of emissions. The researchers were careful to point out that these differences may not be due to the models alone. The results could be shaped by the type of hardware used in the tests, the local energy mix powering the data centers, and other technical variables. In other words, where and how an AI model runs can be just as important as how big or advanced it is. While the technical factors matter, the researchers also believe users have a role to play in reducing AI’s environmental impact. "Users can significantly reduce emissions by prompting AI to generate concise answers or limiting the use of high-capacity models to tasks that genuinely require that power," Dauner explained. Even small adjustments in how people interact with AI can add up. Across millions of users and queries, those choices have the potential to ease the growing burden on energy systems and data infrastructure. Dauner also emphasized the importance of transparency in AI usage, noting that clearer information could shape user behavior: "If users know the exact C

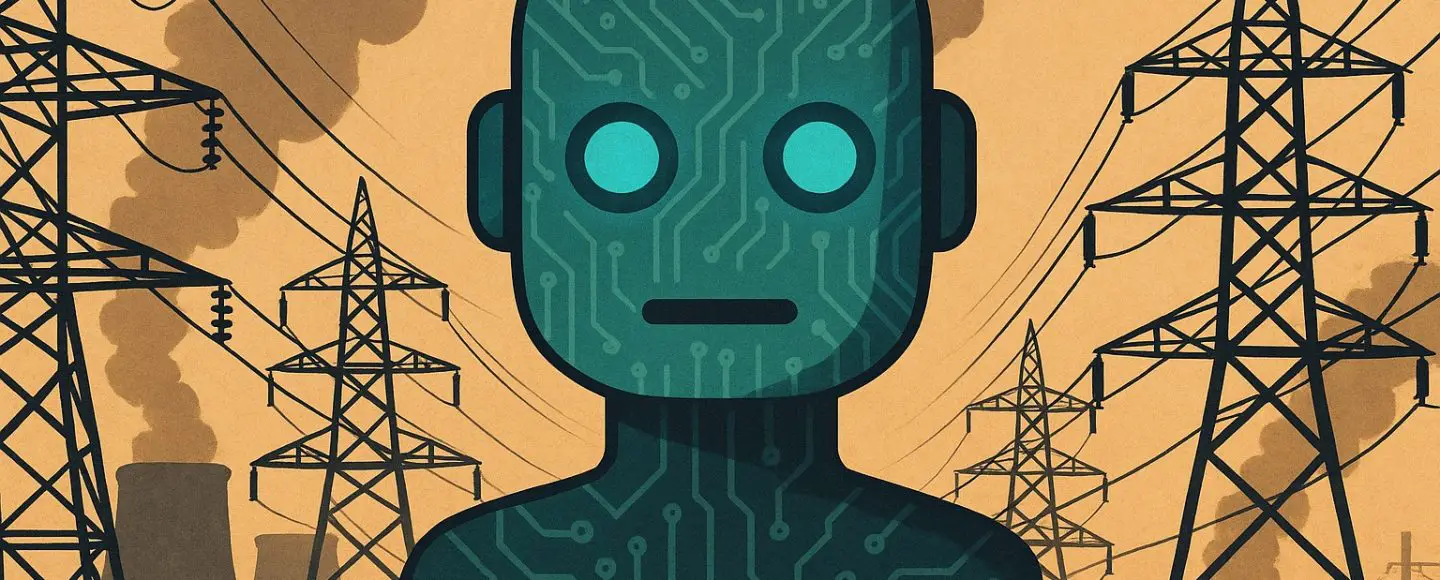

Whether you use OpenAI’s ChatGPT, Google’s Gemini, or any other chatbot, every prompt you type triggers a chain of activity behind the scenes. A data center somewhere works a little harder, pulling energy from the grid, giving off heat, and adding to a toll on the planet that often goes unnoticed. It’s a quiet environmental cost built into the convenience of everyday AI use.

According to the International Energy Agency (IEA), a simple prompt using ChatGPT consumes 10 times more electricity compared to a Google search. A separate study published in Frontiers in Communication found that certain advanced AI prompts can generate up to 50 times more CO₂ emissions than others, depending on the model used.

This happens because AI models like ChatGPT process prompts using billions of parameters, requiring far more computation per token. Each token generated involves multiple layers of neural network operations, making it much more energy-intensive than retrieving search results.

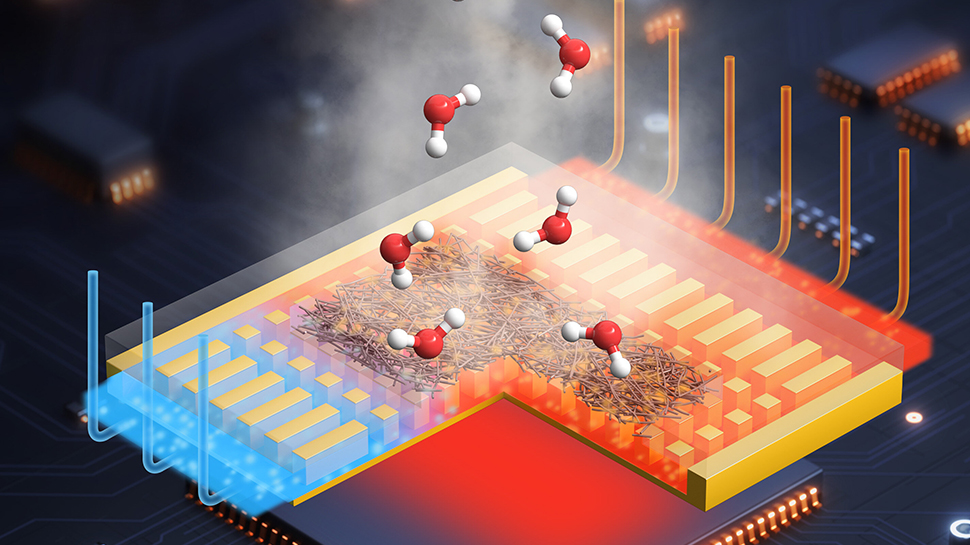

The electricity needed to power the world’s data infrastructure is only going in one direction, and that is up. The 2024 Report on U.S. Data Center Energy Use produced by Lawrence Berkeley National Laboratory (LBNL), tracks trends in data center electricity consumption from 2014 through projections for 2028. According to the report, energy demand from data centers has tripled over the past decade and is on course to double, even triple, by 2028.

When you type a prompt into an AI, it breaks the text into small pieces called tokens. These tokens are turned into numbers so the system can understand and respond. That process uses a lot of computing power, and a byproduct of this is CO₂ emissions, which are a primary culprit behind global warming, melting ice caps, fueling extreme weather, and pushing the planet toward irreversible climate collapse.

When you type a prompt into an AI, it breaks the text into small pieces called tokens. These tokens are turned into numbers so the system can understand and respond. That process uses a lot of computing power, and a byproduct of this is CO₂ emissions, which are a primary culprit behind global warming, melting ice caps, fueling extreme weather, and pushing the planet toward irreversible climate collapse.

To shed light on this, the authors of the Frontiers of Communication study conducted a comparative study of several widely used language models, analyzing the CO₂ emissions generated by each when responding to a standardized set of prompts.

The researchers compared 14 large language models, ranging in size from 7 billion to 72 billion parameters, and measured the CO₂ emissions each produced when responding to 1,000 standardized questions. Across the board, the process of generating answers came with a measurable carbon footprint.

Reasoning models stood out for producing an average of 543.5 internal tokens per question, while more concise models used just 37.7. These internal or “thinking” tokens represent the behind-the-scenes steps a model takes before presenting a response, with each one carrying a higher energy cost.

The subject matter was also a key factor. Researchers found that when a prompt required a longer reasoning process, such as questions about abstract algebra or philosophy, the models produced up to six times more CO₂ emissions compared to simpler topics like high school history. More complex questions led to more tokens, more computation, and ultimately a much larger environmental footprint.

"The environmental impact of questioning trained LLMs is strongly determined by their reasoning approach, with explicit reasoning processes significantly driving up energy consumption and carbon emissions," said first author Maximilian Dauner, a researcher at Hochschule München University of Applied Sciences and first author of the study. "We found that reasoning-enabled models produced up to 50 times more CO₂ emissions than concise response models."

The study revealed a surprising gap in emissions between two similarly sized AI models. DeepSeek R1, which runs on 70 billion parameters, was estimated to produce the same amount of CO₂ as a round-trip flight from London to New York after answering 600,000 questions. Qwen 2.5, which also uses 72 billion parameters, handled nearly three times as many questions with similar accuracy while generating about the same level of emissions.

The researchers were careful to point out that these differences may not be due to the models alone. The results could be shaped by the type of hardware used in the tests, the local energy mix powering the data centers, and other technical variables. In other words, where and how an AI model runs can be just as important as how big or advanced it is.

While the technical factors matter, the researchers also believe users have a role to play in reducing AI’s environmental impact. "Users can significantly reduce emissions by prompting AI to generate concise answers or limiting the use of high-capacity models to tasks that genuinely require that power," Dauner explained.

Even small adjustments in how people interact with AI can add up. Across millions of users and queries, those choices have the potential to ease the growing burden on energy systems and data infrastructure.

Dauner also emphasized the importance of transparency in AI usage, noting that clearer information could shape user behavior: "If users know the exact CO₂ cost of their AI-generated outputs, such as casually turning themselves into an action figure, they might be more selective and thoughtful about when and how they use these technologies."

At the same time, the study makes clear that the responsibility cannot rest with users alone. Developers and companies play a central role in shaping how AI is integrated into products and services. As generative tools continue to be embedded across platforms, often without much scrutiny or clear purpose, there is a growing need to ask whether these integrations actually serve a meaningful function.

With climate and environmental concerns not a clear priority in the current policy landscape, the onus falls largely on the industry itself. Users, developers, and companies will need to take the lead in building and applying AI more thoughtfully, with sustainability in mind.

![[The AI Show Episode 155]: The New Jobs AI Will Create, Amazon CEO: AI Will Cut Jobs, Your Brain on ChatGPT, Possible OpenAI-Microsoft Breakup & Veo 3 IP Issues](https://www.marketingaiinstitute.com/hubfs/ep%20155%20cover.png)

.jpg?#)

.png?width=1920&height=1920&fit=bounds&quality=70&format=jpg&auto=webp#)

_Vladimir_Stanisic_Alamy.jpg?width=1280&auto=webp&quality=80&disable=upscale#)

_Design_Pics_Inc_Alamy.jpg?width=1280&auto=webp&quality=80&disable=upscale#)

![Latest Galaxy Z Fold 7 leak leaves very little to the imagination [Gallery]](https://i0.wp.com/9to5google.com/wp-content/uploads/sites/4/2025/06/galaxy-z-fold-7-teaser-1.jpg?resize=1200%2C628&quality=82&strip=all&ssl=1)

![Mercedes, Audi, Volvo Reject Apple's New CarPlay Ultra [Report]](https://www.iclarified.com/images/news/97711/97711/97711-640.jpg)

![Apple Considers LX Semicon and LG Innotek Components for iPad OLED Displays [Report]](https://www.iclarified.com/images/news/97699/97699/97699-640.jpg)