IBM Think 2025: Download a Sneak Peek of the Next Gen Granite Models

At IBM Think 2025, IBM released Granite 4.0 Tiny Preview, a preliminary version of the smallest model in the upcoming Granite 4.0 family of language models, to the open source community. IBM Granite models are a series of AI foundation models. They were initially intended for use, alongside other models, in Watsonx, IBM’s cloud-based data and generative AI platform, and IBM has since opened the source code of some of its code models. IBM Granite models are trained on datasets curated from the Internet, academic publications, code datasets, and legal and finance documents. The following is based on the IBM Think news announcement.

At FP8 precision, Granite 4.0 Tiny Preview is extremely compact and compute-efficient: several concurrent sessions performing long-context (128K) tasks can run on consumer-grade hardware, including consumer GPUs.
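As a rough, back-of-envelope sketch (an illustration, not IBM’s methodology), weight memory is simply parameter count times bytes per parameter, and FP8 stores one byte per parameter. Because a mixture of experts model must keep every expert resident even though only about 1B parameters are active per token, the 7B total parameters cited in the architecture section set the weight footprint. The sketch ignores activations, KV cache, SSM state, and runtime overhead, and it does not attempt to reproduce IBM’s memory comparison.

```python
# Back-of-envelope weight memory: parameter count x bytes per parameter.
# Assumption: ignores activations, KV cache, SSM state, and runtime overhead.
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "fp8": 1}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Approximate weight memory in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


# In an MoE model every expert must stay resident, so use the 7B total
# parameters here, not the ~1B active per token.
TOTAL_PARAMS = 7e9
for precision in ("bf16", "fp8"):
    print(f"{precision}: ~{weight_memory_gb(TOTAL_PARAMS, precision):.0f} GB of weights")
```

Under these assumptions, FP8 halves the weight footprint relative to BF16 (about 7 GB versus 14 GB), which leaves more of a consumer GPU’s memory free for long contexts and concurrent sessions.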

Though the model is only partially trained (it has seen only 2.5T of a planned 15T or more training tokens), it already offers performance rivaling that of IBM Granite 3.3 2B Instruct despite having fewer active parameters and a roughly 72% reduction in memory requirements. IBM expects Granite 4.0 Tiny’s performance to be on par with that of Granite 3.3 8B Instruct by the time it has completed training and post-training.

As its name suggests, Granite 4.0 Tiny will be among the smallest offerings in the Granite 4.0 model family. It will be officially released this summer as part of a model lineup that also includes Granite 4.0 Small and Granite 4.0 Medium. Granite 4.0 continues IBM’s commitment to making efficiency and practicality the cornerstone of its enterprise LLM development.

This preliminary version of Granite 4.0 Tiny is now available on Hugging Face under a standard Apache 2.0 license. IBM’s intent is to let GPU-poor developers experiment and tinker with the model on consumer-grade GPUs. Support for the model’s novel architecture is still pending in Hugging Face transformers and vLLM, and IBM expects both projects to add it shortly. Official support for running this model locally through platform partners, including Ollama and LM Studio, is expected in time for the full model release later this summer.

Enterprise Performance on Consumer Hardware

IBM also mentions that LLM memory requirements are often provided, literally and figuratively, without proper context. It’s not enough to know that a model can be successfully loaded into your GPU(s): you need to know that your hardware can handle the model at the context lengths that your use case requires.

Furthermore, many enterprise use cases entail not a single model deployment but batch inferencing of multiple concurrent instances. Therefore, IBM endeavors to measure and report memory requirements with long context and concurrent sessions in mind.
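To see why context length and concurrency matter so much, here is a minimal sketch of the standard KV-cache size estimate for transformer attention layers. The layer count, KV-head count, and head dimension below are hypothetical values chosen for illustration; they are not Granite’s dimensions, and the estimate leaves out weights and other runtime memory.

```python
def kv_cache_bytes(num_attn_layers: int, num_kv_heads: int, head_dim: int,
                   context_len: int, bytes_per_elem: int = 2,
                   num_sessions: int = 1) -> int:
    """Standard KV-cache size estimate for transformer attention layers.

    The leading 2 accounts for storing one key and one value vector per
    KV head, per attention layer, per token, for every concurrent session.
    """
    return (2 * num_attn_layers * num_kv_heads * head_dim
            * context_len * bytes_per_elem * num_sessions)


# Hypothetical all-attention configuration; these are NOT Granite's dimensions.
CONFIG = {"num_attn_layers": 40, "num_kv_heads": 8, "head_dim": 128}
CONTEXT = 128 * 1024  # 128K tokens

for sessions in (1, 4):
    gib = kv_cache_bytes(**CONFIG, context_len=CONTEXT, num_sessions=sessions) / 2**30
    print(f"{sessions} session(s) at 128K context: {gib:.0f} GiB of KV cache")
```

With these made-up dimensions, one 128K session needs 20 GiB of KV cache at 16-bit precision and four sessions need 80 GiB, on top of the weights. The cache grows linearly with both context length and session count, which is why knowing only that the weights fit says little about a real deployment.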

In that respect, IBM believes Granite 4.0 Tiny is one of today’s most memory-efficient language models. Even with very long contexts and several concurrent instances, Granite 4.0 Tiny can easily run on a modest consumer GPU.

An All-new Hybrid MoE Architecture

Whereas prior generations of Granite LLMs used a conventional transformer architecture, all models in the Granite 4.0 family adopt a new hybrid Mamba-2/Transformer architecture, marrying the speed and efficiency of Mamba with the precision of transformer-based self-attention. Granite 4.0 Tiny Preview is a fine-grained hybrid mixture of experts (MoE) model, with 7B total parameters and only 1B active parameters at inference time.

Many innovations informing the Granite 4 architecture arose from IBM Research’s collaboration with the original Mamba creators on Bamba, an experimental open-source hybrid model whose successor (Bamba v2) was released earlier this week.

A Brief History of Mamba Models

Mamba is a type of state space model (SSM) introduced in 2023, about six years after the debut of transformers in 2017.

SSMs are conceptually similar to the recurrent neural networks (RNNs) that dominated natural language processing (NLP) in the pre-transformer era. They were originally designed to predict the next state of a continuous sequence (like an electrical signal) using only information from the current state, previous state, and range of possibilities (the state space). Though they’ve been used across several domains for decades, SSMs share certain shortcomings with RNNs that, until recently, limited their potential for language modeling.
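For readers who want the notation (standard in the SSM literature rather than taken from this announcement), a discretized linear SSM carries a hidden state $h_t$ forward one step at a time, where $x_t$ is the input and $y_t$ the output at step $t$:

$$
h_t = \bar{A}\, h_{t-1} + \bar{B}\, x_t, \qquad y_t = C\, h_t
$$

Here $\bar{A}$ and $\bar{B}$ are obtained by discretizing continuous-time parameters $A$ and $B$ with a step size $\Delta$ (for example, $\bar{A} = \exp(\Delta A)$ under a zero-order hold). In a classic SSM these matrices are the same at every step, so every input is folded into the state in the same way regardless of its content.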

Unlike the self-attention mechanism of transformers, conventional SSMs have no inherent ability to selectively focus on or ignore specific pieces of contextual information. So in 2023, Carnegie Mellon’s Albert Gu and Princeton’s Tri Dao introduced a type of structured state space sequence (“S4”) neural network that adds a selection mechanism and a scan method (for computational efficiency)—abbreviated as an “S6” model—and achieved language modeling results competitive with transformers. They nicknamed their model “Mamba” because, among other reasons, all of those S’s sound like a snake’s hiss.
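The selection idea can be sketched in a few lines. This toy version assumes a single scalar state and makes only the step size Δ depend on the input; Mamba also makes B and C input-dependent and uses a hardware-aware parallel scan, neither of which is shown. The function and parameter names (`selective_scan`, `w_delta`, `bias_delta`) and all default values are hypothetical.

```python
import math


def softplus(z: float) -> float:
    """Numerically stable softplus, used here to produce positive step sizes."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def selective_scan(xs, a=-1.0, b=1.0, c=1.0, w_delta=2.0, bias_delta=0.0):
    """Toy one-dimensional selective SSM scan (illustrative only).

    The step size delta_t is computed from the current input x_t, so each token
    controls how much of the running state it overwrites: a large delta decays
    the old state and writes the new input, while a near-zero delta leaves the
    state almost untouched. All parameter values are hypothetical.
    """
    h = 0.0  # fixed-size state: one number here, no matter how long xs is
    ys = []
    for x in xs:
        delta = softplus(w_delta * x + bias_delta)  # input-dependent step size > 0
        a_bar = math.exp(delta * a)                 # decay factor in (0, 1) since a < 0
        b_bar = delta * b                           # simplified discretization of B
        h = a_bar * h + b_bar * x                   # selective state update
        ys.append(c * h)
    return ys


print(selective_scan([1.0, -10.0, 0.5]))
```

In the example call, the second token (-10.0) produces a near-zero step size, so the state passes through almost unchanged: the model has effectively chosen to ignore that token, something a fixed $\bar{A}$, $\bar{B}$ cannot do.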

In 2024, Gu and Dao released Mamba-2, a simplified and optimized implementation of the Mamba architecture. Equally importantly, their technical paper fleshed out the compatibility between SSMs and self-attention.

Mamba-2 vs. Transformers

Mamba’s major advantages over transformer-based models center on efficiency and speed.

Transformers have a crucial weakness: the computational requirements of self-attention scale quadratically with context length. In other words, each time your context length doubles, the attention mechanism doesn’t just use double the resources; it uses quadruple the resources. This “quadratic bottleneck” increasingly throttles speed and performance as the context window (and the corresponding KV cache) grows.

Conversely, Mamba’s computational needs scale linearly: if you double the length of an input sequence, Mamba uses only double the resources. Whereas self-attention must repeatedly compute the relevance of every previous token to each new token, Mamba simply maintains a condensed, fixed-size “summary” of the context seen so far. As the model “reads” each new token, it determines that token’s relevance, then updates (or doesn’t update) the summary accordingly. Essentially, whereas self-attention retains every bit of information and then weights the influence of each based on its relevance, Mamba selectively retains only the relevant information.
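To make the quadratic-versus-linear contrast concrete, the sketch below only counts operations. It assumes a single causal attention head and one state update per token, and it ignores constant factors such as head dimension and state size, so the numbers show growth rates rather than real costs.

```python
def causal_attention_scores(n_tokens: int) -> int:
    """Query-key scores computed by one causal self-attention head:
    token i is scored against all i tokens seen so far (itself included)."""
    return n_tokens * (n_tokens + 1) // 2


def recurrent_state_updates(n_tokens: int) -> int:
    """A Mamba-style recurrence performs one fixed-size state update per token."""
    return n_tokens


for n in (4_096, 8_192, 16_384):
    attn = causal_attention_scores(n)
    ssm = recurrent_state_updates(n)
    print(f"{n:>6} tokens: {attn:>12,} attention scores vs {ssm:>6,} state updates")

# Doubling the sequence roughly quadruples attention work but only doubles SSM work.
print(causal_attention_scores(8_192) / causal_attention_scores(4_096))  # ~4.0
print(recurrent_state_updates(8_192) / recurrent_state_updates(4_096))  # 2.0
```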

While transformers are more memory-intensive and computationally redundant, self-attention has its own advantages. For instance, research has shown that transformers still outpace Mamba and Mamba-2 on tasks requiring in-context learning (such as few-shot prompting), copying, or long-context reasoning.

The Best of Both Worlds

Fortunately, the respective strengths of transformers and Mamba are not mutually exclusive. In the original Mamba-2 paper, authors Dao and Gu suggest that a hybrid model could exceed the performance of a pure transformer or SSM, a notion validated by Nvidia research from last year. To explore this further, IBM Research collaborated with Dao and Gu themselves, along with Minjia Zhang of the University of Illinois at Urbana-Champaign (UIUC), on Bamba and Bamba v2. Bamba, in turn, informed many of the architectural elements of Granite 4.0.

The Granite 4.0 MoE architecture employs nine Mamba blocks for every transformer block. In essence, the selectivity mechanisms of the Mamba blocks efficiently capture global context, which is then passed to transformer blocks that enable a more nuanced parsing of local context. The result is a dramatic reduction in memory usage and latency with no apparent tradeoff in performance.
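The source gives only the 9:1 ratio, so the following sketch assumes that each attention block directly follows its group of nine Mamba blocks and picks an arbitrary depth of four groups. It illustrates the pattern, not Granite’s actual layer order or depth; `hybrid_layer_schedule` is a hypothetical helper.

```python
def hybrid_layer_schedule(num_groups: int, mamba_per_group: int = 9) -> list[str]:
    """Hypothetical layer ordering for a hybrid stack: each group holds
    `mamba_per_group` Mamba blocks followed by one attention block."""
    layers: list[str] = []
    for _ in range(num_groups):
        layers.extend(["mamba"] * mamba_per_group)
        layers.append("attention")
    return layers


schedule = hybrid_layer_schedule(num_groups=4)  # 4 groups is an arbitrary choice
print(len(schedule), schedule.count("mamba"), schedule.count("attention"))  # 40 36 4

# Only attention blocks keep a per-token key/value cache; Mamba blocks keep a
# fixed-size state, so the cache that grows with context lives in 1 of every 10 blocks.
attention_fraction = schedule.count("attention") / len(schedule)
print(attention_fraction)  # 0.1
```

The last two lines make the memory argument explicit: with this ratio, only a tenth of the blocks hold state that grows with context length.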

Granite 4.0 Tiny doubles down on these efficiency gains by implementing them within a compact, fine-grained mixture of experts (MoE) framework, comprising 7B total parameters and 64 experts, yielding 1B active parameters at inference time. Further details are available in Granite 4.0 Tiny Preview’s Hugging Face model card.
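Only the experts the router selects run for a given token, which is how a model with 7B total parameters can activate about 1B per token. The sketch below shows one common routing scheme (softmax over router scores, then top-k selection and renormalization); Granite’s actual router may differ. The 64 experts come from the text above, while `TOP_K = 6` is a hypothetical value (the model card is the place to check) and the router scores are made-up stand-ins.

```python
import math


def route_token(router_logits: list[float], top_k: int) -> list[tuple[int, float]]:
    """Pick the top_k experts for one token and renormalize their gate weights.

    Returns (expert_index, weight) pairs, highest weight first, summing to 1.
    """
    # Softmax over all experts (subtract the max for numerical stability).
    m = max(router_logits)
    exps = [math.exp(z - m) for z in router_logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    # Keep only the top_k experts; all other experts stay idle for this token.
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)
    return [(i, probs[i] / norm) for i in chosen]


NUM_EXPERTS = 64  # from the model description
TOP_K = 6         # hypothetical value for illustration

logits = [math.sin(i) for i in range(NUM_EXPERTS)]  # stand-in router scores
routes = route_token(logits, TOP_K)
print(routes)
```

The token’s output would then be the weighted sum of the chosen experts’ outputs, so compute per token tracks the active parameters while memory still tracks the total.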

Unconstrained Context Length

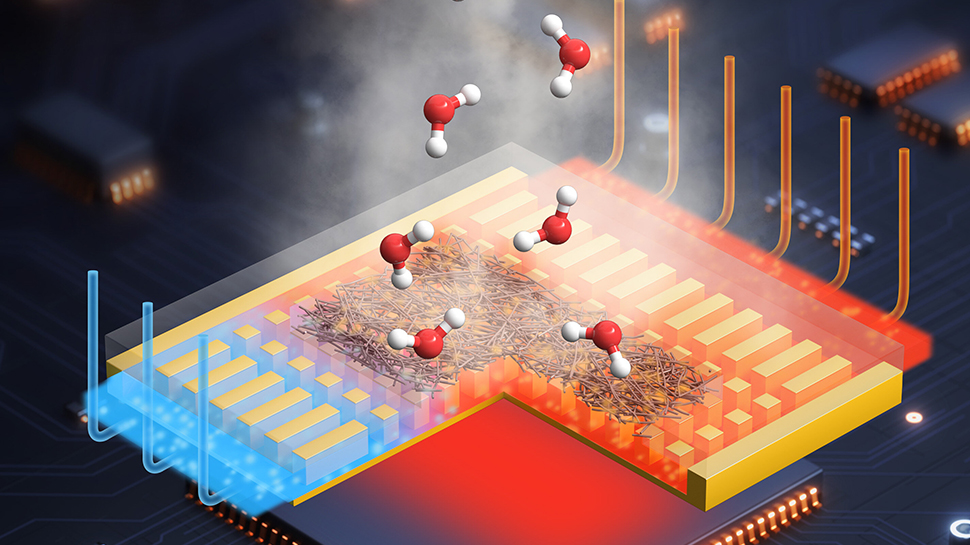

One of the more tantalizing aspects of SSM-based language models is their theoretical ability to handle infinitely long sequences. However, due to practical constraints, the word “theoretical” typically does a lot of heavy lifting.

One of those constraints, especially for hybrid-SSM models, comes from the positional encoding (PE) used to represent information about the order of words. PE adds computational steps, and research has shown that models using PE techniques such as rotary positional encoding (RoPE) struggle to generalize to sequences longer than they’ve seen in training.
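For a concrete sense of the extra step that PE adds, here is a minimal sketch of RoPE in its common formulation (pairwise rotations with base 10000), next to NoPE, which simply leaves queries and keys alone. It is a generic illustration with made-up vectors, not Granite’s or any library’s implementation.

```python
import math


def rope(vec: list[float], position: int, base: float = 10000.0) -> list[float]:
    """Rotary positional encoding (RoPE): rotate each (even, odd) pair of
    dimensions by an angle proportional to the token's position."""
    d = len(vec)
    if d % 2:
        raise ValueError("RoPE needs an even vector dimension")
    out = list(vec)
    for i in range(0, d, 2):
        theta = position * base ** (-i / d)  # lower-index pairs rotate faster
        cos_t, sin_t = math.cos(theta), math.sin(theta)
        out[i] = vec[i] * cos_t - vec[i + 1] * sin_t
        out[i + 1] = vec[i] * sin_t + vec[i + 1] * cos_t
    return out


def nope(vec: list[float], position: int) -> list[float]:
    """No positional encoding: queries and keys pass through unchanged."""
    return list(vec)


def dot(u: list[float], v: list[float]) -> float:
    return sum(a * b for a, b in zip(u, v))


q, k = [1.0, 0.0, 0.5, 2.0], [0.3, 1.0, 1.0, -1.0]
# With RoPE the query-key score depends only on the relative offset (here 2).
print(dot(rope(q, 5), rope(k, 3)), dot(rope(q, 12), rope(k, 10)))
# With NoPE the score is identical at every position; order information
# has to come from other parts of the model.
print(dot(nope(q, 5), nope(k, 3)))
```

RoPE’s rotation runs on every query and key in every attention layer, while NoPE skips it entirely.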

The Granite 4.0 architecture uses no positional encoding (NoPE). IBM testing convincingly demonstrates that this has had no adverse effect on long-context performance. IBM has already validated Tiny Preview’s long-context performance for at least 128K tokens and expects to validate similar performance on significantly longer context lengths by the time the model has completed training and post-training. A key challenge in definitively validating performance on tasks in the neighborhood of 1M-token context is the scarcity of suitable datasets.

The other practical constraint on Mamba context length is compute. Linear scaling is better than quadratic scaling, but still adds up eventually. Here again, Granite 4.0 Tiny has two key advantages:

- Unlike PE, NoPE adds no computational burden to the attention mechanism in the model’s transformer layers.

- Granite 4.0 Tiny is extremely compact and efficient, leaving plenty of hardware headroom for linear scaling.

Put simply, the Granite 4.0 MoE architecture itself does not constrain context length. It can go as far as your hardware resources allow.

What’s Happening Next

IBM expressed its excitement about continuing pre-training Granite 4.0 Tiny, given such promising results so early in the process. It is also excited to apply its lessons from post-training Granite 3.3, particularly regarding reasoning capabilities and complex instruction following, to the new models.

More information about new developments in the Granite Series was presented at IBM Think 2025 and in the following weeks and months.

You can find Granite 4.0 Tiny Preview on Hugging Face.

This article is based on the IBM Think news announcement authored by Kate Soule, Director, Technical Product Management, Granite, and Dave Bergmann, Senior Writer, AI Models at IBM.
