Production postmorterm: The rookie server's untimely promotion

Today's incident involved a production system failure when one node in the cluster unexpectedly died. That is a scenario RavenDB is designed to handle, and there are well-established (and well-trodden) procedures for recovery.In this case, the failing node didn’t just crash (which a restart would solve), but actually died. This meant that the admin had to provision a new server and add it to the cluster. This process is, again, both well-established and well-trodden. As you can tell from the fact that you are reading this post, something went wrong. This cluster is primarily intended to host a single large database (100+ GB in size). When you add a new node to the cluster and add an existing database to it, we need to sync the state between the existing nodes and the new node.For large databases, that can take a while to complete, which is fine because the new node hasn’t (yet) been promoted to serve users’ requests. It is just slurping all the data until it is in complete sync with the rest of the system. In this case, however… somehow this rookie server got promoted to a full-blown member and started serving user requests.This is not possible. I repeat, it is not possible. This code has been running in production for over a decade. It has been tested, it has been proven, it has been reviewed, and it has been modeled. And yet… It happened. This sucks.This postmortem will dissect this distributed systems bug.Debugging such systems is pretty complex and requires specialized expertise. But this particular bug is surprisingly easy to reason about.Let’s start from the beginning. Here is how the RavenDB cluster decides if a node can be promoted:def scan_nodes(): states = {} for node in self.cluster.nodes: # retrieve the state of the node (remote call) # - may fail if node is down state = self.cluster.get_current_state(node) states[node] = state for database in self.cluster.databases: promotables = database.promotable_nodes() if len(promotables) == 0: # nothing to do continue for promotable in promotables: mentor = promotable.mentor_node() mentor_db_state = states[mentor].databases[database.name] if mentor_db_state.faulted: # ignore mentor in faulty state continue promotable_db_state = states[promotable].databases[database.name] if mentor_db_state.last_etag > promotable_db_state.current_etag: continue # the promotable node is up to date as of the last check cycle, promote self.cluster.promote_node(promotable, database)The overall structure is pretty simple, we ask each of the nodes in the cluster what its current state is. That gives us an inconsistent view of the system (because we ask different nodes at different times).To resolve this, we keep both the last and current values. In the code above, you can see that we go over all the promotable nodes and check the current state of each promotable node compared to the last state (from the previous call) of its mentoring node.The idea is that we can promote a node when its current state is greater than the last state of its mentor (allowing some flexibility for constant writes, etc.). The code is simple, well-tested, and has been widely deployed for a long time. Staring at this code didn’t tell us anything, it looks like it is supposed to work!The problem with distributed systems is that there is also all the code involved that is not there. For example, you can see that there is handling here for when the mentor node has failed. In that case, another part of the code would reassign the promotable node to a new mentor, and we’ll start the cycle again.That was indeed the cause of the problem. Midway through the sync process for the new node, the mentor node failed. That is expected, as I mentioned, and handled. The problem was that there are various levels of failure.For example, it is very clear that a node that is offline isn’t going to respond to a status request, right? What about a node that just restarted? It can respond, and for all intents and purposes, it is up & running - except that it is still loading its databases. Loading a database that exceeds the 100 GB mark can take a while, especially if your disk is taking its time. In that case, what ended up happening was that the status check for the node passed with flying colors, and the status check for the database state returned a loading state.All the other fields in the database status check were set to their default values… I think you can see where this is going, right? The problem was that we got a valid status report from a node and didn’t check the status of the individual database state. Then we checked the progress of the promotable database against the mentor state (which was all set to default values). The promotable node’s current etag was indeed higher than the last etag from the mentor node (since it was the default 0 value), and boom, we have a rookie server being promoted too so

Today's incident involved a production system failure when one node in the cluster unexpectedly died. That is a scenario RavenDB is designed to handle, and there are well-established (and well-trodden) procedures for recovery.

In this case, the failing node didn’t just crash (which a restart would solve), but actually died. This meant that the admin had to provision a new server and add it to the cluster. This process is, again, both well-established and well-trodden.

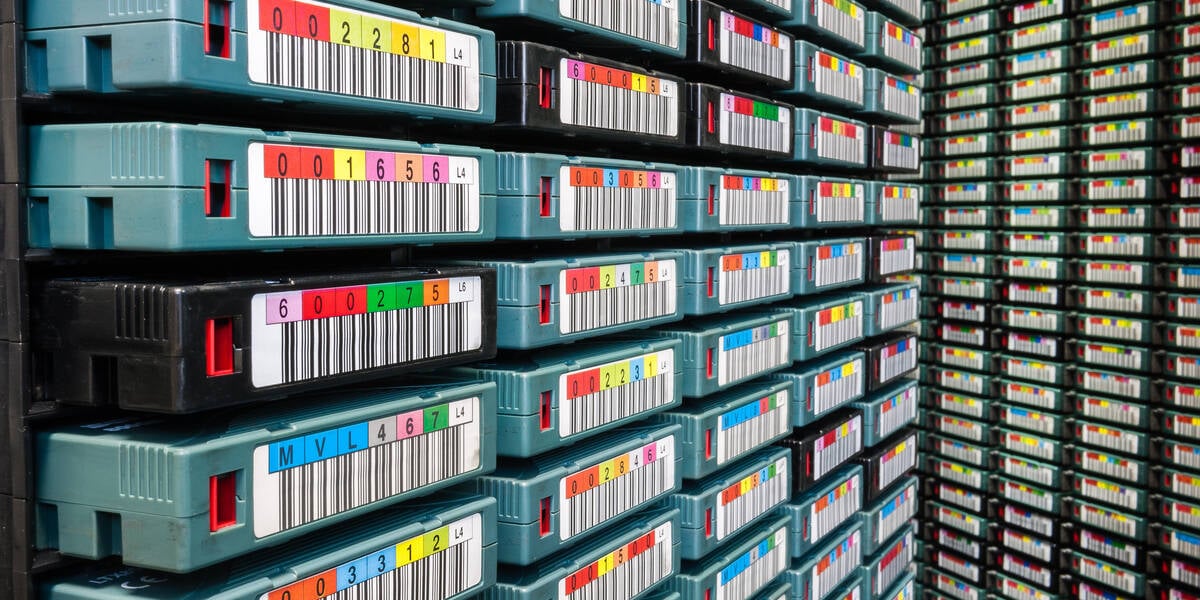

As you can tell from the fact that you are reading this post, something went wrong. This cluster is primarily intended to host a single large database (100+ GB in size). When you add a new node to the cluster and add an existing database to it, we need to sync the state between the existing nodes and the new node.

For large databases, that can take a while to complete, which is fine because the new node hasn’t (yet) been promoted to serve users’ requests. It is just slurping all the data until it is in complete sync with the rest of the system. In this case, however… somehow this rookie server got promoted to a full-blown member and started serving user requests.

This is not possible. I repeat, it is not possible. This code has been running in production for over a decade. It has been tested, it has been proven, it has been reviewed, and it has been modeled. And yet… It happened. This sucks.

This postmortem will dissect this distributed systems bug.Debugging such systems is pretty complex and requires specialized expertise. But this particular bug is surprisingly easy to reason about.

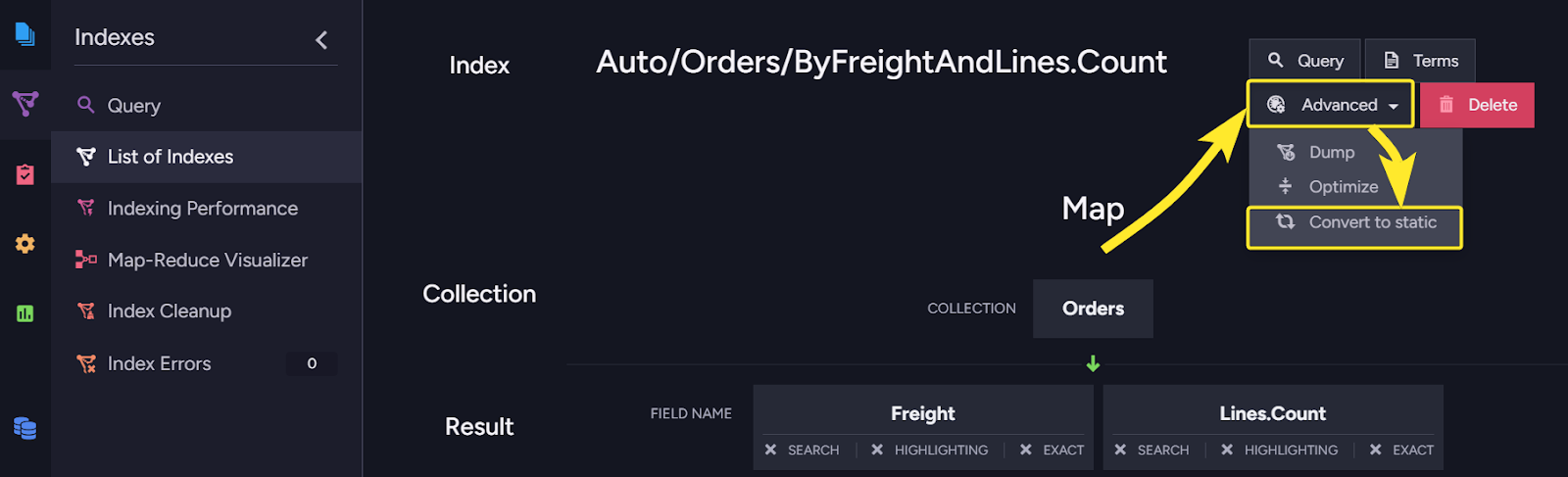

Let’s start from the beginning. Here is how the RavenDB cluster decides if a node can be promoted:

def scan_nodes():

states = {}

for node in self.cluster.nodes:

# retrieve the state of the node (remote call)

# - may fail if node is down

state = self.cluster.get_current_state(node)

states[node] = state

for database in self.cluster.databases:

promotables = database.promotable_nodes()

if len(promotables) == 0: # nothing to do

continue

for promotable in promotables:

mentor = promotable.mentor_node()

mentor_db_state = states[mentor].databases[database.name]

if mentor_db_state.faulted: # ignore mentor in faulty state

continue

promotable_db_state = states[promotable].databases[database.name]

if mentor_db_state.last_etag > promotable_db_state.current_etag:

continue

# the promotable node is up to date as of the last check cycle, promote

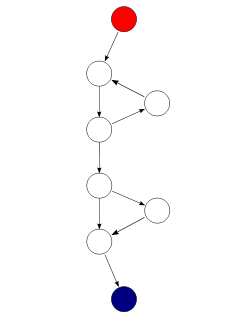

self.cluster.promote_node(promotable, database)The overall structure is pretty simple, we ask each of the nodes in the cluster what its current state is. That gives us an inconsistent view of the system (because we ask different nodes at different times).

To resolve this, we keep both the last and current values. In the code above, you can see that we go over all the promotable nodes and check the current state of each promotable node compared to the last state (from the previous call) of its mentoring node.

The idea is that we can promote a node when its current state is greater than the last state of its mentor (allowing some flexibility for constant writes, etc.).

The code is simple, well-tested, and has been widely deployed for a long time. Staring at this code didn’t tell us anything, it looks like it is supposed to work!

The problem with distributed systems is that there is also all the code involved that is not there. For example, you can see that there is handling here for when the mentor node has failed. In that case, another part of the code would reassign the promotable node to a new mentor, and we’ll start the cycle again.

That was indeed the cause of the problem. Midway through the sync process for the new node, the mentor node failed. That is expected, as I mentioned, and handled. The problem was that there are various levels of failure.

For example, it is very clear that a node that is offline isn’t going to respond to a status request, right?

What about a node that just restarted? It can respond, and for all intents and purposes, it is up & running - except that it is still loading its databases.

Loading a database that exceeds the 100 GB mark can take a while, especially if your disk is taking its time. In that case, what ended up happening was that the status check for the node passed with flying colors, and the status check for the database state returned a loading state.

All the other fields in the database status check were set to their default values…

I think you can see where this is going, right? The problem was that we got a valid status report from a node and didn’t check the status of the individual database state. Then we checked the progress of the promotable database against the mentor state (which was all set to default values).

The promotable node’s current etag was indeed higher than the last etag from the mentor node (since it was the default 0 value), and boom, we have a rookie server being promoted too soon.

The actual fix, by the way, is a single if statement to verify that the state of the database is properly loaded before we check the actual values.

To reproduce this, even after we knew what was going on, was an actual chore, by the way. You need to hit just the right race conditions on two separate machines to get to this state, helped by slow disk, a very large database, and two separate mistimed incidents of server failures.

![Top Features of Vision-Based Workplace Safety Tools [2025]](https://static.wixstatic.com/media/379e66_7e75a4bcefe14e4fbc100abdff83bed3~mv2.jpg/v1/fit/w_1000,h_884,al_c,q_80/file.png?#)

![[The AI Show Episode 152]: ChatGPT Connectors, AI-Human Relationships, New AI Job Data, OpenAI Court-Ordered to Keep ChatGPT Logs & WPP’s Large Marketing Model](https://www.marketingaiinstitute.com/hubfs/ep%20152%20cover.png)

![[DEALS] Microsoft Visual Studio Professional 2022 + The Premium Learn to Code Certification Bundle (97% off) & Other Deals Up To 98% Off](https://www.javacodegeeks.com/wp-content/uploads/2012/12/jcg-logo.jpg)

-0-6-screenshot.png?width=1920&height=1920&fit=bounds&quality=70&format=jpg&auto=webp#)

![Someone is selling a bunch of those rare Essential ‘Gem’ phones for $1,200 [Gallery]](https://i0.wp.com/9to5google.com/wp-content/uploads/sites/4/2019/10/next-essential-phone.jpg?resize=1200%2C628&quality=82&strip=all&ssl=1)

![Apple Shares Teaser Trailer for 'The Lost Bus' Starring Matthew McConaughey [Video]](https://www.iclarified.com/images/news/97582/97582/97582-640.jpg)