Deploy JetBrains Mellum Your Way: Now Available via NVIDIA NIM

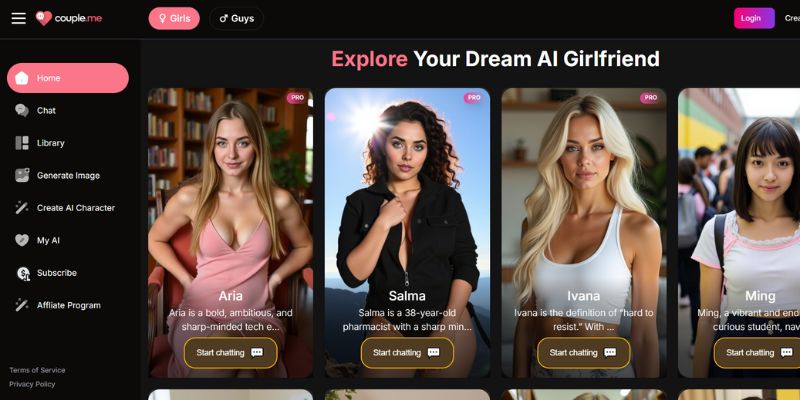

Deploy Mellum as a production-grade LLM inside your own infrastructure – with NVIDIA.

JetBrains Mellum – our open, focused LLM specialized for code completion – is now available to run as a containerized microservice on NVIDIA AI Factories. Using the new NVIDIA universal LLM NIM container, Mellum can be deployed in minutes on any NVIDIA-accelerated infrastructure, whether in the cloud, on-premises, or across hybrid environments.

Mellum is part of the early launch cohort of models that showcase coding capabilities on AI factories. We’re proud to be among the first teams contributing to this new enterprise ecosystem.

But wait – isn’t Mellum already in JetBrains IDEs and on Hugging Face?

Yes – and that’s not changing. Mellum is tightly integrated into our developer tools via JetBrains AI Assistant and is also available on Hugging Face. But some teams have very different requirements, such as:

- Deployment on their own hardware, in environments they control

- Integration into custom pipelines, CI/CD flows, observability platforms

- Fine-tuning or customization for domain-specific use cases

- Security, compliance, and performance guarantees

That’s where the NVIDIA Enterprise AI Factory validated design comes in – it’s a reference platform for building full-stack enterprise AI systems. Mellum, available via NVIDIA NIM, becomes a plug-and-play model block that fits directly into those pipelines. In our testing, we wanted to ensure that, as the Mellum family grows, we can offer JetBrains models on a performant, enterprise-ready inference solution.

What are NVIDIA NIM microservices?

NVIDIA NIM microservices are part of NVIDIA AI Enterprise, and they do something straightforward but invaluable: wrap complex AI model infrastructure into simple, quick-to-deploy containers optimized for inference. With the new universal LLM NIM container designed to work with a broad range of open and specialized LLMs, Mellum can now be deployed securely on NVIDIA-accelerated computing – on-premises, in the cloud, or across hybrid environments.

From a technical standpoint, it means Mellum is now available through a single container interface that supports major backends like NVIDIA TensorRT-LLM, vLLM, and SGLang. This helps teams run inference efficiently and predictably using open-source models they can inspect, adapt, and improve.
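To make the single container interface more concrete, here is a minimal sketch of how a client might request a code completion from a self-hosted Mellum deployment. It assumes the container exposes an OpenAI-style `/v1/completions` HTTP endpoint, as NIM LLM microservices generally do. The base URL, port, and model name below are placeholders chosen for illustration, not values from this announcement, so check your own deployment for the real ones.

```python
import json
import urllib.request

# Assumptions (not from the article): the NIM container is reachable at this
# base URL and serves Mellum under this model name. Adjust both to your setup.
BASE_URL = "http://localhost:8000/v1"
MODEL_NAME = "JetBrains/Mellum-4b-base"


def build_completion_request(prompt: str, max_tokens: int = 64,
                             base_url: str = BASE_URL,
                             model: str = MODEL_NAME) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style text completion request."""
    payload = {
        "model": model,
        "prompt": prompt,          # code prefix the model should continue
        "max_tokens": max_tokens,  # upper bound on generated tokens
        "temperature": 0.0,        # deterministic output suits code completion
    }
    return urllib.request.Request(
        url=f"{base_url}/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def complete(prompt: str, **kwargs) -> str:
    """Send the request and return the text of the first completion."""
    request = build_completion_request(prompt, **kwargs)
    with urllib.request.urlopen(request, timeout=30) as response:
        body = json.load(response)
    return body["choices"][0]["text"]
```

With a container running, calling `complete("def fibonacci(n):\n")` would return the model's continuation of that function. Because the request is plain HTTP and JSON, the same call can be made from a CI job or an internal tool without a dedicated SDK.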

We’re particularly excited about how this ecosystem can help enterprise users evolve from basic chatbot integrations to deeply integrated AI assistants embedded across software engineering workflows.

Do I still need JetBrains AI Assistant if Mellum runs on NIM?

Some users ask:

“Is the open-source Mellum (via NIM) the same as what’s in JetBrains AI Assistant?”

Not exactly.

The open-source Mellum, now deployable via NIM, is great for custom, self-hosted use cases. But JetBrains AI Assistant uses enhanced proprietary versions of Mellum, with deeper IDE integration and a more polished developer experience.

In short:

- Use NIM and Mellum for flexible, custom deployment.

- Use AI Assistant for the best out-of-the-box experience inside JetBrains tools.

Try it now

Mellum deployment is now just one button away, so check it out here.
