The Pentagon is gutting the team that tests AI and weapons systems

The Trump administration’s chainsaw approach to federal spending lives on, even as Elon Musk turns on the president. On May 28, Secretary of Defense Pete Hegseth announced he’d be gutting a key office at the Department of Defense responsible for testing and evaluating the safety of weapons and AI systems.

As part of a string of moves aimed at “reducing bloated bureaucracy and wasteful spending in favor of increased lethality,” Hegseth cut the size of the Office of the Director of Operational Test and Evaluation in half. The group was established in the 1980s—following orders from Congress—after criticisms that the Pentagon was fielding weapons and systems that didn’t perform as safely or effectively as advertised. Hegseth is reducing the agency’s staff to about 45, down from 94, and firing and replacing its director. He gave the office just seven days to implement the changes.

It is a significant overhaul of an office that in 40 years has never before been placed so squarely on the chopping block. Here’s how today’s defense tech companies, which have fostered close connections to the Trump administration, stand to gain, and why safety testing might suffer as a result.

The Operational Test and Evaluation office is “the last gate before a technology gets to the field,” says Missy Cummings, a former fighter pilot for the US Navy who is now a professor of engineering and computer science at George Mason University. Though the military can do small experiments with new systems without running them by the office, it has to test anything that gets fielded at scale.

“In a bipartisan way—up until now—everybody has seen it’s working to help reduce waste, fraud, and abuse,” she says. That’s because it provides an independent check on companies’ and contractors’ claims about how well their technology works. It also aims to expose the systems to more rigorous safety testing.

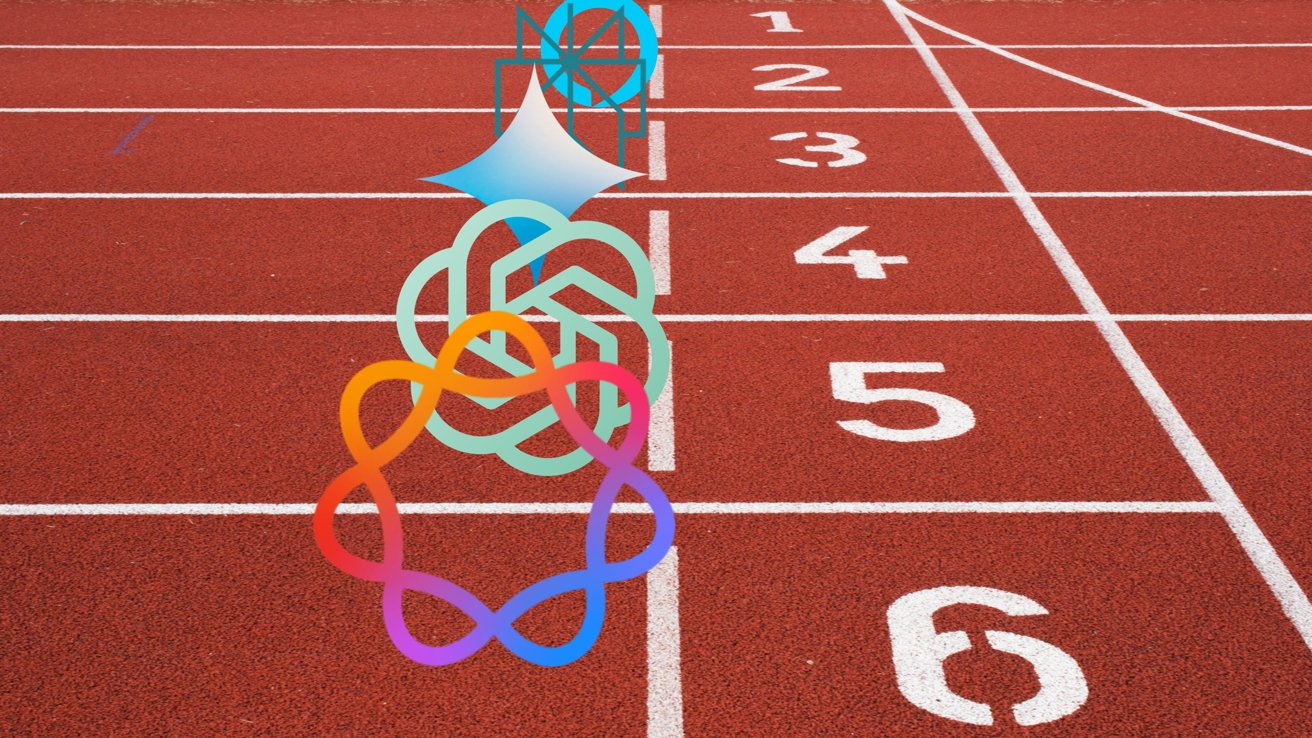

The gutting comes at a particularly pivotal time for AI and military adoption: The Pentagon is experimenting with putting AI into everything, mainstream companies like OpenAI are now more comfortable working with the military, and defense giants like Anduril are winning big contracts to launch AI systems (last Thursday, Anduril announced a whopping $2.5 billion funding round, doubling its valuation to over $30 billion).

Hegseth claims his cuts will “make testing and fielding weapons more efficient,” saving $300 million. But Cummings is concerned that they are paving the way for faster adoption while increasing the chances that new systems won’t be as safe or effective as promised. “The firings in DOTE send a clear message that all perceived obstacles for companies favored by Trump are going to be removed,” she says.

Anduril and Anthropic, which have launched AI applications for military use, did not respond to my questions about whether they pushed for or approve of the cuts. A representative for OpenAI said that the company was not involved in lobbying for the restructuring.

“The cuts make me nervous,” says Mark Cancian, a senior advisor at the Center for Strategic and International Studies who previously worked at the Pentagon in collaboration with the testing office. “It’s not that we’ll go from effective to ineffective, but you might not catch some of the problems that would surface in combat without this testing step.”

It’s hard to say precisely how the cuts will affect the office’s ability to test systems, and Cancian admits that those responsible for getting new technologies out onto the battlefield sometimes complain that the testing can really slow down adoption. But still, he says, the office frequently uncovers errors that weren’t previously caught.

It’s an especially important step, Cancian says, whenever the military is adopting a new type of technology like generative AI. Systems that might perform well in a lab setting almost always encounter new challenges in more realistic scenarios, and the Operational Test and Evaluation group is where that rubber meets the road.

So what to make of all this? It’s true that the military was experimenting with artificial intelligence long before the current AI boom, particularly with computer vision for drone feeds, and defense tech companies have been winning big contracts for this push across multiple presidential administrations. But this era is different. The Pentagon is announcing ambitious pilots specifically for large language models, a relatively nascent technology that by its very nature produces hallucinations and errors, and it appears eager to put much-hyped AI into everything. The key independent group dedicated to evaluating the accuracy of these new and complex systems now has only half the staff to do it. I’m not sure that’s a win for anyone.

This story originally appeared in The Algorithm, our weekly newsletter on AI.
