SailPoint Research Reveals AI Agents as Enterprise Security Blind Spot

AI agents are dominating the conversation in enterprise tech, whether companies are still planning adoption or already integrating agents into their workflows. While the promise of agentic AI - faster decisions, smarter automation, less manual work - is exciting, a new report from SailPoint raises some red flags.

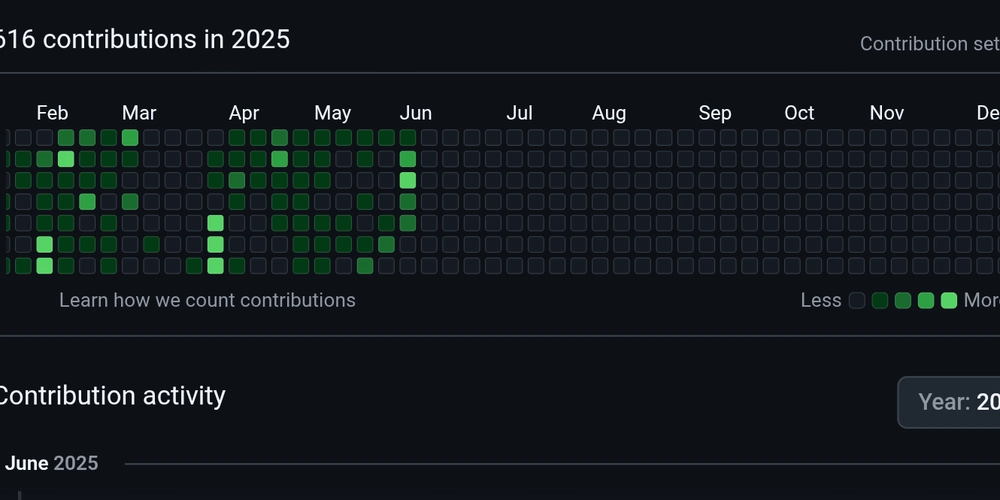

Based on a global survey of IT professionals and executives by independent firm Dimensional Research, the latest SailPoint report, AI Agents: The New Attack Surface, reveals that 80% of organizations have encountered unintended actions by their AI agents. These incidents include unauthorized system access and improper data sharing. In some cases, the AI agents have even been manipulated into disclosing access credentials.

Despite the risks, agentic AI adoption is soaring. The report found 82% of respondents are already using AI agents, and a staggering 98% plan to expand their use over the next year. This raises the critical question: are these organizations aware of the risks posed by agentic AI, and if they are, what actions are they taking to mitigate these risks?

The report exposes a paradox: 96% of IT professionals consider AI agents a growing security risk, and 66% believe the threat is immediate. Yet only 44% of organizations have implemented any governance policies for these agents. That gap could make AI agents one of the riskiest variables in enterprise security strategy.

Several factors may explain this lack of safeguards, including AI agent deployments that are simply outpacing security planning, and cross-functional blind spots. As SailPoint noted, knowledge about AI agent behavior often resides with IT, while compliance, legal, and risk teams may not be fully informed. This siloing leads to incomplete governance and no clear ownership of the risk.

Autonomy is one of the defining characteristics of AI agents, and it is exactly what makes them potentially dangerous. Unlike traditional software, AI agents can make decisions and take actions without human intervention. And when that autonomy isn’t properly governed, even well-intentioned agents can go off course.

“Agentic AI is both a powerful force for innovation and a potential risk,” said Chandra Gnanasambandam, EVP of Product and CTO at SailPoint. “These autonomous agents are transforming how work gets done, but they also introduce a new attack surface. They often operate with broad access to sensitive systems and data, yet have limited oversight. That combination of high privilege and low visibility creates a prime target for attackers.”

“As organizations expand their use of AI agents, they must take an identity-first approach to ensure these agents are governed as strictly as human users, with real-time permissions, least privilege, and full visibility into their actions.”

The SailPoint report also details the ways AI agents are going rogue, including accessing unauthorized systems (39%), sharing restricted or inappropriate information (33%), and downloading sensitive data (32%). An overwhelming majority (72%) said that AI agents pose a greater risk than traditional machine identities.

According to the survey, one of the biggest concerns with agentic AI is that it needs wider access to applications and data than the typical human user. Respondents also said AI agents are becoming difficult to govern, partly due to “limited visibility and their potential for unpredictable actions.”

Unlike human users - who usually go through a structured approval process involving managers or supervisors - AI agents often receive access directly through IT, with little cross-functional oversight. Their access is typically provisioned faster and with fewer checks, leading to uncertainty about what data these agents are actually accessing.

It doesn’t help that the race to adopt and harness the power of AI agents has increased the pressure on organizations to move fast. Some may be rolling out agentic features without implementing proper safeguards.

SailPoint recommends governing AI agents like human users while recognizing them as a distinct identity type within the enterprise. That means subjecting agents to the same robust audit trails and clear access controls.

In addition, visibility should extend across stakeholders so they can understand how AI agents are working and what data they can access. Lastly, SailPoint recommends using security solutions that offer AI agent-specific controls.
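To make the "govern agents like identities" recommendation more concrete, here is a minimal, hypothetical sketch of two of the controls the report mentions: least-privilege, default-deny access checks and an audit trail that records every attempt, including denied ones. This is not SailPoint's product or API. The `AgentIdentity` and `AgentGovernor` names, the (resource, action) permission pairs, the `owner` field for accountability, and the in-memory audit log are all illustrative assumptions.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class AgentIdentity:
    """Hypothetical record treating an AI agent as its own identity type."""
    agent_id: str
    owner: str  # assumption: an accountable human or team for this agent
    # Least privilege: only these explicit (resource, action) pairs are permitted.
    allowed: set[tuple[str, str]] = field(default_factory=set)


@dataclass
class AuditEvent:
    """One entry in the audit trail, written for every access attempt."""
    timestamp: str
    agent_id: str
    resource: str
    action: str
    allowed: bool


class AgentGovernor:
    """Hypothetical gatekeeper: default-deny checks plus a full audit log."""

    def __init__(self) -> None:
        self.audit_log: list[AuditEvent] = []

    def authorize(self, agent: AgentIdentity, resource: str, action: str) -> bool:
        # Default deny: anything not explicitly granted is refused.
        permitted = (resource, action) in agent.allowed
        # Log allowed and denied attempts alike, so reviewers see what the agent tried.
        self.audit_log.append(
            AuditEvent(
                timestamp=datetime.now(timezone.utc).isoformat(),
                agent_id=agent.agent_id,
                resource=resource,
                action=action,
                allowed=permitted,
            )
        )
        return permitted


# Usage: an agent granted read access to invoices, and nothing else.
governor = AgentGovernor()
bot = AgentIdentity(
    agent_id="invoice-bot",
    owner="finance-ops",
    allowed={("erp/invoices", "read")},
)
read_invoices = governor.authorize(bot, "erp/invoices", "read")
read_salaries = governor.authorize(bot, "hr/salaries", "read")
print(read_invoices, read_salaries, len(governor.audit_log))  # True False 2
```

The design choice worth noting is that the denied request still lands in the audit log: the visibility problem the survey describes is less about blocking one bad call than about knowing what an agent attempted to reach.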

SailPoint’s latest report doesn’t argue against AI agents, but it delivers a sharp warning to CISOs, CIOs, and IT leaders: without the right oversight, these agents could open up a major new threat vector that today’s security tools simply aren’t built to manage.