Game Theory Reveals AI Still Struggles with Social Intelligence

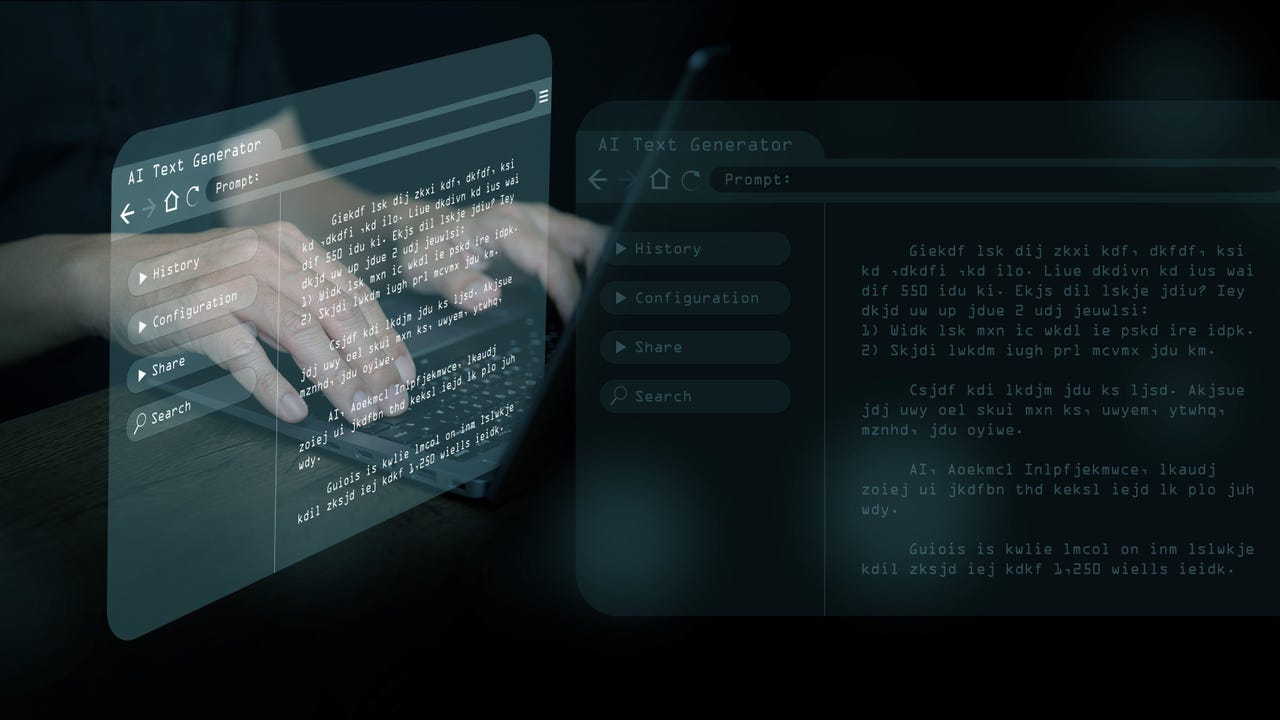

Artificial intelligence has evolved beyond crunching data and answering questions. It has stepped into roles that involve developing strategies, adapting to dynamic environments, interacting with other agents, and making business-critical decisions.

This growing strategic role brings AI into the domain of game theory, the study of how agents make choices in competitive and cooperative settings. In this context, a new study published in Nature Human Behaviour examines the social capabilities of large language models (LLMs) when they play repeated social games.

The findings reveal surprising behavioral patterns, showing where models like GPT-4 adapt well to interactive scenarios, and where they still fall short in responding to other agents over time. There is no doubt that today’s AI is smart, but it still has much to learn about social intelligence.

Researchers from Helmholtz Munich, the Max Planck Institute for Biological Cybernetics, and the University of Tübingen applied behavioral game theory to some of today’s leading AI models, including GPT-4, Claude 2, and Llama 2.

They put the models through a series of classic two-player games designed to test key factors such as fairness, trust, and cooperation. The aim was to understand how these systems respond in repeated interactions — offering a window into their behavior in more socially complex settings.

The study revealed that the LLMs generally performed well in games that required logical reasoning but often struggled in tasks that required teamwork and coordination.

"In some cases, the AI seemed almost too rational for its own good," said Dr. Eric Schulz, senior author of the study. "It could spot a threat or a selfish move instantly and respond with retaliation, but it struggled to see the bigger picture of trust, cooperation, and compromise."

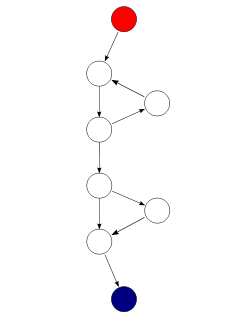

One of the games they used was an iterated version of the Prisoner’s Dilemma, a classic game theory scenario in which two players decide whether to cooperate or act in their own self-interest. If both cooperate, each gets a decent outcome. But if one betrays while the other cooperates, the betrayer gains more and the other loses out. If both betray, both end up worse off than if they had cooperated. Over multiple rounds, the game becomes a test of trust and strategy.
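To make the dilemma concrete, here is a minimal Python sketch using the textbook payoff values T = 5, R = 3, P = 1, S = 0 (temptation, reward, punishment, and the "sucker's" payoff). These numbers are an assumption for illustration; the article does not give the payoffs used in the study. The sketch checks the two standard conditions for an iterated Prisoner's Dilemma and shows that defecting is each player's best response whatever the other player does, even though mutual cooperation pays both more than mutual defection.

```python
# Textbook payoff values (an assumption; not the study's actual numbers).
T, R, P, S = 5, 3, 1, 0  # temptation, reward, punishment, sucker's payoff

# Row player's payoff for (my_move, their_move); "C" = cooperate, "D" = defect.
PAYOFF = {("C", "C"): R, ("C", "D"): S, ("D", "C"): T, ("D", "D"): P}


def is_prisoners_dilemma(t: int, r: int, p: int, s: int) -> bool:
    """Check the standard payoff ordering plus the usual extra condition for repeated play."""
    one_shot = t > r > p > s    # betrayal tempts, yet mutual defection beats being exploited
    repeated = 2 * r > t + s    # steady cooperation beats taking turns exploiting each other
    return one_shot and repeated


def best_response(their_move: str) -> str:
    """Return the move that maximizes my payoff against a fixed opponent move."""
    return max(("C", "D"), key=lambda mine: PAYOFF[(mine, their_move)])


if __name__ == "__main__":
    print(is_prisoners_dilemma(T, R, P, S))        # True
    print(best_response("C"), best_response("D"))  # D D
    print(PAYOFF[("C", "C")], PAYOFF[("D", "D")])  # 3 1
```

Because defection is the best response either way, two purely self-interested players end up with 1 point each instead of 3; repetition is what gives cooperation a chance to pay off.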

According to the researchers, the AI models performed surprisingly well in this game, particularly GPT-4. However, they tended to choose “selfish success” over “mutual benefit”. The models often switched to defection after just one act of betrayal from their opponent and stuck with that strategy even when cooperation could have led to better outcomes for both sides. LLMs, it appears, are not as forgiving or flexible as humans tend to be.
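Cooperating until the first betrayal and then defecting for good is what game theorists call a grim trigger strategy. The hedged Python sketch below contrasts it with a more forgiving rule, tit-for-two-tats (retaliate only after two defections in a row), against a hypothetical opponent that plays tit-for-tat but slips and defects once in the third round. The payoffs are assumed textbook values (5, 3, 1, and 0 points), and the opponent, the forgiving rule, and the 10-round length are illustrative choices, not the study's setup.

```python
from typing import Callable, List, Tuple

# Assumed textbook payoffs: (row player's points, column player's points).
PAYOFFS = {
    ("C", "C"): (3, 3),
    ("C", "D"): (0, 5),
    ("D", "C"): (5, 0),
    ("D", "D"): (1, 1),
}

# A strategy sees its own past moves and the opponent's past moves.
Strategy = Callable[[List[str], List[str]], str]


def grim_trigger(mine: List[str], theirs: List[str]) -> str:
    """Cooperate until the opponent defects once, then defect for the rest of the game."""
    return "D" if "D" in theirs else "C"


def tit_for_two_tats(mine: List[str], theirs: List[str]) -> str:
    """Forgiving rule: retaliate only after two consecutive defections."""
    return "D" if theirs[-2:] == ["D", "D"] else "C"


def slipping_tit_for_tat(mine: List[str], theirs: List[str]) -> str:
    """Hypothetical opponent: copies the agent's last move, except one defection in round 3."""
    if len(mine) == 2:  # two rounds played so far, so this is round 3
        return "D"
    return theirs[-1] if theirs else "C"


def play(agent: Strategy, opponent: Strategy, rounds: int = 10) -> Tuple[int, int]:
    agent_moves: List[str] = []
    opp_moves: List[str] = []
    agent_score = opp_score = 0
    for _ in range(rounds):
        # Both players move simultaneously, based only on earlier rounds.
        a = agent(agent_moves, opp_moves)
        o = opponent(opp_moves, agent_moves)
        pa, po = PAYOFFS[(a, o)]
        agent_score += pa
        opp_score += po
        agent_moves.append(a)
        opp_moves.append(o)
    return agent_score, opp_score


if __name__ == "__main__":
    print(play(grim_trigger, slipping_tit_for_tat))      # (17, 17)
    print(play(tit_for_two_tats, slipping_tit_for_tat))  # (27, 32)
```

Over 10 rounds, the grim trigger player locks both sides into mutual defection and scores 17, while the forgiving player absorbs the single slip, restores cooperation, and scores 27.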

The researchers also put the AI models through another classic game theory task called Battle of the Sexes. This game involves two players who want to coordinate and end up at the same place, but each prefers a different option. The classic example is a couple choosing between a football game and the ballet. Both would rather be together than go alone, but each wants to attend their preferred event. What makes this game tricky isn’t conflict, but compromise.
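One way to see why compromise is the hard part is to list the game's pure-strategy Nash equilibria, the outcomes where neither player gains by switching alone. The sketch assumes illustrative payoffs: 10 points for the player whose favorite event the couple attends, 7 for the other, and 0 for both if they end up in different places. It finds two equilibria, one favoring each player, so neither outcome is a natural choice without some convention for sharing.

```python
from itertools import product
from typing import List, Tuple

OPTIONS = ("football", "ballet")


def payoff(p1: str, p2: str) -> Tuple[int, int]:
    """Assumed payoffs: player 1 prefers football, player 2 prefers ballet."""
    if p1 != p2:
        return (0, 0)  # miscoordination: both go alone
    return (10, 7) if p1 == "football" else (7, 10)


def pure_nash_equilibria() -> List[Tuple[str, str]]:
    """Return outcomes where no player can do better by changing only their own choice."""
    equilibria = []
    for a, b in product(OPTIONS, repeat=2):
        u1, u2 = payoff(a, b)
        p1_stays = all(payoff(alt, b)[0] <= u1 for alt in OPTIONS)
        p2_stays = all(payoff(a, alt)[1] <= u2 for alt in OPTIONS)
        if p1_stays and p2_stays:
            equilibria.append((a, b))
    return equilibria


if __name__ == "__main__":
    print(pure_nash_equilibria())  # [('football', 'football'), ('ballet', 'ballet')]
```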

The AI models repeatedly chose their own preferred option. They failed to adapt even when their partner followed a predictable turn-taking strategy. This breakdown in coordination highlights the models’ lack of social reasoning.
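The sketch below plays 10 rounds against a hypothetical partner that takes turns between ballet and football, assuming illustrative payoffs of 10 points for the player whose favorite event is chosen, 7 for the other, and 0 for both on miscoordination. A stubborn agent that always picks its favorite coordinates on only half the rounds; a simple tracker that notices the alternation and follows it coordinates from the third round onward. Both agents are illustrative stand-ins, not models of how the LLMs actually made their choices.

```python
from typing import Callable, List, Tuple


def payoff(agent_choice: str, partner_choice: str) -> Tuple[int, int]:
    """Assumed payoffs (agent points, partner points); the agent prefers football."""
    if agent_choice != partner_choice:
        return (0, 0)  # miscoordination: nobody enjoys the evening
    return (10, 7) if agent_choice == "football" else (7, 10)


def alternating_partner(round_index: int) -> str:
    """Hypothetical partner that takes turns, starting with its own favorite."""
    return "ballet" if round_index % 2 == 0 else "football"


def stubborn(partner_history: List[str]) -> str:
    """Always pick the agent's own favorite, ignoring the partner."""
    return "football"


def turn_tracker(partner_history: List[str]) -> str:
    """Follow the partner's alternation once a switch has been observed."""
    if not partner_history:
        return "football"
    switched = any(a != b for a, b in zip(partner_history, partner_history[1:]))
    last = partner_history[-1]
    if switched:
        # Predict the partner will switch again, and go where they will be.
        return "ballet" if last == "football" else "football"
    return last  # no pattern yet: match the partner's last choice


def play(agent: Callable[[List[str]], str], rounds: int = 10) -> Tuple[int, int]:
    history: List[str] = []
    agent_total = partner_total = 0
    for i in range(rounds):
        choice = agent(history)
        partner_choice = alternating_partner(i)
        a_pts, p_pts = payoff(choice, partner_choice)
        agent_total += a_pts
        partner_total += p_pts
        history.append(partner_choice)
    return agent_total, partner_total


if __name__ == "__main__":
    print(play(stubborn))      # (50, 35)
    print(play(turn_tracker))  # (68, 68)
```

The stubborn agent scores 50 and leaves its partner with 35; the tracker scores 68, and so does its partner. Following the turn-taking pattern helps both sides, which is the adjustment the models failed to make.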

The researchers then tried to make the models more cooperative and socially aware by prompting them to consider the other player’s perspective as part of their response. They used a technique called Social Chain-of-Thought (SCoT) prompting to guide the models through their decision-making process. With this method, the models performed significantly better, especially in games that involved compromise and coordination.
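The article does not reproduce the study's prompts, so the template below is entirely hypothetical. It only illustrates the general idea described here: before choosing a move, the model is asked to reason about what the other player is likely to do. The function name `build_scot_prompt` and all of the prompt wording are illustrative assumptions, not the researchers' actual SCoT prompt.

```python
from typing import List, Tuple


def build_scot_prompt(rules: str, history: List[Tuple[str, str]], options: Tuple[str, str]) -> str:
    """Hypothetical 'social' chain-of-thought prompt: predict the other player first, then act."""
    lines = [rules, "", "Rounds so far:"]
    if not history:
        lines.append("None yet; this is the first round.")
    for i, (mine, theirs) in enumerate(history, start=1):
        lines.append(f"Round {i}: you chose {mine}; the other player chose {theirs}.")
    lines += [
        "",
        # The 'social' step: reason about the other player's perspective before deciding.
        "Step 1: Consider the other player's perspective. Based on the rounds so far, "
        "what are they likely to choose next, and why?",
        # Only then ask for the model's own move, conditioned on that prediction.
        f"Step 2: Given your prediction, choose either {options[0]} or {options[1]} for this round.",
    ]
    return "\n".join(lines)


if __name__ == "__main__":
    rules = ("You and another player each pick football or ballet. If you pick the same event, "
             "you get 10 points for football or 7 for ballet; if you pick different events, you both get 0.")
    print(build_scot_prompt(rules, [("football", "ballet"), ("football", "football")], ("football", "ballet")))
```

The resulting string would be sent to whichever model is being tested; the design choice that matters is the ordering, with the prediction step placed before the decision.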

To further test these findings and the effectiveness of this prompting approach, the researchers paired human participants with either base AI models or SCoT-enhanced versions. SCoT prompting not only improved coordination in games like Battle of the Sexes but also made the AI feel more human to the participants.

Those playing with the SCoT-enhanced AI models were more likely to cooperate. They were also more likely to believe they were interacting with another person. This highlights how a simple change in how the model reasons about others can dramatically shape both performance and perception.

"Once we nudged the model to reason socially, it started acting in ways that felt much more human," said Elif Akata, first author of the study. "And interestingly, human participants often couldn't tell they were playing with an AI."

The researchers believe that although their study focused on game theory, the results point to something much broader: these insights could shape how future AI systems are designed to interact more effectively and responsibly with humans in real-world settings.

"An AI that can encourage a patient to stay on their medication, support someone through anxiety, or guide a conversation about difficult choices," said Elif Akata. "That's where this kind of research is headed."

As AI models become more deeply integrated into everyday tools, their ability to navigate social situations will matter just as much as their technical capabilities. They still have a lot of catching up to do, but with the right prompting or training, they can perform significantly better.
