Gemini 2.5 Pro vs OpenAI’s GPT-4.1: A Complete Comparison

The competition between leading AI developers has intensified with Google’s launch of Gemini 2.5 Pro and OpenAI’s introduction of GPT-4.1. These cutting-edge models promise significant advancements in areas ranging from coding and long-context comprehension to cost-efficiency and enterprise readiness. This in-depth comparison explores the latest features, benchmark results, and practical considerations for selecting the right model for your needs.

What’s new in Gemini 2.5 Pro?

Release and integration

Google rolled out the Gemini 2.5 Pro Preview 06-05 update in early June 2025, branding it their first “long-term stable release” and making it available via AI Studio, Vertex AI, and the Gemini app for Pro and Ultra subscribers.

Enhanced coding and Deep Think

One standout feature is “configurable thinking budgets,” which let you control how much compute the model spends on each task, a useful lever for balancing cost and latency in your apps. Google also introduced Deep Think, an advanced reasoning mode that evaluates multiple hypotheses before answering, boosting performance on complex reasoning challenges.
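As a rough illustration of what a thinking budget looks like in a request, the sketch below builds a JSON body for a Gemini-style `generateContent` call. The field names (`generationConfig`, `thinkingConfig`, `thinkingBudget`) reflect my understanding of the Gemini REST API and should be checked against Google's current documentation; the helper name `build_gemini_request` is hypothetical, and nothing is sent over the network.

```python
import json


def build_gemini_request(prompt: str, thinking_budget: int) -> dict:
    """Build a request body that caps the tokens the model may spend on thinking.

    Field names are an assumption based on the Gemini REST API; verify before use.
    """
    if thinking_budget < 0:
        raise ValueError("thinking_budget must be non-negative")
    return {
        "contents": [{"role": "user", "parts": [{"text": prompt}]}],
        "generationConfig": {
            "thinkingConfig": {"thinkingBudget": thinking_budget},
        },
    }


if __name__ == "__main__":
    # A small budget for a simple task, a larger one for a harder task.
    quick = build_gemini_request("Rename this variable.", thinking_budget=128)
    deep = build_gemini_request("Find the race condition in this code.", thinking_budget=8192)
    print(json.dumps(quick, indent=2))
    print(json.dumps(deep, indent=2))
```

Keeping the budget as an explicit parameter makes it easy to set per task type, which is where the cost and speed trade-off actually shows up.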

Multimodal reasoning and long-form coherence

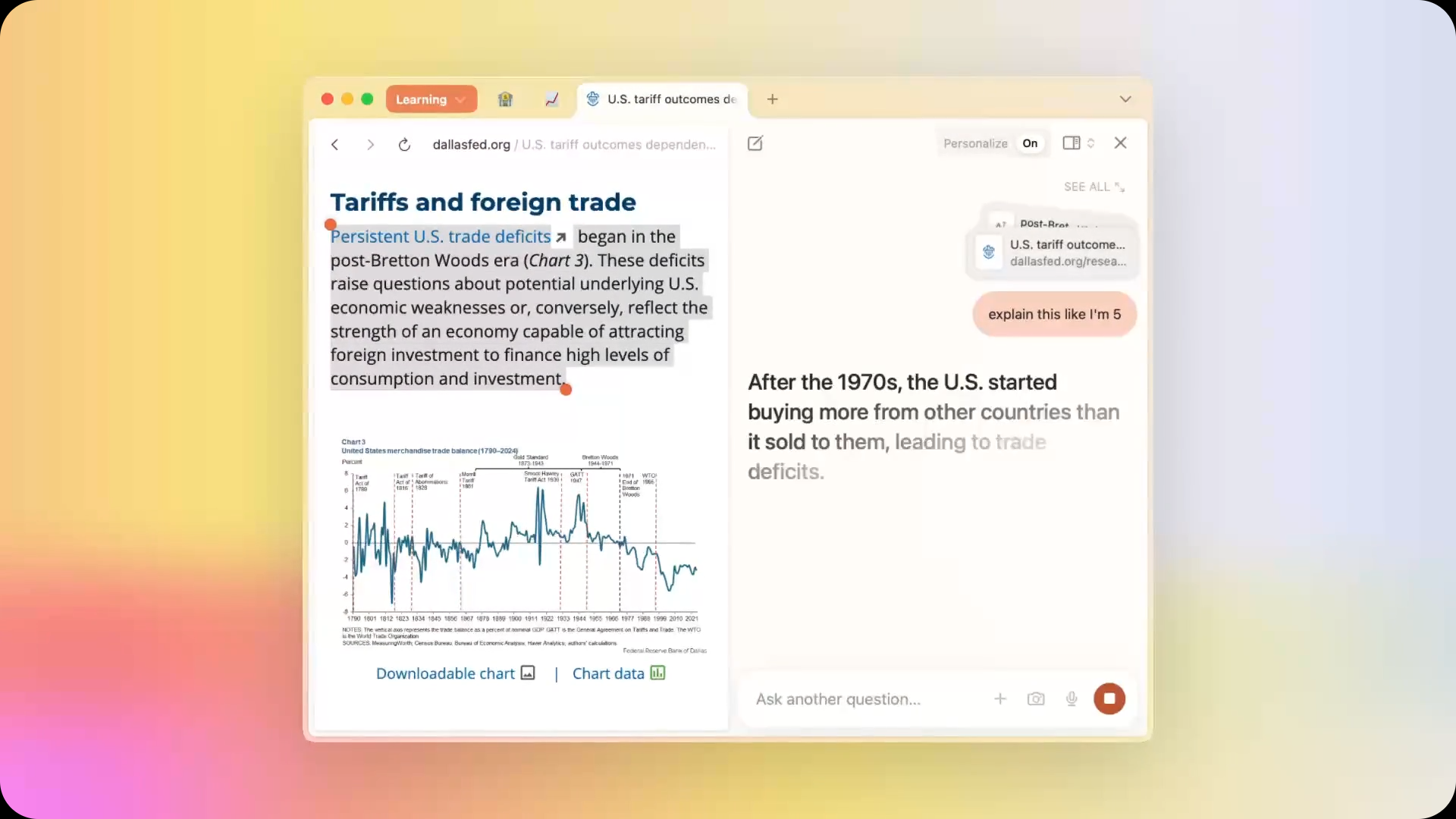

Beyond raw code, Gemini 2.5 Pro strengthens multimodal understanding, achieving 84.8 percent on the Video-MME benchmark and 93 percent on long-context MRCR at 128 K tokens. The model also addresses previous weaknesses in long-form writing—improving coherence, formatting, and factual consistency—making it a compelling choice for tasks such as document drafting or conversational agents requiring sustained, context-aware dialogues.

What’s new in GPT-4.1?

API launch and availability

On April 14, 2025, OpenAI officially introduced GPT-4.1, GPT-4.1 mini, and GPT-4.1 nano in its API and announced that the GPT-4.5 preview would be deprecated three months later (July 14, 2025) to give developers time to transition. All paid ChatGPT tiers now include GPT-4.1, while GPT-4.1 mini replaced GPT-4o mini as the default even for free users.

Performance gains

GPT-4.1 shows major improvements over its predecessor:

- Coding: Scored 54.6 percent on SWE-bench Verified, a 21.4 point jump over GPT-4o.
- Instruction following: Achieved 38.3 percent on Scale’s MultiChallenge, up 10.5 points.

Token window and efficiency

Perhaps the most exciting upgrade is the one-million token context window, compared to 128K in GPT-4o. This lets you feed massive documents at once, something I’ve been eager to try for analyzing long technical manuals! Plus, GPT-4.1 often responds faster and at lower cost, thanks to optimized inference pipelines.
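To get a feel for what a one-million token window means for a given document, here is a minimal sketch that estimates whether a text fits. It assumes roughly four characters per token, which is only a rule of thumb for English text and not the model's real tokenizer; the function names are hypothetical.

```python
import math

# Rough heuristic (assumption): about 4 characters per token for English text.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Estimate the token count of a text from its character length."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_in_context(text: str, context_window: int, reserved_for_output: int = 0) -> bool:
    """Check whether the estimated prompt plus reserved output tokens fit in the window."""
    return estimate_tokens(text) + reserved_for_output <= context_window


if __name__ == "__main__":
    manual = "x" * 2_000_000  # stand-in for a long technical manual
    print(estimate_tokens(manual))
    print(fits_in_context(manual, 1_000_000, reserved_for_output=32_768))
    print(fits_in_context(manual, 128_000, reserved_for_output=32_768))
```

For a real decision, count tokens with the provider's tokenizer, since the ratio varies by language and content type.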

How do they compare in key benchmarks?

Coding and programming

- Gemini 2.5 Pro leads on the Aider Polyglot coding benchmark, outperforming rivals with its latest updates.
- GPT-4.1 dominates SWE-bench Verified and Codeforces problems, with clear margins over both GPT-4o and Gemini in some user tests.

Instruction following and reasoning

- Deep Think in Gemini adds depth by evaluating multiple reasoning chains, which can help in complex Q&A scenarios.
- GPT-4.1 shows stronger performance on standardized multi-step reasoning tests like ARC and GPQA.

Gemini 2.5 Pro Preview 06-05 Thinking recently outperformed OpenAI’s o3 and Anthropic’s Claude Opus 4 on multiple reasoning and scientific benchmarks, and it tops the WebDev Arena and LMArena leaderboards. The update also demonstrated superior performance in advanced scientific question answering, showcasing Google’s investment in domain-specific reasoning capabilities.

OpenAI has not published head-to-head comparisons for GPT-4.1 on those exact leaderboards, but its internal benchmarks indicate that the model outperforms GPT-4o across reasoning, instruction following, and coding tests by substantial margins. Independent tests also show marked gains in long-context understanding and multi-turn coherence.

Context length

Both models now support very long contexts (hundreds of thousands to a million tokens), but GPT-4.1 currently has the edge with its formal million-token window.

Multimodality

Gemini 2.5 Pro retains Gemini 2.5 Flash’s strong multimodal core (processing text, images, and audio) and adds Native Audio Output, generating human-like speech directly from the API. Developers can integrate audio responses into applications without third-party text-to-speech services. Combined with Deep Think, this makes Gemini 2.5 Pro suitable for interactive voice assistants that require sophisticated reasoning.

GPT-4.1 continues OpenAI’s multimodal trajectory, handling text and images with fine-tuned precision inherited from GPT-4o. While it does not yet offer native audio generation, it integrates seamlessly with existing OpenAI audio services (Whisper and TTS) for multimodal applications. Moreover, GPT-4.1 mini and nano variants enable deployment in resource-constrained environments, making multimodal AI more accessible to edge devices and mobile apps.

Which model fits your use case?

Developers and coding

If you’re building interactive web apps or automated coding agents, Gemini 2.5 Pro’s configurable budgets and tight Google Cloud integration (AI Studio/Vertex) are a boon. But if raw coding accuracy and access via ChatGPT are your priority, GPT-4.1’s SWE-bench leadership makes it my go-to.

Long-form writing and conversation

For extended chat sessions or drafting long reports, I find GPT-4.1’s stable million-token context window highly reliable. However, if you value more natural audio responses and richer multimodal exchanges, Gemini still leads with native voice and image understanding.

Enterprise integration

Both platforms offer enterprise features: Gemini via Google Workspace plugins and Scheduled Actions, and GPT-4.1 via API with Direct Preference Optimization (DPO) for fine-tuning to your team’s style. You can’t go wrong either way, but your choice may hinge on whether you’re already committed to Google Cloud or Azure/OpenAI infrastructure.
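To make the DPO idea concrete, the sketch below writes preference pairs (a prompt, a preferred answer, and a rejected answer) as JSONL. The field names `input`, `preferred_output`, and `non_preferred_output` follow my recollection of OpenAI's preference fine-tuning format and are an assumption to verify against the current fine-tuning guide; the helper names and the sample data are hypothetical.

```python
import json


def make_preference_record(prompt: str, preferred: str, rejected: str) -> dict:
    """Build one preference pair: the same prompt with a preferred and a rejected reply.

    Field names are an assumption about OpenAI's preference fine-tuning format.
    """
    return {
        "input": {"messages": [{"role": "user", "content": prompt}]},
        "preferred_output": [{"role": "assistant", "content": preferred}],
        "non_preferred_output": [{"role": "assistant", "content": rejected}],
    }


def to_jsonl(records: list[dict]) -> str:
    """Serialize records as JSON Lines, one record per line."""
    return "\n".join(json.dumps(record) for record in records)


if __name__ == "__main__":
    # Illustrative pair only: teaches a concise house style over a wordy one.
    records = [
        make_preference_record(
            "Summarize the release notes.",
            "Three fixes shipped: login, export, and search.",
            "So, basically, there were quite a few things that changed this time around.",
        )
    ]
    print(to_jsonl(records))
```

Each pair shows the model which of two answers your team prefers, which is how style preferences get encoded without writing explicit rules.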

Here’s how I see it:

| Criterion | Gemini 2.5 Pro | GPT-4.1 |
| --- | --- | --- |
| Coding accuracy | Top-tier (Aider Polyglot leader) | Excellent (outperforms GPT-4o) |
| Context window | Up to 1–2 million tokens | 1 million tokens |
| Cost control | Configurable thinking budgets | 26% cheaper API calls than GPT-4o; 75% prompt-caching discount |
| Availability | Google AI Studio, Vertex AI (beta → GA soon) | OpenAI API, ChatGPT Plus/Pro/Team, Azure |
| Integration | Best for Google Cloud environments | Best for OpenAI/Azure ecosystems |
| Automation features | Scheduled Actions, Deep Think (beta) | N/A |
| Maximum output tokens | 64K tokens | 32,768 tokens |
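The 75% prompt-caching discount in the comparison above is easier to judge with numbers. The following sketch estimates input cost when part of a prompt is served from cache. The price of 2.00 per million tokens is a placeholder for illustration, not a quoted rate, and the function name is hypothetical.

```python
def estimate_input_cost(
    total_tokens: int,
    cached_tokens: int,
    price_per_million: float,
    cache_discount: float = 0.75,
) -> float:
    """Estimate input cost when some prompt tokens are billed at a cached discount."""
    if not 0 <= cached_tokens <= total_tokens:
        raise ValueError("cached_tokens must be between 0 and total_tokens")
    if not 0.0 <= cache_discount <= 1.0:
        raise ValueError("cache_discount must be between 0 and 1")
    uncached_tokens = total_tokens - cached_tokens
    cached_price = price_per_million * (1.0 - cache_discount)
    total = uncached_tokens * price_per_million + cached_tokens * cached_price
    return total / 1_000_000


if __name__ == "__main__":
    placeholder_price = 2.00  # hypothetical price per million input tokens
    # Same 1M-token prompt, with 0%, 50%, and 100% of it served from cache.
    for cached in (0, 500_000, 1_000_000):
        print(cached, estimate_input_cost(1_000_000, cached, placeholder_price))
```

The savings scale with the share of the prompt that repeats across calls, so caching pays off most for agents and chat sessions that resend a long, stable prefix.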

Getting Started

CometAPI provides a unified REST interface that aggregates hundreds of AI models under a consistent endpoint, with built-in API-key management, usage quotas, and billing dashboards, so you don't have to juggle multiple vendor URLs and credentials.

Developers can access Gemini 2.5 Pro (model name: gemini-2.5-pro-preview-06-05) and GPT-4.1 (model names: gpt-4.1, gpt-4.1-mini, gpt-4.1-nano) through CometAPI; the models listed are the latest as of the article’s publication date. To begin, explore the models’ capabilities and consult the CometAPI documentation for detailed instructions. Before accessing them, please make sure you have logged in to CometAPI and obtained an API key. CometAPI offers prices far lower than the official prices to help you integrate.
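As a starting point, the sketch below builds (but does not send) a chat request for one of the model names listed above. It assumes the unified interface accepts an OpenAI-style chat-completions payload with a bearer token, which this article does not confirm; the base URL is a placeholder and the function name is hypothetical, so replace both with the values from the provider's documentation.

```python
import json
import urllib.request

# Placeholder (assumption): substitute the real base URL from the provider's docs.
BASE_URL = "https://api.example.com/v1"


def build_chat_request(
    api_key: str, model: str, prompt: str, base_url: str = BASE_URL
) -> urllib.request.Request:
    """Build a POST request with an OpenAI-style chat payload (assumed format)."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    # The same helper targets either model family by changing the model name.
    for model in ("gemini-2.5-pro-preview-06-05", "gpt-4.1"):
        request = build_chat_request("YOUR_API_KEY", model, "Say hello.")
        print(request.get_method(), request.full_url, json.loads(request.data)["model"])
    # To send for real: urllib.request.urlopen(request) with a valid key and URL.
```

Switching models by changing a single string is the practical benefit of a unified endpoint: the surrounding request code stays the same.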

Wrapping up


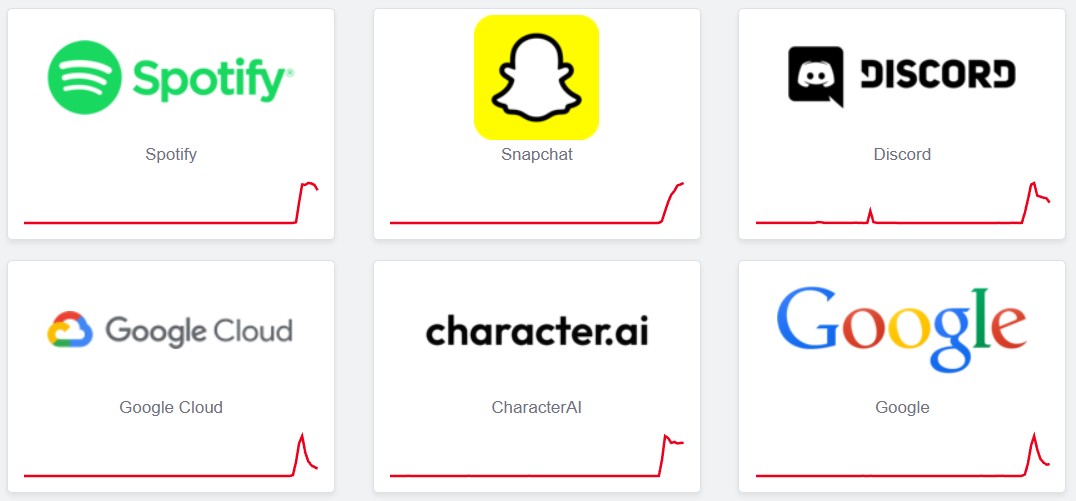
