Run Multiple AI Coding Agents in Parallel with Container-Use from Dagger

The post Run Multiple AI Coding Agents in Parallel with Container-Use from Dagger appeared first on MarkTechPost.

In AI-driven development, coding agents have become indispensable collaborators. These autonomous or semi-autonomous tools can write, test, and refactor code, dramatically accelerating development cycles. However, as the number of agents working on a single codebase grows, so do the challenges: dependency conflicts, state leakage between agents, and the difficulty of tracking each agent's actions. The container-use project from Dagger addresses these challenges by offering containerized environments tailored for coding agents. By isolating each agent in its own container, developers can run multiple agents concurrently without interference, inspect their activities in real time, and intervene directly when necessary.

Traditionally, when a coding agent executes tasks, such as installing dependencies, running build scripts, or launching servers, it does so within the developer’s local environment. This approach quickly leads to conflicts: one agent may upgrade a shared library that breaks another agent’s workflow, or an errant script may leave behind artifacts that obscure subsequent runs. Containerization elegantly solves these issues by encapsulating each agent’s environment. Rather than babysitting agents one by one, you can spin up entirely fresh environments, experiment safely, and discard failures instantly, all while maintaining visibility into exactly what each agent executed.

Moreover, because containers can be managed through familiar tools (Docker, Git, and standard CLI utilities), container-use integrates seamlessly into existing workflows. Instead of locking into a proprietary solution, teams can leverage their preferred tech stack, whether that means Python virtual environments, Node.js toolchains, or system-level packages. The result is a flexible architecture that lets developers harness the full potential of coding agents without sacrificing control or transparency.

Installation and Setup

Getting started with container-use is straightforward. The project provides a Go-based CLI tool, ‘cu’, which you build with a simple ‘make’ command and optionally install with ‘make install’. By default, the build targets your current platform, but cross-compilation is supported through the standard ‘TARGETPLATFORM’ environment variable.

```shell
# Build the CLI tool
make

# (Optional) Install into your PATH and refresh the shell's command cache
make install && hash -r
```

After ‘make install’, the ‘cu’ binary is available in your shell, ready to launch containerized sessions for any MCP-compatible agent. If you need to compile for a different architecture, say, ARM64 for a Raspberry Pi, simply prefix the build with the target platform:

```shell
TARGETPLATFORM=linux/arm64 make
```

This flexibility ensures that whether you're developing on macOS, Windows Subsystem for Linux, or any flavor of Linux, you can generate an environment-specific binary with ease.

Integrating with Your Favorite Agents

One of container-use’s strengths is its compatibility with any agent that speaks the Model Context Protocol (MCP). The project provides example integrations for popular tools like Claude Code, Cursor, GitHub Copilot, and Goose. Integration typically involves adding ‘container-use’ as an MCP server in your agent’s configuration and enabling it:

Claude Code registers the server with its ‘mcp add’ command, run here through ‘npx’. You can then merge Dagger's recommended instructions into your ‘CLAUDE.md’ so that, when you run ‘claude’, Claude Code carries out its work in isolated container environments:

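```shell
npx @anthropic-ai/claude-code mcp add container-use -- $(which cu) stdio

# Append (>>) rather than overwrite (-o) so existing CLAUDE.md instructions survive
curl -fsSL https://raw.githubusercontent.com/dagger/container-use/main/rules/agent.md >> CLAUDE.md
```

Goose, Block's open-source AI agent that runs on your own machine, reads its configuration from ‘~/.config/goose/config.yaml’. Adding a ‘container-use’ entry under ‘extensions’ registers ‘cu’ as a stdio extension, so Goose carries out its work inside container-use environments: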

```yaml
extensions:
  container-use:
    name: container-use
    type: stdio
    enabled: true
    cmd: cu
    args:
      - stdio
    envs: {}
```

Cursor, the AI code editor, can be hooked up by dropping a rule file into your project: use ‘curl’ to fetch the recommended rule and save it as ‘.cursor/rules/container-use.mdc’.
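Both the Cursor step and the ‘CLAUDE.md’ step come down to fetching a rules file from the container-use repository. The POSIX shell sketch below wraps each step in a small helper. The helper names, the marker comment, and the Cursor rule URL (‘rules/cursor.mdc’) are assumptions for illustration, not part of container-use; only ‘rules/agent.md’ appears in the commands above. Appending, as ‘append_claude_rules’ does, keeps any instructions already in ‘CLAUDE.md’, whereas ‘curl -o’ would replace the file.

```shell
#!/bin/sh
# Sketch: install container-use agent rules for Cursor and Claude Code.
# Assumptions: the cursor.mdc URL and the marker comment are illustrative,
# not taken from container-use itself.

CU_RULES_BASE="${CU_RULES_BASE:-https://raw.githubusercontent.com/dagger/container-use/main/rules}"
CU_MARKER='<!-- container-use rules -->'   # hypothetical marker used to detect a previous merge

# Save the Cursor rule as .cursor/rules/container-use.mdc, creating the directories.
install_cursor_rule() {
  url="${1:-$CU_RULES_BASE/cursor.mdc}"
  curl -fsSL --create-dirs -o .cursor/rules/container-use.mdc "$url"
}

# Append the agent rules to CLAUDE.md once, keeping whatever the file already holds.
append_claude_rules() {
  url="${1:-$CU_RULES_BASE/agent.md}"
  target="${2:-CLAUDE.md}"
  if [ -f "$target" ] && grep -qF "$CU_MARKER" "$target"; then
    return 0   # rules were merged on a previous run
  fi
  # -f makes curl fail on HTTP errors instead of returning an error page
  rules=$(curl -fsSL "$url") || return 1
  printf '\n%s\n%s\n' "$CU_MARKER" "$rules" >> "$target"
}
```

Run both helpers from the project root. A second call to ‘append_claude_rules’ leaves ‘CLAUDE.md’ unchanged because the marker is already present.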

VS Code and GitHub Copilot users register ‘cu’ as an MCP server in ‘settings.json’ and add the container-use instructions to ‘.github/copilot-instructions.md’. Copilot's agent then runs its commands inside the isolated environment. Kilo Code integrates through a JSON settings file, where you specify the ‘cu’ command and any required arguments under ‘mcpServers’. Each of these integrations ensures that, regardless of which assistant you choose, your agents operate in their own sandbox. This removes the risk of cross-contamination and simplifies cleanup after each run.
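For clients configured through an ‘mcpServers’ JSON object, such as Kilo Code, the entry can be generated rather than typed by hand. The following is a minimal sketch. The ‘command’/‘args’/‘env’ field names follow the MCP server layout shared by several clients, and the default path ‘.kilocode/mcp.json’ is a hypothetical location, so check your client's documentation for the exact file. The helper overwrites its target, so merge by hand if you already have other servers configured.

```shell
#!/bin/sh
# Sketch: write a container-use MCP server entry in the common "mcpServers"
# JSON shape. Field names and the default output path are assumptions.

write_cu_mcp_config() {
  out="${1:-.kilocode/mcp.json}"   # hypothetical default location
  # Prefer the absolute path to cu when it is on PATH, otherwise fall back to "cu".
  cu_path=$(command -v cu 2>/dev/null || echo cu)
  mkdir -p "$(dirname "$out")"
  cat > "$out" <<EOF
{
  "mcpServers": {
    "container-use": {
      "command": "$cu_path",
      "args": ["stdio"],
      "env": {}
    }
  }
}
EOF
}
```

Calling ‘write_cu_mcp_config’ with no argument writes to the default path; pass a path to target a different settings file.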

Hands-On Examples

To illustrate how container-use fits into a development workflow, the Dagger repository includes several ready-to-run examples. These demonstrate typical use cases and highlight the tool's flexibility:

- Hello World: In this minimal example, an agent scaffolds a simple HTTP server, say, using Flask or Node’s ‘http’ module, and launches it within its container. You can hit ‘localhost’ in your browser to confirm that the code generated by the agent runs as expected, entirely isolated from your host system.

- Parallel Development: Here, two agents spin up distinct variations of the same app, one using Flask and another using FastAPI, each in its own container and on separate ports. This scenario demonstrates how to evaluate multiple approaches side by side without worrying about port collisions or dependency conflicts.

- Security Scanning: In this pipeline, an agent performs routine maintenance, updating vulnerable dependencies, rerunning the build to ensure nothing broke, and generating a patch file that captures all changes. The entire process unfolds in a throwaway container, leaving your repository in its original state unless you decide to merge the patches.

Running these examples is as simple as piping the example file into your agent command. For instance, with Claude Code:

```shell
cat examples/hello_world.md | claude
```

Or with Goose:

```shell
goose run -i examples/hello_world.md -s
```

After execution, you'll see that each agent has committed its work to a dedicated git branch representing its container. Inspecting these branches via ‘git checkout’ lets you review, test, or merge changes on your own terms.
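You can also drive the Parallel Development scenario from your own shell by starting several agent runs as background jobs and waiting for all of them to finish. The sketch below assumes an agent that, like ‘claude’ in the command above, accepts its prompt on standard input. The function name and the ‘.log’ naming scheme are hypothetical. Isolation between the runs comes from container-use, not from the script.

```shell
#!/bin/sh
# Sketch: run several agent prompts concurrently, one background job per file.
# run_agents_in_parallel AGENT_CMD PROMPT_FILE...
# Each prompt file is fed to the agent on stdin; output goes to <file>.log.
run_agents_in_parallel() {
  agent_cmd=$1
  shift
  pids=""
  for prompt in "$@"; do
    # $agent_cmd is intentionally unquoted so "cmd --flag" splits into words.
    $agent_cmd < "$prompt" > "${prompt%.md}.log" 2>&1 &
    pids="$pids $!"
  done
  status=0
  for pid in $pids; do
    wait "$pid" || status=1   # remember whether any agent run failed
  done
  return "$status"
}
```

For instance, you might run ‘run_agents_in_parallel claude examples/hello_world.md my_task.md’, where ‘my_task.md’ stands in for a second prompt file of your own. Each run writes its output to a matching ‘.log’ file, and the function returns non-zero if any run failed.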

One common concern when delegating tasks to agents is knowing what they did, not just what they claim. container-use addresses this through a unified logging interface. When you start a session, the tool records every command, output, and file change into your repository’s ‘.git’ history under a special remote called ‘container-use’. You can follow along as the container spins up, the agent runs commands, and the environment evolves.
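Because each environment ends up as a branch on the ‘container-use’ remote, plain Git is enough to see what every agent changed. The following sketch assumes that this remote exists in your repository, as described above. It uses ‘main’ as a hypothetical base branch, and the function name is our own.

```shell
#!/bin/sh
# Sketch: list each agent branch on the "container-use" remote and how many
# commits it carries on top of a base branch ("main" is a hypothetical default).
summarize_agent_branches() {
  base="${1:-main}"
  git fetch --quiet container-use || return 1
  # refname:short turns refs/remotes/container-use/x into container-use/x
  git for-each-ref --format='%(refname:short)' refs/remotes/container-use/ |
    while read -r ref; do
      ahead=$(git rev-list --count "$base..$ref")
      printf '%s\t%s commit(s) ahead of %s\n' "$ref" "$ahead" "$base"
    done
}
```

To dig into one environment, run ‘git diff main...container-use/<branch>’, where ‘<branch>’ is one of the names the function prints. This shows the agent's changes relative to the base.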

If an agent encounters an error or goes off track, you don’t have to watch logs in a separate window. A simple command brings up an interactive view:

```shell
cu watch
```

This live view shows you which container branch is active and the latest outputs, and it even gives you the option to drop into the agent's shell. From there, you can debug manually: inspect environment variables, run your own commands, or edit files on the fly. This direct intervention capability ensures that agents remain collaborators rather than inscrutable black boxes.

While the default container images provided by container-use cover many Node.js, Python, and system-level use cases, you might have specialized needs, such as custom compilers or proprietary libraries. Fortunately, you can control the Dockerfile that underpins each container. By placing a ‘Containerfile’ (or ‘Dockerfile’) at the root of your project, the ‘cu’ CLI will build a tailor-made image before launching the agent. This approach enables you to pre-install system packages, clone private repositories, or configure complex toolchains, all without affecting your host environment.

A typical custom Dockerfile might start from an official base, add OS-level packages, set environment variables, and install language-specific dependencies:

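```dockerfile
FROM ubuntu:22.04

# OS-level packages; python3-pip is needed because the base image ships without pip
RUN apt-get update \
    && apt-get install -y --no-install-recommends git build-essential python3 python3-pip \
    && rm -rf /var/lib/apt/lists/*

# Example environment variable: unbuffered Python output for live logs
ENV PYTHONUNBUFFERED=1

WORKDIR /workspace

COPY requirements.txt .

RUN python3 -m pip install --no-cache-dir -r requirements.txt
```

Once you've defined your container, any agent you invoke will operate within that context by default, inheriting all the pre-configured tools and libraries you need.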

In conclusion, as AI agents undertake increasingly complex development tasks, the need for robust isolation and transparency grows in parallel. container-use from Dagger offers a pragmatic solution: containerized environments that ensure reliability, reproducibility, and real-time visibility. By building on standard tools, including Docker, Git, and shell scripts, and offering seamless integrations with popular MCP-compatible agents, it lowers the barrier to safe, scalable, multi-agent workflows.

The post Run Multiple AI Coding Agents in Parallel with Container-Use from Dagger appeared first on MarkTechPost.

![[The AI Show Episode 152]: ChatGPT Connectors, AI-Human Relationships, New AI Job Data, OpenAI Court-Ordered to Keep ChatGPT Logs & WPP’s Large Marketing Model](https://www.marketingaiinstitute.com/hubfs/ep%20152%20cover.png)

![[DEALS] Microsoft Visual Studio Professional 2022 + The Premium Learn to Code Certification Bundle (97% off) & Other Deals Up To 98% Off](https://www.javacodegeeks.com/wp-content/uploads/2012/12/jcg-logo.jpg)

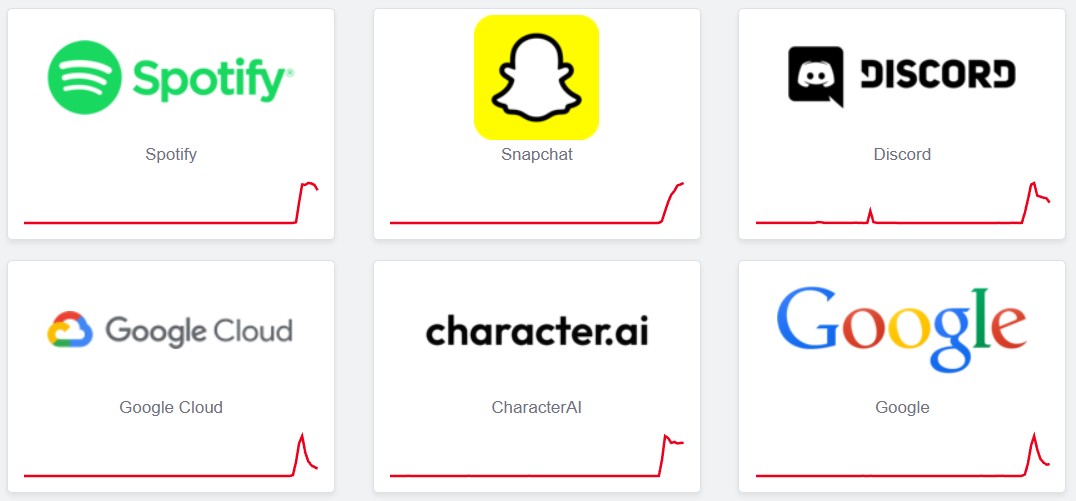

![PSA: Widespread internet outage affects Spotify, Google, Discord, Cloudflare, more [U: Fixed]](https://i0.wp.com/9to5mac.com/wp-content/uploads/sites/6/2024/07/iCloud-Private-Relay-outage-resolved.jpg?resize=1200%2C628&quality=82&strip=all&ssl=1)

![Apple Shares Teaser Trailer for 'The Lost Bus' Starring Matthew McConaughey [Video]](https://www.iclarified.com/images/news/97582/97582/97582-640.jpg)