Kubernetes: The Backbone of Modern Cloud-Native Applications

In the world of cloud computing, efficiency and scalability are key. With the rise of containerized applications, managing them manually is not only tedious but also prone to errors. Enter Kubernetes—the open-source system that automates deployment, scaling, and management of containerized applications, ensuring seamless operations.

The Problem with Manual Container Management

Imagine running a web application with thousands of users. If traffic suddenly spikes, manually deploying more container instances is time-consuming. If a container crashes, replacing it manually can lead to downtime. Also, distributing incoming traffic evenly across instances requires intricate configurations. These challenges make manual container management inefficient.

ECS: A Step Forward

Amazon Elastic Container Service (ECS) already addresses several of these issues:

- Automatic health checks ensure failed containers are replaced.

- Autoscaling dynamically adjusts resources based on demand.

- Load balancing distributes traffic efficiently among containers.

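However, ECS is deeply tied to AWS, which raises concerns about cloud provider lock-in. If an organization wishes to migrate to another provider, its container images carry over, but the ECS-specific configuration (task definitions, services, scaling rules) has to be rebuilt for the new platform.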

The Advantage of EKS: Freedom from Cloud Lock-In

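Amazon Elastic Kubernetes Service (EKS) provides the same automation benefits as ECS, but it runs standard Kubernetes. EKS itself is still an AWS service; the portability comes from Kubernetes being cloud-agnostic. Workloads are described as Kubernetes objects that any conforming cluster understands, so moving to another provider mostly means re-creating the provider-specific pieces (load balancers, storage, identity integration) rather than rewriting how the application is deployed.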

What Kubernetes Is Not

Before diving deeper, let’s clear up a few misconceptions:

- Kubernetes is not a cloud service provider—it’s an open-source project.

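- Kubernetes itself is not a paid service: it is free, open-source software. You still pay for the machines it runs on, and managed offerings such as EKS charge for the service.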

- It is not a direct alternative to Docker. It orchestrates containers built from Docker (OCI) images across multiple machines, running them through a container runtime such as containerd.

- It’s not just software running on a machine but a collection of tools and concepts that facilitate efficient containerized application management.

Kubernetes vs. Docker Compose: Scaling Beyond One Machine

If you’ve used Docker Compose, you know it helps manage multi-container applications on a single machine. Kubernetes takes this concept to the next level by distributing applications across multiple machines, ensuring high availability and fault tolerance.
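To make the fault-tolerance point concrete, here is a minimal Python sketch of what spreading replicas over several machines buys you. It is an illustration only: the round-robin placement, the `web-N` replica names, and the `node-a`/`node-b`/`node-c` node names are assumptions made for this example, and the real Kubernetes scheduler weighs many more factors.

```python
from collections import Counter
from itertools import cycle


def spread_replicas(replicas: int, nodes: list[str]) -> dict[str, str]:
    """Assign replica names to nodes round-robin (a simplification of real scheduling)."""
    node_cycle = cycle(nodes)
    return {f"web-{i}": next(node_cycle) for i in range(replicas)}


def surviving_replicas(placement: dict[str, str], failed_node: str) -> list[str]:
    """Return the replicas that keep running when one node goes down."""
    return [pod for pod, node in placement.items() if node != failed_node]


# Hypothetical three-node cluster running six replicas of one application.
placement = spread_replicas(replicas=6, nodes=["node-a", "node-b", "node-c"])
print(Counter(placement.values()))
# Counter({'node-a': 2, 'node-b': 2, 'node-c': 2})

# Losing one node still leaves four replicas serving traffic.
print(surviving_replicas(placement, failed_node="node-b"))
# ['web-0', 'web-2', 'web-3', 'web-5']
```

With Docker Compose on a single machine, the same failure would take down every replica at once.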

Kubernetes Architecture: Division of Labor

| Kubernetes Tasks | User Responsibilities |
|---|---|
| Creates and manages objects like Pods | Sets up the cluster and node instances (Master + Worker Nodes) |
| Monitors Pods, restarts failed instances, scales applications | Installs API server, kubelet, and other Kubernetes services |
| Utilizes cloud provider resources to enforce configurations | Sets up additional resources (Load Balancer, Filesystems) |
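The Kubernetes side of this table boils down to comparing a desired state with what is actually running and closing the gap. The Python sketch below shows one such pass under simplifying assumptions: `reconcile` is a hypothetical helper written for this article, not a Kubernetes API, and Pods are represented as plain names.

```python
def reconcile(desired_replicas: int, running_pods: list[str]) -> list[str]:
    """Return the pod list after one reconciliation pass.

    Hypothetical helper: starts pods when too few are running and drops
    extras when too many are, so the result matches the desired count.
    """
    pods = list(running_pods)
    next_id = 0
    while len(pods) < desired_replicas:
        name = f"web-{next_id}"
        if name not in pods:
            pods.append(name)  # stand-in for starting a replacement pod
        next_id += 1
    return pods[:desired_replicas]  # stand-in for stopping surplus pods


# Two of three pods survived a crash; one pass restores the third.
print(reconcile(3, ["web-0", "web-2"]))  # ['web-0', 'web-2', 'web-1']

# Lowering the desired count scales the application down.
print(reconcile(1, ["web-0", "web-2"]))  # ['web-0']
```

The user never restarts anything by hand in this model; they only change the desired number and let the loop converge.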

Core Components Explained

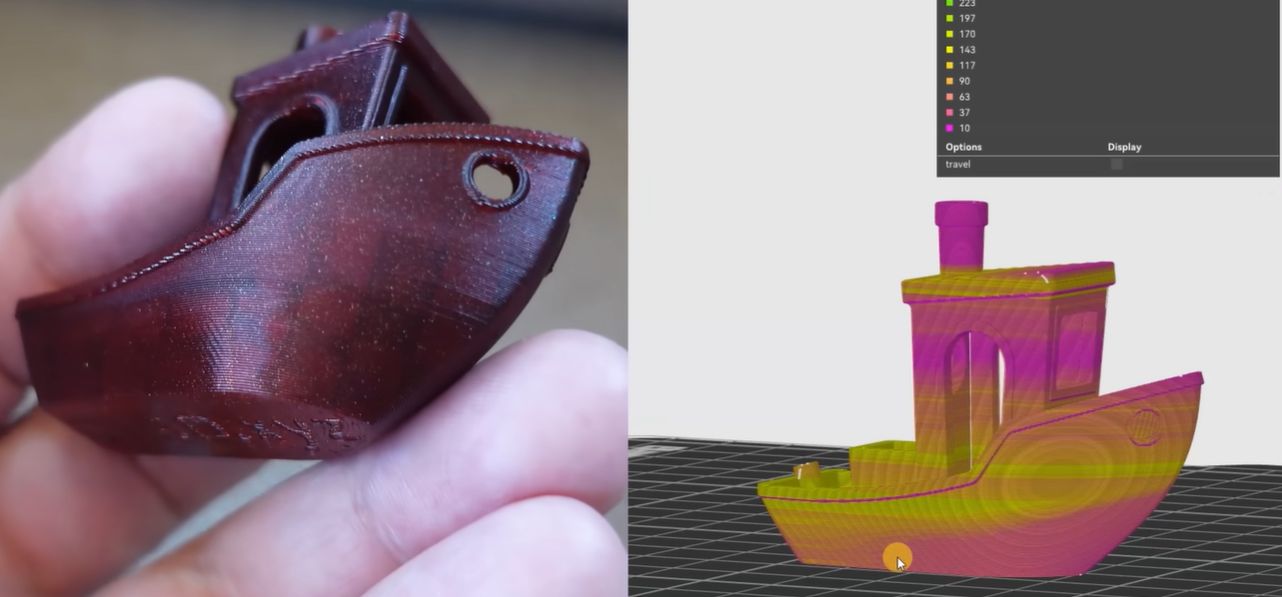

- Cluster – The fundamental Kubernetes unit, consisting of multiple nodes.

- Nodes – Individual physical/virtual machines that host Pods.

- Master Node – The node that runs the control plane (current Kubernetes documentation calls it a control plane node), which orchestrates applications across Worker Nodes.

- Worker Node – Machines that run application containers inside Pods.

- Pods – The smallest deployable unit in Kubernetes, holding containers and required resources.

- Containers – Standard containers, typically built as Docker (OCI) images, that encapsulate applications.

- Services – A logical set of Pods with a stable IP address, enabling reliable communication.
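The components above meet in the objects you hand to the cluster. As a rough sketch, the Python script below builds a Deployment (which manages Pods and their containers) and a Service (which gives those Pods a stable address) and prints them as JSON. The `shop-web` name, the `example.com/shop-web:1.0` image, and the port numbers are placeholders assumed for illustration.

```python
import json

LABELS = {"app": "shop-web"}  # hypothetical label tying the Service to its Pods

deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "shop-web"},
    "spec": {
        "replicas": 3,  # desired number of Pods
        "selector": {"matchLabels": LABELS},
        "template": {  # the Pod template
            "metadata": {"labels": LABELS},
            "spec": {
                "containers": [
                    {
                        "name": "web",
                        "image": "example.com/shop-web:1.0",  # placeholder image
                        "ports": [{"containerPort": 8080}],
                    }
                ]
            },
        },
    },
}

service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "shop-web"},
    "spec": {
        "selector": LABELS,  # routes to every Pod carrying these labels
        "ports": [{"port": 80, "targetPort": 8080}],
    },
}

manifest = {"apiVersion": "v1", "kind": "List", "items": [deployment, service]}
print(json.dumps(manifest, indent=2))
```

Manifests are more commonly written in YAML, but `kubectl apply -f` also accepts JSON, so the printed output could be saved to a file and applied to a test cluster once the placeholder image is replaced with a real one.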

Real-World Example: Scaling an E-Commerce Platform

Consider an e-commerce platform during Black Friday sales. Without Kubernetes, developers would manually scale up instances, monitor failures, and distribute traffic across containers. With Kubernetes:

- Autoscaling would provision additional Pods in response to traffic spikes.

- Failed containers would automatically restart, ensuring seamless user experiences.

- Load balancing would distribute traffic across the healthy Pods, wherever in the cluster they run.
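The autoscaling step can be made concrete with a little arithmetic. The Horizontal Pod Autoscaler documentation describes its core rule as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The sketch below applies that rule and clamps the result to a minimum and maximum; the CPU figures and replica limits are invented for the Black Friday scenario, and the real autoscaler adds tolerances and stabilization windows that are left out here.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Apply the HPA scaling rule, then clamp to the configured bounds."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# Traffic spike: 4 Pods averaging 90% CPU against a 50% target.
print(desired_replicas(4, current_metric=90, target_metric=50))  # 8

# The same spike with a lower ceiling is capped at max_replicas.
print(desired_replicas(4, current_metric=90, target_metric=50, max_replicas=6))  # 6

# After the sale: 8 Pods averaging 20% CPU scale back down.
print(desired_replicas(8, current_metric=20, target_metric=50))  # 4
```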

Kubernetes isn't just a tool; it changes how modern applications are deployed and scaled. By reducing manual intervention, keeping applications highly available, and loosening the ties to any single cloud provider, it has become the backbone of cloud-native architectures.
