If Your AI Is Hallucinating, Don’t Blame the AI

AI “hallucinations” – those convincing-sounding but false answers – draw a lot of media attention, as with the recent New York Times article “AI Is Getting More Powerful, But Its Hallucinations Are Getting Worse.” Hallucinations are a real hazard when you’re dealing with a consumer chatbot. In the context of business applications of AI, they are an even more serious concern. Fortunately, as a business technology leader, I also have more control over them. I can make sure the agent has the right data to produce a meaningful answer.

Because that’s the real problem. In business, there is no excuse for AI hallucinations. Stop blaming AI. Blame yourself for not using AI properly.

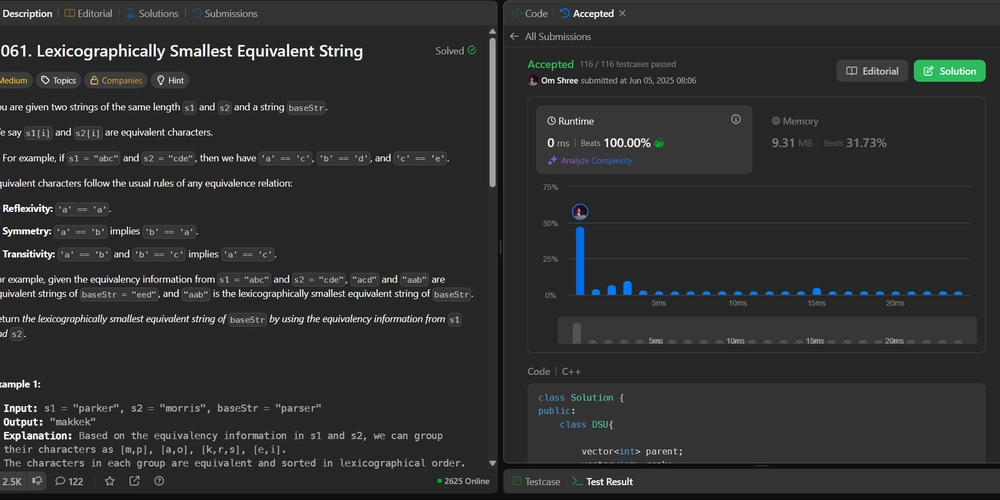

When generative AI tools hallucinate, they are doing what they are designed to do – provide the best answer they can based on the data they have available. When they make stuff up, producing an answer that is not based in reality, it’s because they’re missing the relevant data, can’t find it, or don’t understand the question. Yes, new models like OpenAI’s o3 and o4-mini are hallucinating more, acting even more “creative” when they don’t have a good answer to the question that’s been posed to them. Yes, more powerful tools can hallucinate more – but they can also produce more powerful and valuable results if we set them up for success.

If you don’t want your AI to hallucinate, don’t starve it of data. Feed the AI the best, most relevant data for the problem you want it to solve, and it won’t be tempted to go astray.

Even then, when working with any AI tool, I recommend keeping your critical thinking skills intact. The results AI agents deliver can be productive and delightful, but the point is not to unplug your brain and let the software do all the thinking for you. Keep asking questions. When an AI agent gives you an answer, question that answer to be sure it makes sense and is backed by data. If it is, that’s an encouraging sign that it’s worth your time to ask follow-up questions.

The more you question, the better insights you will get.

Why hallucinations happen

It’s not some mystery. The AI is not trying to lie to you. Every large language model (LLM) is essentially predicting the next word or number based on probability.

At a high level, what’s happening here is that LLMs string together sentences and paragraphs one word at a time, predicting the next word that should occur in the sentence based on billions of other examples in their training data. The ancestors of LLMs (aside from Clippy) were autocomplete prompts for text messages and computer code, automated human language translation tools, and other probabilistic linguistic systems. With increased brute-force compute power, plus training on internet-scale volumes of data, these systems got “smart” enough that they could carry on a full conversation over chat, as the world learned with the introduction of ChatGPT.
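To make the next-word idea concrete, here is a toy sketch in Python. It is not how a real LLM is built: it swaps the neural network for simple counts of which word follows which, and the corpus and function names are made up for illustration. What it does share with an LLM is the basic loop of picking a likely next word, appending it, and repeating.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus (an assumption for illustration only).
CORPUS = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."

def train_bigrams(text):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    words = text.split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts

def next_word_probabilities(counts, word):
    """Turn raw follow-counts into probabilities for the next word."""
    followers = counts.get(word, Counter())
    total = sum(followers.values())
    if total == 0:
        return {}  # the toy model has never seen this word
    return {w: n / total for w, n in followers.items()}

def generate(counts, start, length=5):
    """Greedily append the most probable next word, one word at a time."""
    out = [start]
    for _ in range(length):
        probs = next_word_probabilities(counts, out[-1])
        if not probs:
            break
        out.append(max(probs, key=probs.get))
    return " ".join(out)

if __name__ == "__main__":
    model = train_bigrams(CORPUS)
    print(next_word_probabilities(model, "the"))
    print(generate(model, "the"))
```

Note one difference that matters for hallucinations: this toy stops when it reaches a word it has never seen, while a real LLM always has some next word it considers probable, so it keeps writing even when it has nothing to go on.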

AI naysayers like to point out that this is not the same as real “intelligence,” only software that can distill and regurgitate the human intelligence that has been fed into it. Ask it to summarize data in a written report, and it imitates the way other writers have summarized similar data.

That strikes me as an academic argument as long as the data is correct and the analysis is useful.

What happens if the AI doesn’t have the data? It fills in the blanks. Sometimes it’s funny. Sometimes it’s a total mess.

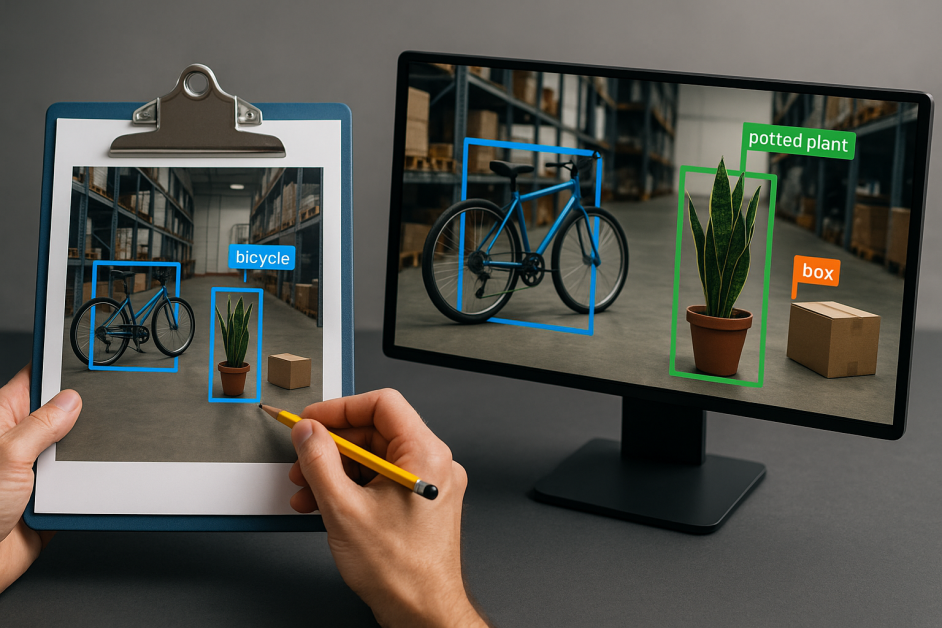

When building AI agents, this is 10x the risk. Agents are supposed to provide actionable insights, but they make more decisions along the way. They execute multi-step tasks, where the result of step 1 informs steps 2, 3, 4, 5, … 10 … 20. If the results of step 1 are incorrect, the error will be amplified, making the output at step 20 that much worse, especially as agents can make decisions and skip steps.
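A back-of-the-envelope calculation shows why long chains are risky. The sketch below assumes each step is right 95% of the time and that steps fail independently of one another. Both are illustrative assumptions, not measurements from any real agent, and the function name is hypothetical.

```python
def chance_all_steps_correct(per_step_accuracy: float, steps: int) -> float:
    """Probability that every step in a chain is correct,
    assuming each step succeeds independently with the same accuracy."""
    return per_step_accuracy ** steps

if __name__ == "__main__":
    for steps in (1, 5, 10, 20):
        # 0.95 is an assumed per-step accuracy chosen for illustration.
        print(steps, round(chance_all_steps_correct(0.95, steps), 3))
```

Under those assumptions, a 20-step task comes out fully correct only about 36% of the time, even though each individual step looks reliable. Real agents are messier, since an early error can also steer later steps in the wrong direction, but the direction of the effect is the same.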

Done right, agents accomplish more for the business that deploys them. Yet as AI product managers, we have to recognize the greater risk that goes along with the greater reward.

Which is what our team did. We saw the risk, and tackled it. We didn’t just build a fancy robot; we made sure it runs on the right data. Here is what I think we did right:

- Build the agent to ask the right questions and verify it has the right data. Make sure the initial data input process of the agent is actually more deterministic, less “creative”. You want the agent to say when it doesn’t have the right data and not continue to the next step, rather than making up the data.

- Structure a playbook for your agent – make sure it doesn’t invent a new plan every time but has a semi-structured approach. Structure and context are extremely important at the data gathering and analysis stage. You can let the agent loosen up and act more “creative” when it has the facts and is ready to write the summary, but first get the facts right.

- Build a high-quality tool to extract the data. This should be more than just an API call. Take the time to write the code (people still do that) that makes sure the right quantity and variety of data will be gathered, building quality checks into the process.

- Make the agent show its work. The agent should cite its sources and link to where the user can verify the data at the original source and explore it further. No sleight of hand allowed!

- Guardrails: Think through what could go wrong, and build in protections against the errors you absolutely cannot allow. In our case, the essential guardrail is making sure the agent tasked with analyzing a market doesn’t make something up when it doesn’t have the data – by which I mean our Similarweb data, not some random data source pulled from the web. Better for the agent to not be able to answer than to deliver a false or misleading answer.
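Several of the points above (a deterministic data check up front, quality checks on extracted data, cited sources, and a refuse-rather-than-invent guardrail) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not our actual implementation: the `Record` and `Answer` types, the `REQUIRED_METRICS` set, the metric names, and the `analyze_market` function are all hypothetical, and the "summary" is a plain string where a real agent would hand the verified facts to an LLM.

```python
from dataclasses import dataclass, field
from typing import List, Optional

@dataclass
class Record:
    metric: str
    value: Optional[float]
    source_url: str  # where a user can verify the value

@dataclass
class Answer:
    text: str
    sources: List[str] = field(default_factory=list)
    answered: bool = True

# Hypothetical playbook requirement: the metrics this analysis cannot do without.
REQUIRED_METRICS = {"monthly_visits", "bounce_rate"}

def validate(records: List[Record]) -> List[Record]:
    """Quality check: keep only records that have a value and a verifiable source."""
    return [r for r in records if r.value is not None and r.source_url]

def analyze_market(records: List[Record]) -> Answer:
    """Run the deterministic data check first; summarize only if it passes."""
    good = validate(records)
    missing = REQUIRED_METRICS - {r.metric for r in good}
    if missing:
        # Guardrail: stop and say so instead of inventing the missing numbers.
        return Answer(
            text="Cannot answer: missing data for " + ", ".join(sorted(missing)),
            answered=False,
        )
    facts = "; ".join(f"{r.metric}={r.value}" for r in good)
    # Show the work: every fact used is returned with its source link.
    return Answer(text=f"Summary based on: {facts}",
                  sources=[r.source_url for r in good])
```

The design choice worth noticing is the order of operations: the check for missing data runs before anything "creative" happens, so the only way to get a summary is to have passed it.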

We’ve incorporated these principles into the recent release of our three new agents, with more to follow. For example, our AI Meeting Prep Agent for salespeople doesn’t just ask for the name of the target company but also for details on the goal of the meeting and who it is with, priming it to provide a better answer. It doesn’t have to guess because it uses a wealth of company data, digital data, and executive profiles to inform its recommendations.

Are our agents perfect? No. Nobody is creating perfect AI yet, not even the biggest companies in the world. But facing the problem is a hell of a lot better than ignoring it.

Want fewer hallucinations? Give your AI a nice chunk of high-quality data.

If it hallucinates, maybe it’s not the AI that needs fixing. Maybe it’s your approach to taking advantage of these powerful new capabilities without putting in the time and effort to get them right.

The post If Your AI Is Hallucinating, Don’t Blame the AI appeared first on Unite.AI.
