Return to the World of AI Mechanics

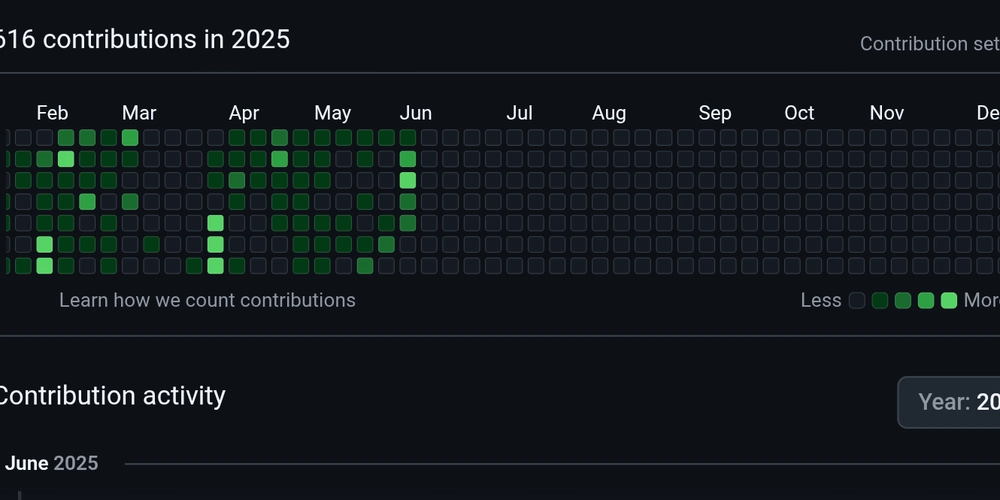

It's been over a year since I wrote Entering the World of AI Mechanics, and I happened to come back to it today as I've been reading and thinking a lot about the role of AI in engineering as well as in the wider world.

A year is long enough for several quantum leaps in genAI. Of course new models have come out since then, but the fact that I wrote this before agentic AI was not only a thing, but a regular part of my workflow, makes it feel quaint.

A year ago, my main experience with AI was adding it as a code snippet-generating tool to one of the apps I work on in my Day Job. My point of view came from thinking about the user experience of a feature that wouldn't always behave the way a user (or we, the programmers) expected it to, and about how to adjust to that uncertainty. Our users were used to software that always produced the same result from the same input, and if it didn't, they'd file a ticket, since clearly we had introduced a regression that needed to be fixed. The genAI feature broke that contract between us and our users, and suddenly we had to account for unexpected results as well as unexpected reactions.

As a user though, back then genAI was an occasionally helpful, often wrong, ever-annoying chatbot that was being forced on me at work and invading other software I relied on in nonintuitive, rarely useful ways. That AI very much still exists today, and I ignore it much as I did a year ago. Sure, generating images or recipes from ChatGPT has been entertaining and somewhat useful, but not enough to become a habit.

What changed between then and now is agentic AI. The difference between chat and agents is that I actually want to use the latter now, because it's not only not terrible, it's actually helpful for select tasks. My go-to example is writing unit tests, because:

- They have a well-defined scope and purpose

- In a production codebase, there are plenty of examples to give the LLM to show style, which testing library to use, mocking examples, etc.

- I fucking hate writing them

It's been a huge boost to my productivity to tell the AI, "I updated the code for myAwesomeFunction.ts, can you now update the tests in myAwesomeFunction.test.ts for me?" And the best part is that agentic AI will make the changes, run the tests, then revise as needed on its own until tests, type checks, linting, etc. all pass, all while I move on to the next feature.
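The make-a-change, run-the-checks, revise-until-green loop can be sketched roughly like this. This is a minimal sketch under stated assumptions, not how any particular agent tool actually works: `runAgentLoop`, `Check`, `Revise`, and the `maxAttempts` cap are hypothetical names, and the checks and the revision step are stubbed out where a real agent would shell out to the test runner, type checker, and linter and ask an LLM for a fix.

```typescript
// Hypothetical result of one check (tests, type check, lint, ...).
interface CheckResult {
  name: string;
  passed: boolean;
  output: string;
}

// A check runs against the current state of the code and reports back.
type Check = () => CheckResult;

// Hypothetical revision step: given the failing checks, change the code.
// In a real agent this is where the LLM would be called.
type Revise = (failures: CheckResult[]) => void;

interface LoopOutcome {
  passed: boolean;
  attempts: number;
}

// Run every check; if anything fails, revise and try again, up to a cap.
function runAgentLoop(
  checks: Check[],
  revise: Revise,
  maxAttempts = 5,
): LoopOutcome {
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    const failures = checks.map((check) => check()).filter((r) => !r.passed);
    if (failures.length === 0) {
      return { passed: true, attempts: attempt };
    }
    // Only revise if another round of checks will follow.
    if (attempt < maxAttempts) {
      revise(failures);
    }
  }
  // Give up and hand the problem back to a human.
  return { passed: false, attempts: maxAttempts };
}

// Usage with a fake codebase that needs two revisions before tests pass.
let remainingBugs = 2;
const fakeChecks: Check[] = [
  () => ({ name: "tests", passed: remainingBugs === 0, output: `${remainingBugs} failing` }),
  () => ({ name: "lint", passed: true, output: "" }),
];
const fakeRevise: Revise = () => {
  remainingBugs -= 1;
};

const outcome = runAgentLoop(fakeChecks, fakeRevise);
console.log(outcome); // { passed: true, attempts: 3 }
```

The attempt cap is the important design choice here: without it, an agent that keeps failing would loop forever instead of stopping and letting a person take over.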

This is AI that actually has a purpose; I'm not losing anything by not copy-pastaing code from similar tests, there is no test writer on our team that will lose their job because of this, and even junior developers will benefit from not having to do as many tedious tasks as they learn the codebase.

The caveat here is that, at the moment, agents should mainly be used for these kinds of tedious, well-defined tasks, as they still fall apart when given more complexity to work with, even with good guidelines. Additionally, the more complex tasks are the ones I want to do myself; writing code with the user and developer experience in mind is something I know I can do a hell of a lot better than an LLM right now, and it's also something I enjoy doing (for the most part). I may still tag in an agent to help with debugging, but more often than not it serves as a rubber duck rather than actually solving the problem.

But back to my original post, and particularly this part:

> Quantum physics only applies at the subatomic level. Once you have a mass of atoms comprising, for example, a ball, it behaves in a very classical way. Perhaps masses of software, say at an enterprise or at the infrastructure level, should behave in a classical, predictable, and repeatable way.

Yesterday, I read Steve Yegge's Revenge of the junior developer (I'm late to the party, I know. Two months is a geological era these days). The part that hit me the hardest was "Part 5: The agent fleet is coming", because OF COURSE IT IS. This is already apparent to anyone who uses agents and sees people building workflows where two agents interact with one another, or open-source tooling that lets you pull in multiple agent instances. Fleets are coming, and while I'm ambivalent about the direction this will take software engineering teams, I'm hopeful that they may be the answer to the uncertainty I feel when using LLMs today.

If one LLM is a quantum particle, and agents are several particles interacting at the quantum level, my hope is that fleets bring us back to the classical world of more certain outputs.