Kernelized Normalizing Constant Estimation: Bridging Bayesian Quadrature and Bayesian Optimization

Xu Cai is the first author.

In this paper, a normalizing constant for a function $f$ in a reproducing kernel Hilbert space (RKHS) is considered as follows:

$$
Z(f) = \int_{D} e^{-\lambda f(x)} \, dx, \quad \lambda > 0
$$

The paper considers lower and upper bounds for $f \in \mathrm{RKHS}$.

For the lower bound, it treats the noiseless setting and the noisy setting separately.
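
To make the estimated quantity concrete, here is a minimal sketch that approximates $Z(f) = \int_{D} e^{-\lambda f(x)} \, dx$ from noiseless queries to $f$. It is not the algorithm from the paper: it simply applies the trapezoidal rule on a uniform grid. The function name `estimate_normalizing_constant`, the one-dimensional domain $D = [0, 1]$, the grid size, and the test function $f(x) = x$ are all assumptions made for illustration.

```python
import numpy as np


def estimate_normalizing_constant(f, lam, n_queries=1001, lower=0.0, upper=1.0):
    """Approximate Z(f) = integral over [lower, upper] of exp(-lam * f(x)) dx.

    Illustrative assumption: f is queried without noise on a uniform grid,
    and the integral is computed with the trapezoidal rule.
    """
    if lam <= 0:
        raise ValueError("lam must be positive")
    x = np.linspace(lower, upper, n_queries)   # query locations in D
    y = np.exp(-lam * f(x))                    # integrand at each query
    dx = (upper - lower) / (n_queries - 1)     # grid spacing
    # Trapezoidal rule: the two endpoints get half weight.
    return dx * (y.sum() - 0.5 * (y[0] + y[-1]))


# Hypothetical test function f(x) = x, for which the closed form is
# Z = (1 - exp(-lam)) / lam.
lam = 2.0
z_hat = estimate_normalizing_constant(lambda x: x, lam)
z_exact = (1.0 - np.exp(-lam)) / lam
print(z_hat, z_exact)
```

A fixed grid ignores the structure of $f$, so this only illustrates what $Z(f)$ is. How many queries are needed, and how that changes with $\lambda$ and with noise, is the question the paper's bounds address.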

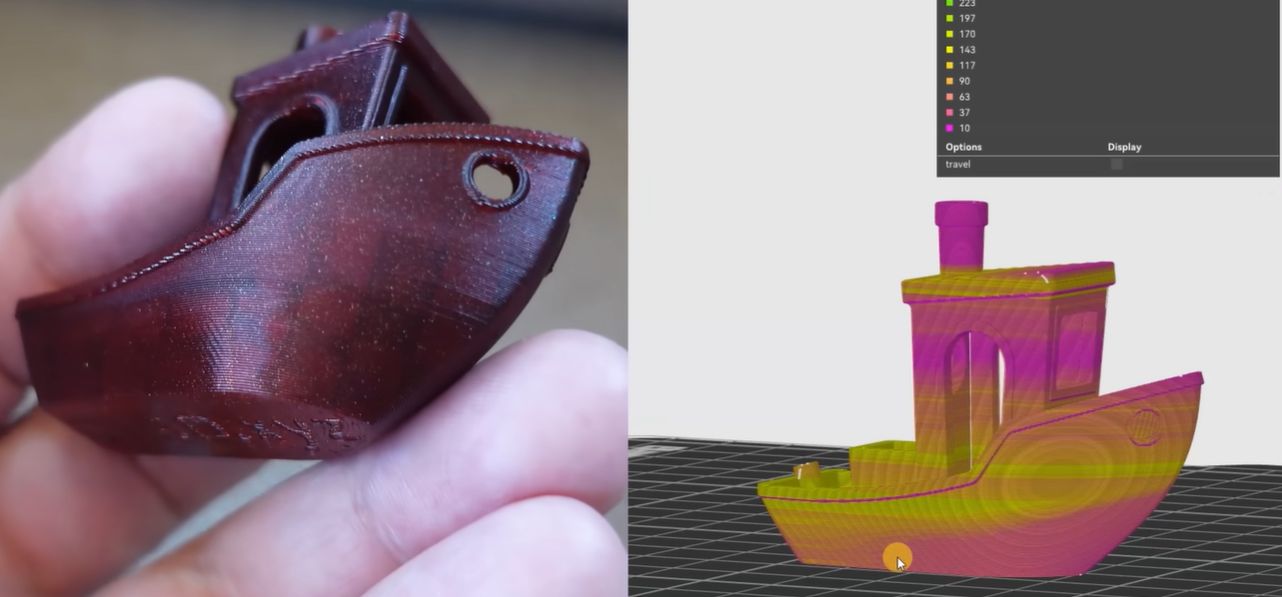

The approach is applicable to functions such as a multi-layer perceptron and a point spread function.

The authors therefore conducted experiments with various choices of $f$.

They then discuss the results of these experiments.
