A2A vs MCP: Connecting AI Agents and Tools

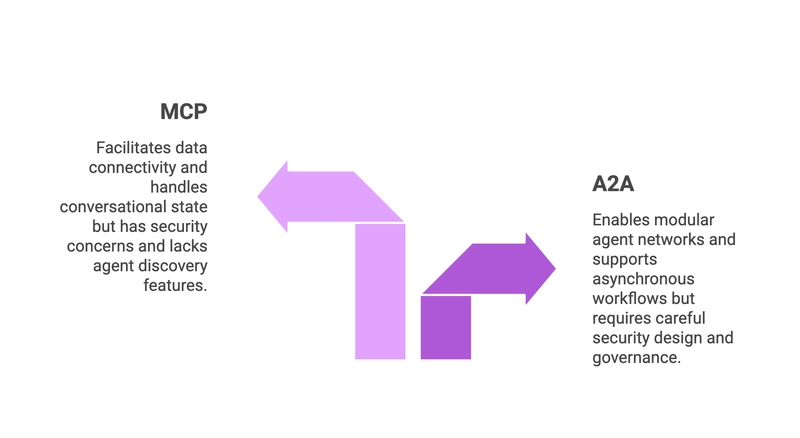

The AI ecosystem is buzzing with new standards for how models, tools, and agents interconnect. Two big newcomers are Google’s A2A (Agent-to-Agent) protocol and Anthropic’s MCP (Model Context Protocol). At a high level, A2A sets out to standardize how complete AI agents talk and collaborate, while MCP standardizes how a language model hooks up to tools and data sources.

In practice, the two have different goals and architectures but can actually complement each other (and even overlap) in building agent-based systems. Let’s dive into what each protocol is, how they’re designed, and why both matter for the future of AI products.

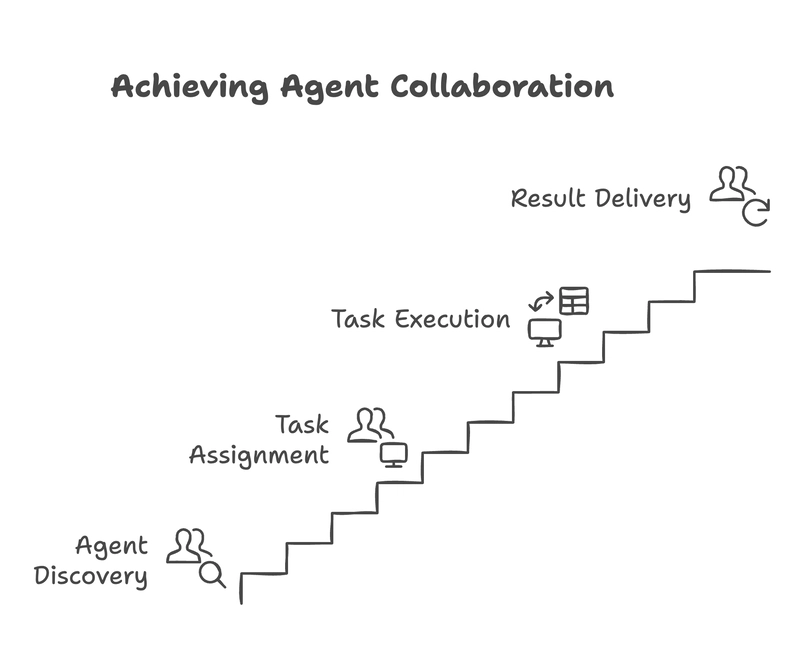

What is A2A (Agent-to-Agent)?

A2A is Google’s open spec for agents to talk to each other. In this vision, each agent is a self-contained AI service (an LLM plus any tools or functions it uses), and A2A defines a standard communication framework for those agents. In practice, A2A uses a client–server style architecture where one agent can discover and delegate tasks to another, exchanging messages and “artifacts” as the work progresses.

Each agent publishes an agent card (a JSON profile listing its capabilities and endpoints) so others can find it. Under the hood, A2A uses HTTP/HTTPS with JSON-RPC calls for structured requests and Server-Sent Events for streaming replies, all packaged in JSON.
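To make this concrete, here is a minimal Python sketch of what an agent card and a task hand-off request might look like on the wire. The fields follow the general shape of the A2A spec, but the flight agent, its endpoint URL, and the exact payload are illustrative assumptions. The method name `message/send` and the `kind` field on message parts come from later spec revisions; early drafts used `tasks/send` and a `type` field, so check the revision you target.

```python
import json
import uuid

# Hypothetical agent card for a flight-booking agent. Field names are loosely
# modeled on the published A2A spec; the values are made up for illustration.
agent_card = {
    "name": "Flight Booking Agent",
    "description": "Searches and books flights.",
    "url": "https://flights.example.com/a2a",  # placeholder endpoint
    "version": "1.0.0",
    "provider": {"organization": "Example Travel Co."},
    "capabilities": {"streaming": True},
    "skills": [
        {"id": "book-flight", "name": "Book a flight",
         "description": "Find and book flights between two cities."}
    ],
}


def make_send_request(text: str) -> dict:
    """Build a JSON-RPC 2.0 request that hands a text message to a remote agent.

    Assumes the `message/send` method from later A2A revisions.
    """
    return {
        "jsonrpc": "2.0",
        "id": str(uuid.uuid4()),  # request id, echoed back in the response
        "method": "message/send",
        "params": {
            "message": {
                "role": "user",
                "messageId": str(uuid.uuid4()),
                "parts": [{"kind": "text", "text": text}],
            }
        },
    }


if __name__ == "__main__":
    # The card is what a client fetches during discovery; the request is what
    # it would POST to agent_card["url"].
    print(json.dumps(agent_card, indent=2))
    print(json.dumps(make_send_request("Find a flight from NYC to Paris on May 3"), indent=2))
```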

Concretely, A2A lets AI agents (say, a “travel planner” bot) talk to other specialized agents (e.g. “flight-booking agent”, “hotel-finder agent”) in a consistent way. The protocol even handles task hand-offs, progress updates, error handling, and follow-up questions. In the words of Google’s documentation, “A2A is a standardized communication framework that enables AI agents to interact with each other in a consistent, predictable way”.

Key components of A2A include:

Agent Cards: JSON profiles advertising what an agent can do (its name, provider, endpoint, and capabilities)

Tasks and Messages: A “task” is a job handed off to an agent; it has lifecycle states (submitted, working, input-required, completed, etc.) and carries message envelopes and artifacts

Client–Server Model: Agents act as clients or servers dynamically. One agent (client) may assign tasks to another (server), but roles can shift as agents collaborate

Supported Patterns: A2A handles asynchronous or long-running tasks (streaming partial results), multimodal payloads, clarifications, and standardized error formats
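The task lifecycle is easiest to picture as a small state machine. The Python sketch below uses a subset of the state names from the A2A spec (`submitted`, `working`, `input-required`, `completed`, `canceled`, `failed`); the transition table itself is an illustrative assumption rather than something copied from the spec.

```python
from enum import Enum


class TaskState(str, Enum):
    # A subset of the task state names used by the A2A spec.
    SUBMITTED = "submitted"
    WORKING = "working"
    INPUT_REQUIRED = "input-required"
    COMPLETED = "completed"
    CANCELED = "canceled"
    FAILED = "failed"


# Illustrative transition table (an assumption, not from the spec):
# terminal states have no outgoing edges.
TRANSITIONS = {
    TaskState.SUBMITTED: {TaskState.WORKING, TaskState.CANCELED, TaskState.FAILED},
    TaskState.WORKING: {TaskState.INPUT_REQUIRED, TaskState.COMPLETED,
                        TaskState.CANCELED, TaskState.FAILED},
    TaskState.INPUT_REQUIRED: {TaskState.WORKING, TaskState.CANCELED, TaskState.FAILED},
    TaskState.COMPLETED: set(),
    TaskState.CANCELED: set(),
    TaskState.FAILED: set(),
}


class Task:
    def __init__(self, task_id: str):
        self.id = task_id
        self.state = TaskState.SUBMITTED
        self.history = [self.state]
        self.artifacts: list[str] = []

    def move_to(self, new_state: TaskState) -> None:
        """Advance the task, rejecting transitions the table does not allow."""
        if new_state not in TRANSITIONS[self.state]:
            raise ValueError(f"illegal transition {self.state.value} -> {new_state.value}")
        self.state = new_state
        self.history.append(new_state)


# Example: the remote agent needs a clarification before it can finish.
task = Task("task-1")
task.move_to(TaskState.WORKING)
task.move_to(TaskState.INPUT_REQUIRED)  # e.g. "Which dates?"
task.move_to(TaskState.WORKING)         # the client answered
task.artifacts.append("itinerary.pdf")
task.move_to(TaskState.COMPLETED)
print([s.value for s in task.history])
```

Keeping terminal states free of outgoing edges means a finished or canceled task cannot be silently revived; a follow-up request would start a new task.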

In short, A2A is about agent-to-agent collaboration. It answers questions like “How does Agent A discover Agent B and ask it to do something on my behalf?” For example, Google’s own blog shows a workflow where an HR assistant agent finds candidate-sourcing and scheduling agents, asks clarifying questions, and merges the results into one solution for the user.

This makes possible complex, multi-agent solutions (like planning a multi-city trip or automating a finance workflow) by reusing specialized agents as building blocks.
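A toy version of that HR flow shows the shape of discovery plus delegation. Everything in this Python sketch is hypothetical: the in-memory registry stands in for whatever directory of agent cards a deployment uses, and each agent's `handle` function stands in for an A2A request over HTTP.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class AgentCard:
    name: str
    skills: list[str]
    # Stand-in for the remote endpoint: in a real deployment this would be an
    # HTTP call to the agent's URL, not a local function.
    handle: Callable[[str], str] = field(repr=False)


def discover(registry: list[AgentCard], skill: str) -> AgentCard:
    """Return the first advertised agent whose card lists the requested skill."""
    for card in registry:
        if skill in card.skills:
            return card
    raise LookupError(f"no agent advertises skill {skill!r}")


def plan_hiring(registry: list[AgentCard], role: str) -> dict[str, str]:
    """Hypothetical HR orchestrator: delegate to specialists, then merge results."""
    sourcing = discover(registry, "candidate-sourcing")
    scheduling = discover(registry, "interview-scheduling")
    candidates = sourcing.handle(f"Find candidates for {role}")
    schedule = scheduling.handle(f"Schedule interviews with: {candidates}")
    return {"candidates": candidates, "schedule": schedule}


registry = [
    AgentCard("Sourcer", ["candidate-sourcing"], lambda q: "Ada, Grace"),
    AgentCard("Scheduler", ["interview-scheduling"], lambda q: "Mon 10:00, Tue 14:00"),
]
print(plan_hiring(registry, "backend engineer"))
```

The orchestrator only knows skill names, not agent identities, which is what lets a specialist be swapped out without touching the calling agent.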

What is MCP (Model Context Protocol)?

In contrast, MCP (Model Context Protocol) is designed to connect an LLM-based application to data, APIs, and tools in a standardized way. Think of MCP as a “USB-C port” for AI: just as USB-C provides a common plug for many devices, MCP provides a common interface for LLMs to access external knowledge and functionality.

In MCP, there are MCP hosts or clients (like a chat app or IDE that has an AI assistant) and MCP servers (small services that expose particular data sources or APIs). A typical setup: an AI host (e.g. a chatbot using Claude or GPT) has one or more MCP client components that each maintain a 1:1 connection to an MCP server. Those servers each grant the model access to a specific resource. For example, an MCP server might wrap Google Drive, Slack history, a database, or a web API. When the AI model needs context (e.g. “show me my last five emails”), the host asks the relevant MCP client, which sends a JSON-RPC request to the server.

The server returns the data, which the AI can then use in its reasoning or response. Anthropic describes MCP as “an open standard that enables developers to build secure, two-way connections between their data sources and AI-powered tools”.
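That round trip is ordinary JSON-RPC 2.0. The Python sketch below mimics it in-process: the `tools/call` method and the `content` list of text blocks follow the shape the MCP spec uses for tool results, while the `list_emails` tool, the fake server, and the `McpClient` class are hypothetical stand-ins (a real host would use an MCP SDK over stdio or HTTP).

```python
import json


def fake_email_server(raw_request: str) -> str:
    """Hypothetical MCP server exposing one tool; JSON strings mimic the wire."""
    req = json.loads(raw_request)
    if req.get("method") != "tools/call":
        error = {"code": -32601, "message": "Method not found"}
    elif req["params"]["name"] != "list_emails":
        error = {"code": -32602, "message": "Unknown tool"}
    else:
        limit = req["params"]["arguments"].get("limit", 5)
        emails = [f"Email #{i}" for i in range(1, limit + 1)]
        result = {"content": [{"type": "text", "text": "\n".join(emails)}]}
        return json.dumps({"jsonrpc": "2.0", "id": req["id"], "result": result})
    return json.dumps({"jsonrpc": "2.0", "id": req["id"], "error": error})


class McpClient:
    """One client per server connection, matching MCP's 1:1 pairing."""

    def __init__(self, transport):
        self.transport = transport  # function: request JSON -> response JSON
        self.next_id = 1

    def call_tool(self, name: str, arguments: dict) -> str:
        request = {"jsonrpc": "2.0", "id": self.next_id, "method": "tools/call",
                   "params": {"name": name, "arguments": arguments}}
        self.next_id += 1
        response = json.loads(self.transport(json.dumps(request)))
        if "error" in response:
            raise RuntimeError(response["error"]["message"])
        # Concatenate the text content blocks the server returned.
        return "\n".join(c["text"] for c in response["result"]["content"]
                         if c["type"] == "text")


client = McpClient(fake_email_server)
print(client.call_tool("list_emails", {"limit": 5}))
```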

The MCP architecture is simple: applications that want data act as MCP clients, while each data source implements an MCP server. The protocol uses JSON-RPC 2.0 messages for all calls.

Key elements include:

MCP Clients/Hosts: These live inside the AI application (e.g. Claude Desktop, a chatbot, or an IDE extension). They initiate connections and send requests for data or actions via MCP.

MCP Servers: Lightweight servers (often open-source) that expose a particular capability or dataset. Each server declares what it offers: resources (data), prompts (templates), and tools (callable actions). There are already MCP servers for things like Google Drive, Slack, GitHub, and databases, and even for browser automation via Puppeteer.

Local vs Remote Sources: MCP servers can access local resources (your files, databases, local services) securely, as well as remote APIs over the Internet.

Capability Negotiation: On connecting, the client and server exchange metadata. The server tells the client what prompts, actions, or tools it can provide (like a function signature).

Session State: MCP maintains a session so the server can remember previous exchanges. It focuses on exchanging context and coordination between client and server.
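Here is a rough Python sketch of the capability handshake that opens every MCP session. The `initialize` method, the `protocolVersion` / `capabilities` / `clientInfo` fields, and the follow-up `notifications/initialized` notification come from the MCP spec. The version string is one published revision chosen for illustration, and the server reply is a hypothetical stand-in.

```python
import json

CLIENT_PROTOCOL_VERSION = "2025-03-26"  # one published MCP revision (assumption)


def build_initialize(client_name: str) -> dict:
    """Client -> server: the first request on a new MCP connection."""
    return {
        "jsonrpc": "2.0",
        "id": 0,
        "method": "initialize",
        "params": {
            "protocolVersion": CLIENT_PROTOCOL_VERSION,
            "capabilities": {},  # features the client itself offers, if any
            "clientInfo": {"name": client_name, "version": "0.1.0"},
        },
    }


def fake_server_initialize(request: dict) -> dict:
    """Hypothetical server reply advertising which feature groups it supports."""
    return {
        "jsonrpc": "2.0",
        "id": request["id"],
        "result": {
            "protocolVersion": request["params"]["protocolVersion"],
            "capabilities": {"tools": {}, "resources": {}},  # no prompts here
            "serverInfo": {"name": "drive-server", "version": "1.2.0"},
        },
    }


def negotiated_features(response: dict) -> set[str]:
    """The client should only use feature groups the server declared."""
    return set(response["result"]["capabilities"])


reply = fake_server_initialize(build_initialize("my-ide"))
features = negotiated_features(reply)
print(sorted(features))

# The client then confirms with a notification (no "id", so no response is
# expected) and can start calling tools/list, resources/read, and so on.
initialized = {"jsonrpc": "2.0", "method": "notifications/initialized"}
print(json.dumps(initialized))
```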

Key Differences

In practice, MCP lets any AI app plug into arbitrary data easily. Anthropic notes that MCP “provides a universal, open standard for connecting AI systems with data sources, replacing fragmented integrations with a single protocol”. A2A, by contrast, works one layer up, between whole agents. The table below compares the two side by side.

| Aspect | A2A (Agent-to-Agent) | MCP (Model Context Protocol) |
|---|---|---|
| Focus & Scope | Treats each system as a full agent (LLM + tools). Defines how agents discover one another, delegate tasks, and collaborate on multi-agent workflows. | Assumes one side is an LLM application (host) and the other a data/tool provider (server). Standardizes how a model communicates with external tools and data sources. |
| Architecture | Mesh client–server among agents. Agents publish “agent cards” (JSON profiles) and call each other’s APIs via JSON-RPC or REST. Emphasizes task lifecycle, streaming, and peer discovery. | Hub-and-spoke: the AI host (hub) connects to multiple MCP servers (spokes). No peer discovery; clients know which server to call based on context. Uses JSON-RPC 2.0 over HTTP (or stdio). |
| Data Flow | Data moves between agents as part of delegated tasks (e.g. Agent A asks Agent B to perform work and return a result). | Data flows between a model and a static resource (e.g. “get this document” or “query that database”). |
| Standards & Tech | JSON, HTTP/HTTPS, JSON-RPC, Server-Sent Events for streaming, agent cards, artifacts, built-in enterprise-grade auth (OAuth, OIDC, API keys). | JSON, HTTP/HTTPS or stdio, JSON-RPC 2.0. Early versions used simple API keys; newer implementations adopt OAuth 2.0/DCR for stronger security. |
| Intended Use | “Cross-vendor discovery” on the public internet: agents from different organizations working together (“Who can do X for me?”). | Reliable connection of your AI application to internal or SaaS data (“I need data X; connect me to the server that has it”). |
| Overlap & Complementarity | Handles high-level agent orchestration. In practice, A2A agents often rely on MCP servers for tool/data access and may register themselves as MCP “resources” for discovery. | Handles low-level model-to-tool wiring. Complements A2A by feeding agents the context and capabilities they need during multi-agent workflows. Can run inside A2A task payloads for seamless integration. |
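The layering in the last row can be sketched in a few lines of Python. This is a hypothetical design, not an official integration: the task dictionary is a simplified stand-in for an A2A task, and each entry in `mcp_tools` stands in for an MCP client connected to one server (here, a made-up `revenue_by_quarter` tool on a database server).

```python
from typing import Callable

# (tool name, arguments) -> text result; stands in for an MCP client session.
ToolCall = Callable[[str, dict], str]


class ReportingAgent:
    """Hypothetical agent: A2A-style tasks in, MCP-style tool calls underneath."""

    def __init__(self, mcp_tools: dict[str, ToolCall]):
        # One entry per connected MCP server, keyed by a local name.
        self.mcp_tools = mcp_tools

    def handle_task(self, task: dict) -> dict:
        """A2A side: accept a delegated task and return it completed with an artifact."""
        quarter = task["input"]["quarter"]
        # MCP side: fetch the data the task needs from the database server.
        revenue = self.mcp_tools["postgres"]("revenue_by_quarter", {"quarter": quarter})
        summary = f"Revenue report for {quarter}: {revenue}"
        return {
            "id": task["id"],
            "state": "completed",
            "artifacts": [{"name": "report", "text": summary}],
        }


def fake_postgres(tool: str, args: dict) -> str:
    """Stand-in for an MCP client connected to a database server."""
    if tool != "revenue_by_quarter":
        raise ValueError(f"unknown tool {tool!r}")
    return {"Q1": "$1.2M", "Q2": "$1.5M"}[args["quarter"]]


agent = ReportingAgent({"postgres": fake_postgres})
done = agent.handle_task({"id": "t-42", "input": {"quarter": "Q2"}})
print(done["artifacts"][0]["text"])  # Revenue report for Q2: $1.5M
```

Other agents only ever see the task interface; which MCP servers sit behind it stays an internal detail of the reporting agent.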


Strengths and Challenges

A2A Strengths: It enables modular agent networks. Agents from different vendors can interoperate if they speak A2A. It has enterprise-grade features out of the box (OAuth/OIDC auth, streaming, error semantics) for building real applications.

It supports asynchronous workflows and partial results, which is crucial for long tasks. By standardizing discovery and capabilities, A2A greatly simplifies multi-agent architectures – think of it as a common rail that diverse agents can tap into.
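Streaming is what makes partial results practical: A2A delivers updates as Server-Sent Events, so a client mostly needs to split the stream into events and stop at the final one. The Python sketch below implements just enough of the SSE format for JSON payloads. The event payloads are a simplified, hypothetical version of A2A's task status updates; the `final` flag mirrors one in the spec, but check the revision you target.

```python
import json
from typing import Iterable, Iterator


def parse_sse(lines: Iterable[str]) -> Iterator[dict]:
    """Minimal Server-Sent Events reader for JSON payloads.

    Collects `data:` lines and dispatches one event per blank line, as the SSE
    format specifies; `event:`, `id:` and comment lines are ignored here.
    """
    data: list[str] = []
    for line in lines:
        if line.startswith("data:"):
            value = line[5:]
            # SSE strips exactly one leading space after the colon.
            data.append(value[1:] if value.startswith(" ") else value)
        elif line == "":
            if data:
                yield json.loads("\n".join(data))
            data = []


# Hypothetical stream of task status updates, one JSON payload per event.
stream = [
    'data: {"result": {"status": {"state": "working"}, "final": false}}',
    "",
    'data: {"result": {"status": {"state": "working", "message": "Found 3 flights"}, "final": false}}',
    "",
    'data: {"result": {"status": {"state": "completed"}, "final": true}}',
    "",
]

for event in parse_sse(stream):
    status = event["result"]["status"]
    print(status["state"], status.get("message", ""))
    if event["result"]["final"]:
        break  # the last update for this task
```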

A2A Challenges: It’s new and still evolving. Every agent must implement the protocol correctly (card publishing, message handling, etc.), and network security between agents becomes important. Discovery at scale can be tricky (e.g. public versus private agent directories). There are also open questions about governance: who runs the index of agents, who certifies them, etc. And while A2A includes security mechanisms, real-world deployments will need careful design of “authorization boundaries”, deciding which agents can talk to which.
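One way to think about those authorization boundaries is as an explicit, deny-by-default policy. The Python sketch below is hypothetical: the agent names, skill IDs, and `POLICY` layout are all made up, and in practice the caller's identity would come from a verified credential such as an OAuth token rather than a plain string.

```python
# Hypothetical policy: which caller agents may delegate which skills to which
# target agents. Anything not listed is refused (deny by default).
POLICY: dict[str, dict[str, set[str]]] = {
    "hr-assistant": {
        "sourcing-agent": {"candidate-sourcing"},
        "calendar-agent": {"interview-scheduling"},
    },
    "finance-bot": {
        "ledger-agent": {"read-ledger"},
    },
}


def is_allowed(caller: str, target: str, skill: str) -> bool:
    """True only if the policy explicitly grants caller -> target for this skill."""
    return skill in POLICY.get(caller, {}).get(target, set())


print(is_allowed("hr-assistant", "calendar-agent", "interview-scheduling"))  # True
print(is_allowed("hr-assistant", "ledger-agent", "read-ledger"))  # False
```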

MCP Strengths: It fills a huge gap: AI apps typically need to talk to data, and MCP makes that pluggable. It reduces duplication by providing pre-built connectors. Once an ecosystem of servers grows, any model can switch data sources without rewriting prompts. It also natively handles conversational state and context sharing, so data requests can be grounded in ongoing interactions.

MCP Challenges: Early on, MCP had weak security defaults (simple API keys, wide OAuth scopes), raising concerns about prompt injection and over-privileged access.

Anthropic and others are addressing these (OAuth, fine-grained tokens, etc.), but it remains an area of scrutiny. MCP also isn’t built for agent discovery or orchestration: it won’t solve how you find an agent, just how you fetch data. Some vendors point out that MCP by itself doesn’t handle long-running multi-agent workflows or arbitration of tasks.
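Fine-grained tokens boil down to a least-privilege check before each tool call. Here is a hypothetical Python sketch of that idea: the tool names, scope strings, and `AccessToken` type are illustrative assumptions, not names taken from the MCP spec or any existing MCP server.

```python
from dataclasses import dataclass

# Hypothetical mapping from tool names to the OAuth-style scopes they need.
REQUIRED_SCOPES = {
    "drive.read_file": {"drive:read"},
    "drive.delete_file": {"drive:read", "drive:write"},
    "slack.post_message": {"slack:write"},
}


@dataclass(frozen=True)
class AccessToken:
    subject: str
    scopes: frozenset[str]


def authorize_tool_call(token: AccessToken, tool: str) -> None:
    """Raise PermissionError unless the token carries every scope the tool needs."""
    needed = REQUIRED_SCOPES.get(tool)
    if needed is None:
        raise PermissionError(f"unknown tool {tool!r}")  # deny by default
    missing = needed - token.scopes
    if missing:
        raise PermissionError(f"{tool} needs {sorted(missing)}")


read_only = AccessToken("alice", frozenset({"drive:read"}))
authorize_tool_call(read_only, "drive.read_file")  # passes silently
try:
    authorize_tool_call(read_only, "drive.delete_file")
except PermissionError as exc:
    print(exc)  # drive.delete_file needs ['drive:write']
```

Scoping each token to the narrowest set of tools also limits the damage a prompt-injected request can do, since the model cannot invoke what the token does not cover.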

Adoption and “Protocol Wars”

I’ve been watching the rise of A2A and MCP with great interest—it feels a bit like being back in the early days of the web, when everyone was debating HTTP vs. FTP. On the A2A side, Google scored a big win out of the gate: over 50 tech partners (think MongoDB, Atlassian, SAP, PayPal, Cohere) have already published sample multi-agent demos with LangGraph and Intuitive AI. It’s powerful to imagine an enterprise where every AI microservice—HR, finance, analytics—just “speaks A2A” out of the box for task automation.

Meanwhile, MCP isn’t far behind. I genuinely believe MCP has a bright future: its promise of plugging any LLM into any data source (Slack, Google Drive, Postgres) through one standard JSON-RPC interface is irresistible. Microsoft’s Copilot Studio support is a strong signal that the industry wants those standardized connectors.

But these aren’t the only players. IBM and the BeeAI project are championing ACP (Agent Communication Protocol), focusing on RESTful agent messaging and fine-grained permissions. With A2A, MCP, ACP, and likely more on the horizon, it’s natural to wonder if we’re headed for a “protocol war.” Will one standard win, or will we settle into a hybrid world? Personally, I’m betting on complementarity: each protocol shines in its own layer, and smart teams will weave them together rather than pick just one.

The Future: Convergence or Coexistence?

Looking forward, I see two equally exciting paths:

Convergence

Imagine A2A agents exposing their skills as MCP servers, and MCP gaining richer task semantics borrowed from A2A (or ACP). We’d end up with a unified “agent + tool” fabric where discovery, delegation, and data access all happen through a single, battle-tested API surface.

Coexistence & Specialization

More likely in the near term: MCP becomes the go-to for model-to-tool wiring, while A2A (and ACP) dominate agent orchestration. Your next AI product—whether it’s a team-building assistant or a finance-reporting bot—will probably implement MCP internally to tap into documents and databases, and A2A externally to collaborate with other agents.

For anyone building AI today, here’s my takeaway: learn both. Mastering MCP means your model can instantly plug into a rich ecosystem of data and tools. Embracing A2A ensures your agents can discover, delegate, and scale across organizational boundaries. Together, they’ll be as fundamental to tomorrow’s AI stacks as REST and gRPC are to today’s.

If you love exploring the latest in tech and open source as much as I do, come say hi to me, Nomadev, on Twitter. Let’s exchange ideas, share insights, and keep the hustle spirit alive!
