What Is an AI Coding Agent?


AI has quickly become one of the most discussed subjects globally – and it now seems to be able to do just about everything for us. Students are asking it to help them with their homework, and lawyers are even using it for case research. AI agents have grown rapidly in popularity due to the widespread usage of large language models (LLMs), like the ones behind OpenAI’s ChatGPT. As a result, developers have also felt pressured to start using AI coding agents.

In light of all this, it has become imperative for us to understand how AI coding agents work – and how we can come up with workable prompts to get the most out of AI in our software development or data science projects. At JetBrains, we have our own coding agent for JetBrains IDEs – we call it Junie. We invest a lot of effort in Junie’s performance, and in this post, we’ll explain the reasoning and logic behind coding agents to make them clearer for all of you.

LLMs and AI coding agents – what’s the connection?

Without LLMs, AI agents as we know them would be totally different. As a rough analogy, LLMs are to AI agents what engines are to cars. Without engines, there would be no cars. However, not all machines with engines are cars.

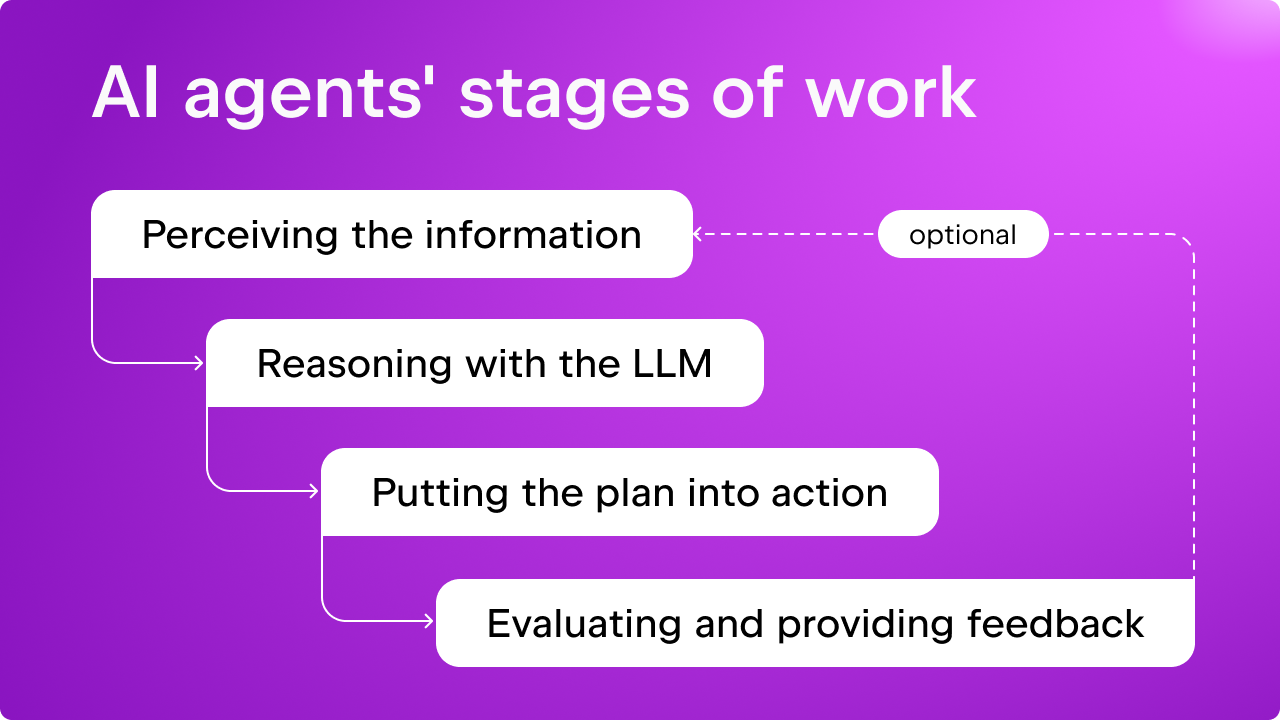

The work of an AI agent comprises several different stages:

- Perceiving the relevant information

At this stage, the agent processes data in your project, including your code and any supporting files, together with your prompt, and sends that data to the LLM that it is using for processing.

- Reasoning with the LLM

Communication with the LLM is usually carried out according to a specific protocol. This protocol makes processing easier by specifying a format that the agent has to adhere to when sending information and prompts to the LLM.

- Putting the plan into action

After the LLM has processed the information, it will provide some suggested actions or generate some code. In the next step, the agent will take these instructions for various actions and perform them.

- Evaluation and feedback

In the last stage, the agent can run various tests and checks to evaluate the correctness of the result and make adjustments if needed.
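The perceive and reason stages can be sketched in a few lines of Python. This is a minimal illustration, assuming the agent simply concatenates the project’s source files into the prompt and uses the OpenAI-style chat-completions message format (a list of `role`/`content` entries) as its protocol. The helpers `collect_project_context` and `build_messages` are hypothetical names; real agents select context far more carefully.

```python
from pathlib import Path


def collect_project_context(root: str, suffix: str = ".py", max_chars: int = 4000) -> str:
    """Perceive (hypothetical helper): gather matching files under `root` into one text blob."""
    parts = []
    for path in sorted(Path(root).rglob(f"*{suffix}")):
        text = path.read_text(encoding="utf-8")
        parts.append(f"### File: {path.relative_to(root)}\n{text}")
    # Truncate crudely so the prompt fits in the model's context window.
    return "\n\n".join(parts)[:max_chars]


def build_messages(system_prompt: str, project_context: str, user_prompt: str) -> list[dict]:
    """Reason (hypothetical helper): package everything in the role/content chat format."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": f"Project files:\n{project_context}\n\nTask: {user_prompt}"},
    ]
```

The resulting list is what the agent would send to the LLM; the format itself is what makes the exchange predictable for both sides.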

AI coding agents aren’t the only type of AI agent that is driven by LLMs. So what makes them special? How are they tailored specifically to the needs of coders? Let’s find out!

AI coding agents are designed to perform coding tasks with less user supervision. They can formulate an action plan that could achieve the goal given by the user and then execute it. An AI coding agent like Junie can also evaluate code and run tests to catch any errors that might crop up. Here is a simplified workflow of an AI coding agent:

- Set the scene for the LLM

Before the agent can act, the LLM needs some basic information to produce useful output. For example, we need to tell the model which tools are available and what format we want the action plan to be in. Tools are functions that we have created and that can be executed to perform a task, such as creating a file or listing a directory; the action plan is made up of calls to these tools.

- Generate an action plan

Next, when the user’s prompt describing the task is received, the agent asks the LLM to generate an action plan in the desired format. Optionally, we can also ask the LLM to spell out its “thought process”, which is the reasoning behind the action plan. Once the action plan is received, it is parsed into a form that the agent can follow and execute. In our example, we ask for the action plan in JSON format and use Python’s `json` library to translate it into a Python dictionary.

- Execute the action plan

With the parsed action plan in hand, the agent executes the predefined tool functions step by step. The result of each step is recorded and used in the evaluation stage.

- Evaluate the result

Finally, we ask the LLM to evaluate the result. In case of failure, the LLM can update the action plan (or generate a follow-up plan) to fix the error, and the agent then executes the new plan and evaluates the result again. Keep in mind that this loop needs a maximum number of attempts or a timeout; otherwise, the agent could get stuck in an infinite loop.
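The four workflow steps above can be sketched in plain Python. This is a minimal illustration under several assumptions, not Junie’s implementation: the tool functions (`create_file`, `list_directory`), the JSON plan schema with `thought` and `steps` keys, and the `ask_llm` callback (any function that sends the messages to an LLM and returns its reply text, such as a thin wrapper around an OpenAI-compatible client) are all hypothetical. The evaluation step is also simplified: instead of asking the LLM to judge the result, the sketch treats any failed tool call as a failed attempt and feeds the errors back to the LLM.

```python
import json
import os
from typing import Callable


# Hypothetical tools the agent may call; each returns a short status string.
def create_file(path: str, content: str) -> str:
    with open(path, "w", encoding="utf-8") as f:
        f.write(content)
    return f"created {path}"


def list_directory(path: str = ".") -> str:
    return "\n".join(sorted(os.listdir(path)))


TOOLS: dict[str, Callable[..., str]] = {
    "create_file": create_file,
    "list_directory": list_directory,
}

# Set the scene: describe the tools and the JSON format the plan must use.
SYSTEM_PROMPT = (
    "You are an AI coding agent. Available tools: "
    "create_file(path, content), list_directory(path). "
    'Reply only with JSON: {"thought": "...", "steps": [{"tool": "...", "args": {}}]}'
)


def parse_plan(reply: str) -> dict:
    """Parse the LLM's JSON action plan into a Python dictionary."""
    plan = json.loads(reply)  # raises json.JSONDecodeError, a subclass of ValueError
    if not isinstance(plan, dict) or not isinstance(plan.get("steps", []), list):
        raise ValueError("action plan must be a JSON object with a 'steps' list")
    return plan


def execute_plan(plan: dict) -> tuple[list[str], bool]:
    """Run each step with the matching tool; return the outputs and a success flag."""
    outputs, ok = [], True
    for step in plan.get("steps", []):
        try:
            outputs.append(TOOLS[step["tool"]](**step.get("args", {})))
        except Exception as exc:  # record the failure instead of crashing the agent
            outputs.append(f"error: {exc!r}")
            ok = False
    return outputs, ok


def run_agent(task: str, ask_llm: Callable[[list[dict]], str],
              max_attempts: int = 3) -> tuple[list[str], bool]:
    messages = [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": task},
    ]
    outputs: list[str] = []
    for _ in range(max_attempts):  # a hard cap prevents an infinite retry loop
        reply = ask_llm(messages)
        try:
            outputs, ok = execute_plan(parse_plan(reply))
        except ValueError as exc:
            outputs, ok = [f"error: invalid plan ({exc})"], False
        if ok:
            return outputs, True
        # Evaluate (simplified): send the failures back so the LLM can write a follow-up plan.
        messages.append({"role": "assistant", "content": reply})
        messages.append({"role": "user", "content": "The plan failed:\n" + "\n".join(outputs)})
    return outputs, False
```

Passing the LLM in as a callback keeps the loop easy to test: a fake function that returns canned replies is enough to check both the retry path and the attempt limit.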

Converting your conversation with an LLM into coding actions

As an example of how an AI coding agent works, let’s build a simplified version of an AI coding agent that works with Python code.

1. Setting up your LLM

Earlier we reasoned that an AI coding agent without an LLM would be like a car without an engine. For this reason, it’s crucial that we start by securing access to an LLM. While you can choose to use proprietary services from companies like OpenAI and Anthropic, for demonstration purposes, we’ll use an open-source model.

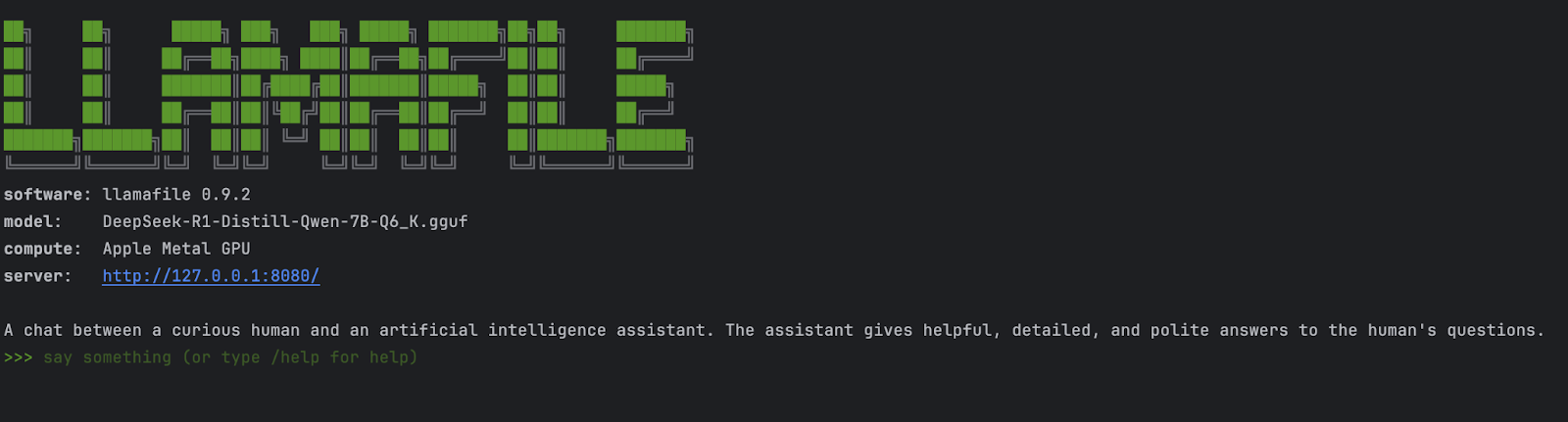

In this example, we’ll use a llamafile of the distilled DeepSeek-R1 model, DeepSeek-R1-Distill-Qwen-7B, hosted on Hugging Face. Follow the instructions on the model’s Hugging Face page to download the llamafile.

Why llamafile? What is it? llamafile is a single-file executable that runs locally on most computers, with no installation required.

If you’re using macOS, Linux, or BSD, make sure you give the new file permission to execute:

```bash
chmod +x DeepSeek-R1-Distill-Qwen-7B-Q6_K.llamafile
```

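Now we can launch the model as a local server by running the llamafile. Pass the flag `-ngl 999` to offload the model’s layers to your available GPUs (the value just needs to be at least the model’s layer count, so 999 offloads all of them).

```bash
./DeepSeek-R1-Distill-Qwen-7B-Q6_K.llamafile -ngl 999
```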

Voilà! The model is now available on your local server.

2. Testing the LLM server with Python code

The llamafile server also exposes an OpenAI-compatible API, so we can send it a prompt and get a result using the `openai` Python package. For example:

```python
#!/usr/bin/env python3
from openai import OpenAI

client = OpenAI(
    base_url="http://127.0.0.1:8080/v1",  # "http://<host>:<port>/v1" of your llamafile server
    api_key="sk-no-key-required",  # the local server does not need a real key
)

completion = client.chat.completions.create(
    model="DeepSeek-R1-Distill-Qwen-7B-Q6_K",
    messages=[
        # Instructions for the model belong in the "system" role.
        {"role": "system", "content": "You are an AI coding agent. Your top priority is to perform the coding tasks requested by the user."},
        {"role": "user", "content": "create a Python function that returns the square of a number."},
    ],
)

content = completion.choices[0].message.content
print(content)
```
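To see what “OpenAI-compatible” means in practice, here is a rough standard-library sketch of the same kind of call without the `openai` package. It assumes the llamafile server is listening at `http://127.0.0.1:8080/v1` and follows the OpenAI chat-completions request and response shape: a JSON `POST` to `/chat/completions`, with the reply text in `choices[0].message.content`. The helper names `build_chat_request` and `chat` are hypothetical.

```python
import json
import urllib.request


def build_chat_request(prompt: str, base_url: str = "http://127.0.0.1:8080/v1") -> urllib.request.Request:
    """Build the HTTP request an OpenAI-style client would send (hypothetical helper)."""
    payload = {
        "model": "DeepSeek-R1-Distill-Qwen-7B-Q6_K",
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": "Bearer sk-no-key-required",  # placeholder key, as above
        },
        method="POST",
    )


def chat(prompt: str) -> str:
    """Send the request and pull the reply text out of the JSON response."""
    with urllib.request.urlopen(build_chat_request(prompt)) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Calling `chat("create a Python function that returns the square of a number.")` while the server is running should return the same kind of text as the `openai` client does.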

When you run this script, here’s what you might get back from the LLM:

Okay, so the user asked me to create a Python function that returns the square of a number. Hmm…, I need to figure out how to approach this. Let me start by recalling what a function in Python looks like. I remember that functions are defined using the ‘def’ keyword, followed by the function name and parentheses for parameters.

Alright, the function needs to take a number as input. Let’s name it ‘num’ for clarity. So the function definition would be something like ‘def square(num):’. Now, inside the function, I need to calculate the square of this number. Squaring a number means multiplying it by itself, so that would be ‘num * num’.

Wait, is there another way to square a number in Python? Oh right, there’s the exponentiation operator ‘**’, so I could also write ‘num ** 2’. Either way works, but using multiplication is straightforward and easy to understand for someone new.

Next, I need to make sure the function returns this squared value. So after calculating, I’ll use the ‘return’ statement. Putting it all together, the function should look like:

    def square(num):
        return num * num

I should test this function to ensure it works correctly. Let me try a few examples. If I pass 3, it should return 9. 3 squared is indeed 9, so that’s good. What about a negative number, like -4? Squaring it should give 16, which the function should handle correctly since multiplying two negatives gives a positive.

Also, zero is a good test case. 0 squared is 0, so the function should return 0 in that case. I think these test cases cover the basic scenarios and confirm that the function is working as intended.

Are there any edge cases I should consider? Maybe very large numbers, but since Python handles big integers well, that shouldn’t be a problem. What about non-integer inputs? The function will still work because multiplying floats is valid, but the user might expect an integer if they pass one. However, the function doesn’t enforce input type, so it’s up to the user to handle that as needed.

Overall, the function seems simple enough and should meet the user’s requirements. I think this is a solid solution.

To create a Python function that returns the square of a number, you can follow these steps:

1. **Define the function**: Use the `def` keyword to create a function named `square` that takes one parameter, `num`.

2. **Calculate the square**: Inside the function, multiply `num` by itself (`num * num`) to get the square.

3. **Return the result**: Use the `return` statement to return the calculated square.

Here’s the code:

```python
def square(num):
    return num * num
```

**Example usage**:

```python
print(square(3)) # Output: 9
print(square(-4)) # Output: 16
print(square(0)) # Output: 0
```

This function takes a number as input and returns its square..<|end▁of▁sentence|>

Wow, that’s a lot. And the “thought process” of the LLM is also there: the reasoning that comes before the final answer, which DeepSeek-R1 models wrap in `<think>` and `</think>` tags.
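If an agent only needs the final answer, a small post-processing step can separate it from the reasoning. The sketch below assumes the reasoning is terminated by a `</think>` tag, as DeepSeek-R1 models emit (depending on the chat template, the opening `<think>` may be missing, so it splits on the closing tag), and it also drops the `<|end▁of▁sentence|>` token visible at the end of the output above. `split_reasoning` is a hypothetical helper name.

```python
END_OF_SENTENCE = "<|end▁of▁sentence|>"


def split_reasoning(raw: str) -> tuple[str, str]:
    """Split a DeepSeek-R1-style reply into (thought process, final answer)."""
    text = raw.replace(END_OF_SENTENCE, "")
    if "</think>" in text:
        # Everything before the closing tag is reasoning; the rest is the answer.
        thought, answer = text.split("</think>", 1)
        thought = thought.replace("<think>", "")
    else:
        thought, answer = "", text
    return thought.strip(), answer.strip()
```

Keeping the reasoning instead of discarding it can still be useful, for example to log why the agent chose a particular plan.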

If your curiosity has been piqued and you want to try out a coding agent, I recommend installing Junie. It is compatible with IntelliJ IDEA, PyCharm, WebStorm, GoLand, PhpStorm, RubyMine and RustRover. To learn more about Junie, head over to its official webpage: https://www.jetbrains.com/junie/.
