Scaling/microservices approach to reading files from the same directory


My company receives files via SFTP. We currently have a service running on a timer that:

- polls the inbound directory

- moves files to an 'In Progress' directory

- processes files (queueing messages for other microservices to handle)

- moves them to a 'Done' directory.
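Here is a minimal Python sketch of one timer tick of the loop described above, to pin down the steps. The directory layout (`inbound`, `in_progress`, `done` under one root) and the `process` callback are hypothetical stand-ins; the real service presumably queues messages for other microservices inside that callback. It assumes all three directories sit on the same filesystem, because `Path.rename` cannot move files across filesystems. Error handling for a failed `process` call is deliberately omitted.

```python
import tempfile
from pathlib import Path
from typing import Callable, List


def poll_once(root: Path, process: Callable[[Path], None]) -> List[str]:
    """One timer tick: move each inbound file to in_progress, process it, then move it to done.

    The layout root/inbound, root/in_progress, root/done is a hypothetical stand-in
    for the real directories; all three must be on the same filesystem.
    """
    inbound, in_progress, done = root / "inbound", root / "in_progress", root / "done"
    for d in (inbound, in_progress, done):
        d.mkdir(parents=True, exist_ok=True)

    handled: List[str] = []
    # Step 1: poll (list) the inbound directory.
    for entry in sorted(inbound.iterdir()):
        if not entry.is_file():
            continue
        claimed = in_progress / entry.name
        try:
            # Step 2: move the file out of the inbound directory before touching it.
            entry.rename(claimed)
        except FileNotFoundError:
            # The file disappeared between listing and renaming; skip it.
            continue
        # Step 3: hypothetical callback; the real service queues messages here.
        process(claimed)
        # Step 4: archive the file once processing has finished.
        claimed.rename(done / entry.name)
        handled.append(entry.name)
    return handled


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        (root / "inbound").mkdir()
        (root / "inbound" / "a.csv").write_text("payload")
        print(poll_once(root, lambda path: None))  # ['a.csv']
```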

We run multiple copies of this service. Our solution so far has been to give each instance a mutually exclusive set of conditions (think alphabetical ranges or file types) so that no instance steps on another's toes. These conditions are set manually in the configuration, which is inefficient when we receive a burst of files that all match a single instance's condition: that instance does all the work while the others sit idle, even though every instance is capable of processing every file.
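To make the imbalance concrete, here is a rough Python sketch of a static, mutually exclusive split like the one described. The assumption (hypothetical, for illustration only) is that each instance is configured with a range of leading filename letters; the instance names and ranges are invented. A burst of files sharing one prefix lands entirely on a single instance.

```python
from collections import Counter
from typing import Dict, List, Tuple

# Hypothetical per-instance configuration: each instance owns a disjoint,
# manually chosen range of leading letters. Digits and symbols are unassigned.
INSTANCE_RANGES: Dict[str, Tuple[str, str]] = {
    "instance-1": ("a", "h"),
    "instance-2": ("i", "p"),
    "instance-3": ("q", "z"),
}


def owner(filename: str) -> str:
    """Return the single instance whose configured range covers this file."""
    first = filename[:1].lower()
    for name, (low, high) in INSTANCE_RANGES.items():
        if low <= first <= high:
            return name
    raise ValueError(f"no instance configured for {filename!r}")


def workload(filenames: List[str]) -> Counter:
    """Count how many files each instance would pick up under the static split."""
    return Counter(owner(f) for f in filenames)


if __name__ == "__main__":
    # A burst of files that all start with "i" goes entirely to instance-2.
    burst = [f"invoice_{i:04}.csv" for i in range(1000)]
    print(workload(burst))  # Counter({'instance-2': 1000})
```

Rebalancing here means editing `INSTANCE_RANGES` by hand, which is the "redrawing disjoint conditions" problem the question asks about.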

My question is this: is there an industry-standard way to scale out a file-polling service, ideally one that doesn't involve redrawing the disjoint conditions every time? When I try to work out what a solution would look like, I keep getting stuck between two positions:

"use a manager service since each service instance shouldn't need to care about what the other service instances are doing"

vs.

"avoid unnecessary complexity, and a manager service would require additional overhead, maintenance, and developer time"

I've also looked into C#'s FileSystemWatcher and other ways to turn new files into events that could feed into our existing event architecture, but FileSystemWatcher seems to be known as unreliable under large volumes of files unless polling is kept as a backup. I also can't find anything in our SFTP client that would let us hook into upload events, and I'm not sure that would be an effective solution anyway, given the risk of reading a file before it has finished uploading.
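For illustration of the premature-read concern, here is a Python sketch of one commonly used polling heuristic. It is not something the SFTP client or FileSystemWatcher provides: a file counts as ready only once its size is unchanged across two consecutive polls. The function name and the caller-held `last_sizes` dictionary are hypothetical.

```python
import tempfile
from pathlib import Path
from typing import Dict, List


def stable_files(inbound: Path, last_sizes: Dict[str, int]) -> List[Path]:
    """Return files whose size is unchanged since the previous poll.

    `last_sizes` carries sizes between polls and is updated in place.
    A file seen for the first time is never returned on that poll.
    """
    ready: List[Path] = []
    current: Dict[str, int] = {}
    for entry in sorted(inbound.iterdir()):
        if not entry.is_file():
            continue
        try:
            size = entry.stat().st_size
        except FileNotFoundError:
            # Removed between listing and stat; ignore it this round.
            continue
        current[entry.name] = size
        if last_sizes.get(entry.name) == size:
            ready.append(entry)
    # Forget files that are gone and remember the sizes seen this poll.
    last_sizes.clear()
    last_sizes.update(current)
    return ready


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        inbound = Path(tmp)
        sizes: Dict[str, int] = {}
        upload = inbound / "a.csv"
        upload.write_text("abc")  # upload in progress
        print(stable_files(inbound, sizes))  # [] (first sighting)
        upload.write_text("abcdef")  # still growing
        print(stable_files(inbound, sizes))  # [] (size changed)
        print([p.name for p in stable_files(inbound, sizes)])  # ['a.csv']
```

The heuristic can still be fooled by an upload that stalls for longer than one polling interval, so it reduces premature reads rather than ruling them out.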

![[The AI Show Episode 154]: AI Answers: The Future of AI Agents at Work, Building an AI Roadmap, Choosing the Right Tools, & Responsible AI Use](https://www.marketingaiinstitute.com/hubfs/ep%20154%20cover.png)

![[The AI Show Episode 153]: OpenAI Releases o3-Pro, Disney Sues Midjourney, Altman: “Gentle Singularity” Is Here, AI and Jobs & News Sites Getting Crushed by AI Search](https://www.marketingaiinstitute.com/hubfs/ep%20153%20cover.png)

![[DEALS] Internxt Cloud Storage Lifetime Subscription (20TB) (89% off) & Other Deals Up To 98% Off – Offers End Soon!](https://www.javacodegeeks.com/wp-content/uploads/2012/12/jcg-logo.jpg)

![GrandChase tier list of the best characters available [June 2025]](https://media.pocketgamer.com/artwork/na-33057-1637756796/grandchase-ios-android-3rd-anniversary.jpg?#)

.png?width=1920&height=1920&fit=bounds&quality=70&format=jpg&auto=webp#)

_Paul_Markillie_Alamy.jpg?width=1280&auto=webp&quality=80&disable=upscale#)

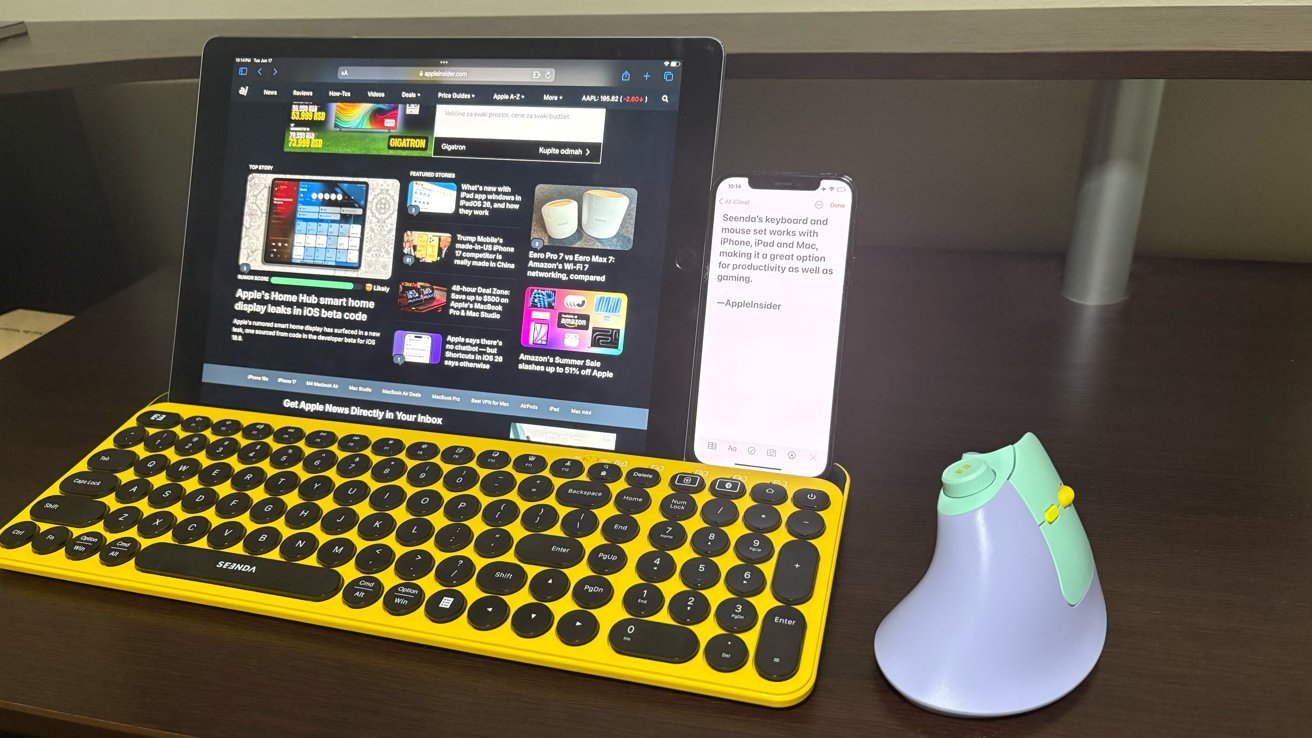

![It wasn't just you, Apple Intelligence was down [u]](https://photos5.appleinsider.com/gallery/64086-133417-IMG_2283-xl.jpg)

![Samsung has its own earthquake alert system with options Google should consider [Gallery]](https://i0.wp.com/9to5google.com/wp-content/uploads/sites/4/2025/02/Galaxy-S25-Ultra-Titanium-Jadegreen-1.jpg?resize=1200%2C628&quality=82&strip=all&ssl=1)

![iPhone 18 Pro Models to Feature Under-Display Face ID, Keep Same Display Sizes [Rumor]](https://www.iclarified.com/images/news/97657/97657/97657-640.jpg)

![Apple M4 Mac Mini Drops to Just $469 — Save $130 [Lowest Price Ever]](https://www.iclarified.com/images/news/97659/97659/97659-640.jpg)

![Mobile Legends: Bang Bang [MLBB] Free Redeem Codes June 2025](https://www.talkandroid.com/wp-content/uploads/2024/07/Screenshot_20240704-093036_Mobile-Legends-Bang-Bang.jpg)