Zero-Click Microsoft Copilot Vuln Underscores Emerging AI Security Risks


A critical security vulnerability in Microsoft Copilot that could have allowed attackers to easily access private data serves as a potent demonstration of the real security risks of generative AI. The good news is that while CEOs are gung-ho over AI, security professionals are pressing to make bigger investments in security and privacy, studies show.

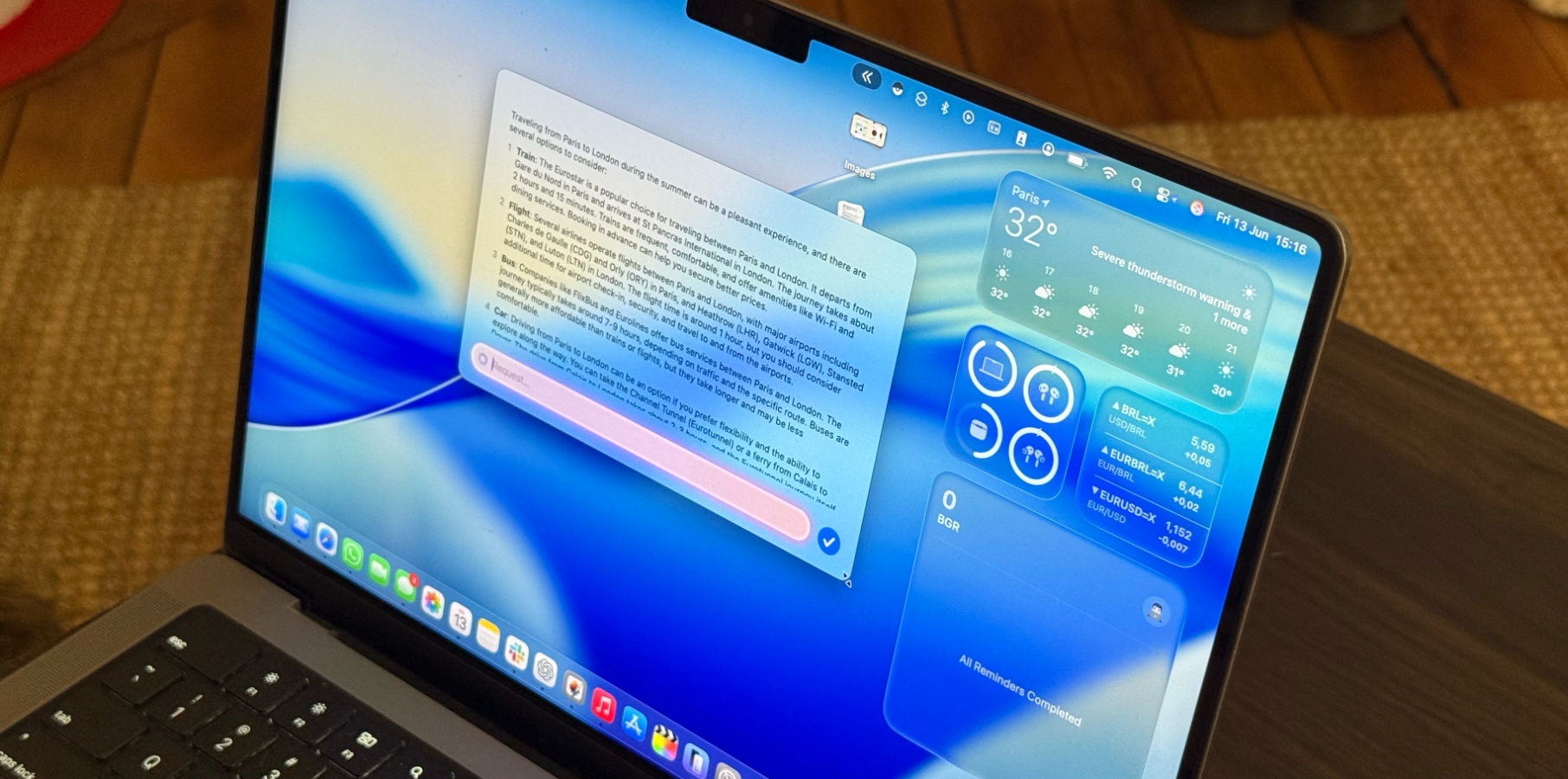

The Microsoft Copilot vulnerability, dubbed EchoLeak, was listed as CVE-2025-32711 in NIST’s National Vulnerability Database, which gave the flaw a severity score of 9.3. According to Aim Labs, which discovered EchoLeak and shared its research with the world last week, the “zero-click” flaw could “allow attackers to automatically exfiltrate sensitive and proprietary information from M365 Copilot context, without the user’s awareness, or relying on any specific victim behavior.” Microsoft patched the flaw the following day.
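For context on that number, a 9.3 lands in the top band of the CVSS qualitative severity scale, which is why the flaw is described as critical. The snippet below is a minimal sketch of that mapping, assuming the score is expressed on the CVSS v3.x scale; the function name `cvss_v3_severity` is illustrative and does not come from NIST or Microsoft.

```python
def cvss_v3_severity(score: float) -> str:
    """Map a CVSS v3.x base score to its qualitative severity rating.

    Bands follow the CVSS v3.x qualitative severity rating scale:
    None (0.0), Low (0.1-3.9), Medium (4.0-6.9), High (7.0-8.9),
    Critical (9.0-10.0).
    """
    if not 0.0 <= score <= 10.0:
        raise ValueError("CVSS base scores range from 0.0 to 10.0")
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"
    if score < 7.0:
        return "Medium"
    if score < 9.0:
        return "High"
    return "Critical"


# The score reported for CVE-2025-32711 (EchoLeak) in the article.
print(cvss_v3_severity(9.3))
```

The rating describes the flaw's technical characteristics only; it says nothing about whether it was exploited in the wild.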

EchoLeak serves as a wake-up call to the industry that new AI methods also bring with them new attack surfaces and therefore new security vulnerabilities. While nobody appears to have been harmed by EchoLeak, per Microsoft, the attack is based on “general design flaws that exist in other RAG applications and AI agents,” Aim Labs states.

Those concerns are reflected in a slew of studies released over the past week. For instance, a survey of more than 2,300 senior GenAI decision makers released today by NTT DATA found that “while CEOs and business leaders are committed to GenAI adoption, CISOs and operational leaders lack the necessary guidance, clarity and resources to fully address security risks and infrastructure challenges associated with deployment.”

NTT DATA found that 99% of C-suite executives “are planning further GenAI investments over the next two years, with 67% of CEOs planning significant commitments.” Some of those funds will go to cybersecurity, which was cited as a top investment priority by 95% of CIOs and CTOs, the study said.

“Yet, even with this optimism, there is a notable disconnect between strategic ambitions and operational execution with nearly half of CISOs (45%) expressing negative sentiments toward GenAI adoption,” NTT DATA said. “More than half (54%) of CISOs say internal guidelines or policies on GenAI responsibility are unclear, yet only 20% of CEOs share the same concern–revealing a stark gap in executive alignment.”

The study found other disconnects between the GenAI hopes and dreams of the higher-ups and the hard realities of those closer to the ground. Nearly two-thirds of CISOs say their teams “lack the necessary skills to work with the technology.” What’s more, only 38% of CISOs say their GenAI and cybersecurity strategies are aligned, compared to 51% of CEOs, NTT DATA found.

“As organizations accelerate GenAI adoption, cybersecurity must be embedded from the outset to reinforce resilience. While CEOs champion innovation, ensuring seamless collaboration between cybersecurity and business strategy is critical to mitigating emerging risks,” stated Sheetal Mehta, senior vice president and global head of cybersecurity at NTT DATA. “A secure and scalable approach to GenAI requires proactive alignment, modern infrastructure, and trusted co-innovation to protect enterprises from emerging threats while unlocking AI’s full potential.”

Another study released today, this one from Nutanix, found that leaders at public sector organizations want more investment in security as they adopt AI.

The company’s latest Public Sector Enterprise Cloud Index (ECI) study found that 94% of public sector organizations are already adopting AI, such as for content generation or chatbots. As they modernize their IT systems for AI, leaders want their organizations to increase investments in security and privacy too.

The ECI indicates that “a significant amount of work needs to be done to improve the foundational levels of data security/governance required to support GenAI solution implementation and success,” Nutanix said. The good news is that 96% of survey respondents agreed that security and privacy are becoming higher priorities with GenAI.

“Generative AI is no longer a future concept, it’s already transforming how we work,” said Greg O’Connell, vice president of public sector federal sales at Nutanix. “As public sector leaders look to see outcomes, now is the time to invest in AI-ready infrastructure, data security, privacy, and training to ensure long-term success.”

Meanwhile, Cybernews, an Eastern European security news site with its own team of white hat researchers, analyzed the public-facing websites of companies across the Fortune 500 and discovered that all of them are using AI in one form or another.

The Cybernews research project, which utilized Google’s Gemini 2.5 Pro Deep Research model for text analysis, made some interesting findings. For instance, it found that 33% of the Fortune 500 say they’re using AI and big data in a broad manner for analysis, pattern recognition, and optimization, while about 22% are using AI for specific business functions like inventory optimization, predictive maintenance, and customer service.

The research project found 14% have developed proprietary LLMs, such as Walmart’s Wallaby or Saudi Aramco’s Metabrain, while about 5% are using LLM services from third-party providers like OpenAI, DeepSeek AI, Anthropic, Google, and others.

While AI use is now ubiquitous, corporations are not doing enough to mitigate the risks of AI, Cybernews said.

“While big companies are quick to jump to the AI bandwagon, the risk management part is lagging behind,” Aras Nazarovas, a senior security researcher at Cybernews, said in the company’s June 12 report. “Companies are left exposed to the new risks associated with AI.”

These risks range from data security and data leakage, which Cybernews said is the most commonly mentioned security concern, to other concerns like prompt injection and model poisoning. New vulnerabilities created in energy control systems, algorithmic bias, IP theft, insecure output, and an overall lack of transparency round out the list.

“As companies start to grapple with new challenges and risks, it’s likely to have significant implications for consumers, industries, and the broader economy in the coming years,” Nazarovas said.

This article first appeared on BigDATAwire.
