How to Build an Advanced BrightData Web Scraper with Google Gemini for AI-Powered Data Extraction

Originally published on MarkTechPost.

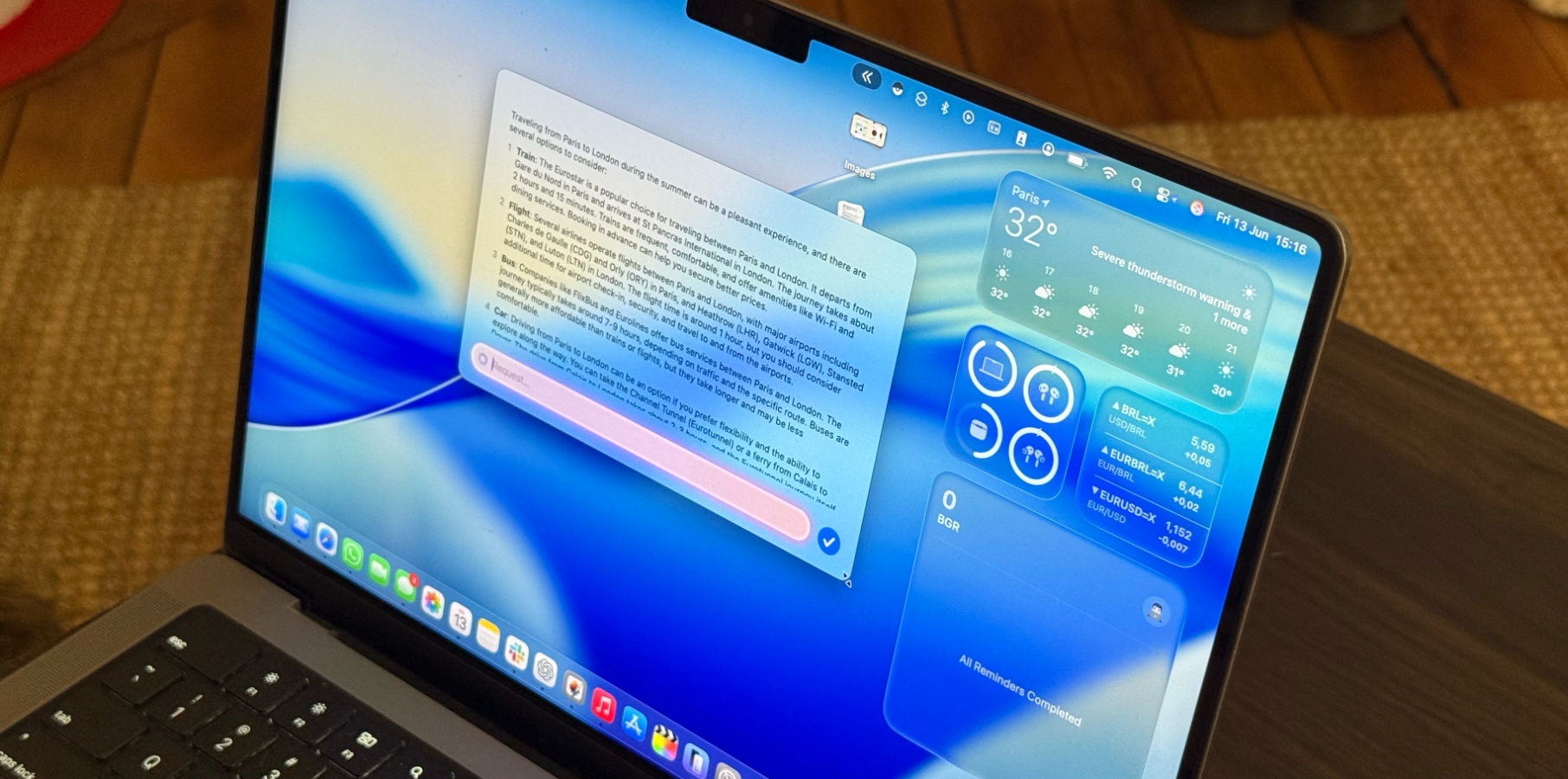

In this tutorial, we walk you through building an enhanced web scraping tool that leverages BrightData’s powerful proxy network alongside Google’s Gemini API for intelligent data extraction. You’ll see how to structure your Python project, install and import the necessary libraries, and encapsulate scraping logic within a clean, reusable BrightDataScraper class. Whether you’re targeting Amazon product pages, bestseller listings, or LinkedIn profiles, the scraper’s modular methods demonstrate how to configure scraping parameters, handle errors gracefully, and return structured JSON results. An optional ReAct-style AI agent integration also shows you how to combine LLM-driven reasoning with real-time scraping, so you can pose natural-language queries for on-the-fly data analysis.

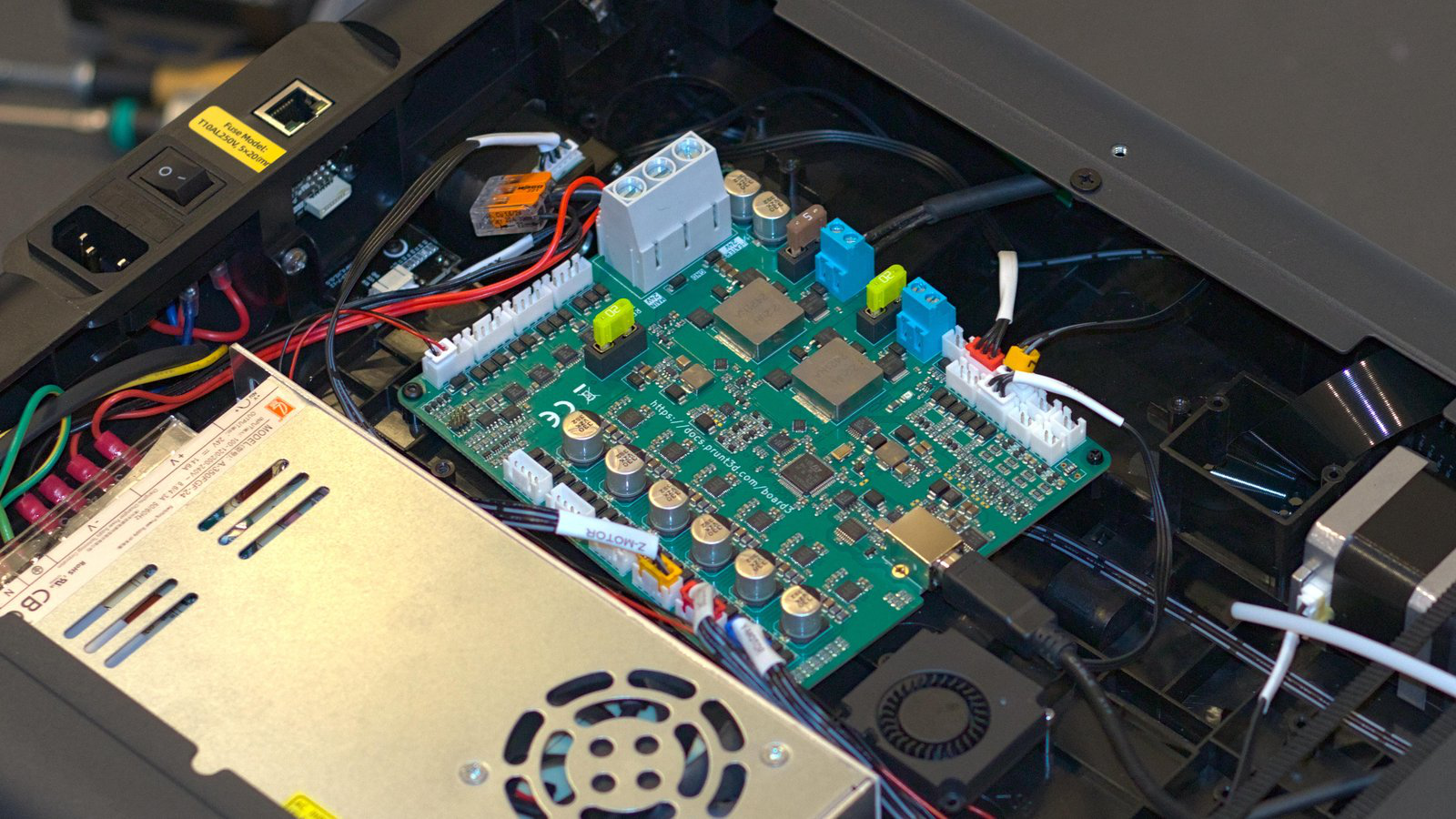

    !pip install langchain-brightdata langchain-google-genai langgraph langchain-core google-generativeai

We install all of the key libraries needed for the tutorial in one step: langchain-brightdata for BrightData web scraping, langchain-google-genai and google-generativeai for Google Gemini integration, langgraph for agent orchestration, and langchain-core for the core LangChain framework.
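Package names on pip differ from the module names you import: hyphens become underscores, and google-generativeai is imported as google.generativeai. The following is a small sketch, using only the standard library, that reports which of the packages above are not yet importable. The helper name missing_packages is ours for illustration and is not part of any of these libraries.

```python
import importlib.util

# pip distribution name -> module name imported in Python code
REQUIRED = {
    "langchain-brightdata": "langchain_brightdata",
    "langchain-google-genai": "langchain_google_genai",
    "langgraph": "langgraph",
    "langchain-core": "langchain_core",
    "google-generativeai": "google.generativeai",
}


def missing_packages(required=REQUIRED):
    """Return the pip names whose modules cannot be located."""
    missing = []
    for dist_name, module_name in required.items():
        try:
            # For dotted names, find_spec imports the parent package first,
            # which raises ModuleNotFoundError if the parent is absent.
            found = importlib.util.find_spec(module_name) is not None
        except (ImportError, ValueError):
            found = False
        if not found:
            missing.append(dist_name)
    return missing


if __name__ == "__main__":
    print("Missing packages:", missing_packages() or "none")
```

If the list is non-empty, rerun the install command for the names it reports before continuing.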

    import os
    import json
    from typing import Dict, Any, Optional

    from langchain_brightdata import BrightDataWebScraperAPI
    from langchain_google_genai import ChatGoogleGenerativeAI
    from langgraph.prebuilt import create_react_agent

These imports prepare your environment and core functionality: os and json handle system operations and data serialization, while typing provides structured type hints. You then bring in BrightDataWebScraperAPI for BrightData scraping, ChatGoogleGenerativeAI to interface with Google’s Gemini LLM, and create_react_agent to orchestrate these components in a ReAct-style agent.
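To make the idea behind a ReAct-style agent concrete, here is a toy sketch of the loop: the agent repeatedly decides on an action, runs a tool, and feeds the observation back into the next decision until it chooses to finish. This is not how create_react_agent is implemented. The names react_loop, scripted_policy, and fake_scrape are hypothetical, the "policy" is a hard-coded stand-in for the LLM, and no network calls are made.

```python
from typing import Callable, Dict, List, Tuple

Step = Tuple[str, str]  # (kind, text), e.g. ("observation", "...")


def react_loop(question: str,
               policy: Callable[[List[Step]], Tuple[str, str]],
               tools: Dict[str, Callable[[str], str]],
               max_steps: int = 5) -> List[Step]:
    """Alternate between choosing an action and observing its result."""
    transcript: List[Step] = [("question", question)]
    for _ in range(max_steps):
        action, argument = policy(transcript)   # reason: pick the next move
        if action == "finish":
            transcript.append(("answer", argument))
            return transcript
        observation = tools[action](argument)   # act: run the chosen tool
        transcript.append(("action", f"{action}({argument})"))
        transcript.append(("observation", observation))
    transcript.append(("answer", "step limit reached"))
    return transcript


def scripted_policy(transcript: List[Step]) -> Tuple[str, str]:
    """Hypothetical stand-in for the LLM: scrape once, then answer."""
    observations = [text for kind, text in transcript if kind == "observation"]
    if not observations:
        return "scrape", "https://example.com/product"
    return "finish", f"Summary of: {observations[-1]}"


def fake_scrape(url: str) -> str:
    """Hypothetical tool that returns canned text instead of scraping."""
    return f"title=Example Widget from {url}"


steps = react_loop("What is the product title?", scripted_policy,
                   {"scrape": fake_scrape})
for kind, text in steps:
    print(f"{kind}: {text}")
```

In the real agent, the LLM plays the role of the policy and the BrightData scraper is one of the tools it may call.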

    class BrightDataScraper:
        """Enhanced web scraper using BrightData API"""

        def __init__(self, api_key: str, google_api_key: Optional[str] = None):
            """Initialize scraper with API keys"""
            self.api_key = api_key
            self.scraper = BrightDataWebScraperAPI(bright_data_api_key=api_key)

            # The LLM and agent are only created when a Google API key is given
            if google_api_key:
                self.llm = ChatGoogleGenerativeAI(
                    model="gemini-2.0-flash",
                    google_api_key=google_api_key
                )
                self.agent = create_react_agent(self.llm, [self.scraper])

        def scrape_amazon_product(self, url: str, zipcode: str = "10001") -> Dict[str, Any]:
            """Scrape Amazon product data"""
            try:
                results = self.scraper.invoke({
                    "url": url,
                    "dataset_type": "amazon_product",
                    "zipcode": zipcode
                })
                return {"success": True, "data": results}
            except Exception as e:
                return {"success": False, "error": str(e)}

        def scrape_amazon_bestsellers(self, region: str = "in") -> Dict[str, Any]:
            """Scrape Amazon bestsellers"""
            try:
                url = f"https://www.amazon.{region}/gp/bestsellers/"
                results = self.scraper.invoke({
                    "url": url,
                    "dataset_type": "amazon_product"
                })
                return {"success": True, "data": results}
            except Exception as e:
                return {"success": False, "error": str(e)}

        def scrape_linkedin_profile(self, url: str) -> Dict[str, Any]:
            """Scrape LinkedIn profile data"""
            try:
                results = self.scraper.invoke({
                    "url": url,
                    "dataset_type": "linkedin_person_profile"
                })
                return {"success": True, "data": results}
            except Exception as e:
                return {"success": False, "error": str(e)}

        def run_agent_query(self, query: str) -> None:
            """Run AI agent with natural language query"""
            if not hasattr(self, 'agent'):
                print("Error: Google API key required for agent functionality")
                return

            try:
                # Stream intermediate states and print the latest message of each
                for step in self.agent.stream(
                    {"messages": query},
                    stream_mode="values"
                ):
                    step["messages"][-1].pretty_print()
            except Exception as e:
                print(f"Agent error: {e}")

        def print_results(self, results: Dict[str, Any], title: str = "Results") -> None:
            """Pretty print results"""
            print(f"\n{'='*50}")
            print(f"{title}")
            print(f"{'='*50}")

            if results["success"]:
                print(json.dumps(results["data"], indent=2, ensure_ascii=False))
            else:
                print(f"Error: {results['error']}")
            print()

The BrightDataScraper class encapsulates all BrightData web-scraping logic and optional Gemini-powered intelligence under a single, reusable interface. Its methods fetch Amazon product details, bestseller lists, and LinkedIn profiles; each wraps the API call in a try/except block and returns a dictionary with a success flag and either the scraped data or an error message. When a Google API key is provided, run_agent_query also streams natural-language “agent” queries. The print_results helper prints a titled banner followed by the data as indented JSON, or the error message if the call failed.
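The three scrape methods share one pattern: call invoke, then wrap the outcome in a success/error dictionary. As a sketch of that pattern in isolation, the hypothetical helper safe_invoke below factors it out, and FakeScraper is a made-up stand-in for BrightDataWebScraperAPI so the example runs without network access or API keys. Neither name comes from the tutorial or from any library.

```python
from typing import Any, Dict


def safe_invoke(tool: Any, payload: Dict[str, Any]) -> Dict[str, Any]:
    """Call tool.invoke(payload) and wrap the outcome in a result envelope."""
    try:
        return {"success": True, "data": tool.invoke(payload)}
    except Exception as e:
        return {"success": False, "error": str(e)}


class FakeScraper:
    """Hypothetical stand-in for the real scraper; makes no network calls."""

    def invoke(self, payload: Dict[str, Any]) -> Dict[str, Any]:
        if not payload.get("url", "").startswith("https://"):
            raise ValueError("url must start with https://")
        return {"url": payload["url"], "dataset_type": payload["dataset_type"]}


ok = safe_invoke(FakeScraper(), {
    "url": "https://www.amazon.com/dp/EXAMPLE",
    "dataset_type": "amazon_product",
})
bad = safe_invoke(FakeScraper(), {
    "url": "ftp://example.com",
    "dataset_type": "amazon_product",
})

print(ok["success"], bad["success"])
```

Because callers always receive a dictionary with a success key, code such as print_results can branch on that flag instead of handling exceptions itself.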

    def main():
        """Main execution function"""
        BRIGHT_DATA_API_KEY = "Use Your Own API Key"
        GOOGLE_API_KEY = "Use Your Own API Key"

        scraper = BrightDataScraper(BRIGHT_DATA_API_KEY, GOOGLE_API_KEY)

        print(" […]
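Rather than pasting keys into main(), one option is to read them from environment variables with the os module that the tutorial already imports. This is a minimal sketch, not the tutorial's own approach: the helper load_api_keys is hypothetical, and the variable names BRIGHT_DATA_API_KEY and GOOGLE_API_KEY are assumed here to mirror the constants in main().

```python
import os
from typing import Mapping, Optional, Tuple


def load_api_keys(env: Optional[Mapping[str, str]] = None) -> Tuple[str, Optional[str]]:
    """Return (bright_data_key, google_key); the Google key may be None."""
    env = os.environ if env is None else env

    # The BrightData key is required for every scraping call
    bright_key = env.get("BRIGHT_DATA_API_KEY", "").strip()
    if not bright_key:
        raise RuntimeError("Set BRIGHT_DATA_API_KEY before running the scraper")

    # The Google key is optional: without it, only the agent is unavailable
    google_key = env.get("GOOGLE_API_KEY", "").strip() or None
    return bright_key, google_key
```

The returned pair can be passed straight to the constructor, for example `scraper = BrightDataScraper(*load_api_keys())`, which keeps secrets out of the source file.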
