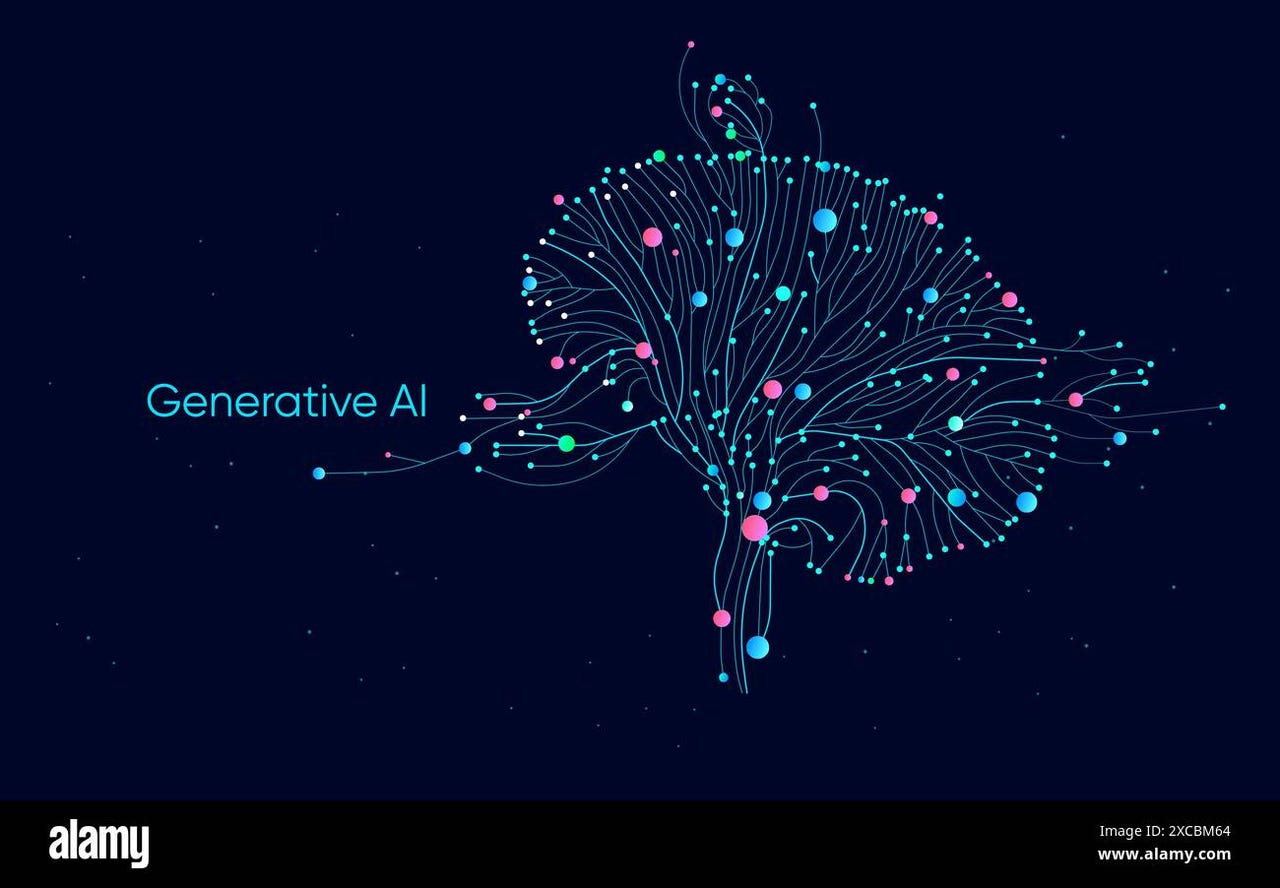

Google Introduces Open-Source Full-Stack AI Agent Stack Using Gemini 2.5 and LangGraph for Multi-Step Web Search, Reflection, and Synthesis

This post first appeared on MarkTechPost.

Introduction: The Need for Dynamic AI Research Assistants

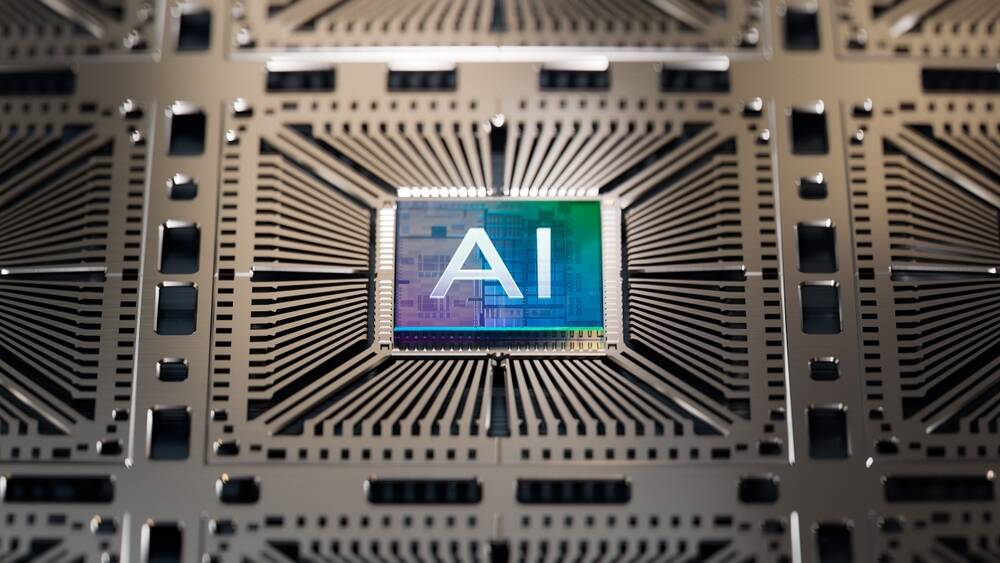

Conversational AI has rapidly evolved beyond basic chatbot frameworks. However, most large language models (LLMs) still suffer from a critical limitation—they generate responses based only on static training data, lacking the ability to self-identify knowledge gaps or perform real-time information synthesis. As a result, these models often deliver incomplete or outdated answers, particularly for evolving or niche topics.

To overcome these issues, AI agents must go beyond passive querying. They need to recognize informational gaps, perform autonomous web searches, validate results, and refine responses—effectively mimicking a human research assistant.

Google’s Full-Stack Research Agent: Gemini 2.5 + LangGraph

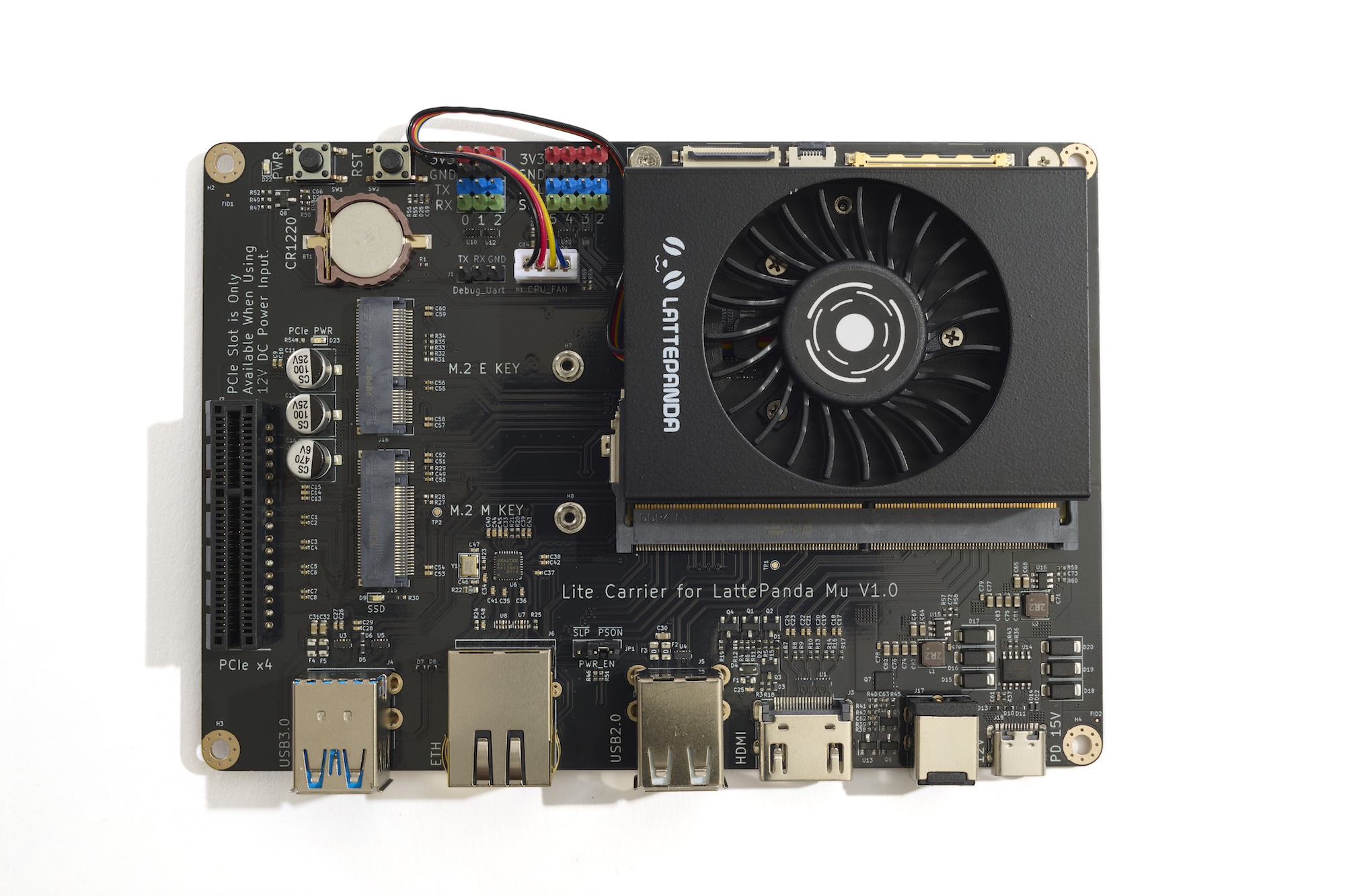

Google, in collaboration with contributors from Hugging Face and other open-source communities, has developed a full-stack research agent designed to solve this problem. Built with a React frontend and a FastAPI + LangGraph backend, the system combines language generation with explicit control flow and dynamic web search.

The agent uses the Gemini 2.5 API to turn a user query into structured search terms. It then runs iterative search-and-reflection cycles using the Google Search API, checking after each round whether the gathered results sufficiently answer the original query. The loop continues until the agent can produce a validated, well-cited response.
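The search-and-reflection cycle described above can be sketched in plain Python. This is a minimal illustration, not the project's code: `run_research`, `ResearchState`, the four callables, and the `max_rounds` cap are all hypothetical names introduced here, and the `fake_*` functions stand in for the Gemini and Google Search calls so the example runs offline.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class ResearchState:
    """Working state carried across search-and-reflection rounds (hypothetical)."""
    question: str
    queries: List[str]
    findings: List[str] = field(default_factory=list)
    rounds: int = 0


def run_research(
    question: str,
    generate_queries: Callable[[str], List[str]],
    search: Callable[[str], List[str]],
    reflect: Callable[[str, List[str]], List[str]],
    synthesize: Callable[[str, List[str]], str],
    max_rounds: int = 3,
) -> str:
    """Search, reflect, and repeat until reflection finds no gaps or the cap is hit."""
    state = ResearchState(question=question, queries=generate_queries(question))
    while state.queries and state.rounds < max_rounds:
        for query in state.queries:
            state.findings.extend(search(query))
        state.rounds += 1
        # reflect() returns follow-up queries; an empty list means "sufficient".
        state.queries = reflect(state.question, state.findings)
    # The answer is produced only after the loop ends.
    return synthesize(state.question, state.findings)


# Offline stand-ins for the model and search calls (assumptions, not real APIs).
def fake_generate(question: str) -> List[str]:
    return [question]


def fake_search(query: str) -> List[str]:
    return [f"result for {query}"]


def fake_reflect(question: str, findings: List[str]) -> List[str]:
    # Pretend coverage is sufficient once two findings have been gathered.
    return [] if len(findings) >= 2 else [f"{question} details"]


def fake_synthesize(question: str, findings: List[str]) -> str:
    return f"{question}: " + "; ".join(findings)


answer = run_research(
    "what is LangGraph", fake_generate, fake_search, fake_reflect, fake_synthesize
)
print(answer)
```

Passing the model and search steps in as callables keeps the control flow testable without network access; in a real deployment they would wrap the actual API clients, and the round cap guards against a reflection step that never reports sufficiency.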

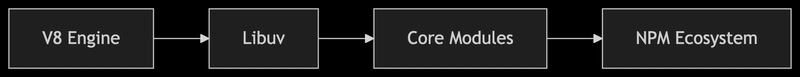

Architecture Overview: Developer-Friendly and Extensible

- Frontend: Built with Vite + React, offering hot reloading and clean module separation.

- Backend: Powered by Python (3.8+), FastAPI, and LangGraph, enabling decision control, evaluation loops, and autonomous query refinement.

- Key Directories: The agent logic resides in `backend/src/agent/graph.py`, while UI components are structured under `frontend/`.

- Local Setup: Requires Node.js, Python, and a Gemini API Key. Run with `make dev`, or launch frontend/backend separately.

- Endpoints:

  - Backend API: `http://127.0.0.1:2024`

  - Frontend UI: `http://localhost:5173`

This separation of concerns ensures that developers can easily modify the agent’s behavior or UI presentation, making the project suitable for global research teams and tech developers alike.

Technical Highlights and Performance

- Reflective Looping: The LangGraph agent evaluates search results and identifies coverage gaps, autonomously refining queries without human intervention.

- Delayed Response Synthesis: The AI waits until it gathers sufficient information before generating an answer.

- Source Citations: Answers include embedded hyperlinks to original sources, improving trust and traceability.

- Use Cases: Ideal for academic research, enterprise knowledge bases, technical support bots, and consulting tools where accuracy and validation matter.
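To make the source-citation highlight concrete, here is one way embedded hyperlinks could be produced. The article does not describe how the project attaches citations, so this is only a sketch under stated assumptions: the draft answer is assumed to contain numeric markers such as `[1]`, and `Source` and `embed_citations` are hypothetical names.

```python
import re
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Source:
    """A retrieved source (hypothetical shape: display title plus URL)."""
    title: str
    url: str


def embed_citations(answer: str, sources: List[Source]) -> str:
    """Replace 1-based [n] markers with markdown links to sources[n - 1]."""

    def to_link(match: "re.Match[str]") -> str:
        index = int(match.group(1)) - 1
        if 0 <= index < len(sources):
            source = sources[index]
            return f"[{source.title}]({source.url})"
        # Leave markers without a matching source untouched.
        return match.group(0)

    return re.sub(r"\[(\d+)\]", to_link, answer)


sources = [Source("LangGraph docs", "https://example.com/langgraph")]
cited = embed_citations("Agents are modeled as graphs [1]. See also [2].", sources)
print(cited)
```

Leaving unmatched markers in place, rather than dropping them, makes a missing source visible to the reader instead of silently hiding it.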

Why It Matters: A Step Towards Autonomous Web Research

This system illustrates how autonomous reasoning and search synthesis can be integrated directly into LLM workflows. The agent doesn’t just respond—it investigates, verifies, and adapts. This reflects a broader shift in AI development: from stateless Q&A bots to real-time reasoning agents.

The agent enables developers, researchers, and enterprises in regions such as North America, Europe, India, and Southeast Asia to deploy AI research assistants with minimal setup. By using globally accessible tools like FastAPI, React, and Gemini APIs, the project is well-positioned for widespread adoption.
