Hirundo Raises $8M to Tackle AI Hallucinations with Machine Unlearning


Hirundo, the first startup dedicated to machine unlearning, has raised $8 million in seed funding to address some of the most pressing challenges in artificial intelligence: hallucinations, bias, and embedded data vulnerabilities. The round was led by Maverick Ventures Israel with participation from SuperSeed, Alpha Intelligence Capital, Tachles VC, AI.FUND, and Plug and Play Tech Center.

Making AI Forget: The Promise of Machine Unlearning

Unlike traditional AI tools that focus on refining or filtering AI outputs, Hirundo’s core innovation is machine unlearning—a technique that allows AI models to “forget” specific knowledge or behaviors after they’ve already been trained. This approach enables enterprises to surgically remove hallucinations, biases, personal or proprietary data, and adversarial vulnerabilities from deployed AI models without retraining them from scratch. Retraining large-scale models can take weeks and millions of dollars; Hirundo offers a far more efficient alternative.

Hirundo likens this process to AI neurosurgery: the company pinpoints exactly where in a model’s parameters undesired outputs originate and precisely removes them, all while preserving performance. This groundbreaking technique empowers organizations to remediate models in production environments and deploy AI with much greater confidence.
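Hirundo has not published how its unlearning works, so the following is only a toy illustration of the general idea, not the company's method. It uses the simplest possible case: exact unlearning for a model whose entire learned state is a set of counts. The example assumes a tiny multinomial Naive Bayes classifier. For such a model, forgetting a training example means subtracting that example's contribution, and the result is identical to retraining without it. The class name `CountClassifier` and the sample data are hypothetical. Large neural networks do not decompose this neatly, so removing knowledge from an LLM without full retraining is a much harder problem.

```python
import math
from collections import Counter, defaultdict


class CountClassifier:
    """Toy multinomial Naive Bayes (hypothetical example, not Hirundo's method).

    Its entire learned state is a set of counts, so one training example's
    contribution can be subtracted exactly instead of retraining from scratch.
    """

    def __init__(self):
        self.label_counts = Counter()             # label -> number of examples
        self.token_counts = defaultdict(Counter)  # label -> token -> count
        self.vocab = Counter()                    # token -> count across labels

    def learn(self, tokens, label):
        self.label_counts[label] += 1
        self.token_counts[label].update(tokens)
        self.vocab.update(tokens)

    def unlearn(self, tokens, label):
        """Forget a previously learned example by undoing exactly what learn() added."""
        if self.label_counts[label] <= 0:
            raise ValueError(f"no learned examples with label {label!r}")
        self.label_counts[label] -= 1
        self.token_counts[label].subtract(tokens)
        self.vocab.subtract(tokens)
        # Unary + drops zero (and negative) counts, so forgotten tokens vanish entirely.
        self.label_counts = +self.label_counts
        self.token_counts[label] = +self.token_counts[label]
        self.vocab = +self.vocab
        if label not in self.label_counts:
            del self.token_counts[label]

    def predict(self, tokens):
        """Most likely label, using add-one (Laplace) smoothing."""
        total = sum(self.label_counts.values())
        vocab_size = len(self.vocab)
        best_label, best_score = None, -math.inf
        for label, n in self.label_counts.items():
            counts = self.token_counts[label]
            denom = sum(counts.values()) + vocab_size
            score = math.log(n / total)
            for t in tokens:
                score += math.log((counts[t] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label

    def state(self):
        """Plain-dict snapshot of the learned counts, for comparison."""
        return (
            dict(self.label_counts),
            {label: dict(c) for label, c in self.token_counts.items()},
            dict(self.vocab),
        )


data = [
    (["refund", "within", "30", "days"], "billing"),
    (["card", "ending", "4242", "alice"], "billing"),  # contains personal data
    (["ships", "in", "2", "days"], "shipping"),
]

model = CountClassifier()
for tokens, label in data:
    model.learn(tokens, label)

# Forget the example containing personal data, without retraining.
model.unlearn(*data[1])

# Reference: a model trained from scratch on the remaining examples.
retrained = CountClassifier()
for tokens, label in [data[0], data[2]]:
    retrained.learn(tokens, label)

print(model.state() == retrained.state())  # True
```

The comparison with `retrained` is the point of the sketch. After `unlearn`, the model holds no trace of the removed tokens, and its state matches a model that never saw that example.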

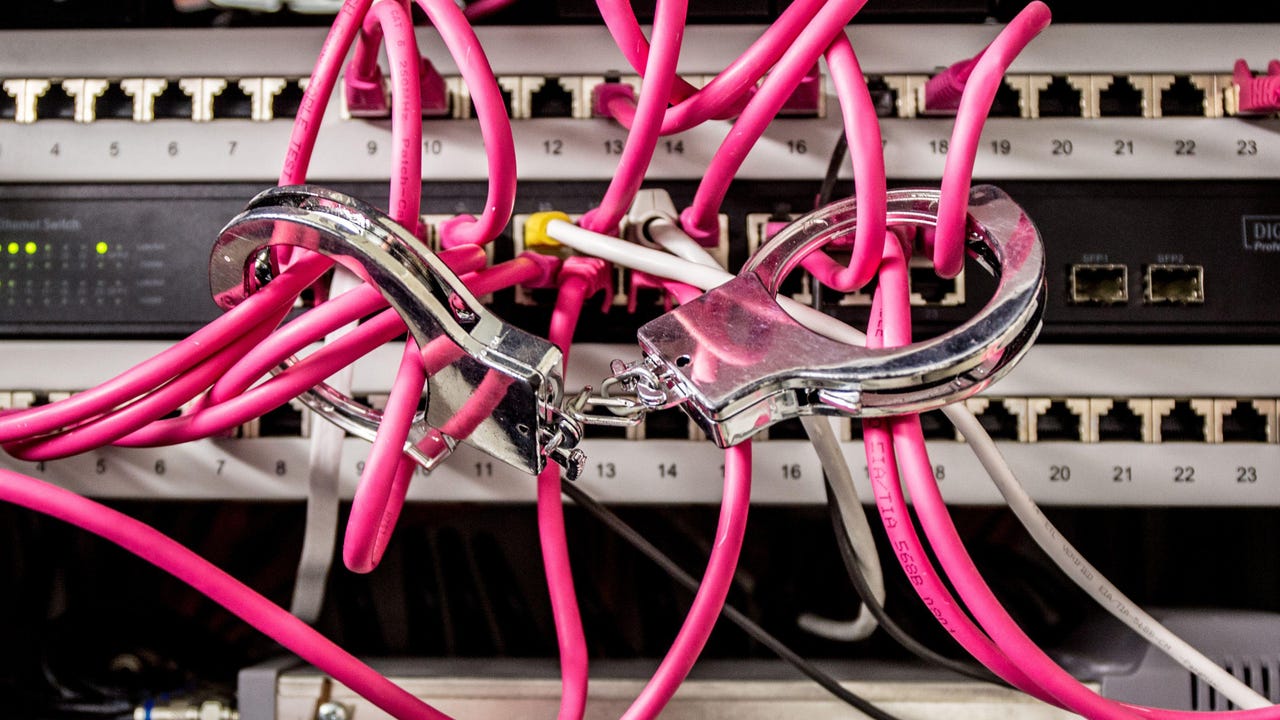

Why AI Hallucinations Are So Dangerous

AI hallucinations refer to a model’s tendency to generate false or misleading information that sounds plausible or even factual. These hallucinations are especially problematic in enterprise environments, where decisions based on incorrect information can lead to legal exposure, operational errors, and reputational damage. Studies have found that between 58% and 82% of “facts” generated by AI in response to legal queries contained some type of hallucination.

Efforts to minimize hallucinations with guardrails or fine-tuning often mask the problem rather than eliminate it. Guardrails act like filters, and fine-tuning typically fails to remove the root cause, especially when the hallucination is baked deep into the model's learned weights. Hirundo goes beyond this by actually removing the behavior or knowledge from the model itself.

A Scalable Platform for Any AI Stack

Hirundo’s platform is built for flexibility and enterprise-grade deployment. It integrates with both generative and non-generative systems across a wide range of data types: natural language, vision, radar, LiDAR, tabular, speech, and time series. The platform automatically detects mislabeled items, outliers, and ambiguities in training data. It then lets users debug specific faulty outputs and trace them back to problematic training data or learned behaviors, which can be unlearned instantly.
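The article does not describe how the platform detects mislabeled items. As an illustration only, one widely used heuristic is neighbor disagreement: an item whose label differs from most of its nearest neighbors in feature space is a candidate label error. The sketch below assumes small numeric feature vectors and Euclidean distance. The function `knn_label_audit` and its `k` parameter are hypothetical names and are not part of Hirundo's platform.

```python
import math
from collections import Counter


def knn_label_audit(points, labels, k=3):
    """Flag indices whose label disagrees with a strict majority of their k nearest neighbors.

    Hypothetical helper illustrating one common label-noise heuristic; it is not
    Hirundo's detection method.
    """
    flagged = []
    for i, p in enumerate(points):
        # Euclidean distance from point i to every other point, nearest first.
        neighbors = sorted(
            (math.dist(p, q), j) for j, q in enumerate(points) if j != i
        )[:k]
        votes = Counter(labels[j] for _, j in neighbors)
        majority, count = votes.most_common(1)[0]
        # Require a strict majority so ties do not trigger a flag.
        if majority != labels[i] and count > k // 2:
            flagged.append(i)
    return flagged


points = [(0, 0), (0, 1), (1, 0), (1, 1), (5, 5), (5, 6), (6, 5), (6, 6)]
labels = ["a", "a", "a", "b", "b", "b", "b", "b"]  # (1, 1) sits in cluster "a"
print(knn_label_audit(points, labels))  # [3]
```

On real data, the points would typically be learned embeddings rather than raw coordinates. Flagged items are candidates for review, not automatic relabeling.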

All of this works within existing workflows. Hirundo’s SOC 2 certified system can run as SaaS, in a private cloud (VPC), or even air-gapped on premises, making it suitable for sensitive environments such as finance, healthcare, and defense.

Demonstrated Impact Across Models

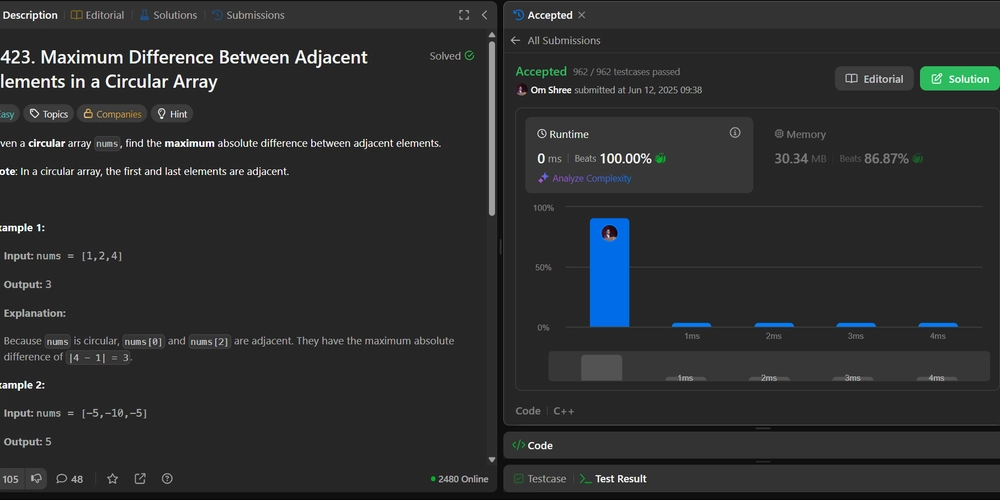

The company has already demonstrated strong performance improvements across popular large language models (LLMs). In tests using Llama and DeepSeek, Hirundo achieved a 55% reduction in hallucinations, a 70% decrease in bias, and an 85% reduction in successful prompt injection attacks. These results were measured using independent benchmarks such as HaluEval, PurpleLlama, and the Bias Benchmark for QA (BBQ).

While Hirundo’s current solution works well with open-source models like Llama, Mistral, and Gemma, the company is actively expanding support to gated models like ChatGPT and Claude. That expansion would make its technology applicable across the full spectrum of enterprise LLMs.

Founders with Academic and Industry Depth

Hirundo was founded in 2023 by a trio of experts at the intersection of academia and enterprise AI. CEO Ben Luria is a Rhodes Scholar and former visiting fellow at Oxford, who previously founded fintech startup Worqly and co-founded ScholarsIL, a nonprofit supporting higher education. Michael Leybovich, Hirundo’s CTO, is a former graduate researcher at the Technion and award-winning R&D officer at Ofek324. Prof. Oded Shmueli, the company's Chief Scientist, is the former Dean of Computer Science at the Technion and has held research positions at IBM, HP, AT&T, and more.

Their collective experience spans foundational AI research, real-world deployment, and secure data management—making them uniquely qualified to address the AI industry’s current reliability crisis.

Investor Backing for a Trustworthy AI Future

Investors in this round are aligned with Hirundo’s vision of building trustworthy, enterprise-ready AI. Yaron Carni, founder of Maverick Ventures Israel, noted the urgent need for a platform that can remove hallucinated or biased intelligence before it causes real-world harm. “Without removing hallucinations or biased intelligence from AI, we end up distorting outcomes and encouraging mistrust,” he said. “Hirundo offers a type of AI triage—removing untruths or data built on discriminatory sources and completely transforming the possibilities of AI.”

SuperSeed’s Managing Partner, Mads Jensen, echoed this sentiment: “We invest in exceptional AI companies transforming industry verticals, but this transformation is only as powerful as the models themselves are trustworthy. Hirundo’s approach to machine unlearning addresses a critical gap in the AI development lifecycle.”

Addressing a Growing Challenge in AI Deployment

As AI systems are increasingly integrated into critical infrastructure, concerns about hallucinations, bias, and embedded sensitive data are becoming harder to ignore. These issues pose significant risks in high-stakes environments, from finance to healthcare and defense.

Machine unlearning is emerging as a critical tool in the AI industry’s response to rising concerns over model reliability and safety. As hallucinations, embedded bias, and exposure of sensitive data increasingly undermine trust in deployed AI systems, unlearning offers a direct way to mitigate these risks—after a model is trained and in use.

Rather than relying on retraining or surface-level fixes like filtering, machine unlearning enables targeted removal of problematic behaviors and data from models already in production. This approach is gaining traction among enterprises and government agencies seeking scalable, compliant solutions for high-stakes applications.

The post Hirundo Raises $8M to Tackle AI Hallucinations with Machine Unlearning appeared first on Unite.AI.
