EXPLAIN It! Your Fast Track to Fixing Slow SQL

Ever found yourself staring at a query, wondering why it’s taking an eternity to return results? In the world of database management, slow queries are notorious performance vampires. But how do you shine a light on these shadowy figures and understand what’s happening under the hood? Enter the EXPLAIN command – your magnifying glass for peering into the database's query execution strategy.

EXPLAIN is a SQL command that shows the execution plan for your query (the column names and sample output in this article follow MySQL's format). This plan is the database’s detailed roadmap of how it intends to fetch your data. It reveals crucial information like which indexes will be leveraged (or ignored!), the order in which tables are joined, the method of scanning tables, and much more. Understanding this plan is the first critical step towards transforming a sluggish query into a well-oiled, efficient data retrieval machine.

When you prepend EXPLAIN to your SQL query, the database provides a wealth of information, typically including fields like:

- `id`: An identifier for each part of the query (especially useful in complex queries with subqueries or unions).
- `select_type`: The type of `SELECT` query (e.g., `SIMPLE`, `SUBQUERY`, `UNION`).
- `table`: The table being accessed.
- `partitions`: If partitioning is used, this shows which partitions are involved.
- `type`: This is crucial! It indicates the join type or table access method (e.g., `ALL` for a full table scan, `index` for a full index scan, `range` for a range scan on an index, `ref` for an index lookup using a non-unique key, `eq_ref` for a join using a unique key, `const`/`system` for highly optimized lookups).
- `possible_keys`: Shows which indexes the database could potentially use.
- `key`: The actual index the database decided to use. If `NULL`, no index was used for this part.
- `key_len`: The length, in bytes, of the key (index part) that was used.
- `ref`: Shows which columns or constants are compared to the index named in the `key` column.
- `rows`: An estimate of the number of rows the database expects to examine to execute this part of the query.
- `filtered`: An estimated percentage of the rows read that remain after the table condition is applied (100 means the condition filters nothing out).
- `Extra`: Contains additional valuable information, such as "Using filesort" (needs to sort results), "Using temporary" (needs to create a temporary table), "Using index" (an efficient index-only scan), or "Using where" (filtering rows after retrieval).
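As a rough illustration of how these fields can be read together, here is a minimal Python sketch. The helper `explain_warnings` and the dict layout are assumptions made for this example (they are not part of MySQL or any client library); the function simply checks one EXPLAIN row for the warning signs named in the list above.

```python
# Hypothetical helper: inspect one row of MySQL-style EXPLAIN output,
# represented as a dict keyed by the column names listed above.

def explain_warnings(row):
    """Return a list of human-readable warnings for a single EXPLAIN row."""
    warnings = []
    extra = row.get("Extra") or ""

    if row.get("type") == "ALL":
        warnings.append("full table scan (type=ALL)")
    if row.get("key") is None:
        warnings.append("no index chosen (key is NULL)")
    if "Using filesort" in extra:
        warnings.append("extra sort pass (Using filesort)")
    if "Using temporary" in extra:
        warnings.append("temporary table (Using temporary)")
    return warnings


# Sample row with invented values, shaped like a full-scan plan line.
sample = {
    "table": "u",
    "type": "ALL",
    "possible_keys": "PRIMARY",
    "key": None,
    "rows": 50000,
    "filtered": 100.0,
    "Extra": "Using temporary; Using filesort",
}

for warning in explain_warnings(sample):
    print(warning)
```

A row that uses an index and shows neither "Using filesort" nor "Using temporary" produces an empty list. These checks are only a first pass; a flagged row is a prompt to look closer, not proof of a problem.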

Let’s dive into two practical case studies to illustrate how EXPLAIN can guide your SQL optimization efforts.

Case Study 1: Optimizing a Simple Count Query

Scenario Setup:

Imagine an e-commerce platform with a database table named ProductSales that logs every product sale. The table structure is roughly:

- `sale_id` (INT, Primary Key): Unique identifier for the sale.
- `product_sku` (VARCHAR): SKU of the product sold.
- `customer_id` (INT): ID of the customer who made the purchase.
- `sale_timestamp` (TIMESTAMP): Date and time of the sale.
- `quantity_sold` (INT): Number of units sold.
- `sale_amount` (DECIMAL): Total amount for this sale line.

The Problem:

We need to find the total number of sales made after '2025-03-01'.

Original SQL Query:

    SELECT COUNT(*)
    FROM ProductSales
    WHERE sale_timestamp > '2025-03-01';

Step 1: Use EXPLAIN to Analyze the Query

    EXPLAIN SELECT COUNT(*)
    FROM ProductSales
    WHERE sale_timestamp > '2025-03-01';

Step 2: Analyze the EXPLAIN Output (Hypothetical Initial Output)

Let’s assume the initial EXPLAIN output looks like this (simplified table format):

    +----+-------------+--------------+-------+-----------------+---------------+---------+------+--------+----------+--------------------------+
    | id | select_type | table        | type  | possible_keys   | key           | key_len | ref  | rows   | filtered | Extra                    |
    +----+-------------+--------------+-------+-----------------+---------------+---------+------+--------+----------+--------------------------+
    | 1  | SIMPLE      | ProductSales | range | idx_sale_time   | idx_sale_time | 5       | NULL | 150000 | 100.00   | Using where; Using index |
    +----+-------------+--------------+-------+-----------------+---------------+---------+------+--------+----------+--------------------------+

Step 3: Identify the Problem

From this EXPLAIN output:

- `type` is `range`: This is good; it means the database is using an index (`idx_sale_time` on `sale_timestamp`) to perform a range scan, which is much better than a full table scan (`ALL`).
- `rows` is estimated at `150000`: This indicates the query still needs to examine a significant number of rows based on the date range.
- `Extra` shows "Using where; Using index": "Using index" means the query is answered from the index alone, without reading the table rows. "Using where" means the `sale_timestamp > '2025-03-01'` condition is being applied.

Step 4: Optimize the SQL (or rather, ensure optimal conditions)

While an index is used, can we do better for a COUNT(*)? If the query can be satisfied entirely from the index without ever touching the actual table data, it's called an "index-only scan", and the index is said to be a "covering index" for that query. For COUNT(*), if a relatively small index exists that includes sale_timestamp, the database might use it.

Let’s assume idx_sale_time is just a single-column index on sale_timestamp. Because the query counts rows and filters only on that column, the index already covers it, which is what "Using index" in the Extra column reports. For a simple COUNT(*) with a range scan on a date, this plan is often already quite good: the remaining cost is roughly proportional to the number of index entries inside the range (the estimated 150000).

A common scenario where COUNT(*) can be slow is if there's no suitable index on sale_timestamp, forcing a full table scan. If the output had shown type: ALL, the primary optimization would be:

    -- Ensure an index exists:
    CREATE INDEX idx_sale_time ON ProductSales(sale_timestamp);

Then, re-running the EXPLAIN on the original COUNT(*) query would likely show the improved plan similar to our hypothetical output above.
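To try this before/after comparison without a MySQL server, here is a small sketch using Python's built-in `sqlite3` module as a stand-in. This is an assumption-laden substitute, not the article's setup: SQLite's `EXPLAIN QUERY PLAN` prints a short text description instead of MySQL's column layout, the column types are simplified to SQLite's, and the index name `idx_sale_time` follows the hypothetical output above. The idea it demonstrates is the same: the plan changes from a scan to an index search once the index exists.

```python
import sqlite3

QUERY = "SELECT COUNT(*) FROM ProductSales WHERE sale_timestamp > '2025-03-01'"


def plan_details(conn, sql):
    """Return the text 'detail' column of SQLite's EXPLAIN QUERY PLAN output."""
    return [row[3] for row in conn.execute("EXPLAIN QUERY PLAN " + sql)]


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE ProductSales ("
    " sale_id INTEGER PRIMARY KEY,"
    " product_sku TEXT,"
    " customer_id INTEGER,"
    " sale_timestamp TEXT,"  # ISO-8601 strings compare correctly as text
    " quantity_sold INTEGER,"
    " sale_amount NUMERIC)"
)

# Plan without an index on sale_timestamp: SQLite reports a table scan.
before = plan_details(conn, QUERY)

conn.execute("CREATE INDEX idx_sale_time ON ProductSales(sale_timestamp)")

# Plan with the index: SQLite reports a search that names the index.
after = plan_details(conn, QUERY)

print("before:", before)
print("after: ", after)
```

The exact wording of the plan text varies between SQLite versions, so it is safer to look for the keywords (a scan before, a search naming `idx_sale_time` after) than to match the full string.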

Steps 5 and 6: Re-run EXPLAIN and Analyze (after creating the index, or to confirm an index-only scan)

After the index is in place, check two things in the new output. First, type should have moved from ALL to range. Second, the Extra column should include "Using index", which confirms that the count is computed from the index alone. The same applies if sale_timestamp is the leading column of a composite index rather than a single-column one.

Step 7: Evaluate Optimization Effect

The goal is to ensure the type is efficient (e.g., range or index rather than ALL) and that the Extra column indicates optimal index usage (like “Using index” for an index-only scan if applicable). The rows estimate should also be as low as reasonably possible.
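One way to make the "is the type more efficient?" check mechanical is to compare positions in the best-to-worst ordering of access types given in the MySQL documentation. The sketch below is a rule of thumb only; the function name `is_better_type` is made up for this example, and a "better" type with a much larger `rows` estimate can still be the slower plan.

```python
# Access types in the order the MySQL documentation lists them, best first.
ACCESS_TYPES = [
    "system", "const", "eq_ref", "ref", "fulltext", "ref_or_null",
    "index_merge", "unique_subquery", "index_subquery", "range", "index", "ALL",
]


def is_better_type(before, after):
    """True if `after` ranks ahead of `before` in the best-to-worst ordering."""
    return ACCESS_TYPES.index(after) < ACCESS_TYPES.index(before)


print(is_better_type("ALL", "range"))   # True: range scan beats full table scan
print(is_better_type("range", "ALL"))   # False: this would be a regression
```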

Case Study 2: Optimizing a Multi-Table Join and Aggregation

Let’s consider a more complex scenario involving joins.

Scenario Setup:

An online learning platform has these tables:

- `Users` (stores user information):
  - `user_id` (INT, Primary Key)
  - `user_name` (VARCHAR)
  - `registration_date` (DATE)
- `CourseCompletions` (stores records of users completing courses):
  - `completion_id` (INT, Primary Key)
  - `user_id` (INT, Foreign Key to `Users`)
  - `course_id` (INT)
  - `completion_date` (DATE)

The Problem:

We need to find the name of each user who completed at least one course in 2024, along with the number of courses they completed that year.

Original SQL Query:

    SELECT
        u.user_name,
        COUNT(cc.course_id) AS courses_completed_2024
    FROM
        Users u
    JOIN
        CourseCompletions cc ON u.user_id = cc.user_id
    WHERE
        cc.completion_date >= '2024-01-01' AND cc.completion_date <= '2024-12-31'
    GROUP BY
        u.user_name;

Step 1: Use EXPLAIN to Analyze the Query

    EXPLAIN SELECT
        u.user_name,
        COUNT(cc.course_id) AS courses_completed_2024
    FROM
        Users u
    JOIN
        CourseCompletions cc ON u.user_id = cc.user_id
    WHERE
        cc.completion_date >= '2024-01-01' AND cc.completion_date <= '2024-12-31'
    GROUP BY
        u.user_name;

Step 2: Analyze the EXPLAIN Output (Hypothetical Initial Output)

    +----+-------------+-------+------+---------------------------------+-------------+---------+--------------+-------+----------+---------------------------------+
    | id | select_type | table | type | possible_keys                   | key         | key_len | ref          | rows  | filtered | Extra                           |
    +----+-------------+-------+------+---------------------------------+-------------+---------+--------------+-------+----------+---------------------------------+
    | 1  | SIMPLE      | u     | ALL  | PRIMARY                         | NULL        | NULL    | NULL         | 50000 | 100.00   | Using temporary; Using filesort |
    | 1  | SIMPLE      | cc    | ref  | idx_user_id,idx_completion_date | idx_user_id | 4       | db.u.user_id | 10    | 5.00     | Using where                     |
    +----+-------------+-------+------+---------------------------------+-------------+---------+--------------+-------+----------+---------------------------------+

Step 3: Identify the Problem

- Table `u` (`Users`): `type` is `ALL`. This is a full table scan on the `Users` table, which gets expensive as the table grows, and it makes all 50,000 users the starting point of the join even though many may have no 2024 completions.
- Table `cc` (`CourseCompletions`): `type` is `ref` using `idx_user_id`. This is good for the join condition, but the `WHERE` clause on `cc.completion_date` is applied only after the rows for each user have been fetched, potentially on many rows. The `filtered` value of `5.00` for `cc` also suggests that only about 5% of the rows read match the date condition, meaning a lot of unnecessary work was done.
- `Extra` for `u`: "Using temporary; Using filesort" indicates that a temporary table is created for the `GROUP BY` and then sorted, which is expensive.

Step 4: Optimize the SQL

We can optimize this by:

- Filtering the `CourseCompletions` table before joining it with `Users`. This dramatically reduces the number of rows involved in the join.
- Ensuring appropriate indexes on `CourseCompletions(completion_date)`, `CourseCompletions(user_id)`, and `Users(user_id)` (already the `PRIMARY` key, which is indexed). A composite index on `CourseCompletions(completion_date, user_id, course_id)` could be very beneficial.

Optimized SQL Query (using a subquery/derived table for early filtering):

    SELECT
        u.user_name,
        COUNT(filtered_cc.course_id) AS courses_completed_2024
    FROM
        Users u
    JOIN (
        SELECT user_id, course_id
        FROM CourseCompletions
        WHERE completion_date >= '2024-01-01' AND completion_date <= '2024-12-31'
    ) AS filtered_cc ON u.user_id = filtered_cc.user_id
    GROUP BY
        u.user_name;

(Ensure *CourseCompletions* has an index on *completion_date* and *user_id* for this to be most effective. A composite index *(completion_date, user_id)* would be ideal for the subquery).
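Before comparing plans, it is worth confirming that a rewritten query still returns the same rows as the original. The sketch below does that check with Python's built-in `sqlite3` module as a stand-in for the real database. The sample rows are invented for illustration, the column types are simplified to SQLite's, and a passing check on a toy dataset is evidence rather than proof of equivalence.

```python
import sqlite3

ORIGINAL = """
SELECT u.user_name, COUNT(cc.course_id) AS courses_completed_2024
FROM Users u
JOIN CourseCompletions cc ON u.user_id = cc.user_id
WHERE cc.completion_date >= '2024-01-01' AND cc.completion_date <= '2024-12-31'
GROUP BY u.user_name
"""

REWRITTEN = """
SELECT u.user_name, COUNT(filtered_cc.course_id) AS courses_completed_2024
FROM Users u
JOIN (
    SELECT user_id, course_id
    FROM CourseCompletions
    WHERE completion_date >= '2024-01-01' AND completion_date <= '2024-12-31'
) AS filtered_cc ON u.user_id = filtered_cc.user_id
GROUP BY u.user_name
"""

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE Users (
    user_id INTEGER PRIMARY KEY,
    user_name TEXT,
    registration_date TEXT
);
CREATE TABLE CourseCompletions (
    completion_id INTEGER PRIMARY KEY,
    user_id INTEGER REFERENCES Users(user_id),
    course_id INTEGER,
    completion_date TEXT
);
-- Invented sample rows, including dates on both edges of 2024.
INSERT INTO Users VALUES
    (1, 'ana', '2023-05-01'),
    (2, 'ben', '2023-06-01'),
    (3, 'cy',  '2024-02-01');
INSERT INTO CourseCompletions VALUES
    (1, 1, 10, '2024-01-15'),
    (2, 1, 11, '2024-12-31'),
    (3, 1, 12, '2023-12-31'),
    (4, 2, 10, '2025-01-01'),
    (5, 3, 10, '2024-06-30');
""")


def run_sorted(sql):
    """Run a query and return its rows in a stable order for comparison."""
    return sorted(conn.execute(sql).fetchall())


original_rows = run_sorted(ORIGINAL)
rewritten_rows = run_sorted(REWRITTEN)

print(original_rows)
print("same result:", original_rows == rewritten_rows)
```

Note that `ben` does not appear in either result: both queries use an inner join, so users with no completions in 2024 are left out.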

Step 5: Re-run EXPLAIN on the Optimized Query

    EXPLAIN SELECT
        u.user_name,
        COUNT(filtered_cc.course_id) AS courses_completed_2024
    FROM
        Users u
    JOIN (
        SELECT user_id, course_id
        FROM CourseCompletions
        WHERE completion_date >= '2024-01-01' AND completion_date <= '2024-12-31'
    ) AS filtered_cc ON u.user_id = filtered_cc.user_id
    GROUP BY
        u.user_name;

Step 6: Analyze the Optimized EXPLAIN Output (Hypothetical)

    +----+-------------+-------------------+--------+---------------------------------+---------------------+---------+---------------------+------+----------+------------------------------------+
    | id | select_type | table             | type   | possible_keys                   | key                 | key_len | ref                 | rows | filtered | Extra                              |
    +----+-------------+-------------------+--------+---------------------------------+---------------------+---------+---------------------+------+----------+------------------------------------+
    | 1  | PRIMARY     | <derived2>        | ALL    | NULL                            | NULL                | NULL    | NULL                | 2000 | 100.00   | Using temporary; Using filesort    |
    | 1  | PRIMARY     | u                 | eq_ref | PRIMARY                         | PRIMARY             | 4       | filtered_cc.user_id | 1    | 100.00   |                                    |
    | 2  | DERIVED     | CourseCompletions | range  | idx_completion_date,idx_user_id | idx_completion_date | 5       | NULL                | 2000 | 100.00   | Using where; Using index condition |
    +----+-------------+-------------------+--------+---------------------------------+---------------------+---------+---------------------+------+----------+------------------------------------+

(Note: The exact plan for derived tables can vary. The key is that *CourseCompletions* is filtered first.)

Step 7: Evaluate Optimization Effect

- The subquery (derived table `filtered_cc`) now filters `CourseCompletions` using `idx_completion_date` (a `range` scan), significantly reducing the rows (`rows: 2000` instead of potentially examining around 500,000 row combinations, i.e. 50,000 users times roughly 10 completions each).
- The join between `Users` (`u`) and the smaller `filtered_cc` result set is now more efficient. `u` can use its `PRIMARY` key effectively (`type: eq_ref`).
- The "Using temporary; Using filesort" might still be present due to `GROUP BY u.user_name` if `u.user_name` isn't indexed or if the join order results in unsorted data for grouping. Further optimization could involve indexing `u.user_name` or ensuring the join order allows the `GROUP BY` to use an index.
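The MySQL documentation suggests a rough yardstick for comparing join plans: multiply the `rows` estimates of the joined tables to approximate how many row combinations the server must examine. Here is that arithmetic for the two hypothetical plans in this case study, as a small Python sketch. The numbers are the illustrative estimates from the tables above, not measurements, and the function name is made up for this example.

```python
from math import prod


def estimated_row_combinations(rows_per_table):
    """Multiply the per-table `rows` estimates of one join, in plan order."""
    return prod(rows_per_table)


# Initial plan: scan u (50000 rows), then about 10 cc rows per user.
initial_join = [50000, 10]

# Optimized plan: read the derived table (2000 rows), then 1 row of u each.
optimized_join = [2000, 1]
# Building the derived table itself reads an estimated 2000 index entries.
derived_build = 2000

print(estimated_row_combinations(initial_join))                     # 500000
print(estimated_row_combinations(optimized_join) + derived_build)   # 4000
```

Even counting the cost of building the derived table, the estimate drops by roughly two orders of magnitude. Treat the product as a coarse signal only, since it ignores `filtered`, sorting, and temporary-table costs.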

Through these steps, we’ve analyzed and optimized the original queries, enhancing their efficiency. In real-world applications, more iterations and fine-tuning based on specific database structures and data distributions are often necessary.

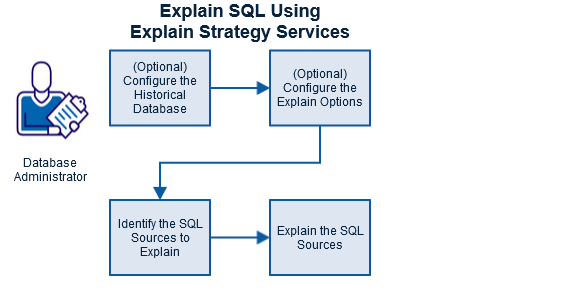

Streamline Your SQL Optimization with Chat2DB

Understanding EXPLAIN plans is a vital skill, but sifting through complex outputs and manually iterating on optimizations can be time-consuming. This is where modern database tools can lend a powerful hand.

Chat2DB (https://chat2db.ai) is an intelligent, AI-powered database client designed to simplify your interaction with various databases like MySQL, PostgreSQL, Oracle, SQL Server, and more.

Imagine having a copilot for your SQL tasks:

- AI-Powered Query Assistance: Generate complex SQL from natural language, get suggestions for optimizing existing queries, or even ask for an explanation of a query plan in simpler terms.

- Intuitive `EXPLAIN` Execution: Easily run `EXPLAIN` on your queries directly within the interface and view the results. (Future versions might even offer visual plan analysis!)

- Seamless Database Management: Connect to multiple databases, manage schemas, and execute queries with a user-friendly experience.

By integrating AI assistance, Chat2DB can help you apply the principles discussed in this article more effectively, identify bottlenecks faster, and ultimately write better, more performant SQL. It empowers both seasoned DBAs and developers new to SQL optimization to improve database efficiency.
