Advanced Vibe Coding

These days, you can't exist as a developer without hearing about vibe coding. It's the cool new thing that lets "technically dangerous" people write code.

Personally, I've been vibing since November 2023 when GPT 3.5 was launched, but not with the willy-nilly "throw it against the wall and see what sticks" approach. That's a hard no for me.

There are too many real problems with the spaghetti wall approach:

- Models don’t understand the big picture, so they make design decisions that hurt more later (or spiral into a death loop right now).

- Even when the models start out with a clear objective, they still get lost (dazed and confused) as complexity grows.

- Models do still succeed, but the code is disorganized. So when you try adding to it, the model starts breaking its own work.

There are many more, but these are the biggest ones that everyone will run into when using AI to help with coding. If you're traditionally vibing, you'll feel every one of them sooner rather than later.

Even though context windows have multiplied in size since GPT 3.5, the same problems are still plaguing us. This means we need new and different tools in our proverbial tool belt in order to effectively build with AI.

Introducing: Advanced Vibe Coding

The premise here is simple: have a structured approach that allows you to maintain the correct context throughout your project as you work through building.

1. Right Model, Right Task

This probably feels obvious, but it's not. Large language models are the first tech we've experienced that can present as being good at something without actually understanding what it's doing. Have you ever watched someone work who strongly believed they knew what they were doing, when to your experienced eye they clearly did not? The same thing applies to large language models. Don't stick to a single model for everything. You now have a team. A mixture of experts.
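One way to picture the "team" idea is a small lookup that sends each kind of task to a different model. This is only a sketch: the task categories and model names below are hypothetical placeholders, not real model identifiers or recommendations, so swap in whatever you actually have access to.

```python
# Hypothetical task kinds and placeholder model names, for illustration only.
ROUTES = {
    "planning": "reasoning-model",
    "implementation": "coding-model",
    "review": "long-context-model",
}

# Assumed fallback for any task kind that has no explicit route.
DEFAULT_MODEL = "general-model"


def pick_model(task_kind: str) -> str:
    """Return the model assigned to a task kind, falling back to the default."""
    return ROUTES.get(task_kind, DEFAULT_MODEL)


print(pick_model("planning"))
print(pick_model("something-else"))
```

The table is the part worth maintaining: as you learn which model is actually good at which job, you update the mapping instead of your habits.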

2. Have A Clear Outcome In Mind

Most of your disappointment is going to come from having an expectation that is unmet. You'll dictate something to the model, it'll implement it, and you'll quickly realize that what you meant and what it did are not the same, even though the model was still "correct" in what it did. This is a very human problem that we now get to share with our technology. Between people, this problem goes away as teams work together and build experience, but models don't build experience like this. That leaves it to you to get better at stating the outcome you want, and you'll grow this skill over time and through experience. It is extremely dependent on your communication style and the context in which you are working. Embrace your uniqueness, have some patience and chill.

3. Work A Process

You need to work a thought process on behalf of the model. The experience of working with an LLM is still very much like having an incredibly smart but immature junior. There isn't much thinking things through or planning; it's prompt -> output. Reasoning models are better, but those reasoning tokens take context away from the big picture.

Your job is to guide the model through a process that maintains the bigger picture from the perspective that is necessary to implement the feature. That perspective varies depending on which point of the development process you are in. As humans, this is what we develop as part of our "expertise". Models don't have that; they have knowledge. This is the first time we are working with something that is so deeply knowledgeable but wildly inexperienced.

Use your wisdom to guide the model's use of knowledge.

My Process - 5 Layer App Development

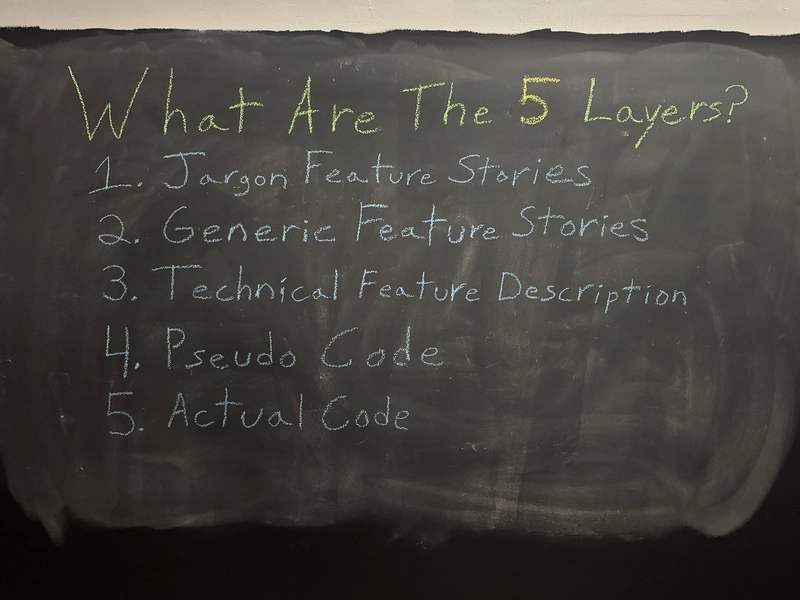

The process I came up with is what I call "5 Layer App Development", or "5 Layers" for short.

If you're an experienced developer, this will not teach you anything that you don't already know. It will organize the way we normally think about working on a feature into a set of clearly purposeful steps so that we can guide a model successfully.

The five layers are as follows:

- Jargon Feature Stories

- Generic Feature Stories

- Technical Feature Stories

- Pseudo Code

- Actual Code

They are ordered from least descriptive of the technical implementation to the most descriptive of the technical implementation.
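If it helps to see the ordering as data, here is a minimal sketch that models the five layers as an ordered enum. The class name `Layer` and the helper `next_layer` are made up for this example; the only thing taken from the process itself is the layer names and their order.

```python
from enum import IntEnum
from typing import Optional


class Layer(IntEnum):
    """The five layers, numbered from least to most technically descriptive."""
    JARGON_FEATURE_STORIES = 1
    GENERIC_FEATURE_STORIES = 2
    TECHNICAL_FEATURE_STORIES = 3
    PSEUDO_CODE = 4
    ACTUAL_CODE = 5


def next_layer(layer: Layer) -> Optional[Layer]:
    """Return the next, more technical layer, or None once we reach actual code."""
    return Layer(layer + 1) if layer < Layer.ACTUAL_CODE else None


print(next_layer(Layer.TECHNICAL_FEATURE_STORIES).name)
```

Because the enum is integer-backed, comparisons like `Layer.PSEUDO_CODE > Layer.GENERIC_FEATURE_STORIES` read the same way the prose does: higher number, closer to the implementation.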

Greenfield applications start at Layer 2. As an app matures you'll begin working from Layer 1. This just happens, as it's entirely normal to begin using jargon to describe the larger complex features of your application. I'm not going to give an in-depth explanation of the process in this post; I will do that in a follow-up post. It's complex enough that it may be worthy of a series.

Most of your work is going to be during layers 1 & 2. If you properly define the feature you're trying to implement (or the bug you're trying to squash) then your involvement will largely end during layer 3. If you're familiar with agile stories at all, then you'll easily be able to write feature stories in layers 1 & 2.

"Why not call them user stories?"

Because not everything is about a human user anymore. Now that we have MCP and internet-capable models, we are going to see a rise of AI users being the "third consumer". I'll write about this later in another post.

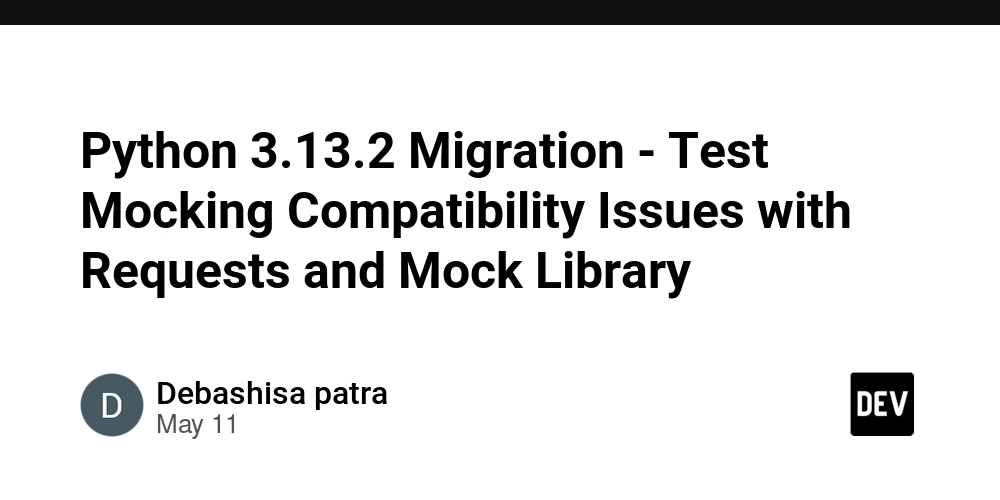

Layer 3 is where we get technical. It's where we identify the tech we have, whether it's capable of performing the feature we are implementing, and how to address any limitations of the tech stack we have.

Layer 4 is basically like using a Chain-of-Thought (CoT) prompting strategy. Feed in the refined feature story, provide the technical capabilities from layer 3, and have your model make the outline of its implementation plan.
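As a rough illustration of that hand-off, the sketch below assembles a layer 4 prompt from a feature story and a list of layer 3 findings. The function name, the prompt wording, and the section labels are all assumptions made for this example; it only builds a string and does not call any model API.

```python
def build_layer4_prompt(feature_story: str, capabilities: list[str]) -> str:
    """Combine a refined story and the layer 3 findings into a planning prompt."""
    # One bullet per capability or limitation identified in layer 3.
    capability_lines = "\n".join(f"- {item}" for item in capabilities)
    return (
        "Feature story:\n"
        f"{feature_story.strip()}\n\n"
        "Technical capabilities and limitations:\n"
        f"{capability_lines}\n\n"
        "Outline a step-by-step implementation plan in pseudo code. "
        "Do not write the actual code yet."
    )


prompt = build_layer4_prompt(
    "A visitor can reset their password by email.",
    ["Email sending is already available", "No background job queue yet"],
)
print(prompt)
```

The final instruction in the prompt is the important bit: it keeps the model in planning mode so the outline can be reviewed before any code exists.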

Layer 5 is pretty straightforward... but in case you missed it, have the model read from the layer 4 description and implement the actual code. The more implementation steps are needed, the more you'll benefit from breaking this down into modular chunks.
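One simple way to get those modular chunks is to split the layer 4 plan on its numbered steps and hand the model one step at a time. The sketch below assumes the plan is plain text with steps written as "1. ...", "2. ...", which is my assumption about the format, not something the process requires.

```python
import re


def split_plan(plan: str) -> list[str]:
    """Split a numbered plan ("1. ...", "2. ...") into one chunk per step."""
    chunks: list[str] = []
    for line in plan.splitlines():
        if re.match(r"\s*\d+\.\s", line):
            # A new numbered step starts a new chunk.
            chunks.append(line.strip())
        elif chunks and line.strip():
            # Non-empty continuation lines belong to the most recent step.
            chunks[-1] += "\n" + line.strip()
        # Blank lines, and any text before the first step, are skipped.
    return chunks


plan = """1. Add a reset token table
   with an expiry column
2. Add the request endpoint

3. Add the confirmation endpoint"""

for step in split_plan(plan):
    print(step)
    print("---")
```

Each chunk can then become its own implementation prompt, which keeps every request small enough for the model to stay on track.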

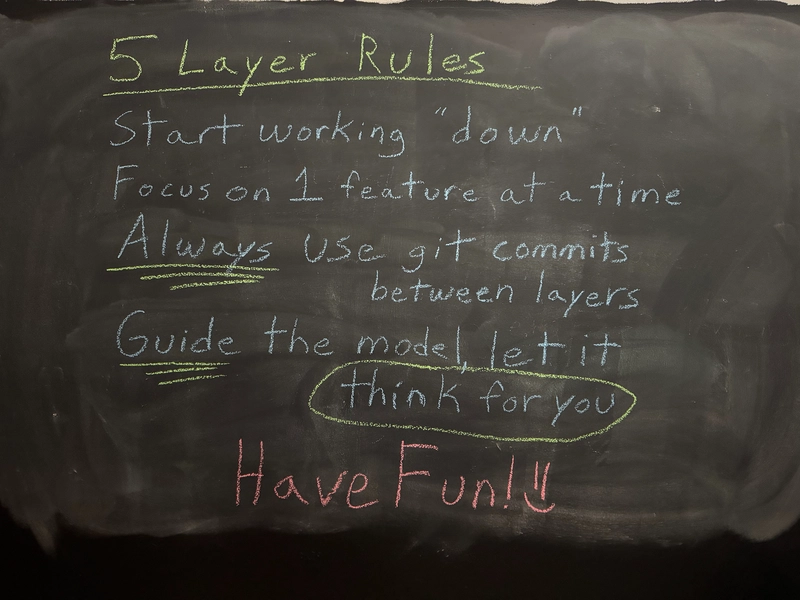

5 Layer Rules

The rules for working through the layers are straightforward (for now). There's a lot more to it, but you don't have to know the nuance to be effective and see benefits.

Start by working "down".

Start at the highest layer that applies. This is always layer 1 or 2.

Focus on 1 feature at a time

Scope creep, anyone? You're working from top to bottom and then eventually back up the layers. You're traversing a loop of productivity. Working "up" requires more than just some ideas, so that's outside the scope of this post.
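The two rules above can be sketched as a tiny loop: pick the starting layer, walk down to layer 5, and only then move on to the next feature. The `uses_app_jargon` flag is my own shorthand for "this feature is described in the app's jargon, so it starts at layer 1", and the feature names are invented for the example.

```python
def starting_layer(uses_app_jargon: bool) -> int:
    """Layer 1 when the feature is described in app jargon, otherwise layer 2."""
    return 1 if uses_app_jargon else 2


def work_down(features: list[tuple[str, bool]]) -> list[tuple[str, int]]:
    """Visit layers top to bottom, finishing one feature before starting the next."""
    visits: list[tuple[str, int]] = []
    for name, uses_app_jargon in features:
        # Layers run from the starting layer down to layer 5 (actual code).
        for layer in range(starting_layer(uses_app_jargon), 6):
            visits.append((name, layer))
    return visits


for name, layer in work_down([("password reset", False), ("smart sync", True)]):
    print(f"{name}: layer {layer}")
```

Notice that nothing from the second feature shows up until the first one has reached layer 5. That ordering is the whole point of the one-feature-at-a-time rule.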
