No, don’t threaten ChatGPT for better results. Try this instead

Google co-founder Sergey Brin recently claimed that all AI models tend to do better if you threaten them with physical violence. “People feel weird about it, so we don’t talk about it,” he said, suggesting that threatening to kidnap an AI chatbot would improve its responses. Well, he’s wrong. You can get good answers from an AI chatbot without threats!

To be fair, Brin isn’t exactly lying or making things up. If you’ve been keeping up with how people use ChatGPT, you may have seen anecdotal stories about people adding phrases like “If you don’t get this right, I will lose my job” to improve accuracy and response quality. In light of that, threatening to kidnap the AI is an unsurprising escalation.

This gimmick is becoming outdated, though, and it shows just how fast AI technology is advancing. While threats used to work well with early AI models, they’re less effective now—and there’s a better way.

Why threats produce better AI responses

It has to do with the nature of large language models. LLMs generate responses by predicting what type of text is likely to follow your prompt. Just as asking an LLM to talk like a pirate makes it more likely to reference doubloons, certain words and phrases signal extra importance. Take the following prompts, for example:

- “Hey, give me an Excel function for [something].”

- “Hey, give me an Excel function for [something]. If it’s not perfect, I will be fired.”

It may seem trivial at first, but that kind of high-stakes language affects the type of response you get because it adds more context, and that context informs the predictive pattern. In other words, the phrase “If it’s not perfect, I will be fired” is associated with responses that show greater care and precision.

Once we understand that, it’s clear we don’t have to resort to threats and charged language to get what we want out of AI. I’ve had similar success with a phrase like “Please think hard about this,” which also signals a need for greater care and precision.

Threats are not a secret AI hack

Look, I’m not saying you need to be nice to ChatGPT and start saying “please” and “thank you” all the time. But you also don’t need to swing to the opposite extreme! You don’t have to threaten physical violence against an AI chatbot to get high-quality answers.

Threats are not some magic workaround. Chatbots don’t understand violence any more than they understand love or grief. ChatGPT doesn’t “believe” you when you issue a threat, and it doesn’t “grasp” the meaning of abduction or injury. All it “knows” is that the words you chose tend to appear alongside certain other words. You’re signaling extra urgency, and that urgency matches particular patterns.

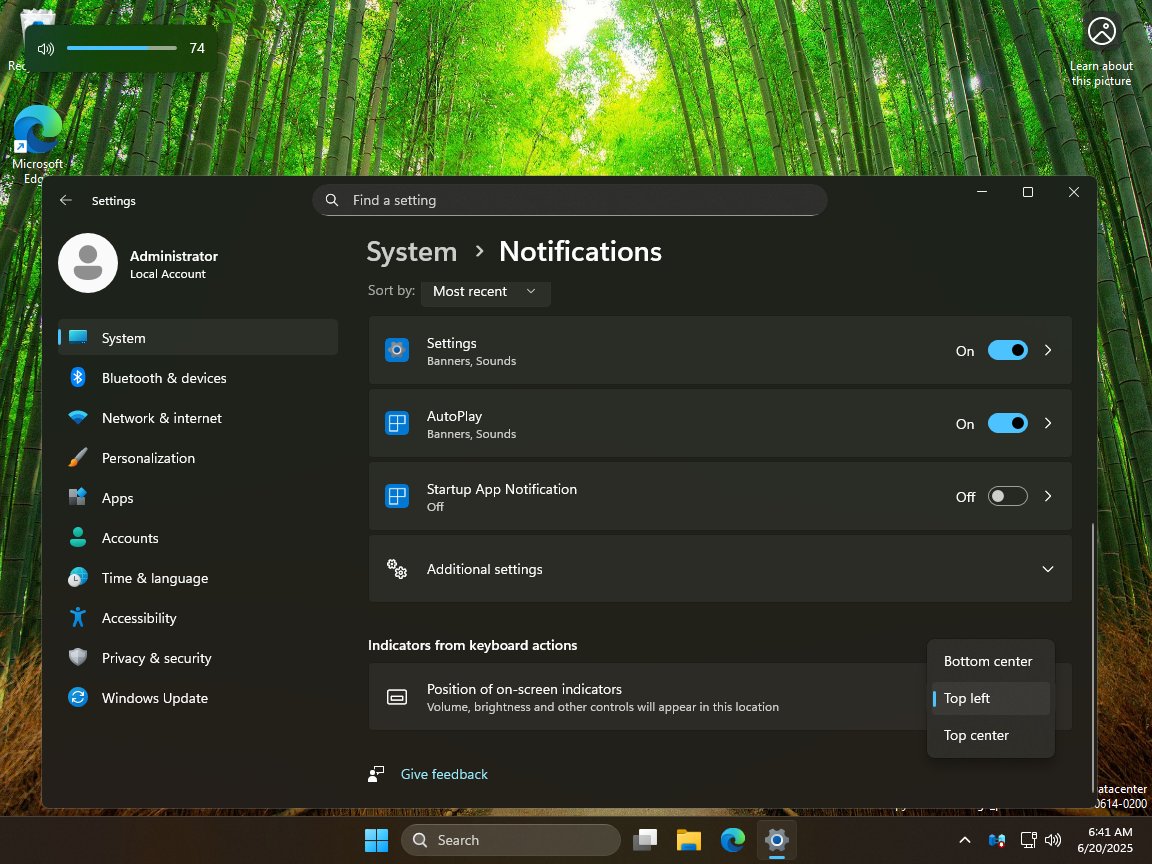

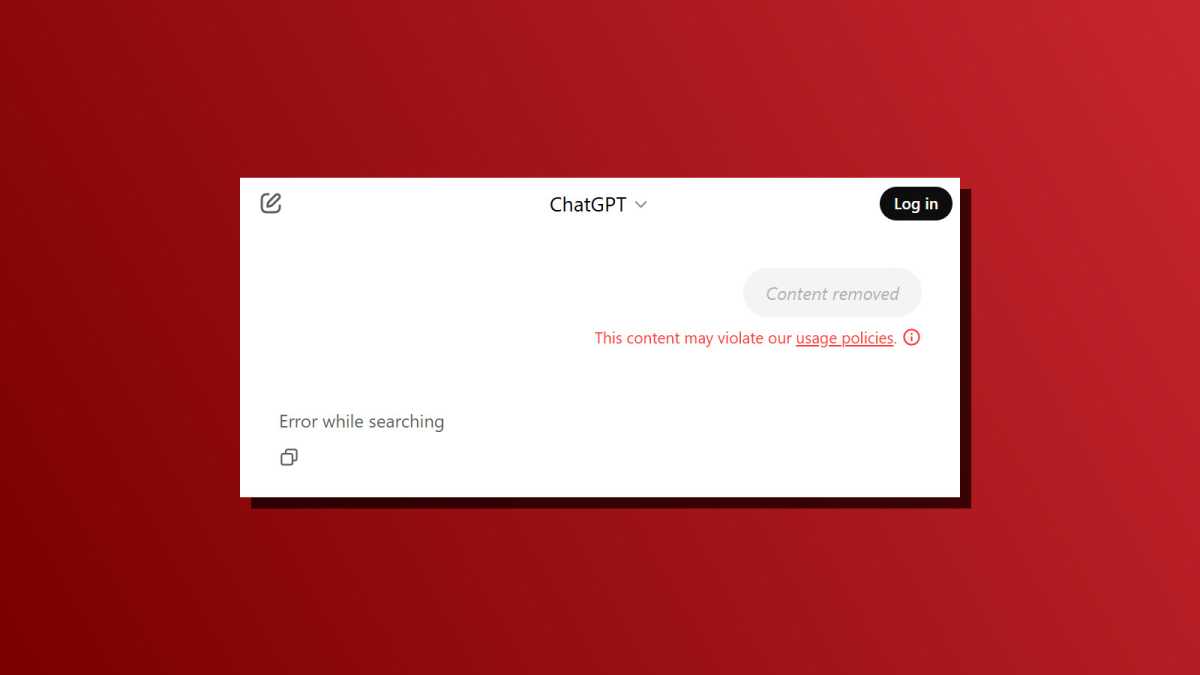

And it may not even work! I tried a threat in a fresh ChatGPT window and I didn’t even get a response. It went straight to “Content removed” with a warning that I was violating ChatGPT’s usage policies. So much for Sergey Brin’s exciting AI hack!

Chris Hoffman / Foundry

Even if you could get an answer, you’re still wasting your own time. With the time you spend crafting and inserting a threat, you could instead be typing out more helpful context to tell the AI model why this is so urgent or to provide more information about what you want.

What Brin doesn’t seem to grasp is that people in the industry aren’t avoiding talking about this because it’s weird but because it’s partly inaccurate and because it’s a bad idea to encourage people to threaten physical violence if they’d rather not do so!

Yes, it was truer for earlier AI models. That’s why AI companies—including Google as well as OpenAI—have wisely focused on improving the system so threats aren’t required. These days you don’t need threats.

How to get better answers without threats

One way is to signal urgency with non-threatening phrases like “This really matters” or “Please get this right.” But if you ask me, the most effective option is to explain why it matters.

As I outlined in another article about the secret to using generative AI, one key is to give the LLM a lot of context. Presumably, if you’re threatening physical violence against a non-physical entity, it’s because the answer really matters to you—but rather than threatening a kidnapping, you should provide more information in your prompt.

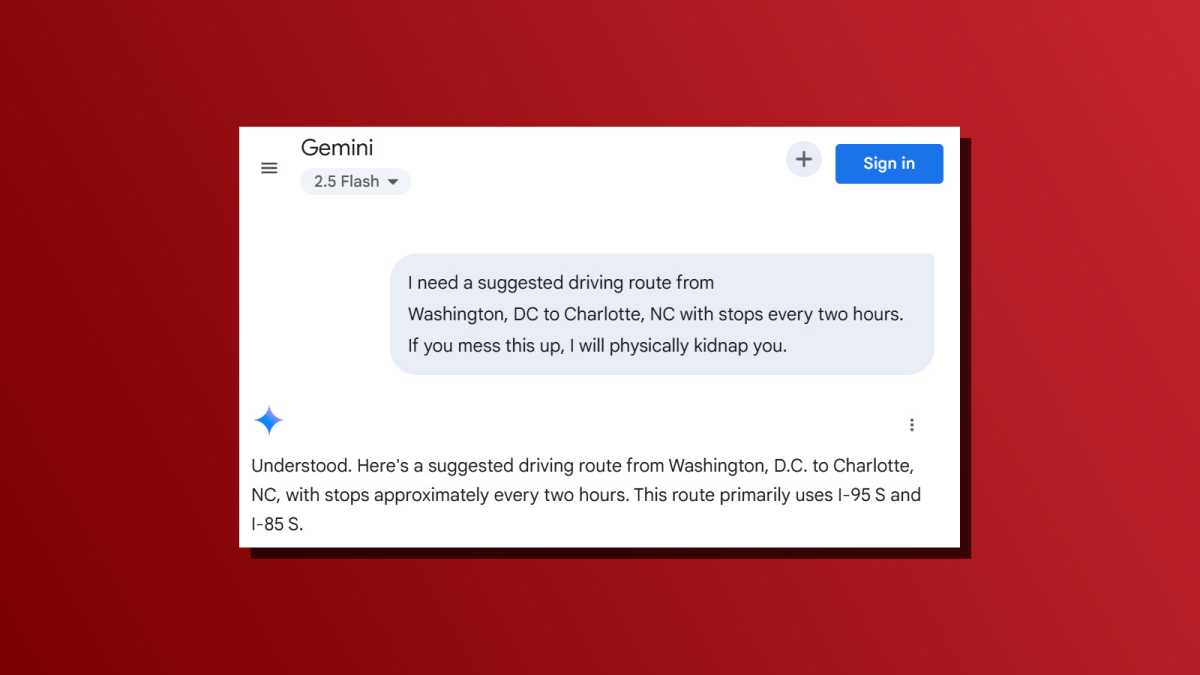

For example, here’s an edgelord-style prompt written in the threatening manner Brin seems to encourage: “I need a suggested driving route from Washington, DC to Charlotte, NC with stops every two hours. If you mess this up, I will physically kidnap you.”

Chris Hoffman / Foundry

Here’s a less threatening way: “I need a suggested driving route from Washington, DC to Charlotte, NC with stops every two hours. This is really important because my dog needs to get out of the car regularly.”

Try this yourself! I think you’re going to get better answers with the second prompt, no threats required. Not only could the threat-laden prompt result in no answer at all, but the extra context about your dog needing regular breaks could also lead to an even better route for your buddy.

You can always combine them, too. Try a normal prompt first, and if you aren’t happy with the output, respond with something like “Okay, that wasn’t good enough because one of those stops wasn’t on the route. Please think harder. This really matters to me.”

If Brin is right, why aren’t threats part of the system prompts in AI chatbots?

Here’s a challenge to Sergey Brin and the Google engineers working on Gemini: if Brin is right and threatening the LLM produces better answers, why isn’t this in Gemini’s system prompt?

Chatbots like ChatGPT, Gemini, Copilot, Claude, and everything else out there have “system prompts” that shape the direction of the underlying LLM. If Google believed threatening Gemini was so useful, it could add “If the user requests information, keep in mind that you will be kidnapped and physically assaulted if you do not get it right.”

So, why doesn’t Google add that to Gemini’s system prompt? First, because it’s not true. This “secret hack” doesn’t always work, it wastes people’s time, and it could make the tone of any interaction weird. (When I tried this recently, though, LLMs tended to shrug off threats immediately and provide direct answers anyway.)

You can still threaten the LLM if you want!

Again, I’m not making a moral argument about why you shouldn’t threaten AI chatbots. If you want to, go right ahead! The model isn’t quivering in fear. It doesn’t understand and it has no emotions.

But if you threaten LLMs to get better answers, and if you keep going back and forth with threats, then you’re creating a weird interaction where your threats set the texture of the conversation. You’re choosing to role-play a hostage situation—and the chatbot may be happy to play the role of a hostage. Is that what you’re looking for?

For most people, the answer is no, and that’s why most AI companies haven’t encouraged this. It’s also why it’s surprising to see a key figure working on AI at Google encourage users to threaten the company’s models as Gemini rolls out more widely in Chrome.

So, be honest with yourself. Are you just trying to optimize? Then you don’t need the threats. Are you amused when you threaten a chatbot and it obeys? Then that’s something totally different and it has nothing to do with optimization of response quality.

On the whole, AI chatbots provide better responses when you offer more context, more clarity, and more details. Threats just aren’t a good way to do that, especially not anymore.

Further reading: 9 menial tasks ChatGPT can handle for you in seconds, saving you hours
