From Hugging Face to Production: Deploying Segment Anything (SAM) with Jozu's Model Import Feature

In the rapidly growing field of computer vision, moving state-of-the-art models from research into production can be difficult. Models like the Segment Anything Model (SAM) from Meta offer remarkable capabilities, but they come with complexities that get in the way of seamless integration. Jozu is an MLOps platform designed to streamline this integration with its new features. It simplifies the deployment process, enabling teams to bring models like SAM into production with fewer problems and minimal friction.

Exploring Segment Anything Model (SAM)

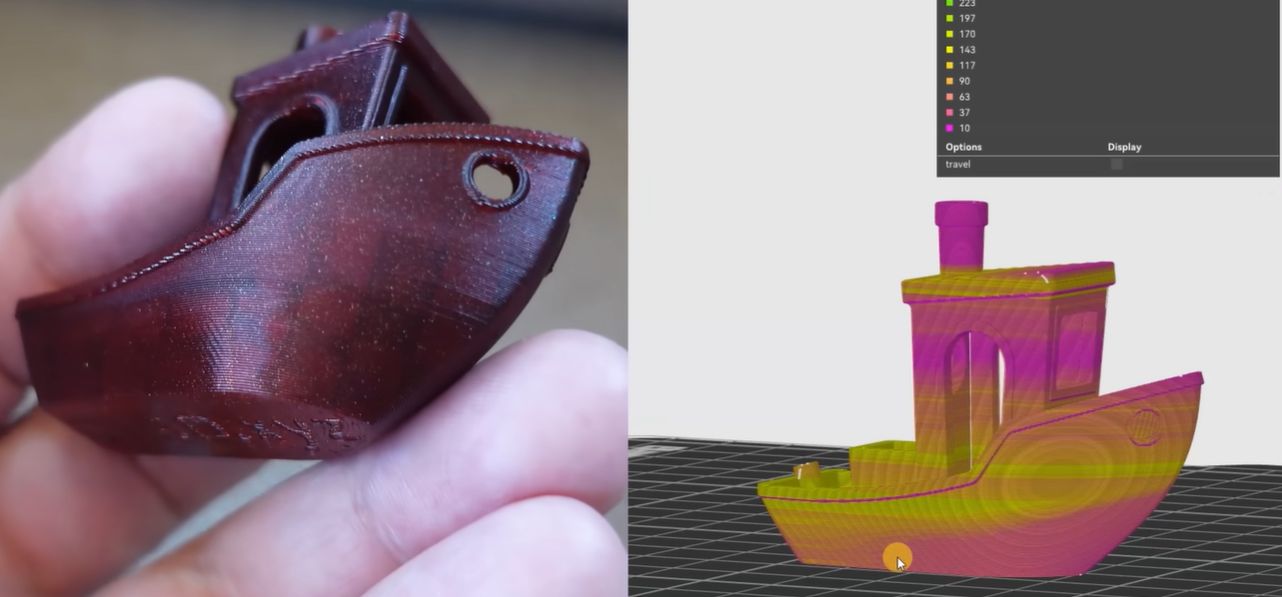

The Segment Anything Model (SAM), developed by Meta AI, represents a significant advancement in image segmentation. Trained on a dataset of over 11 million images and 1.1 billion masks, SAM excels at generating high-quality object masks from input prompts such as points or boxes. Its architecture consists of three main components: an image encoder, a prompt encoder, and a mask decoder, which work together to produce precise segmentation results.
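
To make the prompt-driven workflow concrete, here is a minimal sketch of segmenting the object under a single point prompt. It assumes the Hugging Face `transformers` implementation of SAM (`SamModel` and `SamProcessor`) and the `facebook/sam-vit-base` checkpoint. The article does not prescribe either choice, and the helper names `point_prompt` and `segment_at_point` are hypothetical. It does not use Jozu's import feature.

```python
from typing import Any, List


def point_prompt(x: float, y: float) -> List[List[List[float]]]:
    """Wrap one (x, y) pixel coordinate as [image][point][xy], the nesting
    the Hugging Face SAM processor accepts for one point on one image."""
    return [[[float(x), float(y)]]]


def segment_at_point(image: Any, x: float, y: float,
                     checkpoint: str = "facebook/sam-vit-base"):
    """Return (masks, iou_scores) for the object under pixel (x, y).

    `image` is expected to be a PIL image. The checkpoint name is an
    assumption; any SAM checkpoint compatible with SamModel should work.
    """
    # Imported lazily so point_prompt() stays usable without torch installed.
    import torch
    from transformers import SamModel, SamProcessor

    processor = SamProcessor.from_pretrained(checkpoint)
    model = SamModel.from_pretrained(checkpoint)
    model.eval()

    # The processor resizes the image and rescales the point to match it.
    inputs = processor(image, input_points=point_prompt(x, y),
                       return_tensors="pt")

    # One forward pass runs the image encoder, prompt encoder, and mask decoder.
    with torch.no_grad():
        outputs = model(**inputs)

    # Upscale the low-resolution mask predictions to the original image size.
    masks = processor.image_processor.post_process_masks(
        outputs.pred_masks.cpu(),
        inputs["original_sizes"].cpu(),
        inputs["reshaped_input_sizes"].cpu(),
    )
    return masks, outputs.iou_scores
```

Calling `segment_at_point(img, 450, 600)` on a PIL image downloads the checkpoint on first use. It returns candidate masks along with the model's predicted IoU score for each, which can be used to pick the best mask.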

One of SAM's distinctive features is its zero-shot performance, which allows it to generalize across segmentation tasks without additional training. This flexibility makes it a useful tool for applications ranging from medical imaging to autonomous vehicles. Despite these capabilities, integrating SAM into production environments can be challenging because of its deployment complexities. This is where Jozu's features come in handy, providing a streamlined pathway to using SAM effectively.
