Beyond the Hype Part 2: Enter Google's A2A Protocol - Complementing MCP for Agent Collaboration

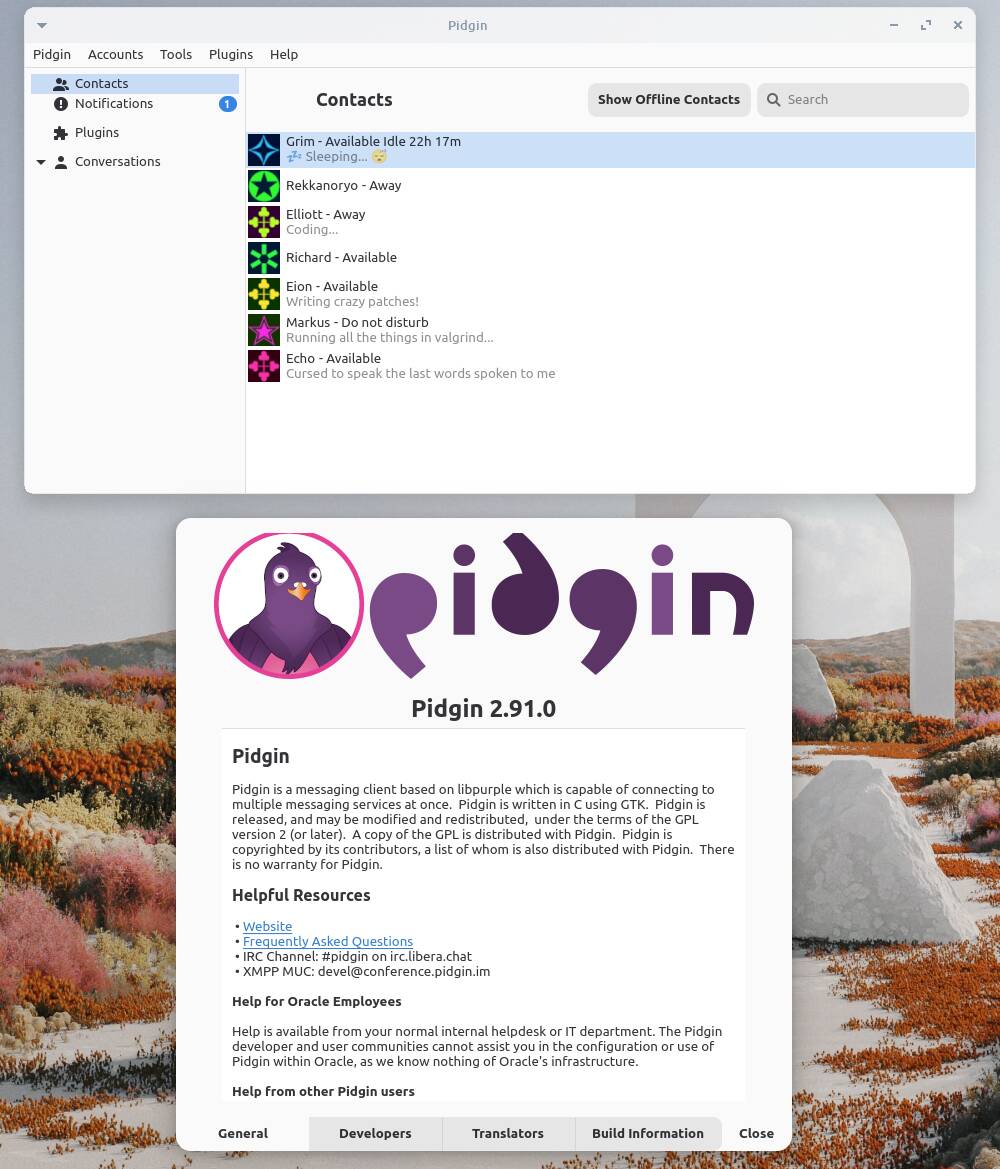

(Originally published: April 12, 2025)

In our previous article, "Beyond the Hype: Understanding the Limitations of Anthropic's Model Context Protocol for Tool Calling", we explored Anthropic's MCP – a commendable effort to standardize how AI agents interact with tools, drawing parallels to USB for hardware. We discussed its potential limitations, including stateful communication challenges, potential context window issues in large deployments, and its indirect nature for LLM-tool interaction. MCP aimed to solve the "M×N" tool integration problem, but these challenges highlighted the need for further evolution or complementary approaches.

Enter Google's Agent2Agent (A2A) protocol, announced in April 2025. Google explicitly positions A2A not as a replacement for MCP, but as a complementary standard addressing a different, yet equally critical, layer of the AI puzzle: direct communication between autonomous AI agents.

Google argues that building sophisticated agentic systems requires two distinct layers:

- Tool & Data Integration: How an agent accesses external capabilities (MCP's focus).

- Inter-Agent Communication & Coordination: How multiple agents work together (A2A's focus).

This article dives into Google's A2A protocol, exploring how it facilitates agent collaboration, how its design differs from MCP, and how these two protocols can work synergistically to build more powerful AI systems.

What is Google's Agent2Agent (A2A) Protocol?

At its core, A2A is an open protocol designed to standardize how independent AI agents discover, communicate, securely exchange information, and coordinate actions across various services and systems. It provides the "social rules" for agents to interact effectively.

Key concepts underpin A2A:

1. Discovering Agents: The Agent Card

- Think of this as an agent's business card or public profile. It's a standardized metadata file (typically at `/.well-known/agent.json`) advertising an agent's capabilities, skills, communication endpoint (URL), and required authentication. This allows agents (acting as clients) to find other agents that can perform specific tasks.
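To make the Agent Card idea concrete, here is a minimal sketch of how a client might read a card and check for a skill. The card content, the `supplier.example.com` URL, and the exact field names (`skills`, `authentication`, `id`) are illustrative assumptions, not quoted from the A2A specification.

```python
import json
from typing import Any, Dict, Optional

# Hypothetical Agent Card, as it might be served from /.well-known/agent.json.
# Field names are assumptions for illustration; check the spec for the real schema.
AGENT_CARD_JSON = """
{
  "name": "Parts Supplier Agent",
  "url": "https://supplier.example.com/a2a",
  "authentication": {"schemes": ["bearer"]},
  "skills": [
    {"id": "order-part", "description": "Place an order for a car part"},
    {"id": "check-stock", "description": "Check stock for a part number"}
  ]
}
"""


def find_skill(card: Dict[str, Any], skill_id: str) -> Optional[Dict[str, Any]]:
    """Return the skill entry with the given id, or None if the agent lacks it."""
    for skill in card.get("skills", []):
        if skill.get("id") == skill_id:
            return skill
    return None


card = json.loads(AGENT_CARD_JSON)
skill = find_skill(card, "order-part")
if skill is not None:
    print(f"{card['name']} at {card['url']} offers: {skill['description']}")
```

A client would typically fetch the card over HTTP first; the parsing and lookup step stays the same.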

2. Talking to Agents: A2A Servers and A2A Clients

- An A2A Server is simply an AI agent that exposes an HTTP endpoint and understands the A2A protocol's methods.

- An A2A Client is any application or agent that wants to interact with an A2A Server by sending requests to its advertised URL.
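The server side can be pictured as a small dispatcher that maps protocol method names to handlers. The sketch below leaves out the HTTP layer and uses a hypothetical `handle_send` handler; the shape of the result object is an assumption, while the error code `-32601` is the standard JSON-RPC 2.0 "Method not found" code.

```python
from typing import Any, Callable, Dict

Handler = Callable[[Dict[str, Any]], Dict[str, Any]]


def handle_send(params: Dict[str, Any]) -> Dict[str, Any]:
    """Hypothetical handler: accept the task and report it as submitted."""
    return {"id": params.get("id"), "status": {"state": "submitted"}}


# Only one method is wired up here; a real server would register more.
HANDLERS: Dict[str, Handler] = {"tasks/send": handle_send}


def dispatch(request: Dict[str, Any]) -> Dict[str, Any]:
    """Route a decoded JSON-RPC request to its handler and wrap the reply."""
    handler = HANDLERS.get(request.get("method", ""))
    if handler is None:
        return {
            "jsonrpc": "2.0",
            "id": request.get("id"),
            "error": {"code": -32601, "message": "Method not found"},
        }
    return {
        "jsonrpc": "2.0",
        "id": request.get("id"),
        "result": handler(request.get("params", {})),
    }


ok = dispatch({"jsonrpc": "2.0", "id": 1, "method": "tasks/send", "params": {"id": "task-1"}})
bad = dispatch({"jsonrpc": "2.0", "id": 2, "method": "tasks/unknown"})
print(ok["result"]["status"]["state"], bad["error"]["code"])
```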

3. Getting Things Done: Tasks

- The fundamental unit of work. A client initiates a `Task` by sending a message.

- There are methods for quick requests (`tasks/send`) and long-running jobs (`tasks/sendSubscribe`).

- Tasks have a defined lifecycle (e.g., `submitted`, `working`, `input-required`, `completed`, `failed`), providing clear status tracking.
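The task flow above can be sketched as a request builder plus a small decision function over the lifecycle states. The `params` layout (a client-generated task `id` and a `message` with `parts`) is an assumption for illustration, and only the five states named above are handled.

```python
import uuid
from typing import Any, Dict

# States after which the client can stop tracking the task.
# Only the states named in this article are covered; a spec may define more.
TERMINAL_STATES = {"completed", "failed"}


def build_task_request(text: str, long_running: bool = False) -> Dict[str, Any]:
    """Build a JSON-RPC request that starts a task with a single text message."""
    method = "tasks/sendSubscribe" if long_running else "tasks/send"
    return {
        "jsonrpc": "2.0",
        "id": str(uuid.uuid4()),  # JSON-RPC request id
        "method": method,
        "params": {
            "id": str(uuid.uuid4()),  # task id (assumed to be chosen by the client)
            "message": {"role": "user", "parts": [{"type": "text", "text": text}]},
        },
    }


def next_action(state: str) -> str:
    """Decide what a client does after seeing a task state."""
    if state in TERMINAL_STATES:
        return "stop"
    if state == "input-required":
        return "ask_user"
    return "wait"  # submitted or working


request = build_task_request("Order one alternator", long_running=True)
print(request["method"], next_action("working"), next_action("input-required"))
```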

4. The Conversation: Messages and Parts

- Interactions happen through `Messages`, each with a role ("user" for the initiator, "agent" for the responder).

- Messages contain `Parts`, which are the basic units of content. A2A supports various types:

  - `TextPart`: Plain text.

  - `FilePart`: Files (inline bytes or via URI).

  - `DataPart`: Structured JSON data (e.g., forms).

- This multi-modal capability allows agents to exchange rich information beyond simple text.
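As a rough illustration of a multi-part message, the helpers below build one message that mixes text, an inline file, and structured data. The dictionary keys (`type`, `mimeType`, `bytes`, `data`) and the base64 encoding of inline bytes are assumptions about the wire format, not a verified schema.

```python
import base64
from typing import Any, Dict, List


def text_part(text: str) -> Dict[str, Any]:
    return {"type": "text", "text": text}


def inline_file_part(name: str, mime_type: str, content: bytes) -> Dict[str, Any]:
    # Inline bytes are base64-encoded so they survive JSON serialization.
    encoded = base64.b64encode(content).decode("ascii")
    return {"type": "file", "file": {"name": name, "mimeType": mime_type, "bytes": encoded}}


def data_part(data: Dict[str, Any]) -> Dict[str, Any]:
    return {"type": "data", "data": data}


def make_message(role: str, parts: List[Dict[str, Any]]) -> Dict[str, Any]:
    """Assemble a message; the two roles are the ones described in the text."""
    if role not in ("user", "agent"):
        raise ValueError(f"unexpected role: {role}")
    return {"role": role, "parts": parts}


message = make_message(
    "user",
    [
        text_part("Please order this part."),
        inline_file_part("note.txt", "text/plain", b"hi"),
        data_part({"part": "alternator", "quantity": 1}),
    ],
)
print([part["type"] for part in message["parts"]])
```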

5. The Results: Artifacts

- The output generated by an agent performing a task is called an `Artifact`. Like messages, artifacts can contain multiple `Parts` of different types.
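A client usually has to pick the parts it understands out of an artifact. This short sketch, which assumes the same hypothetical `type`/`text`/`data` keys for parts, collects the text of an artifact and skips everything else.

```python
from typing import Any, Dict


def artifact_text(artifact: Dict[str, Any]) -> str:
    """Join the text of all text parts, ignoring file and data parts."""
    return "\n".join(
        part["text"] for part in artifact.get("parts", []) if part.get("type") == "text"
    )


# Hypothetical artifact returned by a supplier agent.
artifact = {
    "name": "order-confirmation",
    "parts": [
        {"type": "text", "text": "Order accepted."},
        {"type": "data", "data": {"order_id": "A-1001"}},
        {"type": "text", "text": "Delivery in 2 days."},
    ],
}
print(artifact_text(artifact))
```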

6. Handling Long Jobs: Streaming and Push Notifications

- For tasks that take time, A2A uses Server-Sent Events (SSE) via the `tasks/sendSubscribe` method. This allows the server agent to push real-time status updates (`TaskStatusUpdateEvent`) or intermediate results (`TaskArtifactUpdateEvent`) back to the client.

- A2A also supports Push Notifications, where a server can proactively send updates to a client-specified webhook URL (configured via `tasks/pushNotification/set`), avoiding the need for constant polling.
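To show what consuming such a stream involves, the sketch below parses SSE `data:` lines and sorts events into status updates and artifact updates. The sample stream is invented, and the payloads are simplified: a real stream would likely wrap each event in a JSON-RPC response, and the `status`/`artifact` keys are assumptions.

```python
import json
from typing import Any, Dict, Iterable, Iterator, List, Tuple

# Invented sample of what a tasks/sendSubscribe stream might carry.
SAMPLE_STREAM = [
    'data: {"id": "task-1", "status": {"state": "working"}, "final": false}',
    "",
    'data: {"id": "task-1", "artifact": {"parts": [{"type": "text", "text": "Draft report"}]}}',
    "",
    'data: {"id": "task-1", "status": {"state": "completed"}, "final": true}',
    "",
]


def iter_sse_data(lines: Iterable[str]) -> Iterator[Dict[str, Any]]:
    """Yield the JSON payload of each SSE event; a blank line ends an event."""
    buffer: List[str] = []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line.startswith("data:"):
            value = line[len("data:"):]
            # SSE strips a single leading space after the colon.
            buffer.append(value[1:] if value.startswith(" ") else value)
        elif line == "" and buffer:
            yield json.loads("\n".join(buffer))
            buffer = []


def classify(event: Dict[str, Any]) -> Tuple[str, str]:
    """Label an event as a status update or an artifact update."""
    if "status" in event:
        return ("status", event["status"]["state"])
    if "artifact" in event:
        texts = [p["text"] for p in event["artifact"]["parts"] if p.get("type") == "text"]
        return ("artifact", " ".join(texts))
    return ("unknown", "")


events = [classify(event) for event in iter_sse_data(SAMPLE_STREAM)]
print(events)
```

With push notifications, the same payloads would arrive as webhook calls instead of a held-open stream, so the classification step could be reused.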

7. Under the Hood: The Tech Stack

- A2A builds on familiar web standards:

- HTTP: The transport layer.

- JSON-RPC 2.0: For structured request/response messages.

- Server-Sent Events (SSE): For real-time streaming updates.
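The three layers fit together roughly as follows: a JSON-RPC body, carried in an HTTP POST, with an `Accept` header asking for an event stream when updates are wanted. This sketch only builds the request object and does not send it; the `agent.example.com` URL is a placeholder, and using the `Accept: text/event-stream` header for streaming is an assumption based on common SSE practice.

```python
import json
import urllib.request
from typing import Any, Dict


def build_http_request(
    url: str, payload: Dict[str, Any], stream: bool = False
) -> urllib.request.Request:
    """Wrap a JSON-RPC payload in an HTTP POST request (not sent here)."""
    headers = {"Content-Type": "application/json"}
    if stream:
        # Ask for Server-Sent Events when the caller wants streaming updates.
        headers["Accept"] = "text/event-stream"
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


payload = {"jsonrpc": "2.0", "id": 1, "method": "tasks/sendSubscribe", "params": {}}
req = build_http_request("https://agent.example.com/a2a", payload, stream=True)
print(req.get_method(), req.full_url, req.get_header("Accept"))
```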

How A2A Complements (and Differs From) MCP

Understanding the differences clarifies why Google sees A2A and MCP as complementary:

1. Primary Focus:

- MCP: Agent-to-Tool/Data communication. Standardizing how one agent uses external resources.

- A2A: Agent-to-Agent communication. Standardizing how multiple agents collaborate.

2. Communication & State Management:

- MCP: Relies on stateful SSE sessions, potentially complicating integration with stateless REST APIs and impacting server scalability as context must be maintained per client.

- A2A: Uses standard, generally stateless HTTP requests (POST) to initiate tasks. While SSE is used for streaming updates within a long-running task (introducing state for that specific stream), the fundamental interaction model is more task-centric and aligns better with REST principles. This task-based state management might be more scalable for managing numerous inter-agent interactions compared to MCP's session-based state.
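One way to picture task-based state is a store keyed by task id rather than by connection. In the sketch below, `TaskStore` is a hypothetical in-memory stand-in; the point is only that each request names its task, so any worker with access to the store could serve it without a long-lived session.

```python
from typing import Any, Dict


class TaskStore:
    """Hypothetical in-memory store; state is keyed by task id, not by connection."""

    def __init__(self) -> None:
        self._tasks: Dict[str, Dict[str, Any]] = {}

    def send(self, task_id: str, text: str) -> Dict[str, Any]:
        """Handle one independent request that names the task it belongs to."""
        task = self._tasks.setdefault(task_id, {"state": "submitted", "history": []})
        task["history"].append(text)
        task["state"] = "working"
        return {"id": task_id, "state": task["state"], "turns": len(task["history"])}


store = TaskStore()
first = store.send("task-1", "Find a replacement alternator")
other = store.send("task-2", "Schedule an interview")
second = store.send("task-1", "Prefer next-day delivery")
print(first["turns"], other["turns"], second["turns"])
```

In a real deployment the dictionary would be replaced by shared storage; that choice is outside what the protocol itself prescribes.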

3. Tool Integration:

- MCP: Directly defines how tools are described and invoked. Tool integration is its core purpose.

- A2A: Does not directly specify tool integration. It assumes agents communicating via A2A already have ways to access tools – potentially using MCP, direct API calls, or other internal mechanisms. Google's Agent Development Kit (ADK), for instance, supports building agents that can use MCP for tools and A2A for communication.

4. Context Window Concerns (Indirect Benefit):

- MCP: Integrating many tools via MCP could potentially overload an LLM's context window, as each connection adds overhead.

- A2A: By facilitating communication between specialized agents, A2A enables distributed architectures. Instead of one monolithic agent juggling numerous MCP tools, you could have multiple focused agents collaborating via A2A. Each agent manages its own context, potentially reducing the load on any single LLM. A2A doesn't solve MCP's context issue directly, but promotes patterns that mitigate it.
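A back-of-the-envelope calculation shows why splitting tools across agents can help. The numbers here (300 tokens per tool description, 40 tools, 4 agents) are purely hypothetical and only illustrate the shape of the trade-off.

```python
# Hypothetical figure: tokens of context consumed by one tool description.
TOKENS_PER_TOOL = 300


def tool_overhead(tool_count: int, tokens_per_tool: int = TOKENS_PER_TOOL) -> int:
    """Context tokens spent on tool descriptions for one agent."""
    return tool_count * tokens_per_tool


total_tools = 40
agent_count = 4

monolith = tool_overhead(total_tools)                    # one agent holds every tool
per_specialist = tool_overhead(total_tools // agent_count)  # tools split evenly

print(f"monolithic agent: {monolith} tokens")
print(f"each specialist:  {per_specialist} tokens")
```

The total across all specialists is unchanged; what shrinks is the load on any single model's context window.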

Putting It Together: Synergistic Use Cases

The real power emerges when MCP and A2A work together. MCP equips individual agents with skills; A2A lets them function as a team.

- Example: Car Repair Shop

  - A `Customer` interacts with a `Shop Employee Agent` (via A2A).

  - `Shop Employee Agent` uses MCP to:

    - Run diagnostics (`Tool: Engine Diagnostics`).

    - Check inventory (`Resource: Parts Database`).

  - If a part is needed, `Shop Employee Agent` uses A2A to communicate with a `Parts Supplier Agent` to place an order.

- Example: Multi-Stage Hiring

  - A `Recruiter Agent` coordinates (via A2A) with:

    - `Candidate Sourcing Agent`: Uses MCP to query job boards/databases.

    - `Interview Scheduling Agent`: Uses MCP to access calendar APIs.

  - A2A manages the workflow: sourcing agent finds candidates -> notifies scheduling agent -> scheduling agent confirms -> notifies recruiter agent.

These examples show MCP handling the "how" (tool use) for individual agents, while A2A handles the "who" and "when" (collaboration) between agents.
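The car repair flow can be sketched end to end with plain functions standing in for both layers. Everything here is hypothetical: `run_diagnostics` and `check_inventory` stand in for MCP tool and resource calls, `supplier_agent` stands in for a remote A2A server, and the message layout is an assumed one.

```python
from typing import Any, Dict

INVENTORY = {"alternator": 0, "brake pad": 4}  # invented stock levels


def run_diagnostics(car_id: str) -> Dict[str, str]:
    """Stand-in for an MCP tool call (Engine Diagnostics)."""
    return {"car_id": car_id, "faulty_part": "alternator"}


def check_inventory(part: str) -> int:
    """Stand-in for an MCP resource lookup (Parts Database)."""
    return INVENTORY.get(part, 0)


def supplier_agent(request: Dict[str, Any]) -> Dict[str, Any]:
    """Stand-in for a remote Parts Supplier Agent reached over A2A."""
    part = request["params"]["message"]["parts"][0]["data"]["part"]
    artifact = {"parts": [{"type": "text", "text": f"Ordered 1 x {part}"}]}
    return {
        "jsonrpc": "2.0",
        "id": request["id"],
        "result": {"status": {"state": "completed"}, "artifacts": [artifact]},
    }


def shop_employee_agent(car_id: str) -> str:
    """Use tools locally first, then delegate to another agent if needed."""
    part = run_diagnostics(car_id)["faulty_part"]
    if check_inventory(part) > 0:
        return f"{part} in stock; repair can start"
    request = {
        "jsonrpc": "2.0",
        "id": 1,
        "method": "tasks/send",
        "params": {
            "id": "order-1",
            "message": {"role": "user", "parts": [{"type": "data", "data": {"part": part}}]},
        },
    }
    response = supplier_agent(request)
    return response["result"]["artifacts"][0]["parts"][0]["text"]


print(shop_employee_agent("car-42"))
```

The hiring example follows the same pattern, with the recruiter agent playing the coordinating role and the sourcing and scheduling agents as the delegates.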

A2A in the Broader Landscape

How does A2A compare to other standards like Agents.json or llms.txt?

- Agents.json: Focuses on standardizing how a single agent interacts with APIs using OpenAPI, emphasizing stateless interaction. It's about making API calls easier for an agent.

- llms.txt: Aims to help AIs better understand website content by providing a structured site overview. It's about information retrieval from a specific source.

- A2A: Is broader, focusing on general-purpose communication and task coordination between any type of autonomous agents, regardless of how they access tools or information (APIs, websites, MCP tools, etc.).

While Agents.json helps an agent use a specific type of tool (APIs) and llms.txt helps access a specific type of data (websites), A2A focuses on the interaction between the agents themselves.

Conclusion: The Two-Layer Approach to Interoperability

Google's A2A protocol doesn't replace MCP; it complements it by addressing a different, crucial layer: inter-agent communication.

- MCP: Standardizes the Agent-Tool interface.

- A2A: Standardizes the Agent-Agent interface.

Together, they offer a powerful two-layer model for building sophisticated AI systems. MCP provides the building blocks of individual agent capability, while A2A provides the framework for collaboration and orchestration.

The introduction of an open standard like A2A, backed by major players and gaining traction, has the potential to unlock significant innovation in the AI ecosystem. It paves the way for more modular, interoperable, and collaborative AI applications where specialized agents can seamlessly work together. While the AI standards landscape is still evolving, the complementary nature of MCP and A2A offers a promising path towards building the next generation of intelligent, interconnected AI solutions.
