Train Your Own LLM

Ever wondered how large language models like ChatGPT are actually built? Behind these impressive AI tools lies a complex but fascinating process of data preparation, model training, and fine-tuning. While it might seem like something only experts with massive resources can do, it’s actually possible to learn how to build your own language model from scratch. And with the right guidance, you can go from loading raw text data to chatting with your very own AI assistant.

We just published a course on the freeCodeCamp.org YouTube channel that will teach you how to train a language model from start to finish. Created and taught by Imad Saddik, this course takes a beginner-friendly approach to one of the most powerful areas of machine learning. Using Moroccan Darija as a working example, Imad walks you through every step of the process, from tokenizing raw text to fine-tuning a functional chatbot. Whether you're interested in natural language processing or AI development, or you simply want to deepen your understanding of how modern language models work, this course is a fantastic place to start.

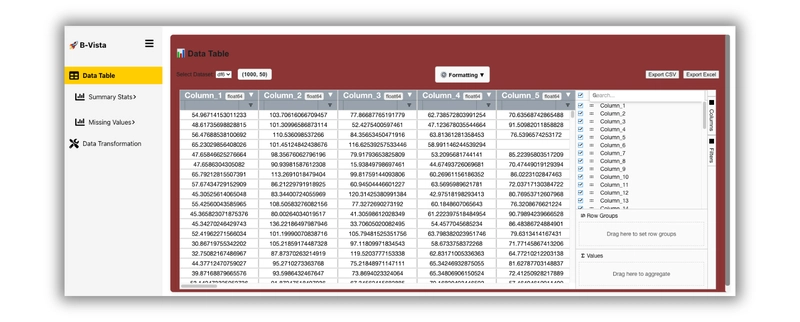

The course begins with the basics: you’ll learn how to gather and prepare your training data. Then, you’ll dive into tokenization, where you’ll build a tokenizer from scratch using the Byte Pair Encoding (BPE) method. This step is important because language models don’t process raw text directly. They process sequences of tokens, which are smaller chunks of language. Once your tokenizer is ready, you’ll use it to encode your dataset, preparing it for the model training phase.
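To give a feel for what building a BPE tokenizer involves, here is a minimal sketch, not the course's implementation. It assumes a character-level variant that splits text on whitespace, and the function names (`train_bpe`, `encode`, and the helpers) are hypothetical. The idea is to repeatedly find the most frequent adjacent pair of tokens and merge it into a single new token.

```python
from collections import Counter


def get_pair_counts(sequences):
    """Count how often each adjacent pair of tokens occurs."""
    counts = Counter()
    for seq in sequences:
        for pair in zip(seq, seq[1:]):
            counts[pair] += 1
    return counts


def merge_pair(seq, pair, new_token):
    """Replace every occurrence of `pair` in `seq` with `new_token`."""
    merged, i = [], 0
    while i < len(seq):
        if i + 1 < len(seq) and (seq[i], seq[i + 1]) == pair:
            merged.append(new_token)
            i += 2
        else:
            merged.append(seq[i])
            i += 1
    return merged


def train_bpe(text, num_merges):
    """Learn up to `num_merges` merge rules from whitespace-split words."""
    # Assumption: each word starts out as a list of single characters.
    sequences = [list(word) for word in text.split()]
    merges = []
    for _ in range(num_merges):
        counts = get_pair_counts(sequences)
        if not counts:
            break  # nothing left to merge
        best = max(counts, key=counts.get)  # most frequent pair
        new_token = best[0] + best[1]
        sequences = [merge_pair(seq, best, new_token) for seq in sequences]
        merges.append(best)
    return merges


def encode(word, merges):
    """Tokenize one word by replaying the learned merges in order."""
    tokens = list(word)
    for pair in merges:
        tokens = merge_pair(tokens, pair, pair[0] + pair[1])
    return tokens


# Toy corpus chosen for illustration only.
merges = train_bpe("low low low lower lowest", num_merges=2)
print(merges)                   # [('l', 'o'), ('lo', 'w')]
print(encode("lower", merges))  # ['low', 'e', 'r']
```

Production tokenizers usually work on bytes rather than characters and map each token to an integer ID, but the merge loop follows the same pattern.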

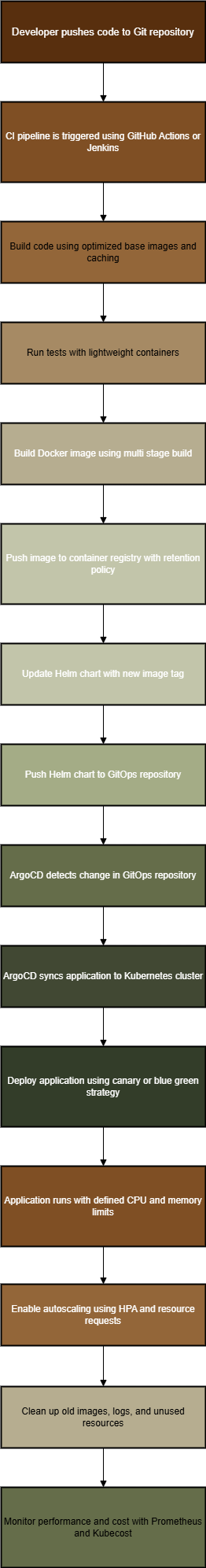

Next, the course takes you deep into the heart of modern AI: the Transformer architecture. You’ll explore how transformers work, why they’ve revolutionized language modeling, and how their attention mechanisms allow them to understand and generate human-like text. With this foundation in place, you'll pre-train a language model on your encoded data, allowing it to learn the patterns and structure of the language from scratch.
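The attention mechanism can be sketched in a few lines. The example below is a dependency-free illustration of scaled dot-product attention with a causal mask, under the assumption that queries, keys, and values are plain lists of floats. A real model would use a tensor library and learned projection matrices, and the function names here are hypothetical.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    highest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values, causal=True):
    """Scaled dot-product attention over lists of vectors.

    With causal=True, position i only attends to positions 0..i,
    which is how a language model avoids looking at future tokens.
    """
    d_k = len(keys[0])
    outputs = []
    for i, q in enumerate(queries):
        limit = i + 1 if causal else len(keys)
        # Similarity between this query and each visible key.
        scores = [
            sum(qj * kj for qj, kj in zip(q, k)) / math.sqrt(d_k)
            for k in keys[:limit]
        ]
        weights = softmax(scores)
        # Output is the weighted average of the visible value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values[:limit]))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
    return outputs


# Three toy token vectors, used as queries, keys, and values at once.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in attention(x, x, x):
    print(row)
```

The first output row equals the first input vector, because under the causal mask the first token can only attend to itself.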

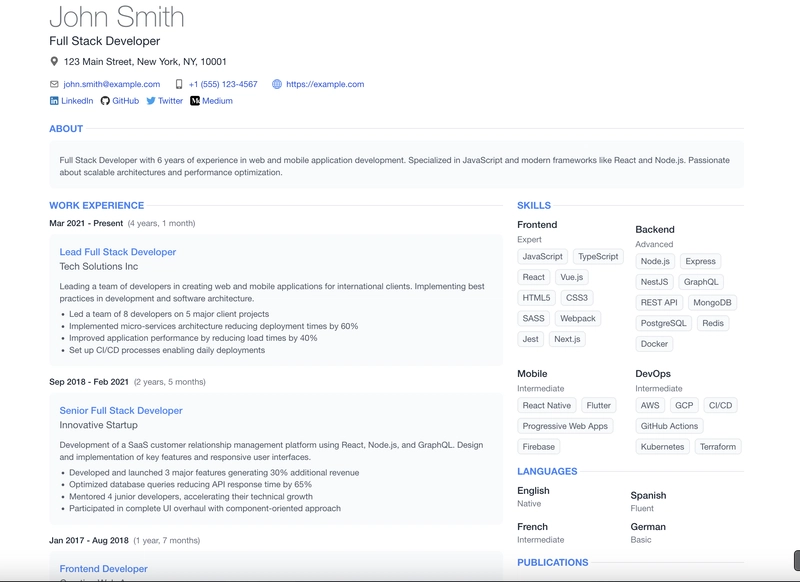

But the journey doesn’t stop there. You’ll then learn how to create a supervised fine-tuning dataset. This step is key to turning your general-purpose model into something more task-specific, like a helpful chatbot. You’ll go through the process of instruction tuning, teaching your model how to follow prompts and perform useful tasks. And to make fine-tuning more efficient, the course introduces you to LoRA (Low-Rank Adaptation), a technique that adapts a large model by training a small set of added low-rank weights while the original weights stay frozen, instead of updating every parameter.
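To make the LoRA idea concrete, here is a small sketch in plain Python, offered as an illustration rather than the course's code. It assumes a single linear layer with a frozen weight matrix `W` and two small trainable matrices `A` and `B`, so the layer computes `W x + (alpha / rank) * B (A x)`. The class name `LoRALinear` and its parameters are hypothetical.

```python
import random


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


class LoRALinear:
    """A frozen linear layer plus a trainable low-rank update."""

    def __init__(self, weight, rank, alpha=1.0, seed=0):
        rng = random.Random(seed)
        d_out, d_in = len(weight), len(weight[0])
        self.weight = weight  # frozen: never updated during fine-tuning
        # A starts with small random values, B starts at zero, so the
        # adapted layer initially behaves exactly like the original one.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(d_out)]
        self.scale = alpha / rank

    def forward(self, x):
        base = matvec(self.weight, x)
        update = matvec(self.B, matvec(self.A, x))
        return [b + self.scale * u for b, u in zip(base, update)]

    def trainable_parameters(self):
        """Number of values in A and B, the only parts that get trained."""
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])


# A toy 4x4 weight matrix: 16 frozen values.
W = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
layer = LoRALinear(W, rank=1)
print(layer.forward([1.0, 2.0, 3.0, 4.0]))
print(layer.trainable_parameters())  # 8, versus 16 in the full matrix
```

The saving looks modest at this size, but it grows quickly: for a 4096 by 4096 weight matrix and rank 8, the adapter holds 65,536 trainable values against roughly 16.8 million in the full matrix.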

Finally, you’ll scale up your work, fine-tuning the model to become a conversational AI assistant that you can interact with in real-time. By the end of the course, you’ll have built your own end-to-end language model pipeline.

Check it out now on the freeCodeCamp.org YouTube channel and start building your AI assistant today (4-hour watch).
