The Basis of Cognitive Complexity: Teaching CNNs to See Connections

Transforming CNNs: From task-specific learning to abstract generalization

The post The Basis of Cognitive Complexity: Teaching CNNs to See Connections appeared first on Towards Data Science.

Liberating education consists in acts of cognition, not transferrals of information. Paulo Freire

Many authors suggest that artificial intelligence models do not possess the same capabilities as humans, especially when it comes to plasticity, flexibility, and adaptation.

One thing these models fail to capture is many of the causal relationships that hold in the external world.

This article discusses these issues:

- The parallelism between convolutional neural networks (CNNs) and the human visual cortex

- Limitations of CNNs in understanding causal relations and learning abstract concepts

- How to make CNNs learn simple causal relations

Is it the same? Is it different?

Convolutional networks (CNNs) [2] are multi-layered neural networks that take images as input and can be used for multiple tasks. One of the most fascinating aspects of CNNs is their inspiration from the human visual cortex [1]:

- Hierarchical processing. The visual cortex processes images hierarchically, where early visual areas capture simple features (such as edges, lines, and colors) and deeper areas capture more complex features such as shapes, objects, and scenes. A CNN, thanks to its layered structure, captures edges and textures in its early layers, while its deeper layers capture parts or whole objects.

- Receptive fields. Neurons in the visual cortex respond to stimuli in a specific local region of the visual field (commonly called the receptive field). As we go deeper, the receptive fields of the neurons widen, allowing more spatial information to be integrated. Thanks to stacked convolutions and pooling steps, the same happens in CNNs.

- Feature sharing. Although biological neurons are not identical, similar features are recognized across different parts of the visual field. In CNNs, the various filters scan the entire image, allowing patterns to be recognized regardless of location.

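- Spatial invariance. Humans can recognize objects even when they are moved, scaled, or rotated. CNNs share this property in part: convolution and pooling make them robust to shifts in position, while robustness to scale and rotation largely has to be learned from data.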
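To make the receptive-field point concrete, here is a minimal sketch that computes how the receptive field widens through a stack of layers, using the standard recurrence (each layer adds `(kernel - 1)` times the current spacing between neighboring outputs). The function name `receptive_field` and the layer stack are illustrative assumptions, not taken from any of the cited papers.

```python
def receptive_field(layers):
    """Return the receptive-field size (in input pixels) after each layer.

    `layers` is a list of (kernel_size, stride) pairs, one per convolution
    or pooling layer, applied in order.
    """
    rf = 1    # a single input pixel "sees" only itself
    jump = 1  # distance in input pixels between neighboring outputs
    sizes = []
    for kernel, stride in layers:
        rf += (kernel - 1) * jump  # each extra kernel tap reaches `jump` pixels further
        jump *= stride             # striding spreads later taps further apart
        sizes.append(rf)
    return sizes


# Hypothetical stack: conv 3x3, max-pool 2x2, conv 3x3, max-pool 2x2, conv 3x3
with_pooling = [(3, 1), (2, 2), (3, 1), (2, 2), (3, 1)]
# Same three convolutions without any pooling
without_pooling = [(3, 1), (3, 1), (3, 1)]

print(receptive_field(with_pooling))     # [3, 4, 8, 10, 18]
print(receptive_field(without_pooling))  # [3, 5, 7]
```

Stacking convolutions alone already widens the receptive field, but pooling (or any stride greater than 1) makes it grow much faster with depth.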

These features have made CNNs perform well in visual tasks to the point of superhuman performance:

Russakovsky et al. [22] recently reported that human performance yields a 5.1% top-5 error on the ImageNet dataset. This number is achieved by a human annotator who is well-trained on the validation images to be better aware of the existence of relevant classes. […] Our result (4.94%) exceeds the reported human-level performance. —source [3]
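For readers unfamiliar with the metric in the quote: top-5 error counts a prediction as correct when the true class is among the model's five highest-scoring classes. A minimal sketch follows; the function name `top_k_error` and the scores are made up for illustration and do not come from [3].

```python
def top_k_error(scores, labels, k=5):
    """Fraction of examples whose true label is NOT among the k highest scores."""
    misses = 0
    for row, label in zip(scores, labels):
        # class indices sorted from highest to lowest score
        ranked = sorted(range(len(row)), key=lambda c: row[c], reverse=True)
        if label not in ranked[:k]:
            misses += 1
    return misses / len(labels)


# Toy scores for 3 images over 6 classes (made-up numbers)
scores = [
    [0.10, 0.50, 0.20, 0.05, 0.10, 0.05],
    [0.30, 0.10, 0.10, 0.20, 0.25, 0.05],
    [0.02, 0.25, 0.30, 0.20, 0.13, 0.10],
]
labels = [1, 5, 3]

print("top-1 error:", top_k_error(scores, labels, k=1))
print("top-5 error:", top_k_error(scores, labels, k=5))
```

On ImageNet, with 1,000 classes, the same computation is applied to the validation set.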

Although CNNs perform better than humans in several tasks, there are still cases where they fail spectacularly. For example, in a 2024 study [4], AI models failed to generalize in image classification. State-of-the-art models perform better than humans on objects in upright poses but fail when objects are in unusual poses.

In conclusion, our results show that (1) humans are still much more robust than most networks at recognizing objects in unusual poses, (2) time is of the essence for such ability to emerge, and (3) even time-limited humans are dissimilar to deep neural networks. —source [4]

The authors of [4] note that humans need time to succeed at the task. Some tasks require not only visual recognition but also abstract cognition, which takes time.

Humans' ability to generalize comes from understanding the rules that govern relations among objects. Humans recognize objects by extrapolating rules and chaining these rules to adapt to new situations. One of the simplest rules is the “same-different relation”: the ability to determine whether two objects are the same or different. This ability develops rapidly during infancy and is closely associated with language development [5-7]. In addition, some animals such as ducks and chimpanzees also have it [8]. In contrast, learning same-different relations is very difficult for neural networks [9-10].
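To make the task concrete, here is a toy sketch of a same-different trial: an image containing two small objects and a label saying whether they share a shape. Everything here (the `SHAPES` dictionary, the `make_trial` function, the 8×8 grid) is a hypothetical illustration and not the stimulus set used in the cited studies.

```python
import random

# Each shape is a list of (row, col) offsets from its top-left corner.
SHAPES = {
    "square": [(0, 0), (0, 1), (1, 0), (1, 1)],
    "bar": [(0, 0), (0, 1), (0, 2)],
    "ell": [(0, 0), (1, 0), (1, 1)],
}


def make_trial(rng, size=8):
    """Return (image, label); label is 1 if both objects have the same shape."""
    names = sorted(SHAPES)
    same = rng.random() < 0.5
    first = rng.choice(names)
    second = first if same else rng.choice([n for n in names if n != first])

    image = [[0] * size for _ in range(size)]
    # First object goes in the left half, second in the right half,
    # so the two objects can never overlap.
    for name, col_offset in ((first, 0), (second, size // 2)):
        r0 = rng.randrange(size - 2)                    # shapes are at most 2 rows tall
        c0 = col_offset + rng.randrange(size // 2 - 2)  # and at most 3 columns wide
        for dr, dc in SHAPES[name]:
            image[r0 + dr][c0 + dc] = 1
    return image, int(same)


rng = random.Random(0)
image, label = make_trial(rng)
for row in image:
    print("".join("#" if px else "." for px in row))
print("label:", "same" if label else "different")
```

The label depends only on the relation between the two objects, not on where they appear or which shapes they are. A model that memorizes individual shapes or positions cannot solve the task in general.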

Convolutional networks struggle to learn the same-different relationship. Likewise, they fail to learn other types of causal relationships that are simple for humans. Therefore, many researchers have concluded that CNNs lack the inductive bias needed to learn these relationships.

These negative results do not mean that neural networks are completely incapable of learning same-different relations. Much larger models trained for longer can learn this relation. For example, vision-transformer models pre-trained on ImageNet with contrastive learning can show this ability [12].

Can CNNs learn same-different relationships?

The fact that large models can learn these kinds of relationships has rekindled interest in CNNs. The same-different relationship is considered one of the basic logical operations underlying higher-order cognition and reasoning. Showing that shallow CNNs can learn this concept would allow us to experiment with other relationships. Moreover, it could pave the way for models that learn increasingly complex causal relationships. This is an important step in advancing the generalization capabilities of AI.

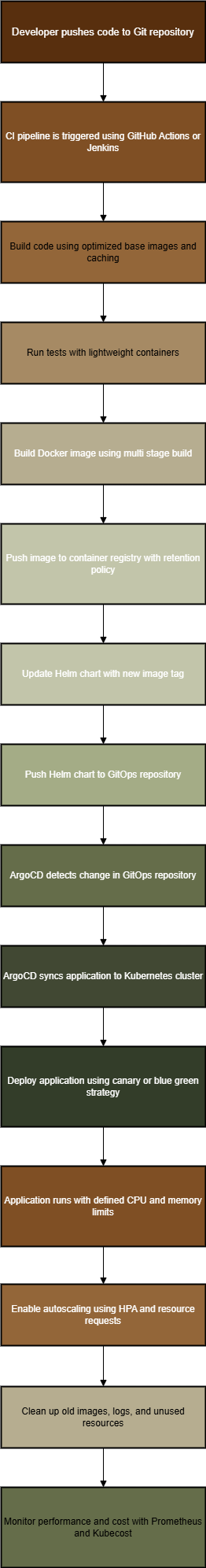

Previous work suggests that CNNs do not have the architectural inductive biases needed to learn abstract visual relations. Other authors argue that the problem lies in the training paradigm. Classical gradient descent is typically used to learn a single task or a set of tasks. Given a task t or a set of tasks T, the weights φ are optimized to minimize a loss function L:
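Written out with the symbols from the text, this objective takes the following form. This is a reconstruction from the surrounding definitions; $\mathcal{L}_t$, the loss on task $t$, is notation assumed here.

$$
\phi^{*} = \arg\min_{\phi} \sum_{t \in T} \mathcal{L}_t(\phi)
$$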

This can be viewed as simply the sum of the losses across different tasks (if we have more than one task). In contrast, the Model-Agnostic Meta-Learning (MAML) algorithm [13] is designed to search for an optimal point in weight space for a set of related tasks. MAML seeks an initial set of weights θ from which a few gradient steps on any single task lead to a low loss on that task, facilitating rapid adaptation:
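Using the symbols from the text, and assuming a single inner gradient step with step size $\alpha$, the meta-objective can be written as below. This is the form given in the MAML paper [13]; $\alpha$ and the per-task loss $\mathcal{L}_t$ are notation introduced here.

$$
\theta^{*} = \arg\min_{\theta} \sum_{t \in T} \mathcal{L}_t\big(\theta - \alpha \nabla_{\theta} \mathcal{L}_t(\theta)\big)
$$

The loss of each task is evaluated after the adaptation step rather than at θ itself.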

The difference may seem small, but conceptually, this approach is directed toward abstraction and generalization. If there are multiple tasks, traditional training tries to find one set of weights that performs well on all of them directly. MAML instead tries to identify a starting point that is close, in weight space, to a good solution for each task, roughly equidistant from the task-specific optima. This starting point θ allows the model to generalize more effectively across different tasks.
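The contrast can be sketched on a deliberately tiny problem. The example below is an assumption-laden toy, not the CNN setup of [11]: each "task" is a one-dimensional quadratic loss with a made-up curvature and optimum, the model is a single weight, and MAML uses one inner gradient step with the exact gradient through that step. All names (`TASKS`, `ALPHA`, `BETA`, `maml_step`, ...) are hypothetical.

```python
# Each toy task has loss L_t(w) = 0.5 * h * (w - c)**2, stored as (h, c).
TASKS = [(1.0, -2.0), (1.0, 0.0), (4.0, 3.0)]  # made-up curvatures and optima
ALPHA = 0.2   # inner-loop (adaptation) learning rate
BETA = 0.05   # outer-loop learning rate


def loss(w, task):
    h, c = task
    return 0.5 * h * (w - c) ** 2


def grad(w, task):
    h, c = task
    return h * (w - c)


def adapt(w, task):
    """One inner gradient step on a single task."""
    return w - ALPHA * grad(w, task)


def joint_step(w):
    """Classic training: descend the summed loss over all tasks."""
    return w - BETA * sum(grad(w, t) for t in TASKS)


def maml_step(theta):
    """MAML: descend the summed loss measured after adaptation."""
    meta_grad = 0.0
    for task in TASKS:
        h, _ = task
        adapted = adapt(theta, task)
        # chain rule through the inner step: d(adapted)/d(theta) = 1 - ALPHA * h
        meta_grad += grad(adapted, task) * (1.0 - ALPHA * h)
    return theta - BETA * meta_grad


def post_adaptation_loss(start):
    """Total loss over tasks after one adaptation step from `start`."""
    return sum(loss(adapt(start, t), t) for t in TASKS)


w = theta = 0.0
for _ in range(2000):
    w = joint_step(w)
    theta = maml_step(theta)

print(f"joint weights: {w:.3f}  post-adaptation loss: {post_adaptation_loss(w):.3f}")
print(f"MAML init:     {theta:.3f}  post-adaptation loss: {post_adaptation_loss(theta):.3f}")
```

In this toy, joint training settles near the steepest task's optimum, while MAML settles on a different starting point from which one adaptation step leaves a lower total loss. For real networks the meta-gradient is obtained with automatic differentiation rather than by hand.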

Since we now have a method biased toward generalization and abstraction, we can test whether we can make CNNs learn the same-different relationship.

In [11], the authors compared shallow CNNs trained with classic gradient descent and with meta-learning on a dataset designed for the study. The dataset consists of 10 different tasks that test for the same-different relationship.

The authors [11] compare CNNs of 2, 4, or 6 layers trained in a traditional way or with meta-learning, showing several interesting results:

- Conventionally trained CNNs perform close to chance level.

- Meta-learning significantly improves performance, suggesting that the model can learn the same-different relationship. A 2-layer CNN performs little better than chance, but by increasing the depth of the network, performance improves to near-perfect accuracy.

One of the most intriguing results of [11] is that the model can be trained in a leave-one-out fashion (meta-train on 9 tasks and hold one out) and still show out-of-distribution generalization on the held-out task. Thus, the model has learned abstract behavior that is rarely seen in such a small model (6 layers).
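The leave-one-out protocol can be sketched as a simple loop over splits. The task names below are placeholders, since the article does not list the actual names of the 10 tasks in [11], and `leave_one_out_splits` is a hypothetical helper.

```python
def leave_one_out_splits(tasks):
    """Yield (training_tasks, held_out_task) pairs, one per task."""
    for i, held_out in enumerate(tasks):
        yield tasks[:i] + tasks[i + 1:], held_out


# Placeholder names for the 10 same-different tasks
tasks = [f"task_{i}" for i in range(1, 11)]

for train_tasks, test_task in leave_one_out_splits(tasks):
    # In the real experiment: meta-train on `train_tasks`,
    # then evaluate on `test_task`, which was never seen in training.
    print(f"held out: {test_task:>7} | meta-train on {len(train_tasks)} tasks")
```

Because the held-out task never contributes to training, good accuracy on it is evidence of out-of-distribution generalization rather than memorization.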

Conclusions

Although convolutional networks were inspired by how the human brain processes visual stimuli, they do not capture some of its basic capabilities. This is especially true when it comes to causal relations or abstract concepts. Some of these relationships can be learned by large models, but only with extensive training. This has led to the assumption that small CNNs cannot learn these relations because they lack the necessary architectural inductive bias. In recent years, efforts have been made to create new architectures that could have an advantage in learning relational reasoning. Yet most of these architectures fail to learn these kinds of relationships. Intriguingly, this can be overcome through the use of meta-learning.

The advantage of meta-learning is that it incentivizes more abstract learning. Meta-learning exerts pressure toward generalization by trying to optimize for all tasks at the same time. This favors learning more abstract features (low-level features, such as the angles of a particular shape, are not useful for generalization and are disfavored). Meta-learning allows a shallow CNN to learn abstract behavior that would otherwise require many more parameters and much more training.

Shallow CNNs and the same-different relationship serve as a model system for higher cognitive functions. Meta-learning and other forms of training could be useful for improving the reasoning capabilities of models.


References

Here is the list of the principal references I consulted to write this article; only the first author of each article is listed.

1. Lindsay, 2020, Convolutional Neural Networks as a Model of the Visual System: Past, Present, and Future

2. Li, 2020, A Survey of Convolutional Neural Networks: Analysis, Applications, and Prospects

3. He, 2015, Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification

4. Ollikka, 2024, A comparison between humans and AI at recognizing objects in unusual poses

5. Premack, 1981, The codes of man and beasts

6. Blote, 1999, Young children’s organizational strategies on a same–different task: A microgenetic study and a training study

7. Lupker, 2015, Is there phonologically based priming in the same-different task? Evidence from Japanese-English bilinguals

8. Gentner, 2021, Learning same and different relations: cross-species comparisons

9. Kim, 2018, Not-so-clevr: learning same–different relations strains feedforward neural networks

10. Puebla, 2021, Can deep convolutional neural networks support relational reasoning in the same-different task?

11. Gupta, 2025, Convolutional Neural Networks Can (Meta-)Learn the Same-Different Relation

12. Tartaglini, 2023, Deep Neural Networks Can Learn Generalizable Same-Different Visual Relations

13. Finn, 2017, Model-agnostic meta-learning for fast adaptation of deep networks
