Learnings from a Machine Learning Engineer — Part 6: The Human Side

Practical advice for the humans involved with machine learning The post Learnings from a Machine Learning Engineer — Part 6: The Human Side appeared first on Towards Data Science.

In my

These elements require a certain degree of in-depth expertise, and they (usually) have well-defined metrics and established processes that are within our control.

Now it’s time to consider…

The human aspects of machine learning

Yes, this may seem like an oxymoron! But it is the interaction with people, both the ones you work with and the ones who use your application, that helps bring the technology to life and provides a sense of fulfillment in your work.

These human interactions include:

- Communicating technical concepts to a non-technical audience.

- Understanding how your end-users engage with your application.

- Providing clear expectations on what the model can and cannot do.

I also want to touch on the impact to people’s jobs, both positive and negative, as AI becomes a part of our everyday lives.

Overview

As in my previous articles, I will gear this discussion around an image classification application. With that in mind, these are the groups of people involved with your project:

- AI/ML Engineer (that’s you) — bringing life to the Machine Learning application.

- MLOps team — your peers who will deploy, monitor, and enhance your application.

- Subject matter experts — the ones who will provide the care and feeding of labeled data.

- Stakeholders — the ones who are looking for a solution to a real world problem.

- End-users — the ones who will be using your application. These could be internal and external customers.

- Marketing — the ones who will be promoting usage of your application.

- Leadership — the ones who are paying the bill and need to see business value.

Let’s dive right in…

AI/ML Engineer

You may be a part of a team or a lone wolf. You may be an individual contributor or a team leader.

Whatever your role, it is important to see the whole picture — not only the coding, the data science, and the technology behind AI/ML — but the value that it brings to your organization.

Understand the business needs

Your company faces many challenges as it works to reduce expenses, improve customer satisfaction, and remain profitable. Position yourself as someone who can create an application that helps achieve those goals.

- What are the pain points in a business process?

- What is the value of using your application (time savings, cost savings)?

- What are the risks of a poor implementation?

- What is the roadmap for future enhancements and use-cases?

- What other areas of the business could benefit from the application, and what design choices will help future-proof your work?

Communication

Deep technical discussions with your peers are probably your comfort zone. However, to be a more successful AI/ML Engineer, you should be able to clearly explain the work you are doing to different audiences.

With practice, you can explain these topics in ways that your non-technical business users can follow along with, and understand how your technology will benefit them.

To help you get comfortable with this, try creating a PowerPoint with 2–3 slides that you can cover in 5–10 minutes. For example, explain how a neural network can take an image of a cat or a dog and determine which one it is.

Practice giving this presentation in your mind, to a friend — even your pet dog or cat! This will get you more comfortable with the transitions, tighten up the content, and ensure you cover all the important points as clearly as possible.

- Be sure to include visuals — pure text is boring, graphics are memorable.

- Keep an eye on time — respect your audience’s busy schedule and stick to the 5–10 minutes you are given.

- Put yourself in their shoes: your audience is interested in how the technology will benefit them, not in how smart you are.

Creating a technical presentation is a lot like the Feynman Technique — explaining a complex subject to your audience by breaking it into easily digestible pieces, with the added benefit of helping you understand it more completely yourself.

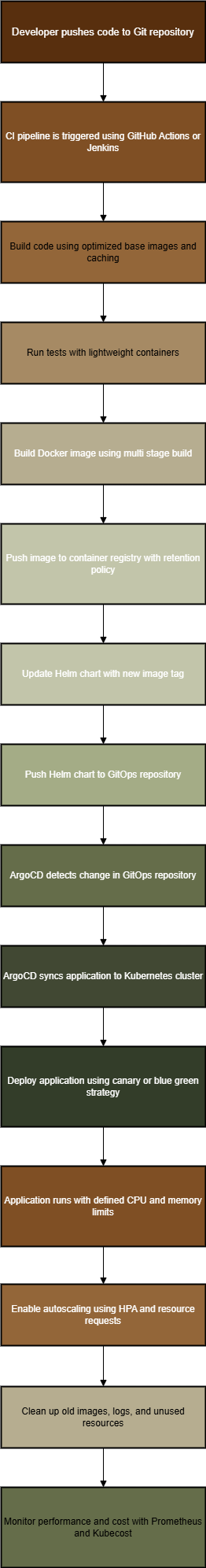

MLOps team

These are the people who deploy your application, manage data pipelines, and monitor the infrastructure that keeps things running.

Without them, your model lives in a Jupyter notebook and helps nobody!

These are your technical peers, so you should be able to connect with their skillset more naturally. You speak in jargon that sounds like a foreign language to most people. Even so, it is extremely helpful for you to create documentation to set expectations around:

- Process and data flows.

- Data quality standards.

- Service level agreements for model performance and availability.

- Infrastructure requirements for compute and storage.

- Roles and responsibilities.

It is easy to have a more informal relationship with your MLOps team, but remember that everyone is trying to juggle many projects at the same time.

Email and chat messages are fine for quick-hit issues. But for larger tasks, you will want a system to track things like user stories, enhancement requests, and break-fix issues. This way you can prioritize the work and ensure you don’t forget something. Plus, you can show progress to your supervisor.

Some great tools exist, such as:

- Jira, GitHub, Azure DevOps Boards, Asana, Monday, etc.

We are all professionals, so having a more formal system to avoid miscommunication and mistrust is good business.

Subject matter experts

These are the team members that have the most experience working with the data that you will be using in your AI/ML project.

SMEs are very skilled at dealing with messy data — they are human, after all! They can handle one-off situations by considering knowledge outside of their area of expertise. For example, a doctor may recognize metal inserts in a patient’s X-ray that indicate prior surgery. They may also notice a faulty X-ray image due to equipment malfunction or technician error.

However, your machine learning model only knows what it knows, which comes from the data it was trained on. So, those one-off cases may not be appropriate for the model you are training. Your SMEs need to understand that clear, high quality training material is what you are looking for.

Think like a computer

In the case of an image classification application, the output from the model communicates to you how well it was trained on the data set. This comes in the form of error rates, which is very much like when a student takes an exam and you can tell how well they studied by seeing how many questions — and which ones — they get wrong.

In order to reduce error rates, your image data set needs to be objectively “good” training material. To do this, put yourself in an analytical mindset and ask yourself:

- What images will the computer get the most useful information out of? Make sure all the relevant features are visible.

- What is it about an image that confused the model? When it makes an error, try to understand why — objectively — by looking at the entire picture.

- Is this image a “one-off” or a typical example of what the end-users will send? Consider creating a new subclass of exceptions to the norm.
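The exam analogy above can be made concrete with a small report of how many questions, and which ones, the model gets wrong. What follows is a minimal sketch, not a prescribed workflow: the function name `per_class_error_report`, the class labels, and the validation results are all hypothetical placeholders.

```python
from collections import Counter, defaultdict

def per_class_error_report(true_labels, predicted_labels):
    """Return, per class, the error rate and the class it is most confused with."""
    totals = Counter(true_labels)
    errors = Counter()
    confusions = defaultdict(Counter)
    for truth, guess in zip(true_labels, predicted_labels):
        if truth != guess:
            errors[truth] += 1
            confusions[truth][guess] += 1
    report = {}
    for label, total in totals.items():
        most_confused = confusions[label].most_common(1)
        report[label] = {
            "error_rate": errors[label] / total,
            "most_confused_with": most_confused[0][0] if most_confused else None,
        }
    return report

# Hypothetical validation results for a small cat/dog/fox classifier.
truth = ["cat", "cat", "cat", "cat", "dog", "dog", "dog", "fox", "fox", "fox"]
preds = ["cat", "cat", "dog", "cat", "dog", "dog", "dog", "dog", "dog", "fox"]

report = per_class_error_report(truth, preds)
for label, stats in sorted(report.items()):
    print(label, stats)
```

A class with a high error rate and a single dominant confusion is usually a good place to start when reviewing images with your SMEs.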

Be sure to communicate to your SMEs that model performance is directly tied to data quality and give them clear guidance:

- Provide visual examples of what works.

- Provide counter-examples of what does not work.

- Ask for a wide variety of data points. In the X-ray example, be sure to get patients with different ages, genders, and races.

- Provide options to create subclasses of your data for further refinement. Use that X-ray from a patient with prior surgery as a subclass; as you collect more examples over time, the model can learn to handle them.

This also means that you should become familiar with the data they are working with — perhaps not expert level, but certainly above a novice level.

Lastly, when working with SMEs, be cognizant of the impression they may have that the work you are doing is somehow going to replace their job. It can feel threatening when someone asks you how to do your job, so be mindful.

Ideally, you are building a tool with honest intentions and it will enable your SMEs to augment their day-to-day work. If they can use the tool as a second opinion to validate their conclusions in less time, or perhaps even avoid mistakes, then this is a win for everyone. Ultimately, the goal is to allow them to focus on more challenging situations and achieve better outcomes.

I have more to say on this in my closing remarks.

Stakeholders

These are the people you will have the closest relationship with.

Stakeholders are the ones who created the business case to have you build the machine learning model in the first place.

They have a vested interest in having a model that performs well. Here are some key points when working with your stakeholders:

- Be sure to listen to their needs and requirements.

- Anticipate their questions and be prepared to respond.

- Be on the lookout for opportunities to improve your model performance. Your stakeholders may not be as close to the technical details as you are and may not think there is any room for improvement.

- Bring issues and problems to their attention. They may not want to hear bad news, but they will appreciate honesty over evasion.

- Schedule regular updates with usage and performance reports.

- Explain technical details in terms that are easy to understand.

- Set expectations on regular training and deployment cycles and timelines.

Your role as an AI/ML Engineer is to bring to life the vision of your stakeholders. Your application is making their lives easier, which justifies and validates the work you are doing. It’s a two-way street, so be sure to share the road.

End-users

These are the people who are using your application. They may also be your harshest critics, but you may never even hear their feedback.

Think like a human

Recall above when I suggested to “think like a computer” when analyzing the data for your training set. Now it’s time to put yourself in the shoes of a non-technical user of your application.

End-users of an image classification model show how well they understand what’s expected of them through the images they submit, and poor images are the telltale sign. These are like the students who didn’t study for the exam or, worse, didn’t read the questions, so their answers don’t make sense.

Your model may be really good, but if end-users misuse the application or are not satisfied with the output, you should be asking:

- Are the instructions confusing or misleading? Did the user focus the camera on the subject being classified, or is it more of a wide-angle image? You can’t blame the user if they follow bad instructions.

- What are their expectations? When the results are presented to the user, are they satisfied or are they frustrated? You may notice repeated images from frustrated users.

- Are the usage patterns changing? Are they trying to use the application in unexpected ways? This may be an opportunity to improve the model.
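One way to spot the repeated submissions mentioned above is to look for users who upload several images in quick succession. The sketch below assumes a hypothetical upload log of `(user_id, timestamp_in_seconds)` pairs; the function name, the 120-second window, and the three-attempt threshold are illustrative choices, not recommendations.

```python
from collections import defaultdict

def find_rapid_resubmitters(events, window_seconds=120, min_attempts=3):
    """Return user IDs with at least `min_attempts` uploads inside one time window."""
    by_user = defaultdict(list)
    for user_id, timestamp in events:
        by_user[user_id].append(timestamp)

    flagged = set()
    for user_id, stamps in by_user.items():
        stamps.sort()
        # Check every run of `min_attempts` consecutive uploads.
        for i in range(len(stamps) - min_attempts + 1):
            if stamps[i + min_attempts - 1] - stamps[i] <= window_seconds:
                flagged.add(user_id)
                break
    return flagged

# Hypothetical upload log: (user_id, seconds since the start of the day).
upload_log = [
    ("u1", 100), ("u1", 130), ("u1", 170),  # three tries within 70 seconds
    ("u2", 100), ("u2", 4000),              # two unrelated uploads
    ("u3", 50), ("u3", 400), ("u3", 900),   # three uploads, spread out
]

flagged_users = find_rapid_resubmitters(upload_log)
print(sorted(flagged_users))
```

Rapid retries are only a proxy for frustration, so it is worth looking at the flagged images themselves before drawing conclusions.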

Inform your stakeholders of your observations. There may be simple fixes to improve end-user satisfaction, or there may be more complex work ahead.

If you are lucky, you may discover an unexpected way to leverage the application that leads to expanded usage or exciting benefits to your business.

Explainability

Most AI/ML models are considered “black boxes” that perform millions of calculations on extremely high dimensional data and produce a rather simplistic result without any reason behind it.

The Answer to the Ultimate Question of Life, the Universe, and Everything is 42.

Douglas Adams, The Hitchhiker’s Guide to the Galaxy

Depending on the situation, your end-users may require more explanation of the results, such as with medical imaging. Where possible, you should consider incorporating model explainability techniques such as LIME, SHAP, and others. These responses can help put a human touch to cold calculations.
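To give a feel for what such techniques produce, here is a minimal sketch of occlusion sensitivity, a simpler relative of LIME and SHAP (it is neither of them): mask one patch of the image at a time and record how much the model's score drops. The `toy_predict` function is a hypothetical stand-in for a trained model, and the 4x4 "image" is made-up data.

```python
def occlusion_map(image, predict, patch=2, fill=0.0):
    """Return the score drop caused by masking each patch x patch block."""
    height, width = len(image), len(image[0])
    baseline = predict(image)
    heatmap = []
    for top in range(0, height, patch):
        row = []
        for left in range(0, width, patch):
            masked = [list(r) for r in image]  # copy so the original is untouched
            for y in range(top, min(top + patch, height)):
                for x in range(left, min(left + patch, width)):
                    masked[y][x] = fill
            row.append(baseline - predict(masked))
        heatmap.append(row)
    return heatmap

# Hypothetical stand-in for a trained model: it only "looks" at the
# top-left 2x2 corner and returns the mean brightness there as its score.
def toy_predict(image):
    corner = [image[y][x] for y in range(2) for x in range(2)]
    return sum(corner) / len(corner)

image = [
    [1.0, 1.0, 0.2, 0.2],
    [1.0, 1.0, 0.2, 0.2],
    [0.5, 0.5, 0.9, 0.9],
    [0.5, 0.5, 0.9, 0.9],
]

heatmap = occlusion_map(image, toy_predict)
for row in heatmap:
    print(row)
```

Large values mark the regions the model relied on most, which can be overlaid on the original image as a visual explanation for the end-user.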

Now it’s time to switch gears and consider higher-ups in your organization.

Marketing team

These are the people who promote the use of your hard work. If your end-users are completely unaware of your application, or don’t know where to find it, your efforts will go to waste.

The marketing team controls where users can find your app on your website and links to it through social media channels. They also see the technology through a different lens.

The hype cycle is a good representation of how technical advancements tend to flow. At the beginning, there can be an unrealistic expectation of what your new AI/ML tool can do: it’s the greatest thing since sliced bread!

Then the “new” wears off and excitement wanes. You may face a lack of interest in your application as the marketing team (as well as your end-users) moves on to the next thing. In reality, the value of your efforts is somewhere in the middle.

Understand that the marketing team’s interest is in promoting the use of the tool because of how it will benefit the organization. They may not need to know the technical inner workings. But they should understand what the tool can do, and be aware of what it cannot do.

Honest and clear communication up-front will help smooth out the hype cycle and keep everyone interested longer. This way the crash from peak expectations to the trough of disillusionment is not so severe that the application is abandoned altogether.

Leadership team

These are the people that authorize spending and have the vision for how the application fits into the overall company strategy. They are driven by factors that you have no control over and you may not even be aware of. Be sure to provide them with the key information about your project so they can make informed decisions.

Depending on your role, you may or may not have direct interaction with executive leadership in your company. Your job is to summarize the costs and benefits associated with your project, even if that is just with your immediate supervisor who will pass this along.

Your costs will likely include:

- Compute and storage — training and serving a model.

- Image data collection — both real-world and synthetic or staged.

- Hours per week — SME, MLOps, AI/ML engineering time.

Highlight the savings and/or value added:

- Provide measures on speed and accuracy.

- Translate efficiencies into FTE hours saved and customer satisfaction.

- Bonus points if you can find a way to produce revenue.
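The cost and savings items above can be rolled into a single weekly figure for leadership. This is a rough sketch only: the function name and every number are hypothetical placeholders, and a real summary would use your own measured volumes, rates, and costs.

```python
def weekly_net_value(images_per_week, minutes_saved_per_image,
                     hourly_rate, weekly_running_cost):
    """Translate time saved per image into FTE hours and net weekly value."""
    hours_saved = images_per_week * minutes_saved_per_image / 60
    return {
        "fte_hours_saved": hours_saved,
        "net_value": hours_saved * hourly_rate - weekly_running_cost,
    }

# All inputs below are placeholders, not real measurements.
summary = weekly_net_value(
    images_per_week=1200,
    minutes_saved_per_image=2,
    hourly_rate=50.0,
    weekly_running_cost=800.0,  # compute, storage, and support time
)
print(summary)
```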

Business leaders, much like the marketing team, may follow the hype cycle:

- Be realistic about model performance. Don’t try to oversell it, but be honest about the opportunities for improvement.

- Consider creating a human benchmark test to measure accuracy and speed for an SME. It is easy to say human accuracy is 95%, but it’s another thing to measure it.

- Highlight short-term wins and how they can become long-term success.
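The human benchmark idea above can be as simple as scoring an SME and the model on the same labeled images and timing both. The sketch below uses hypothetical labels, answers, and timings purely for illustration; `benchmark` is an assumed helper name.

```python
def benchmark(name, answers, ground_truth, seconds_taken):
    """Score one participant (human or model) on a shared labeled test set."""
    correct = sum(a == t for a, t in zip(answers, ground_truth))
    return {
        "who": name,
        "accuracy": correct / len(ground_truth),
        "seconds_per_image": seconds_taken / len(ground_truth),
    }

# Placeholder data: the same five images, labeled by an SME and by the model.
ground_truth = ["normal", "fracture", "normal", "fracture", "normal"]
sme_answers = ["normal", "fracture", "normal", "normal", "normal"]
model_answers = ["normal", "fracture", "fracture", "fracture", "normal"]

sme = benchmark("SME", sme_answers, ground_truth, seconds_taken=150.0)
model = benchmark("model", model_answers, ground_truth, seconds_taken=2.5)
print(sme)
print(model)
```

Reporting both numbers side by side keeps the comparison honest: in this made-up case the accuracy is the same, and the difference is in speed.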

Conclusion

I hope you can see that, beyond the technical challenges of creating an AI/ML application, there are many humans involved in a successful project. Being able to interact with these individuals, and meet them where they are in terms of their expectations from the technology, is vital to advancing the adoption of your application.

Key takeaways:

- Understand how your application fits into the business needs.

- Practice communicating to a non-technical audience.

- Collect measures of model performance and report these regularly to your stakeholders.

- Expect that the hype cycle could help and hurt your cause, and that setting consistent and realistic expectations will ensure steady adoption.

- Be aware that factors outside of your control, such as budgets and business strategy, could affect your project.

And most importantly…

Don’t let machines have all the fun learning!

Human nature gives us the curiosity we need to understand our world. Take every opportunity to grow and expand your skills, and remember that human interaction is at the heart of machine learning.

Closing remarks

Advancements in AI/ML have the potential (assuming they are properly developed) to do many tasks as well as humans. It would be a stretch to say “better than” humans because a model can only be as good as the training data that humans provide. However, it is safe to say AI/ML can be faster than humans.

The next logical question would be, “Well, does that mean we can replace human workers?”

This is a delicate topic, and I want to be clear that I am not an advocate of eliminating jobs.

I see my role as an AI/ML Engineer as being one that can create tools that aid in someone else’s job or enhance their ability to complete their work successfully. When used properly, the tools can validate difficult decisions and speed through repetitive tasks, allowing your experts to spend more time on the one-off situations that require more attention.

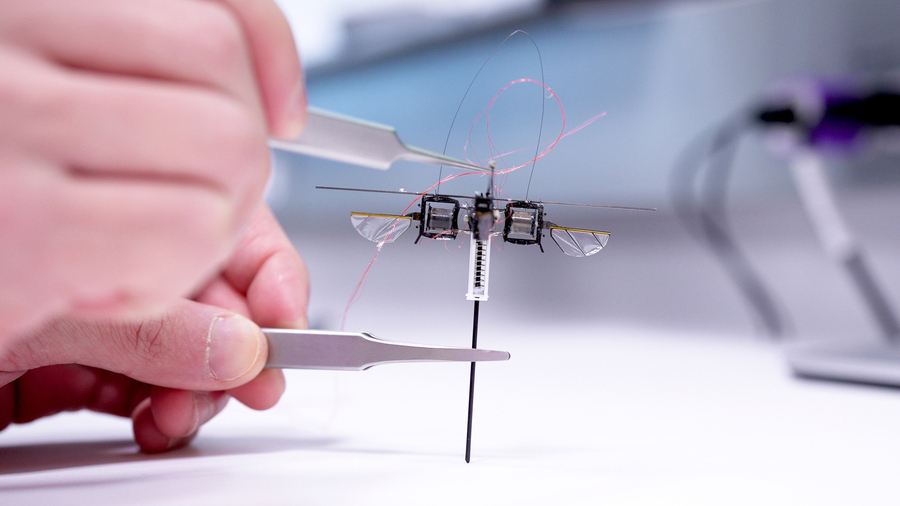

There may also be new career opportunities in the care and feeding of data, quality assessment, user experience, and even new roles that leverage the technology in exciting and unexpected ways.

Unfortunately, business leaders may make decisions that impact people’s jobs, and this is completely out of your control. But all is not lost — even for us AI/ML Engineers…

There are things we can do

- Be kind to the fellow human beings that we call “coworkers”.

- Be aware of the fear and uncertainty that come with technological advancements.

- Be on the lookout for ways to help people leverage AI/ML in their careers and to make their lives better.

This is all part of being human.

