Automating Cross-Region Replication in AWS S3 Using Lambda Triggers

When you're storing critical or sensitive data in Amazon S3, relying on a single region isn't always safe. Cross-Region Replication (CRR) helps protect your data by automatically copying objects from one region to another. Amazon S3 offers CRR as a built-in feature, but sometimes your use case demands more than copying: you may need custom logic, logging, security validation, or notifications. When I was first introduced to CRR in S3, I thought AWS handled everything internally.

But then came the question: “What if I want to control that replication myself?” Maybe I want to add logic such as logging, filtering, integration with other AWS services, or a notification when a file is replicated. That’s where AWS Lambda, invoked by S3 event notifications, lets you build event-based replication tailored to your needs.

In this article, I’ll walk you through how to use an AWS Lambda function, triggered by S3 uploads, to replicate objects across regions. This gives you fine-grained control over what happens when files land in your bucket.

Why Use Lambda with CRR?

Native S3 CRR is powerful, but it’s also rigid. It works well for general replication, but sometimes you want more control over what gets replicated, when, and how. Lambda gives you that control. It’s a good fit if you want to:

- Replicate only certain file types

- Filter by tags or file extension

- Perform actions before or after replication

- Log replication activity or send email alerts

- Encrypt files or trigger backup workflows

- Notify admins when replication happens

That’s where Lambda + S3 event notifications shine. You can customize your logic with Python or Node.js and scale it on demand.
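As a rough illustration of that kind of custom logic, here's a minimal sketch of a filter a handler could run before copying an object. The allowed extensions and the `replicate` tag are hypothetical choices; in a real function the tag set would come from the S3 `get_object_tagging` call, which returns it as a list of `Key`/`Value` pairs.

```python
from pathlib import PurePosixPath

# Hypothetical policy: only these extensions are eligible for replication.
ALLOWED_EXTENSIONS = {".pdf", ".csv", ".json"}


def tags_to_dict(tag_set):
    """Convert an S3 TagSet list ([{'Key': ..., 'Value': ...}]) into a plain dict."""
    return {tag["Key"]: tag["Value"] for tag in tag_set}


def should_replicate(key, tag_set, allowed_extensions=ALLOWED_EXTENSIONS):
    """Decide whether an object should be copied to the destination bucket.

    Illustrative rules only:
    - an object tagged replicate=false is always skipped;
    - otherwise the key's extension (case-insensitive) must be allowed.
    """
    tags = tags_to_dict(tag_set)
    if tags.get("replicate", "").lower() == "false":
        return False
    extension = PurePosixPath(key).suffix.lower()
    return extension in allowed_extensions


if __name__ == "__main__":
    print(should_replicate("invoices/2024/inv-001.PDF", []))  # True
    print(should_replicate("raw-logs/app.log", []))  # False
    print(should_replicate("reports/q1.csv", [{"Key": "replicate", "Value": "false"}]))  # False
```

Keeping the decision in a pure function like this makes it easy to unit-test without touching S3.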

Real-World Use Cases

- Disaster Recovery: For example, a bank replicates transaction logs from s3://transactions-east to s3://transactions-west using Lambda. This helps keep data available and operations running even if a region goes down.

- Compliance-Driven Redundancy: Government regulations, for example those covering healthcare data, may require data to be stored in specific regions. With Lambda, every upload can be monitored and replicated, with logs saved in CloudWatch or DynamoDB.

- Media/Content Workflows: A media company stores videos in `us-east-1` for editing and automatically pushes final edits, triggered by uploads, to an archival bucket in `us-west-2`.

- Data Segmentation & Backup: An e-commerce platform might replicate invoices but skip raw logs; Lambda filters based on filename or tags before replication.
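The data-segmentation and compliance scenarios above both come down to deciding, per object, where it should go and recording that decision. Here's a minimal sketch assuming a hypothetical prefix-based routing table (the prefixes and bucket names are placeholders): `route_object` picks a destination or skips the object, and `log_decision` writes one JSON line, which Lambda forwards to CloudWatch Logs.

```python
import json
import logging

logger = logging.getLogger()
logger.setLevel(logging.INFO)

# Hypothetical routing table: key prefix -> destination bucket (None means "skip").
ROUTES = [
    ("final/", "media-archive-us-west-2"),
    ("invoices/", "invoice-backup-us-west-2"),
    ("raw-logs/", None),
]


def route_object(key, routes=ROUTES, default=None):
    """Return the destination bucket for a key, or None if it should not be copied.

    The first matching prefix wins, so list more specific prefixes first.
    """
    for prefix, destination in routes:
        if key.startswith(prefix):
            return destination
    return default


def log_decision(src_bucket, key, destination):
    """Emit one JSON audit line per routing decision and return it."""
    line = json.dumps(
        {
            "source_bucket": src_bucket,
            "key": key,
            "destination_bucket": destination,
            "action": "copy" if destination else "skip",
        },
        sort_keys=True,
    )
    logger.info(line)
    return line
```

JSON lines like these can be queried later with CloudWatch Logs Insights, which is convenient when an auditor asks what was replicated and when.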

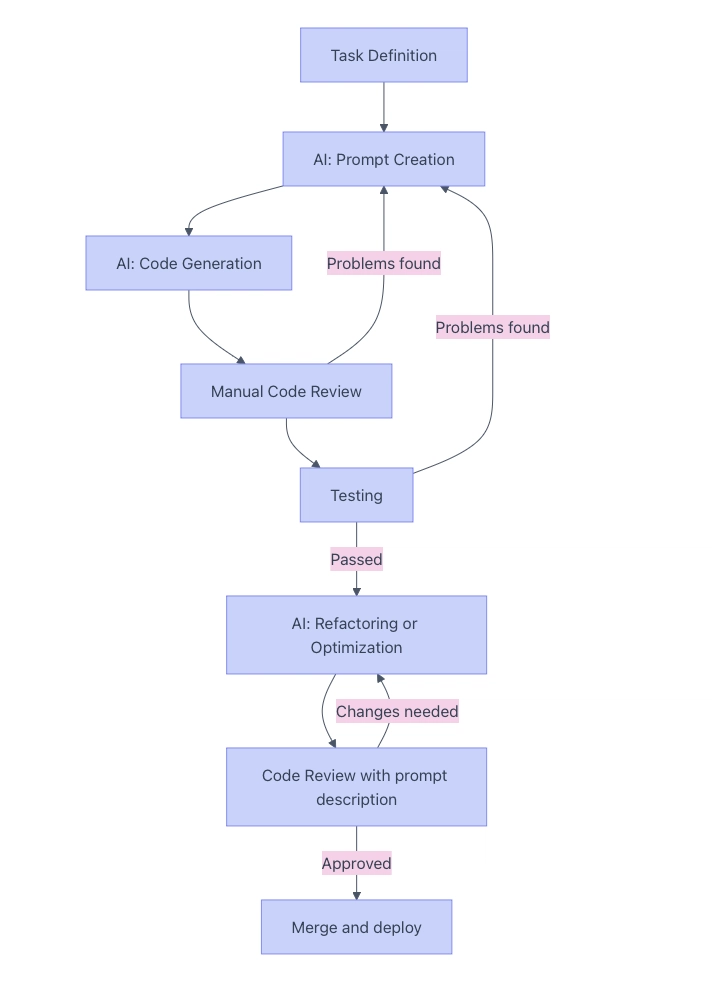

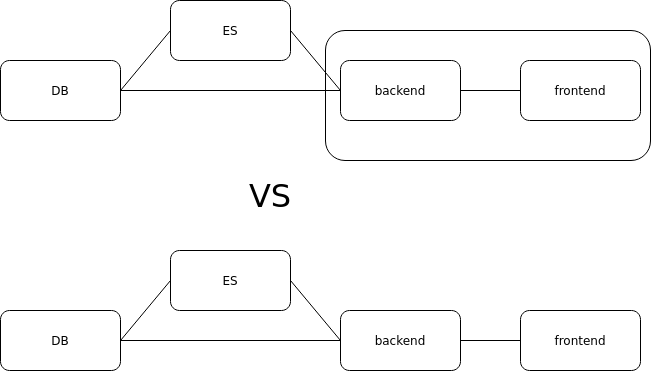

Architecture Overview

Here’s what we’ll use:

- S3 Bucket (Source) – The primary location where files are uploaded.

- S3 Bucket (Destination) – A bucket in a different AWS region.

- AWS Lambda – To execute replication logic when a file is uploaded.

- IAM Role – Permissions for Lambda to access both buckets.

- S3 Event Notification – Triggers the Lambda function on file upload

- (Optional) SNS – Sends notifications so your team has visibility into replication activity.
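These pieces meet in the event payload S3 sends to Lambda. Below is a minimal sketch of pulling the bucket, key, and size out of an `ObjectCreated` notification; the sample event is trimmed to the fields used here and its values are illustrative. One detail worth handling: object keys in the event are URL-encoded, so a key containing a space arrives with `+` and should be decoded with `urllib.parse.unquote_plus` before it's passed back to S3.

```python
import urllib.parse

# Trimmed-down example of an S3 "ObjectCreated" notification (illustrative values).
SAMPLE_EVENT = {
    "Records": [
        {
            "eventSource": "aws:s3",
            "awsRegion": "us-east-1",
            "eventName": "ObjectCreated:Put",
            "s3": {
                "bucket": {"name": "my-crr-source"},
                "object": {"key": "reports/Q1+summary.pdf", "size": 2048},
            },
        }
    ]
}


def extract_objects(event):
    """Yield (bucket, key, size) for each S3 record in a notification event."""
    for record in event.get("Records", []):
        if record.get("eventSource") != "aws:s3":
            continue  # ignore anything that isn't an S3 record
        bucket = record["s3"]["bucket"]["name"]
        # Keys arrive URL-encoded: spaces become '+', special characters become %XX.
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
        size = record["s3"]["object"].get("size")
        yield bucket, key, size


if __name__ == "__main__":
    for bucket, key, size in extract_objects(SAMPLE_EVENT):
        print(bucket, key, size)  # my-crr-source reports/Q1 summary.pdf 2048
```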

Steps to Set Up This Function

Step 1: Create Two Buckets in Different Regions

source-bucket: my-crr-source (Region: us-east-1)

destination-bucket: my-crr-destination (Region: us-west-2)

Make sure versioning is enabled on both buckets; native S3 replication rules require it on the source and the destination.
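If you prefer to script this setup instead of clicking through the console, the versioning requirement and the replication rule described next map onto two boto3 S3 client calls, `put_bucket_versioning` and `put_bucket_replication`. Here's a minimal sketch, assuming you pass in an S3 client for each region and the ARN of an IAM role that S3 can assume for replication (the rule ID and role ARN are placeholders):

```python
def enable_versioning(s3_client, bucket):
    """Turn on versioning; native S3 replication requires it on both buckets."""
    s3_client.put_bucket_versioning(
        Bucket=bucket,
        VersioningConfiguration={"Status": "Enabled"},
    )


def build_replication_config(role_arn, destination_bucket, rule_id="crr-all-objects"):
    """Build a single-rule replication configuration that copies every object."""
    return {
        "Role": role_arn,
        "Rules": [
            {
                "ID": rule_id,  # placeholder rule name
                "Priority": 1,
                "Status": "Enabled",
                "Filter": {"Prefix": ""},  # empty prefix = whole bucket
                "DeleteMarkerReplication": {"Status": "Disabled"},
                "Destination": {"Bucket": f"arn:aws:s3:::{destination_bucket}"},
            }
        ],
    }


def setup_native_crr(source_client, dest_client, source_bucket, destination_bucket, role_arn):
    """Enable versioning on both buckets, then attach the replication rule to the source."""
    enable_versioning(source_client, source_bucket)
    enable_versioning(dest_client, destination_bucket)
    source_client.put_bucket_replication(
        Bucket=source_bucket,
        ReplicationConfiguration=build_replication_config(role_arn, destination_bucket),
    )
```

With real clients you'd create them as `boto3.client('s3', region_name='us-east-1')` and `boto3.client('s3', region_name='us-west-2')`. The empty-prefix filter replicates the whole bucket; a narrower `Prefix` or a `Tag` filter would limit it.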

- Go to Source Bucket → Management → Replication Rules → Create Rule

- Choose Destination Bucket

- IAM Role: Allow S3 to replicate objects

- Save the rule

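Step 2: Create an IAM Role for Lambda

Assign these permissions:

- s3:GetObject (on the source bucket)

- s3:PutObject (on the destination bucket)

- s3:ListBucket (on both buckets)

- A trust policy that allows the Lambda service (lambda.amazonaws.com) to assume the role.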
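The permissions above translate into two IAM policy documents: a trust policy that lets the Lambda service assume the role, and a permissions policy scoped to the two buckets. Here's a minimal sketch that builds them as Python dictionaries, assuming the bucket names from Step 1. CloudWatch logging permissions usually come from attaching the AWS managed `AWSLambdaBasicExecutionRole` policy, and `sns:Publish` would be needed for email alerts; neither is included here.

```python
import json


def lambda_trust_policy():
    """Trust policy letting the Lambda service assume the execution role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "lambda.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


def replication_permissions_policy(source_bucket, destination_bucket):
    """Least-privilege permissions for copying objects between the two buckets."""
    src_arn = f"arn:aws:s3:::{source_bucket}"
    dst_arn = f"arn:aws:s3:::{destination_bucket}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {  # listing applies to bucket-level ARNs
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": [src_arn, dst_arn],
            },
            {  # read objects from the source
                "Effect": "Allow",
                "Action": "s3:GetObject",
                "Resource": f"{src_arn}/*",
            },
            {  # write objects to the destination
                "Effect": "Allow",
                "Action": "s3:PutObject",
                "Resource": f"{dst_arn}/*",
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(replication_permissions_policy("my-crr-source", "my-crr-destination"), indent=2))
```

To apply them, you could pass `json.dumps(...)` of each document to the boto3 IAM client's `create_role` (as `AssumeRolePolicyDocument`) and `put_role_policy` (as `PolicyDocument`).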

Step 3: Create an SNS Topic for Email Notification

- Go to SNS Console → Create Topic

- Topic Name: S3ReplicationNotification

- Type: Standard

- Create a Subscription

- Protocol: Email

- Enter your email address

- Confirm subscription (Check your email for confirmation)

Step 4: Write the Lambda Function

Here’s basic Python code that replicates objects on the `s3:ObjectCreated:*` event:

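```python
import os
import urllib.parse

import boto3

# Read the destination from environment variables if set; the fallbacks are placeholders.
DESTINATION_BUCKET = os.environ.get('DESTINATION_BUCKET', 'destination-bucket-name')
DESTINATION_REGION = os.environ.get('DESTINATION_REGION', 'us-west-2')

# CopyObject is sent to the destination bucket, so create the client in its region.
s3 = boto3.client('s3', region_name=DESTINATION_REGION)


def lambda_handler(event, context):
    replicated = []
    for record in event['Records']:
        src_bucket = record['s3']['bucket']['name']
        # Keys in S3 event notifications are URL-encoded (e.g. spaces arrive as '+').
        key = urllib.parse.unquote_plus(record['s3']['object']['key'])

        copy_source = {'Bucket': src_bucket, 'Key': key}
        s3.copy_object(
            CopySource=copy_source,
            Bucket=DESTINATION_BUCKET,
            Key=key,
        )
        replicated.append(key)

    # Return once, after every record in the event has been processed.
    return {
        'statusCode': 200,
        'body': f"Replicated {len(replicated)} object(s) to {DESTINATION_BUCKET}: {replicated}"
    }
```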

Deploy the Lambda Function

The function uses boto3, the AWS SDK for Python, which is included in the Lambda Python runtime, to copy objects from the source bucket to the destination bucket.
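One limit to keep in mind: a single `copy_object` request can copy objects up to 5 GB. For larger objects, boto3's managed `copy` method performs a multipart copy. Here's a minimal sketch of choosing between the two based on the object size from the event, assuming `s3_client` is a boto3 S3 client; very large copies can still run into Lambda's 15-minute maximum timeout.

```python
# CopyObject handles objects up to 5 GB in a single request.
SINGLE_COPY_LIMIT = 5 * 1024 ** 3


def copy_to_destination(s3_client, src_bucket, key, dst_bucket, size):
    """Copy one object, switching to a managed (multipart) copy for large objects.

    Returns "single" or "managed" to show which path was taken. If size is
    unknown (None), the single-request path is used.
    """
    copy_source = {"Bucket": src_bucket, "Key": key}
    if size is not None and size > SINGLE_COPY_LIMIT:
        # client.copy() is boto3's managed transfer; it splits large copies into parts.
        s3_client.copy(copy_source, dst_bucket, key)
        return "managed"
    s3_client.copy_object(CopySource=copy_source, Bucket=dst_bucket, Key=key)
    return "single"
```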

Step 5: Set Up S3 Event Trigger for Lambda

- Go to Lambda → Create Function → Author from Scratch

- Runtime: Python 3.9

- Execution Role: Attach the IAM policies AmazonS3FullAccess and AmazonSNSFullAccess

- Go to Source Bucket → Properties → Event Notifications

- Create a new event notification

- Event Name: S3ReplicationTrigger

- Event Type: PUT (File Uploads)

- Destination: Lambda Function (select the Lambda function)
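The console wires two things together here: it grants S3 permission to invoke the function and adds the notification to the bucket. Here's a minimal sketch of doing the same with boto3's Lambda client (`add_permission`) and S3 client (`put_bucket_notification_configuration`), assuming placeholder function names, ARNs, and account ID, plus an optional suffix filter. Be aware that `put_bucket_notification_configuration` replaces the bucket's entire notification configuration, so merge in any existing entries first.

```python
def build_notification_config(function_arn, suffix=None):
    """Invoke the function on PUT uploads, optionally only for keys ending in suffix."""
    lambda_config = {
        "Id": "S3ReplicationTrigger",
        "LambdaFunctionArn": function_arn,
        "Events": ["s3:ObjectCreated:Put"],
    }
    if suffix:
        lambda_config["Filter"] = {
            "Key": {"FilterRules": [{"Name": "suffix", "Value": suffix}]}
        }
    return {"LambdaFunctionConfigurations": [lambda_config]}


def wire_trigger(lambda_client, s3_client, function_name, function_arn,
                 bucket, account_id, suffix=None):
    """Allow S3 to invoke the function, then attach the notification to the bucket."""
    # S3 needs a resource-based permission to call lambda:InvokeFunction.
    lambda_client.add_permission(
        FunctionName=function_name,
        StatementId=f"s3-invoke-{bucket}",
        Action="lambda:InvokeFunction",
        Principal="s3.amazonaws.com",
        SourceArn=f"arn:aws:s3:::{bucket}",
        SourceAccount=account_id,
    )
    s3_client.put_bucket_notification_configuration(
        Bucket=bucket,
        NotificationConfiguration=build_notification_config(function_arn, suffix),
    )
```

The permission is added before the notification because S3 checks that it can invoke the function when the configuration is saved.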

Test Your Setup

- Upload a file to the source bucket (my-crr-source in us-east-1)

- Check the destination bucket (the file should appear there)

- Check your email for the replication notification

- For large files or specific object types, include filters or size checks in the Lambda code.

- Ensure that Lambda has access to both buckets and is deployed in the same region as the source bucket.

- You can extend this by integrating SNS to alert teams when a CRR is performed.
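For those SNS alerts, the function could publish to the `S3ReplicationNotification` topic from Step 3 after copying. Here's a minimal sketch, assuming `sns_client` is a boto3 SNS client and you supply the topic ARN; it sends one message per invocation listing all copied keys, and trims the subject because SNS requires subjects shorter than 100 characters.

```python
import json

SNS_SUBJECT_MAX = 99  # SNS subjects must be shorter than 100 characters


def build_replication_message(src_bucket, dst_bucket, keys):
    """Build the subject and JSON body for a replication alert."""
    subject = f"S3 replication: {len(keys)} object(s) to {dst_bucket}"[:SNS_SUBJECT_MAX]
    body = json.dumps(
        {"source_bucket": src_bucket, "destination_bucket": dst_bucket, "keys": keys},
        indent=2,
    )
    return subject, body


def notify_replication(sns_client, topic_arn, src_bucket, dst_bucket, keys):
    """Publish one alert per invocation rather than one per object; skip if nothing was copied."""
    if not keys:
        return None
    subject, body = build_replication_message(src_bucket, dst_bucket, keys)
    return sns_client.publish(TopicArn=topic_arn, Subject=subject, Message=body)
```

Batching keys into one message keeps inboxes manageable when a single upload event carries several records.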
