A Framework for Using Generative AI in Software Testing

The landscape of software testing is rapidly evolving, and generative AI is at the heart of this transformation. By harnessing the power of advanced machine learning and large language models, organizations can reimagine how they validate, verify, and deliver high-quality software at scale. This article outlines a structured approach for leveraging generative AI across the entire software testing life cycle, with a focus on strategic implementation, skills development, and long-term thinking. The framework is informed by genQE.ai's vision for integrating intelligent automation in quality engineering.

Understanding Generative AI in Software Testing

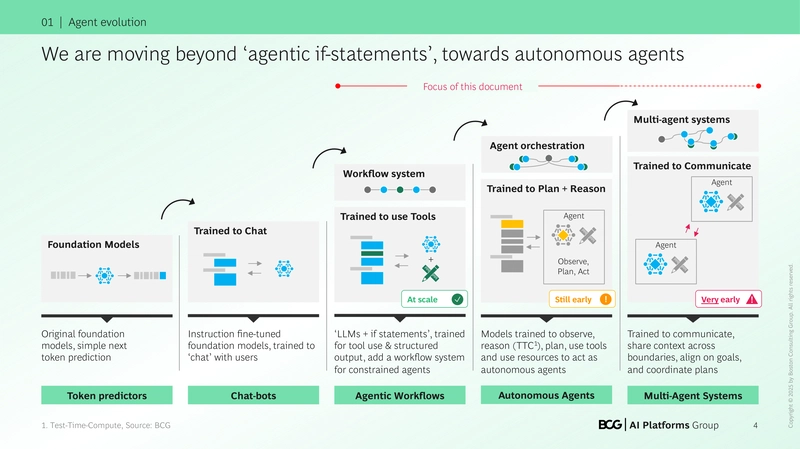

Generative AI refers to a class of artificial intelligence that can produce content — such as text, code, test cases, or data — based on learned patterns from vast datasets. In software testing, this capability is especially valuable: generative AI can create test scenarios, generate data, predict defects, and assist in decision-making.

Unlike traditional automation tools, which follow fixed scripts, generative AI models can adapt to context and respond to changes in requirements, code, or test environments. This adaptability is key to creating resilient, scalable, and fast testing processes.

Building Generative AI Skills for Software Testing

Adopting generative AI requires a shift in mindset and skillset across testing teams. Key competencies include:

Prompt engineering — Crafting effective inputs to elicit useful outputs from AI models.

Test design thinking — Leveraging AI to enhance exploratory testing, edge case identification, and scenario coverage.

Data literacy — Understanding how AI models are trained, their limitations, and how to validate their outputs.

AI-augmented decision-making — Using generative AI to assist with risk analysis and test prioritization.

Organizations should invest in upskilling quality engineers to collaborate with AI rather than just consume its outputs.
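
As an illustration of how prompt engineering and output validation fit together, here is a minimal Python sketch. It makes no call to any model or to genQE.ai; the template wording and the names `build_prompt` and `validate_test_cases` are assumptions chosen for this example. It shows one way to ask for test cases in a fixed JSON shape and then reject any output that does not match that shape.

```python
import json

# Hypothetical prompt template; the wording is an assumption, not a vendor format.
PROMPT_TEMPLATE = """You are a QA engineer.
Requirement: {requirement}
Write {count} test cases as a JSON list. Each item must have the keys
"title", "steps" (a list of strings) and "expected".
Return only the JSON."""

REQUIRED_KEYS = {"title", "steps", "expected"}


def build_prompt(requirement: str, count: int = 3) -> str:
    """Fill the template with one requirement and the number of cases wanted."""
    return PROMPT_TEMPLATE.format(requirement=requirement.strip(), count=count)


def validate_test_cases(raw_output: str) -> list[dict]:
    """Parse model output and keep only well-formed test cases."""
    try:
        data = json.loads(raw_output)
    except json.JSONDecodeError:
        return []  # unparseable output is rejected rather than repaired
    if not isinstance(data, list):
        return []
    valid = []
    for item in data:
        if (
            isinstance(item, dict)
            and REQUIRED_KEYS.issubset(item)
            and isinstance(item["steps"], list)
            and item["steps"]
        ):
            valid.append(item)
    return valid
```

The validation step is where data literacy comes in: the engineer decides what counts as an acceptable output before trusting anything the model returns.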

Opportunities for Generative AI Across the Software Testing Life Cycle

Generative AI is not confined to a single testing activity. Its capabilities span the entire testing life cycle and can improve efficiency and quality at each stage.

Requirement Phase Testing

During requirement analysis, generative AI can:

Extract functional and non-functional requirements from user stories or documents.

Detect ambiguities or gaps in requirements.

Suggest testable acceptance criteria aligned with business objectives.

This early validation helps reduce costly defects later in the cycle.
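
To make the idea of ambiguity detection concrete, the sketch below uses a plain keyword check in Python. This is a deliberately simple stand-in, not a generative model: a model would be expected to catch far subtler gaps. The term list and the function names are assumptions for illustration only.

```python
import re

# Hypothetical starter list of vague terms; a real team would tune this.
VAGUE_TERMS = (
    "fast", "quickly", "user-friendly", "easy",
    "appropriate", "as needed", "etc", "robust",
)


def flag_ambiguities(requirement: str) -> list[str]:
    """Return the vague terms found in a requirement, in VAGUE_TERMS order."""
    text = requirement.lower()
    return [
        term for term in VAGUE_TERMS
        if re.search(r"\b" + re.escape(term) + r"\b", text)
    ]


def has_measurable_criterion(requirement: str) -> bool:
    """True if the text contains a digit, a rough proxy for testability."""
    return re.search(r"\d", requirement) is not None
```

For example, "The page should load fast and be user-friendly." is flagged for two vague terms and has no measurable criterion, while "Search returns results within 2 seconds." passes both checks.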

Test Planning

In the planning phase, generative AI supports:

Estimating test effort based on historical data and project scope.

Creating intelligent test strategies that adapt to risk levels and system complexity.

Identifying optimal testing approaches (e.g., regression, exploratory, or scenario-based testing).

Tools like genQE.ai can incorporate organizational knowledge to tailor planning recommendations to specific business domains.
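
Effort estimation from historical data can be as simple as the arithmetic below. This Python sketch assumes each past project is recorded as a (scope, test hours) pair, where "scope" is whatever unit the team already tracks, such as story points. The function name and the averaging method are assumptions; an AI-assisted planner would likely weigh many more factors.

```python
from statistics import mean


def estimate_test_hours(history: list[tuple[float, float]], new_scope: float) -> float:
    """Estimate test hours for new_scope from (scope, hours) pairs of past projects.

    Uses the mean hours-per-scope-unit rate across past projects.
    """
    rates = [hours / scope for scope, hours in history if scope > 0]
    if not rates:
        raise ValueError("need at least one past project with positive scope")
    return mean(rates) * new_scope
```

With past projects of (10, 40), (20, 100), and (5, 15), the rates are 4, 5, and 3 hours per unit, so a new project of scope 8 is estimated at 4 × 8 = 32 hours.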

Test Case Development

Here, generative AI can significantly accelerate:

Authoring of test cases from natural language requirements.

Generation of edge cases and negative scenarios that may be overlooked.

Maintenance of test cases in response to changes in application behavior.

With contextual understanding, genQE.ai enables precision and depth in test design that aligns with actual user journeys.
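
One way to check that generated edge cases are not missing the obvious ones is to compare them against classic boundary value analysis. The Python sketch below assumes a single inclusive integer input range; `boundary_values` and `missing_boundaries` are hypothetical helper names, and the comparison is a baseline check rather than a description of how any particular tool works.

```python
def boundary_values(minimum: int, maximum: int) -> dict[str, list[int]]:
    """Classic boundary value analysis for an inclusive integer range."""
    if minimum > maximum:
        raise ValueError("minimum must not exceed maximum")
    candidates = {minimum, minimum + 1, maximum - 1, maximum}
    # Keep only candidates that actually lie inside the range.
    valid = sorted(v for v in candidates if minimum <= v <= maximum)
    invalid = [minimum - 1, maximum + 1]  # just outside: negative scenarios
    return {"valid": valid, "invalid": invalid}


def missing_boundaries(generated_inputs: list[int], minimum: int, maximum: int) -> list[int]:
    """Return boundary values that a generated test set does not cover."""
    expected = boundary_values(minimum, maximum)
    wanted = set(expected["valid"]) | set(expected["invalid"])
    return sorted(wanted - set(generated_inputs))
```

If a model proposes the inputs 1, 50, 100, and 101 for a field that accepts 1 to 100, the check reports 0, 2, and 99 as uncovered boundaries for a reviewer to add.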

Test Environment Setup

Generative AI aids in:

Identifying configuration requirements based on system architecture.

Suggesting environment specifications from previous successful test runs.

Automating infrastructure provisioning scripts for consistent test execution.

This reduces delays often associated with environment readiness.

Test Execution

During execution, AI enables:

Dynamic test selection based on code changes and risk levels.

Real-time anomaly detection during test runs.

Intelligent rerun strategies for failed tests to isolate flakiness from genuine defects.

Such adaptability improves efficiency and provides rapid feedback to development teams.
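
The selection and rerun ideas above can be sketched in Python as follows. The sketch assumes three inputs that a team would have to supply: a map from each test to the source files it exercises, a per-test risk score between 0 and 1, and the pass/fail history of each test across its first run and reruns on unchanged code. All names and the 0.5 threshold are assumptions; a real system might learn the scores rather than take them as given.

```python
def select_tests(
    changed_files: list[str],
    coverage_map: dict[str, list[str]],
    risk: dict[str, float],
    threshold: float = 0.5,
) -> list[str]:
    """Pick tests that touch a changed file, plus any test at or above the risk threshold."""
    changed = set(changed_files)
    selected = {test for test, files in coverage_map.items() if changed & set(files)}
    selected |= {test for test, score in risk.items() if score >= threshold}
    return sorted(selected)


def classify_reruns(results: dict[str, list[bool]]) -> dict[str, str]:
    """Label each test from its attempt history (first run plus reruns)."""
    labels = {}
    for test, attempts in results.items():
        if not attempts:
            labels[test] = "not run"
        elif all(attempts):
            labels[test] = "pass"
        elif any(attempts):
            labels[test] = "flaky"  # mixed results on the same code
        else:
            labels[test] = "fail"   # consistent failure: likelier a genuine defect
    return labels
```

A test labeled "flaky" is a candidate for quarantine or investigation, while a consistent "fail" is routed to the development team as a probable defect.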

Test Cycle Closure

At the end of a cycle, generative AI contributes to:

Summarizing test results with insights and trends.

Recommending future improvements based on retrospective analysis.

Supporting audit-ready reporting with traceability from requirements to test outcomes.

genQE.ai's intelligent reporting features ensure transparency and accountability at closure.
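
Traceability from requirements to test outcomes can be represented with a small data structure. The Python sketch below assumes requirement IDs are linked to test names by hand or by an upstream tool, and that outcomes are recorded as the strings "pass" or "fail". The function name and the status labels are hypothetical and do not describe any product's report format.

```python
def traceability_report(
    req_to_tests: dict[str, list[str]],
    outcomes: dict[str, str],
) -> dict[str, dict]:
    """Map each requirement to a status derived from its linked test outcomes."""
    report = {}
    for req, tests in req_to_tests.items():
        # Tests with no recorded outcome are treated as "not run".
        statuses = [outcomes.get(test, "not run") for test in tests]
        if not tests:
            status = "uncovered"
        elif "fail" in statuses:
            status = "failing"
        elif all(s == "pass" for s in statuses):
            status = "verified"
        else:
            status = "incomplete"
        report[req] = {"status": status, "tests": dict(zip(tests, statuses))}
    return report
```

A summary generated at cycle closure could then be built on top of this structure, with each claim in the summary pointing back to a requirement ID and the tests that support it.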

Thinking Long-Term about Generative AI in Software Development

The role of generative AI in software testing is only beginning. As models become more capable and trustworthy, their influence will extend beyond testing to encompass broader software development practices.

Forward-looking organizations should:

Embrace AI-augmented quality engineering as a strategic differentiator.

Embed AI capabilities within their DevOps pipelines for continuous validation.

Foster a culture of innovation and experimentation within testing teams.

genQE.ai represents a step toward this future — where quality is not just assured, but intelligently engineered.

Conclusion

Generative AI is reshaping the contours of software testing, introducing new possibilities for speed, quality, and insight. By adopting a thoughtful framework and leveraging platforms like genQE.ai, organizations can unlock the full potential of this technology. As with any transformation, success lies not just in the tools, but in the people and processes that evolve to harness them.
