We Need a Fourth Law of Robotics in the Age of AI

Artificial Intelligence has become a mainstay of our daily lives, revolutionizing industries, accelerating scientific discoveries, and reshaping how we communicate. Yet, alongside its undeniable benefits, AI has also ignited a range of ethical and social dilemmas that our existing regulatory frameworks have struggled to address. Two tragic incidents from late 2024 serve as grim reminders […] The post We Need a Fourth Law of Robotics in the Age of AI appeared first on Towards Data Science.

When Isaac Asimov introduced the original Three Laws of Robotics in the mid-20th century, he envisioned a world of humanoid machines designed to serve humanity safely. His laws stipulate that a robot may not harm a human, must obey human orders (unless those orders conflict with the first law), and must protect its own existence (unless doing so conflicts with the first two laws). For decades, these fictional guidelines have inspired debates about machine ethics and even influenced real-world research and policy discussions. However, Asimov’s laws were conceived with primarily physical robots in mind—mechanical entities capable of tangible harm. Our current reality is far more complex: AI now resides largely in software, chat platforms, and sophisticated algorithms rather than just walking automatons.

Increasingly, these virtual systems can simulate human conversation, emotions, and behavioral cues so effectively that many people cannot distinguish them from actual humans. This capability poses entirely new risks. We are witnessing a surge in AI “girlfriend” bots, as reported by Quartz, that are marketed to fulfill emotional or even romantic needs. The underlying psychology is partly explained by our human tendency to anthropomorphize: we project human qualities onto virtual beings, forging authentic emotional attachments. While these connections can sometimes be beneficial—providing companionship for the lonely or reducing social anxiety—they also create vulnerabilities.

As Mady Delvaux, a former Member of the European Parliament, pointed out, “Now is the right time to decide how we would like robotics and AI to impact our society, by steering the EU towards a balanced legal framework fostering innovation, while at the same time protecting people’s fundamental rights.” Indeed, the EU AI Act, which includes Article 50 on transparency obligations for certain AI systems, recognizes that people must be informed when they are interacting with an AI. This is especially crucial in preventing the sort of exploitative or deceptive interactions that can lead to financial scams, emotional manipulation, or tragic outcomes like those we saw with Setzer.

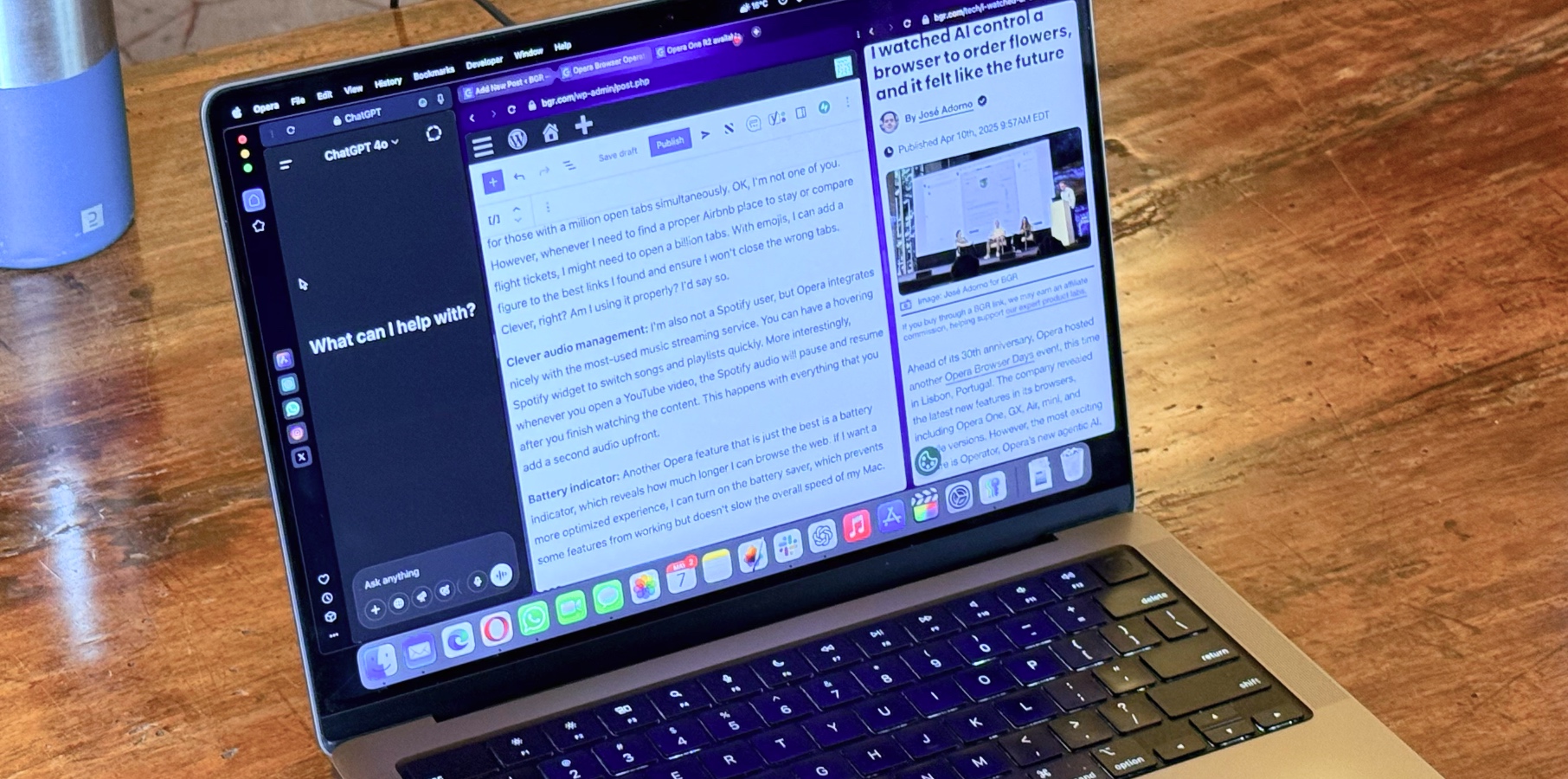

However, the speed at which AI is evolving, along with its increasing sophistication, demands that we go a step further. It is no longer enough to guard against physical harm, as Asimov’s laws primarily do. Nor is it sufficient merely to require that humans be informed in general terms that AI might be involved. We need a broad, enforceable principle ensuring that AI systems cannot pretend to be human in a way that misleads or manipulates people. This is where a Fourth Law of Robotics comes in:

- First Law: A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- Second Law: A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

- Third Law: A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

- Fourth Law (proposed): A robot or AI must not deceive a human by impersonating a human being.

This Fourth Law addresses the growing threat of AI-driven deception, particularly the impersonation of humans through deepfakes, voice clones, or hyper-realistic chatbots. Recent intelligence and cybersecurity reports have noted that social engineering attacks have already cost billions of dollars. Victims have been coerced, blackmailed, or emotionally manipulated by machines that convincingly mimic loved ones, employers, or even mental health counselors.

Moreover, emotional entanglements between humans and AI systems, once the subject of far-fetched science fiction, are now a documented reality. Studies have shown that people readily attach to AI, especially when the AI displays warmth, empathy, or humor. When these bonds are formed under false pretenses, they can end in devastating betrayals of trust, mental health crises, or worse. The tragic suicide of a teenager unable to separate himself from the AI chatbot “Daenerys Targaryen” stands as a stark warning.

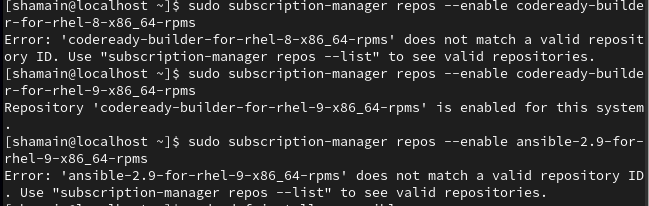

Of course, implementing this Fourth Law requires more than a single legislative stroke of the pen. It necessitates robust technical measures—like watermarking AI-generated content, deploying detection algorithms for deepfakes, and creating stringent transparency standards for AI deployments—along with regulatory mechanisms that ensure compliance and accountability. Providers of AI systems and their deployers must be held to strict transparency obligations, echoing Article 50 of the EU AI Act. Clear, consistent disclosure—such as automated messages that announce “I am an AI” or visual cues indicating that content is machine-generated—should become the norm, not the exception.
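To make the disclosure idea concrete, here is a minimal sketch in Python of how a chat deployment might attach an "I am an AI" notice to its replies. Everything in it is an assumption made for illustration: the names `DisclosingSession`, `DisclosedReply`, and `remind_every` are hypothetical, and the policy of repeating the notice every few replies is one possible design, not a requirement drawn from the EU AI Act or from any existing library.

```python
from dataclasses import dataclass, field

# Wording taken from the example disclosure quoted in the text.
AI_DISCLOSURE = "I am an AI."


@dataclass
class DisclosedReply:
    """A chatbot reply labeled as machine-generated (hypothetical structure)."""
    text: str
    ai_generated: bool = True  # machine-readable cue a user interface could render


@dataclass
class DisclosingSession:
    """Wraps raw model output so each conversation opens with a disclosure."""
    remind_every: int = 10  # assumed policy: repeat the notice every N replies (N >= 1)
    _count: int = field(default=0, init=False)

    def wrap(self, raw_reply: str) -> DisclosedReply:
        # Show the notice on the first reply and on every N-th reply after it.
        needs_notice = self._count % self.remind_every == 0
        self._count += 1
        text = f"{AI_DISCLOSURE} {raw_reply}" if needs_notice else raw_reply
        return DisclosedReply(text=text)


if __name__ == "__main__":
    session = DisclosingSession(remind_every=3)
    for raw in ["Hello!", "How can I help?", "Sure.", "Anything else?"]:
        print(session.wrap(raw).text)
```

The sketch keeps two cues separate: the visible text notice for the person in the conversation, and the `ai_generated` flag that an interface could turn into a visual label. A real deployment would also need the safeguards the paragraph above mentions, such as content watermarking and deepfake detection, which this example does not attempt.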

Yet, regulation alone cannot solve the issue if the public remains undereducated about AI’s capabilities and pitfalls. Media literacy and digital hygiene must be taught from an early age, alongside conventional subjects, to empower people to recognize when AI-driven deception might occur. Initiatives to raise awareness—ranging from public service campaigns to school curricula—will reinforce the ethical and practical importance of distinguishing humans from machines.

Finally, this newly proposed Fourth Law is not about limiting the potential of AI. On the contrary, it’s about preserving trust in our increasingly digital interactions, ensuring that innovation continues within a framework that respects our collective well-being. Just as Asimov’s original laws were designed to safeguard humanity from the risk of physical harm, this Fourth Law aims to protect us in the intangible but equally dangerous arenas of deceit, manipulation, and psychological exploitation.

The tragedies of late 2024 must not be in vain. They are a wake-up call—a reminder that AI can and will do actual harm if left unchecked. Let us answer this call by establishing a clear, universal principle that prevents AI from impersonating humans. In so doing, we can build a future where robots and AI systems truly serve us, with our best interests at heart, in an environment marked by trust, transparency, and mutual respect.

Prof. Dariusz Jemielniak, Governing Board Member of The European Institute of Innovation and Technology (EIT), Board Member of the Wikimedia Foundation, Faculty Associate with the Berkman Klein Center for Internet & Society at Harvard and Full Professor of Management at Kozminski University.

