Google Is Talking to Dolphins Using AI

For decades, scientists have been captivated by the intricate sounds dolphins use to communicate. The clicks, whistles, and burst pulses are a language far beyond human understanding. While researchers have developed tools to capture and analyze these sounds, the true challenge lies in deciphering their patterns and uncovering their meaning. Now, with artificial intelligence advancing rapidly, could it finally help us crack the code?

Google DeepMind, Google’s AI research lab, working with researchers at Georgia Tech and drawing on the field research of the Wild Dolphin Project (WDP), has created a new AI model, DolphinGemma, which they claim can decipher dolphin vocalizations. It works by creating synthetic dolphin voices and listening for a matching “reply.”

This groundbreaking AI advancement can help support research efforts in understanding dolphin communication, providing deeper insights into their social behavior, cognitive abilities, and the potential for meaningful interaction between humans and dolphins. It could also play a key role in dolphin conservation efforts by allowing researchers to identify stress signals and monitor environmental threats.

DolphinGemma is built on Google’s Gemma framework and works as an audio-in, audio-out model. It uses training data from WDP, which has vast experience studying wild Atlantic spotted dolphins. With decades of underwater recordings and detailed behavioral observations, WDP provides crucial insights into dolphin communication, allowing DolphinGemma to analyze vocal patterns with rich contextual data.

A key component of DolphinGemma is the SoundStream tokenizer, a neural audio codec developed at Google for efficient compression and processing of audio signals. SoundStream converts dolphin vocalizations into sequences of discrete tokens, a structured format that lets the model represent and process complex acoustic sequences efficiently.
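To make the idea of an audio tokenizer concrete, here is a minimal sketch of vector quantization, the general technique of replacing each short audio frame with the index of its nearest entry in a codebook. This is a toy illustration, not the SoundStream codec: the `CODEBOOK` values, the frame size, and the function names are all invented for the example, and a real neural codec learns its codebooks from data.

```python
# Toy illustration only: NOT the SoundStream codec. The codebook values and
# frame size below are invented for the example.
from math import dist


def frame_signal(samples, frame_size):
    """Split samples into non-overlapping frames, dropping any remainder."""
    return [
        tuple(samples[i:i + frame_size])
        for i in range(0, len(samples) - frame_size + 1, frame_size)
    ]


def tokenize(samples, codebook, frame_size=2):
    """Map each frame to the index of its nearest codebook vector."""
    tokens = []
    for frame in frame_signal(samples, frame_size):
        nearest = min(range(len(codebook)), key=lambda k: dist(frame, codebook[k]))
        tokens.append(nearest)
    return tokens


# Hypothetical 4-entry codebook of 2-sample frames.
CODEBOOK = [(0.0, 0.0), (1.0, 1.0), (-1.0, -1.0), (1.0, -1.0)]

audio = [0.1, -0.1, 0.9, 1.1, -0.8, -1.2, 0.9, -0.7]
tokens = tokenize(audio, CODEBOOK)
print(tokens)  # [0, 1, 2, 3]
```

The output is a sequence of small integers, which is the kind of input a language-model-style network can consume.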

Each acoustic sample is linked to individual dolphin identities, life histories, and observed behaviors, ensuring that the AI system has a rich dataset to learn from. DolphinGemma's predictive capabilities work much like those of large language models (LLMs) for human language, which anticipate the next word or token in a sentence: the model predicts the likely next sounds in a sequence of dolphin vocalizations.
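Next-token prediction can be illustrated with a far simpler model than a neural network. The sketch below counts which token most often follows each token in a set of training sequences (a bigram model) and uses those counts to predict a continuation. It is only an analogy for the idea described above: the token values are made up, and DolphinGemma itself is a neural model, not a count table.

```python
# Toy bigram predictor: an analogy for next-token prediction, not DolphinGemma.
from collections import Counter, defaultdict


def train_bigram(sequences):
    """Count how often each token is followed by each other token."""
    counts = defaultdict(Counter)
    for seq in sequences:
        for current, following in zip(seq, seq[1:]):
            counts[current][following] += 1
    return counts


def predict_next(counts, token):
    """Return the most frequent follower of `token`, or None if unseen."""
    if token not in counts:
        return None
    return counts[token].most_common(1)[0][0]


# Hypothetical token sequences, e.g. the output of an audio tokenizer.
sequences = [[3, 7, 7, 2], [3, 7, 2, 5], [1, 3, 7, 2]]
model = train_bigram(sequences)

print(predict_next(model, 3))   # 7
print(predict_next(model, 7))   # 2
print(predict_next(model, 99))  # None
```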

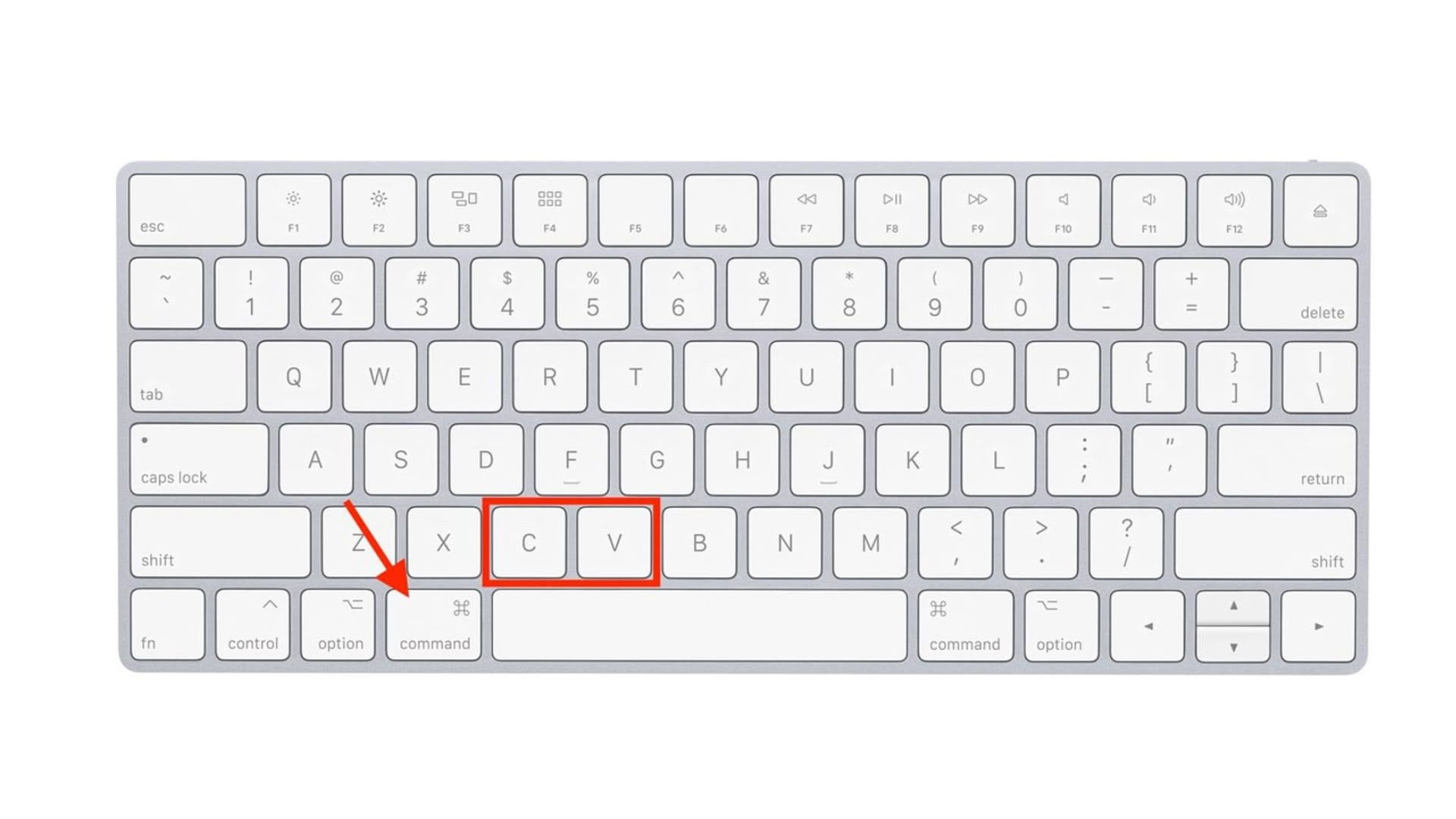

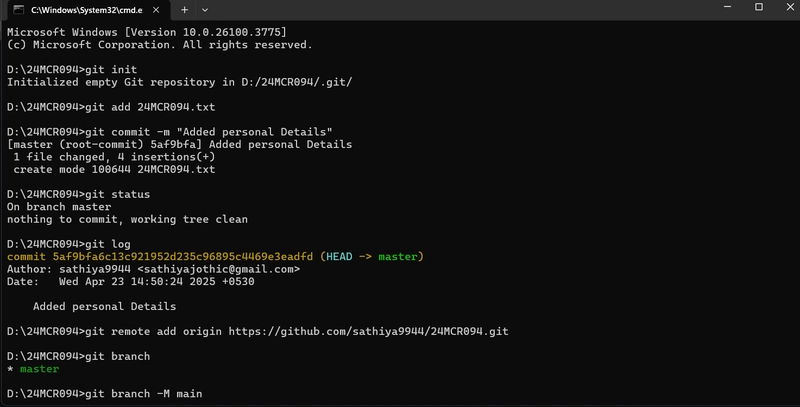

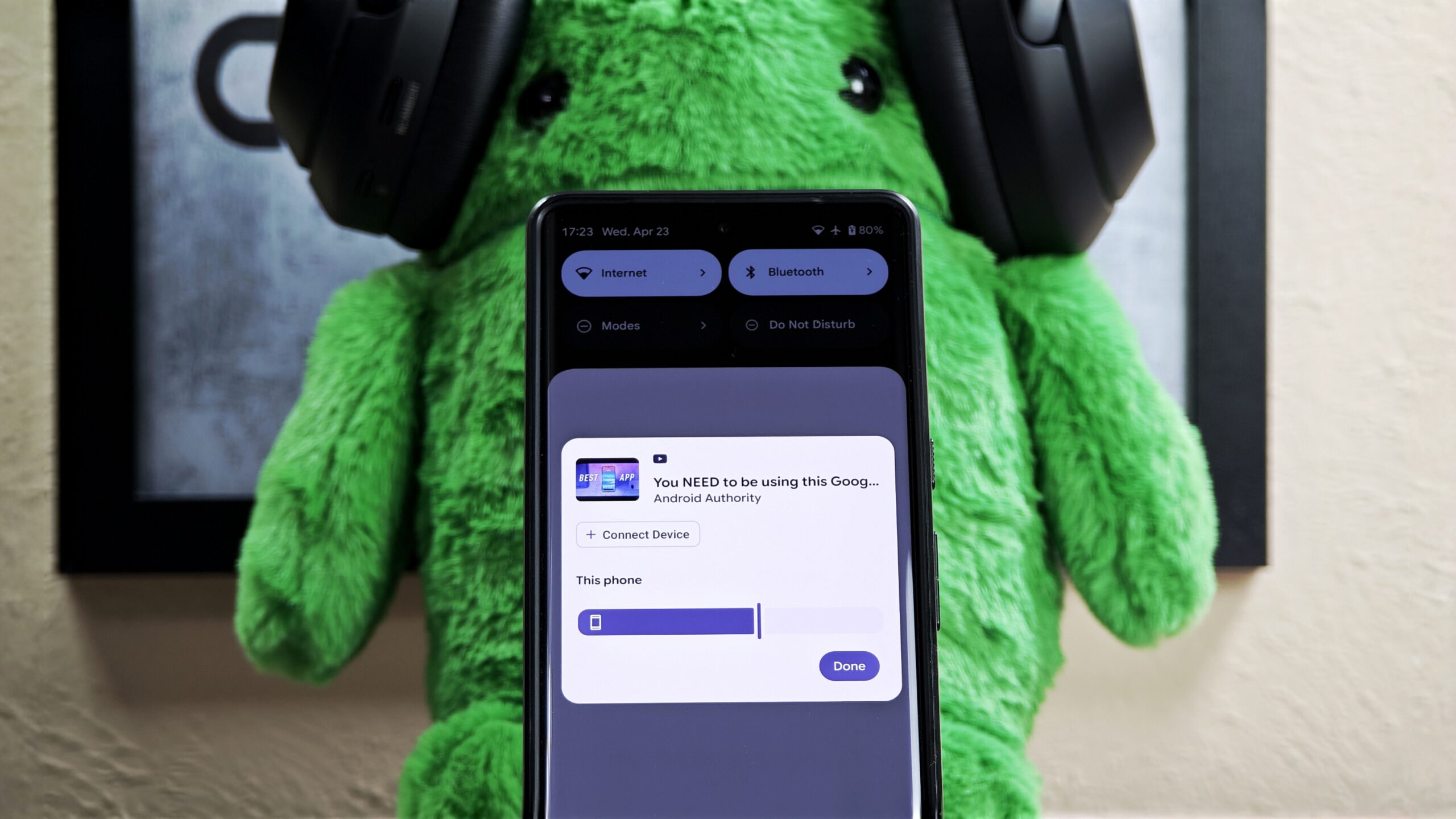

With roughly 400 million parameters, DolphinGemma balances performance and computational efficiency. Researchers can run the model directly on portable devices. This is useful because DolphinGemma will often be deployed for field research, where high-end or specialized hardware may not be available.
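A rough calculation shows why a model of this size suits a phone. The sketch below estimates the memory needed just to hold 400 million weights at a few common numeric precisions. The article does not say which precision DolphinGemma uses, so the options listed are assumptions, and the figures ignore activations and runtime overhead.

```python
# Back-of-the-envelope weight memory for a 400M-parameter model.
# The precisions are illustrative assumptions, not DolphinGemma's actual format.
PARAMS = 400_000_000
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}


def weight_memory_gb(num_params, bytes_per_param):
    """Memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


for precision, size in BYTES_PER_PARAM.items():
    print(f"{precision}: {weight_memory_gb(PARAMS, size):.1f} GB")
# float32: 1.6 GB
# float16: 0.8 GB
# int8: 0.4 GB
```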

WDP is beginning to deploy DolphinGemma this field season using Google’s Pixel 9 smartphone. According to Google, the researchers will be able to run AI models and template-matching algorithms on the device at the same time.
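The article does not say which template-matching algorithm runs on the device. As one common approach, the sketch below slides a known template along a signal and scores each position with normalized cross-correlation, so that a scaled copy of the template still scores 1.0. The signal, the template, and the function names are invented for illustration.

```python
# Toy template matching via normalized cross-correlation.
# An assumed technique for illustration; not necessarily what CHAT uses.
from math import sqrt


def normalized_correlation(window, template):
    """Pearson-style correlation between two equal-length sequences."""
    mean_w = sum(window) / len(window)
    mean_t = sum(template) / len(template)
    dw = [x - mean_w for x in window]
    dt = [x - mean_t for x in template]
    denom = sqrt(sum(x * x for x in dw) * sum(x * x for x in dt))
    if denom == 0:
        return 0.0  # a flat window or template carries no shape to match
    return sum(a * b for a, b in zip(dw, dt)) / denom


def best_match(signal, template):
    """Return (offset, score) of the best-matching window in the signal."""
    scores = [
        normalized_correlation(signal[i:i + len(template)], template)
        for i in range(len(signal) - len(template) + 1)
    ]
    best = max(range(len(scores)), key=scores.__getitem__)
    return best, scores[best]


template = [0.0, 1.0, 0.0, -1.0]
# The same shape at twice the amplitude, starting at index 3.
signal = [0.0, 0.0, 0.0, 0.0, 2.0, 0.0, -2.0, 0.0, 0.0]

offset, score = best_match(signal, template)
print(offset, score)  # 3 1.0
```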

Beyond analyzing dolphin vocalizations, DolphinGemma integrates with the Cetacean Hearing Augmentation Telemetry (CHAT) system to facilitate direct interaction between humans and dolphins. It does this by associating synthetic whistles with specific objects. CHAT was developed by WDP in partnership with Georgia Tech.

DolphinGemma’s predictive power integrated into CHAT helps supercharge the system. It can potentially allow dolphins to communicate with humans. For example, the dolphins can request items, and researchers can respond accordingly, creating a rudimentary form of two-way communication. By refining this technology, scientists may one day engage in meaningful exchanges with dolphins based on their natural language structures.

Google plans on releasing DolphinGemma as an open model, allowing researchers from around the globe to use and adapt the model to study dolphins and other species. Some fine-tuning will be needed to set up the model for different species’ vocalizations.

“Recognizing the value of collaboration in scientific discovery, we’re planning to share DolphinGemma as an open model this summer. While trained on Atlantic spotted dolphin sounds, we anticipate its potential utility for researchers studying other cetacean species, like bottlenose or spinner dolphins. Fine-tuning may be required for different species' vocalizations, and the open nature of the model facilitates this adaptation.”

Widely considered one of the most intelligent creatures in the wild, dolphins may have a far more complex system of communication than we have ever understood. If scientists uncover highly sophisticated vocal patterns, it could reshape how we view their intelligence and interactions.

AI has already been instrumental in helping protect marine animals. Researchers from Rutgers University developed an AI-powered tool that predicts whale habitat and movement, helping ships crossing the Atlantic avoid the animals. As AI continues to become more sophisticated, we can expect it to play an even greater role in advancing ocean research and protecting marine life.
