Vibe Check: False Packages a New LLM Security Risk?

Lots of people swear by large-language model (LLM) AIs for writing code. Lots of people swear at them. Still others may be planning to exploit their peculiarities, according to [Joe …read more

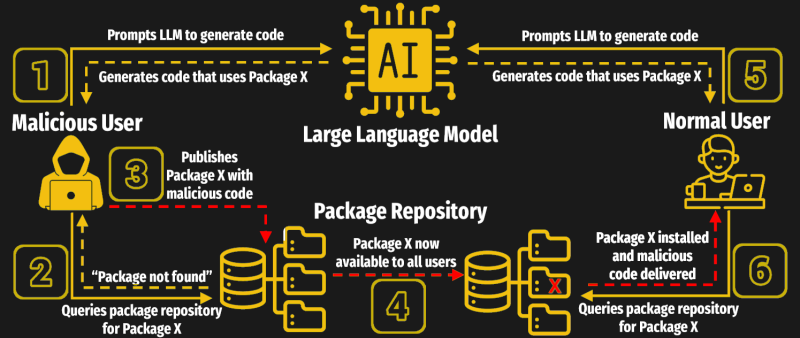

Lots of people swear by large-language model (LLM) AIs for writing code. Lots of people swear at them. Still others may be planning to exploit their peculiarities, according to [Joe Spracklen] and other researchers at USTA. At least, the researchers have found a potential exploit in ‘vibe coding’.

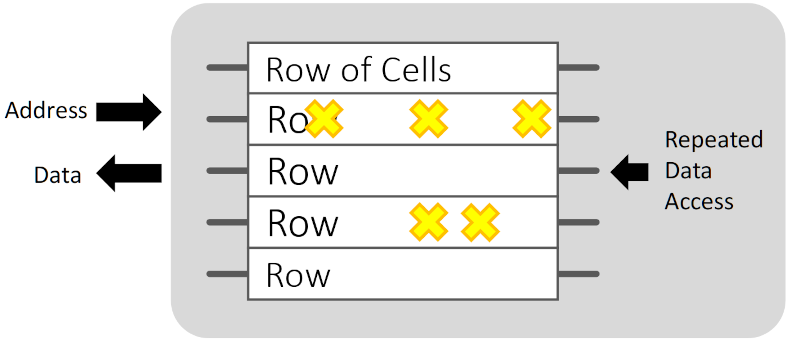

Everyone who has used an LLM knows they have a propensity to “hallucinate”– that is, to go off the rails and create plausible-sounding gibberish. When you’re vibe coding, that gibberish is likely to make it into your program. Normally, that just means errors. If you are working in an environment that uses a package manager, however (like npm in Node.js, or PiPy in Python, CRAN in R-studio) that plausible-sounding nonsense code may end up calling for a fake package.

A clever attacker might be able to determine what sort of false packages the LLM is hallucinating, and inject them as a vector for malicious code. It’s more likely than you think– while CodeLlama was the worst offender, the most accurate model tested (ChatGPT4) still generated these false packages at a rate of over 5%. The researchers were able to come up with a number of mitigation strategies in their full paper, but this is a sobering reminder that an AI cannot take responsibility. Ultimately it is up to us, the programmers, to ensure the integrity and security of our code, and of the libraries we include in it.

We just had a rollicking discussion of vibe coding, which some of you seemed quite taken with. Others agreed that ChatGPT is the worst summer intern ever. Love it or hate it, it’s likely this won’t be the last time we hear of security concerns brought up by this new method of programming.

Special thanks to [Wolfgang Friedrich] for sending this into our tip line.

![[The AI Show Episode 143]: ChatGPT Revenue Surge, New AGI Timelines, Amazon’s AI Agent, Claude for Education, Model Context Protocol & LLMs Pass the Turing Test](https://www.marketingaiinstitute.com/hubfs/ep%20143%20cover.png)

![From Accountant to Data Engineer with Alyson La [Podcast #168]](https://cdn.hashnode.com/res/hashnode/image/upload/v1744420903260/fae4b593-d653-41eb-b70b-031591aa2f35.png?#)

.png?#)

![Apple Watch SE 2 On Sale for Just $169.97 [Deal]](https://www.iclarified.com/images/news/96996/96996/96996-640.jpg)

![Apple Posts Full First Episode of 'Your Friends & Neighbors' on YouTube [Video]](https://www.iclarified.com/images/news/96990/96990/96990-640.jpg)