LightPROF: A Lightweight AI Framework that Enables Small-Scale Language Models to Perform Complex Reasoning Over Knowledge Graphs (KGs) Using Structured Prompts

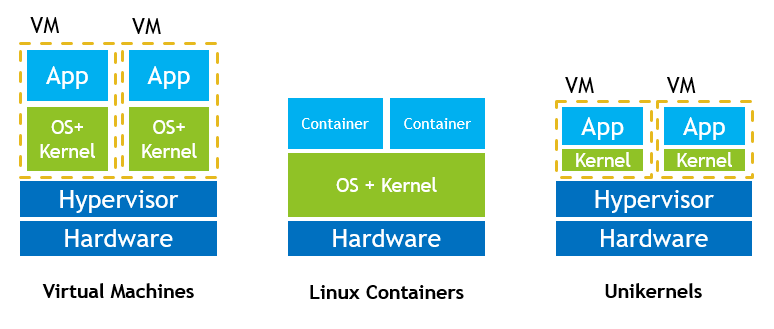

Large Language Models (LLMs) have revolutionized natural language processing, demonstrating strong abilities on complex zero-shot tasks thanks to extensive training data and vast parameter counts. However, LLMs often struggle with knowledge-intensive tasks because they lack task-specific prior knowledge and understanding. For effective reasoning, LLMs need access to reliable, continuously updated knowledge bases, and Knowledge Graphs (KGs) are ideal candidates because of their structured semantic framework. Current approaches to LLM reasoning on KGs encounter two obstacles. First, representing KG content as long stretches of text fails to convey the rich logical relationships in the graph structure. Second, the retrieval and reasoning processes demand numerous LLM calls and substantial reasoning power.

Prompt engineering has emerged as a critical technique for expanding LLM capabilities across applications without modifying model parameters. The field has evolved from simple zero-shot and few-shot prompts to more complex approaches such as Chain-of-Thought (CoT), Tree-of-Thoughts (ToT), and Graph-of-Thoughts (GoT). KG-based LLM reasoning has gained traction because KGs provide explicit, structured knowledge with clear logical structure, which improves an LLM's knowledge awareness. Methods such as KAPING, KGGPT, StructGPT, ToG, and KnowledgeNavigator construct LLM prompts from KG facts, using techniques such as semantic similarity retrieval, multi-step reasoning frameworks, and beam search on KGs to enhance reasoning.
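To make the idea of building a prompt from KG facts concrete, here is a minimal sketch of similarity-based triple retrieval. It is not the implementation of any method named above: the toy triples, the bag-of-words cosine score, and the `build_prompt` helper are all assumptions chosen so the example runs with the standard library alone. Real systems typically use learned sentence embeddings for the similarity step.

```python
import math
import re
from collections import Counter

# Toy KG triples (hypothetical data, for illustration only).
TRIPLES = [
    ("Marie Curie", "born in", "Warsaw"),
    ("Marie Curie", "field of work", "physics"),
    ("Warsaw", "capital of", "Poland"),
    ("Albert Einstein", "born in", "Ulm"),
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[token] * b[token] for token in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def verbalize(triple):
    """Render a triple as plain text for inclusion in a prompt."""
    head, relation, tail = triple
    return f"({head}, {relation}, {tail})"


def build_prompt(question, triples, k=2):
    """Keep the k triples most similar to the question and prepend them as facts."""
    q_vec = Counter(tokenize(question))
    ranked = sorted(
        triples,
        key=lambda t: cosine(q_vec, Counter(tokenize(" ".join(t)))),
        reverse=True,
    )
    facts = "\n".join(verbalize(t) for t in ranked[:k])
    return f"Facts:\n{facts}\n\nQuestion: {question}\nAnswer:"


prompt = build_prompt("Where was Marie Curie born?", TRIPLES)
print(prompt)
```

Note that every retrieved fact is spelled out as text, so the prompt grows with the number of triples. That is the token cost the first obstacle above refers to.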

Researchers from Beijing University of Posts and Telecommunications, Hangzhou Dianzi University, Singapore Management University, National University of Singapore, Institute of Computing Technology at Chinese Academy of Sciences, and Xi’an Jiaotong University have proposed LightPROF, a Lightweight and efficient Prompt learning-ReasOning Framework. Its Retrieve-Embed-Reason design enables small-scale LLMs to perform stable retrieval and efficient reasoning on KGs. The framework contains three core modules: Retrieval, Embedding, and Reasoning. The Retrieval module uses relations as the fundamental retrieval unit and limits the retrieval scope based on the semantics of the question. The Embedding module uses a compact Transformer-based Knowledge Adapter. The Reasoning module combines the embedded representation vectors with carefully designed prompts. LightPROF supports various open-source LLMs and KGs, and only the Knowledge Adapter needs to be tuned during training.
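The relation-based retrieval step can be illustrated with a small sketch. Everything here is an assumption for illustration: the toy KG, the function names, and the word-overlap `score` function, which merely stands in for whatever relevance ranking the framework actually uses. The point is only to show what "relations as retrieval units, with a question-dependent scope" could look like: enumerate relation sequences up to a hop limit from an anchor entity, rank them against the question, then sample the matching triples.

```python
from collections import defaultdict

# Toy KG (hypothetical), stored as head -> [(relation, tail), ...].
KG = defaultdict(list)
for head, relation, tail in [
    ("Obama", "spouse", "Michelle"),
    ("Obama", "place_of_birth", "Honolulu"),
    ("Honolulu", "contained_by", "Hawaii"),
    ("Michelle", "place_of_birth", "Chicago"),
]:
    KG[head].append((relation, tail))


def relation_links(anchor, max_hops):
    """Enumerate relation sequences of length 1..max_hops starting at the anchor."""
    links = set()
    frontier = [(anchor, ())]
    for _ in range(max_hops):
        next_frontier = []
        for entity, path in frontier:
            for relation, tail in KG.get(entity, []):
                new_path = path + (relation,)
                links.add(new_path)
                next_frontier.append((tail, new_path))
        frontier = next_frontier
    return links


def score(link, question):
    """Stand-in relevance score: word overlap between relation names and the question."""
    q_words = set(question.lower().replace("?", "").split())
    link_words = {word for relation in link for word in relation.split("_")}
    return len(q_words & link_words)


def sample_paths(anchor, link):
    """Follow one relation link from the anchor and collect the matching triple paths."""
    paths = [(anchor, [])]
    for relation in link:
        paths = [
            (tail, triples + [(entity, relation, tail)])
            for entity, triples in paths
            for rel, tail in KG.get(entity, [])
            if rel == relation
        ]
    return [triples for _, triples in paths]


question = "What is the place of birth of Obama's spouse?"
links = relation_links("Obama", max_hops=2)  # the hop limit bounds the retrieval scope
best = max(links, key=lambda link: (score(link, question), len(link)))
reasoning_paths = sample_paths("Obama", best)
print(best, reasoning_paths)
```

Ranking relation sequences rather than individual triples keeps the candidate set small, since a KG usually has far fewer distinct relations than entities.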

LightPROF is evaluated on two Freebase-based public datasets: WebQuestionsSP (WebQSP) and ComplexWebQuestions (CWQ). WebQSP serves as a benchmark with fewer questions (4,737) but a larger KG, and CWQ is designed for complex KG question answering, with 34,689 question-answer pairs built upon WebQSP. Performance is measured using match accuracy (Hits@1), which evaluates whether the model’s top answer is correct. LightPROF is compared against three categories of baseline methods: full fine-tuning approaches (including KV-Mem, EmbedKGQA, TransferNet, NSM, and others), vanilla LLM methods (featuring LLaMa series models), and LLM+KGs methods (such as StructGPT, ToG, KnowledgeNavigator, and AgentBench).
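As a quick illustration of the metric, the sketch below computes Hits@1 over a handful of made-up predictions. The function name, the case-insensitive string matching, and the sample data are assumptions; the actual evaluation scripts for these benchmarks may normalize answers differently.

```python
def hits_at_1(predictions, gold_answers):
    """Fraction of questions whose top-ranked prediction is among the gold answers.

    predictions:  one ranked list of answer strings per question
    gold_answers: one set of acceptable answer strings per question
    """
    if len(predictions) != len(gold_answers):
        raise ValueError("predictions and gold_answers must have the same length")
    if not predictions:
        return 0.0
    hits = 0
    for ranked, gold in zip(predictions, gold_answers):
        normalized_gold = {g.strip().lower() for g in gold}
        # An empty ranked list counts as a miss.
        if ranked and ranked[0].strip().lower() in normalized_gold:
            hits += 1
    return hits / len(predictions)


# Hypothetical predictions for three questions.
preds = [["Chicago", "Honolulu"], ["Paris"], []]
gold = [{"Chicago"}, {"London"}, {"Rome"}]
result = hits_at_1(preds, gold)
print(f"Hits@1 = {result:.3f}")
```

Only the first item of each ranked list matters, so a correct answer in second place still counts as a miss.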

LightPROF significantly outperforms state-of-the-art models, achieving 83.7% accuracy on the WebQSP dataset and 59.3% on the more challenging CWQ dataset. These results validate LightPROF’s effectiveness in handling multi-hop and complex reasoning challenges in KG question answering. When different LLMs are integrated into the framework, LightPROF consistently improves performance regardless of the baseline capabilities of the original models. This plug-and-play integration strategy eliminates the need for costly LLM fine-tuning. Efficiency evaluations against StructGPT show that LightPROF uses resources more economically, with a 30% reduction in processing time, a 98% reduction in input token usage, and far fewer tokens per request.

In conclusion, the researchers introduced LightPROF, a novel framework that enhances LLM reasoning through accurate retrieval and efficient encoding of KGs. It narrows the retrieval scope by sampling KGs using stable relations as units. The researchers developed a complex Knowledge Adapter that parses graph structures and integrates their information to enable efficient reasoning with smaller LLMs. The adapter condenses reasoning graphs into fewer tokens, and its Projector component aligns the result with the LLM’s input space. Future research directions include developing KG encoders with strong generalization capabilities that can be applied to unseen KG data without retraining, and designing unified cross-modal encoders capable of handling multimodal KGs.
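The idea of condensing a reasoning graph into a few LLM-sized vectors can be sketched as follows. This is a toy under stated assumptions, not the Knowledge Adapter described in the paper: the article only says the adapter is Transformer-based and has a Projector, so the random embeddings, the mean-pooled fusion, the single linear projection layer, and the dimensions `ADAPTER_DIM` and `LLM_DIM` are all hypothetical stand-ins. No training is shown.

```python
import random

random.seed(0)  # deterministic toy weights

ADAPTER_DIM = 4  # hypothetical adapter hidden size
LLM_DIM = 8      # hypothetical LLM token-embedding size


def random_vector(dim):
    """Return a random vector standing in for learned parameters."""
    return [random.uniform(-1.0, 1.0) for _ in range(dim)]


# Hypothetical embedding table for entities and relations, filled lazily.
EMBEDDINGS = {}


def embed(name):
    if name not in EMBEDDINGS:
        EMBEDDINGS[name] = random_vector(ADAPTER_DIM)
    return EMBEDDINGS[name]


def encode_path(path):
    """Fuse all triples of one reasoning path into a single ADAPTER_DIM vector.

    Stand-in fusion: sum head + relation + tail per triple, then average over triples.
    """
    if not path:
        raise ValueError("a reasoning path needs at least one triple")
    fused = [0.0] * ADAPTER_DIM
    for head, relation, tail in path:
        h, r, t = embed(head), embed(relation), embed(tail)
        for i in range(ADAPTER_DIM):
            fused[i] += h[i] + r[i] + t[i]
    return [value / len(path) for value in fused]


# Projector stand-in: one untrained linear layer, ADAPTER_DIM -> LLM_DIM.
PROJECTOR = [random_vector(ADAPTER_DIM) for _ in range(LLM_DIM)]


def project(vector):
    """Map an adapter vector into the (hypothetical) LLM embedding space."""
    return [sum(w * x for w, x in zip(row, vector)) for row in PROJECTOR]


def soft_prompt(paths):
    """Produce one LLM-sized vector per reasoning path, regardless of path length."""
    return [project(encode_path(path)) for path in paths]


paths = [
    [("Obama", "spouse", "Michelle"), ("Michelle", "place_of_birth", "Chicago")],
    [("Obama", "place_of_birth", "Honolulu")],
]
vectors = soft_prompt(paths)
print(len(vectors), "soft tokens of size", len(vectors[0]))
```

In this sketch two reasoning paths become two embedding-sized vectors, whereas writing the same three triples out as text would cost many more input tokens. That is the kind of saving the efficiency comparison points to.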

The post LightPROF: A Lightweight AI Framework that Enables Small-Scale Language Models to Perform Complex Reasoning Over Knowledge Graphs (KGs) Using Structured Prompts appeared first on MarkTechPost.
