"Unlocking LLMs: Enhancing Reasoning and Text Generation with Structural Alignment"

In a world where communicating effectively can make or break opportunities, understanding how large language models (LLMs) operate matters more than ever. Have you ever been frustrated by generated text that lacks coherence or depth? Or wondered why some AI responses seem so much more insightful than others? If so, you're not alone: many people struggle to get meaningful, well-reasoned output from LLMs. In this blog post, we demystify how LLMs work and focus on a promising concept: structural alignment. This approach aims to make generated text more coherent and better reasoned, turning loosely connected sentences into well-organized narratives that engage and inform. By exploring how structural alignment works and where it is applied, you'll gain practical insight into using LLM technology in your own projects. Along the way, we look at the trends likely to shape how we interact with AI next.

Understanding LLMs: The Basics of Language Models

Large Language Models (LLMs) are sophisticated AI systems designed to understand and generate human-like text. They are trained on vast datasets with deep learning techniques that learn patterns in language. Their performance depends on several factors, including model size, the number of training steps, and hyperparameters. As models scale up, they often reason better, but not without limit: overparameterization can hurt reasoning performance. Research on models trained over knowledge graphs suggests that the complexity of the graph structure, captured by a measure called search entropy, influences which model size works best for reasoning tasks such as question answering. This work argues for empirical scaling methods that match model size to the knowledge graph a model must reason over, rather than simply making models bigger.
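
The post does not define search entropy precisely. As a rough, hypothetical stand-in, the sketch below measures how "branchy" a graph is using the entropy rate of a uniform random walk over it: a graph that offers many choices at each step has a higher rate than one with few. The function name and the choice of measure are assumptions for illustration, not the definition used in the research.

```python
import math
from collections import defaultdict

def random_walk_entropy_rate(edges):
    """Entropy rate (in nats) of a uniform random walk on an undirected graph.

    Assumes a connected, simple graph given as (u, v) edge pairs. The walk's
    stationary distribution is pi_i = deg(i) / 2m, and a step from node i has
    entropy log(deg(i)), so the rate is sum_i pi_i * log(deg(i)).
    """
    if not edges:
        return 0.0
    degree = defaultdict(int)
    for u, v in edges:
        degree[u] += 1
        degree[v] += 1
    two_m = 2 * len(edges)
    return sum((d / two_m) * math.log(d) for d in degree.values())

# A triangle offers two choices at every step; a star keeps forcing the walk back to its hub.
triangle = [("a", "b"), ("b", "c"), ("c", "a")]
star = [("hub", "x"), ("hub", "y"), ("hub", "z")]
print(random_walk_entropy_rate(triangle))  # log(2), about 0.693
print(random_walk_entropy_rate(star))      # 0.5 * log(3), about 0.549
```

Under this stand-in measure, the triangle scores higher than the star because a walker on it faces a real choice at every node.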

Key Components Influencing LLM Performance

Getting the most out of an LLM depends on how these elements interact. Hyperparameters govern how well a model learns from data and how stable training remains, which matters especially for reinforcement learning algorithms such as Proximal Policy Optimization (PPO). Understanding the structure of the knowledge a model draws on also helps researchers fine-tune models effectively. A related line of work, multilingual retrieval-augmented generation (RAG), integrates external knowledge into inference, which helps models understand and answer questions across diverse languages and contexts.
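
To make the link between hyperparameters and training stability concrete, here is a minimal sketch of PPO's clipped surrogate objective in plain Python. The clip range `clip_eps` is exactly the kind of hyperparameter the paragraph refers to: it caps how far the probability ratio between the new and old policy can move in a single update. The function name and the default of 0.2 are illustrative choices, not settings taken from any particular structural-alignment setup.

```python
import math

def ppo_clipped_objective(new_logp, old_logp, advantages, clip_eps=0.2):
    """Mean clipped surrogate objective from PPO (to be maximized).

    new_logp / old_logp: log-probabilities of the taken actions (e.g. tokens)
    under the current policy and the policy that collected the data;
    advantages: their advantage estimates. All three must be equally long.
    """
    if not (len(new_logp) == len(old_logp) == len(advantages)) or not advantages:
        raise ValueError("inputs must be non-empty and equally long")
    total = 0.0
    for lp_new, lp_old, adv in zip(new_logp, old_logp, advantages):
        ratio = math.exp(lp_new - lp_old)
        clipped_ratio = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
        # Keep the pessimistic (smaller) of the unclipped and clipped terms.
        total += min(ratio * adv, clipped_ratio * adv)
    return total / len(advantages)

# A ratio of 1.5 with a positive advantage is capped at 1 + clip_eps.
print(ppo_clipped_objective([math.log(1.5)], [0.0], [1.0]))
```

A smaller `clip_eps` makes updates more conservative (and usually more stable) at the cost of slower learning.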

Through ongoing work on structural alignment and on reinforcement learning strategies such as reinforcement learning from AI feedback (RLAIF), language modeling is evolving rapidly toward more coherent, contextually relevant outputs for complex tasks.

The Importance of Reasoning in Text Generation

Reasoning plays a pivotal role in effective text generation, particularly in Large Language Models (LLMs). As these models scale up, their reasoning ability depends on more than size: overparameterization and poorly chosen hyperparameters can both degrade it. Research indicates that choosing a model size suited to a specific knowledge graph improves reasoning performance, which in turn supports coherent, contextually relevant text. Studying graph structure and search entropy also offers insight into how LLMs process information. Using empirical scaling methods, researchers aim to design training setups that strengthen reasoning further.

Enhancing Coherence Through Reasoning

The ability to reason logically not only helps a model produce accurate responses but also keeps generated content coherent across longer texts. This is where techniques like Structural Alignment come in: they align LLM outputs with human-like discourse structures. Such alignment lets models draw on linguistic frameworks to tackle the challenges of long-form text generation. Ultimately, better-structured reasoning makes interactions between users and language models more useful.

What is Structural Alignment? A Quick Overview

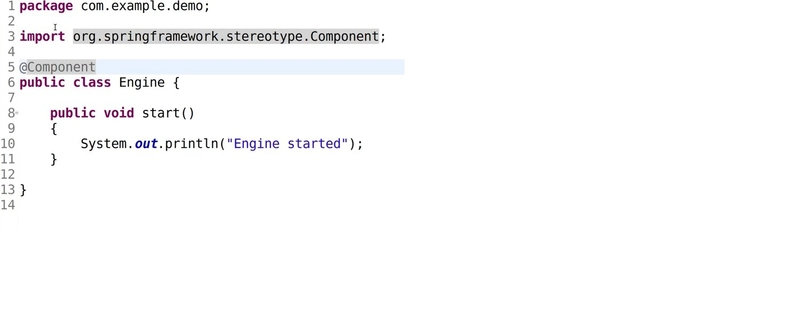

Structural Alignment is a recently proposed method for improving the coherence and quality of text generated by Large Language Models (LLMs). It uses linguistic structure, in particular hierarchical discourse trees based on Rhetorical Structure Theory (RST), to align LLM outputs with human-like discourse patterns. By targeting the difficulties of generating long-form text, it helps models produce better-organized, more logically connected output. It employs reinforcement learning techniques such as reinforcement learning from AI feedback (RLAIF) and Proximal Policy Optimization (PPO), the latter for training stability, together with a reward system that incorporates discourse motifs and token-level rewards.
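
To make "hierarchical discourse trees" and "discourse motifs" more concrete, the sketch below represents a tiny RST-style tree and counts simple motifs, where a motif is taken to be a relation together with the nuclearity and labels of its children. This is a simplified, hypothetical encoding for illustration: the `RSTNode` class, the motif definition, and the example tree are all assumptions, and the motifs used in the Structural Alignment work may be defined differently.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import List, Optional

@dataclass
class RSTNode:
    """Simplified RST node: leaves hold an elementary discourse unit (EDU),
    internal nodes hold a rhetorical relation over their children."""
    relation: Optional[str] = None  # e.g. "Elaboration"; None for leaves
    nuclearity: str = "N"           # "N" (nucleus) or "S" (satellite) relative to the parent
    text: Optional[str] = None      # EDU text, leaves only
    children: List["RSTNode"] = field(default_factory=list)

def node_label(node: RSTNode) -> str:
    return node.relation if node.children else "EDU"

def extract_motifs(root: RSTNode) -> Counter:
    """Count (relation, children's nuclearity:label) patterns over the whole tree."""
    motifs = Counter()
    stack = [root]
    while stack:
        node = stack.pop()
        if node.children:
            pattern = tuple(f"{c.nuclearity}:{node_label(c)}" for c in node.children)
            motifs[(node.relation, pattern)] += 1
            stack.extend(node.children)
    return motifs

# "LLMs generate text. Short answers are often fine, but long essays can drift."
tree = RSTNode(relation="Elaboration", children=[
    RSTNode(nuclearity="N", text="LLMs generate text."),
    RSTNode(relation="Contrast", nuclearity="S", children=[
        RSTNode(nuclearity="N", text="Short answers are often fine,"),
        RSTNode(nuclearity="N", text="but long essays can drift."),
    ]),
])
for motif, count in extract_motifs(tree).items():
    print(motif, count)
```

Comparing motif counts from model output against those from human-written text is one plausible way such patterns could feed into a reward.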

Key Features of Structural Alignment

One significant aspect of Structural Alignment is that it works at two levels: surface-level alignment, which rewards readable, explicitly structured text, and graph-level alignment, which rewards deeper discourse organization. Together these improve the generation process and keep the output close to established linguistic norms. Separately, research on empirical scaling methods tunes model size and hyperparameters to the knowledge graph a model reasons over, approaching reasoning ability from a different angle. Continued work in both directions lays a foundation for future advances in both the ethics and the practical applications of natural language processing.
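
Surface-level alignment rewards text whose visible organization resembles human writing. The paragraph does not list the features involved, so the sketch below uses a few hypothetical ones (paragraph count, average sentence length, and the share of sentences opening with a discourse marker) and scores a candidate by how close its features are to a human reference. Treat every feature, the marker list, and the distance-based reward as assumptions rather than the method's actual reward model.

```python
import re

# Hypothetical marker list; a real system would use a richer, validated set.
DISCOURSE_MARKERS = ("however", "therefore", "moreover", "for example", "in contrast", "finally")

def surface_features(text: str) -> dict:
    """A few crude surface-level structure features of a text."""
    paragraphs = [p for p in text.split("\n\n") if p.strip()]
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    n_sent = max(len(sentences), 1)
    marked = sum(1 for s in sentences if s.lower().startswith(DISCOURSE_MARKERS))
    return {
        "paragraphs": len(paragraphs),
        "avg_sentence_words": len(text.split()) / n_sent,
        "marker_rate": marked / n_sent,
    }

def surface_reward(candidate: str, reference: str) -> float:
    """Negative L1 distance between feature dicts: 0 is a perfect match, lower is worse."""
    cand, ref = surface_features(candidate), surface_features(reference)
    return -sum(abs(cand[k] - ref[k]) for k in cand)

human = "LLMs write text. However, long texts drift.\n\nFinally, structure helps."
model = "LLMs write text and long texts drift and structure helps."
print(surface_reward(model, human))
```

The run-on candidate scores well below zero because it has one paragraph, one long sentence, and no discourse markers.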

How Structural Alignment Enhances LLM Performance

Structural alignment improves the performance of Large Language Models (LLMs) by steering generated text toward human-like discourse structures. It draws on linguistic frameworks such as Rhetorical Structure Theory (RST), whose hierarchical discourse trees guide the model's output. By aligning both surface-level and graph-level structure, LLMs can produce more coherent and contextually relevant long-form content. Reinforcement learning ties this together: PPO keeps training stable, while reinforcement learning from AI feedback (RLAIF) supplies structured rewards based on discourse motifs.
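
The reward system described earlier combines discourse motifs with token-level rewards. One simple way to turn segment-level scores into token-level rewards is sketched below: each discourse segment's score is spread evenly over its tokens, and per-token returns-to-go (the signal PPO-style training consumes) are then computed. How the actual reward models score segments is not described in the post, so the scores are hypothetical inputs and both function names are assumptions.

```python
def token_level_rewards(segment_scores, segment_lengths):
    """Spread each discourse segment's score evenly over the tokens it spans."""
    if len(segment_scores) != len(segment_lengths):
        raise ValueError("need one length per segment score")
    rewards = []
    for score, n_tokens in zip(segment_scores, segment_lengths):
        if n_tokens <= 0:
            raise ValueError("segments must contain at least one token")
        rewards.extend([score / n_tokens] * n_tokens)
    return rewards

def returns_to_go(rewards, gamma=1.0):
    """Discounted sum of current and future rewards for every token position."""
    returns = [0.0] * len(rewards)
    running = 0.0
    for t in range(len(rewards) - 1, -1, -1):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns

# Hypothetical scores: a well-structured 2-token segment, then a weak 1-token one.
rewards = token_level_rewards([1.0, -0.5], [2, 1])
print(rewards)                 # [0.5, 0.5, -0.5]
print(returns_to_go(rewards))  # [0.5, 0.0, -0.5]
```

Dense rewards like these tell the model which parts of a long output helped or hurt, instead of a single score for the whole text.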

Key Mechanisms for Improvement

Structural alignment optimizes token generation and helps models focus on the elements that matter for coherent discourse, while related work on overparameterization and knowledge graphs pursues the same goal for reasoning during text generation. In addition, length-penalty normalization keeps scores comparable across outputs of different lengths, so quality, coherence, and relevance hold up in longer texts. As models gain better structural understanding, they open the way to stronger applications in education, content creation, and multilingual processing, changing how machines understand and generate language.
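
The post does not say which length normalization it has in mind. A widely used form is the length penalty from Google's neural machine translation (GNMT) beam search, which divides a sequence's total log-probability by ((5 + |Y|) / 6)^α so that longer outputs are not automatically ranked lower just because they accumulate more negative log-probability. The sketch assumes this form purely for illustration; the function names and α values are assumptions.

```python
def gnmt_length_penalty(length: int, alpha: float = 0.6) -> float:
    """Length penalty lp(Y) = ((5 + |Y|) / 6) ** alpha, as in GNMT-style beam search."""
    return ((5 + length) / 6) ** alpha

def normalized_score(total_logprob: float, length: int, alpha: float = 0.6) -> float:
    """Divide a sequence's total log-probability by the length penalty."""
    return total_logprob / gnmt_length_penalty(length, alpha)

# Raw log-probs favor the short output; normalization can change which output ranks first.
candidates = [(-4.0, 5), (-9.0, 40)]  # (total log-prob, length in tokens)
for logp, n in candidates:
    print(n, logp, round(normalized_score(logp, n, alpha=1.0), 3))
```

With α = 0 the penalty is 1 and no normalization happens; larger α favors longer outputs more strongly.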

Real-World Applications of Enhanced LLMs

Enhanced Large Language Models (LLMs) are transforming many industries by improving text generation, comprehension, and interaction. In customer service, for instance, LLMs power automated responses that follow human-like conversation patterns, and structural alignment techniques can make these exchanges more coherent, which leads to higher user satisfaction. In education, these models support personalized learning by generating content tailored to student queries or progress assessments.

Multilingual Capabilities

Retrieval-augmented generation (RAG) lets enhanced LLMs process multilingual information effectively. By drawing on external knowledge during inference, they can outperform traditional monolingual systems on tasks such as open-domain question answering across diverse languages. This capability is particularly valuable for global organizations that want to provide localized support without compromising quality.
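
The retrieve-then-generate flow behind RAG can be sketched in a few lines. The example below ranks passages by bag-of-words cosine similarity and packs the best ones into a prompt; a real multilingual system would instead use a multilingual embedding model, so that a query can match passages that share no surface words with it. The document collection, function names, and prompt template are all hypothetical, and the final call to an LLM is left out.

```python
import math
import re
from collections import Counter

def bag_of_words(text):
    # \w matches Unicode letters, so words like "à" or "fließt" are kept.
    return Counter(re.findall(r"\w+", text.lower()))

def cosine(a, b):
    dot = sum(count * b[token] for token, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query, documents, k=2):
    """Return the k documents most similar to the query."""
    q = bag_of_words(query)
    ranked = sorted(documents, key=lambda d: cosine(q, bag_of_words(d["text"])), reverse=True)
    return ranked[:k]

def build_prompt(query, passages):
    """Assemble retrieved passages (tagged with their language) into a prompt."""
    context = "\n".join(f"[{p['lang']}] {p['text']}" for p in passages)
    return f"Answer using the context below.\n\nContext:\n{context}\n\nQuestion: {query}"

documents = [
    {"lang": "en", "text": "The Eiffel Tower is in Paris."},
    {"lang": "fr", "text": "La tour Eiffel se trouve à Paris."},
    {"lang": "de", "text": "Der Rhein fließt durch Köln."},
]
query = "Where is the Eiffel Tower?"
print(build_prompt(query, retrieve(query, documents)))
```

Here the French passage is retrieved only because it shares the words "Eiffel" and "Paris", which shows why word overlap alone is a weak basis for cross-lingual retrieval.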

Content Creation and Analysis

In the realm of content creation, enhanced LLMs streamline the writing process by producing high-quality long-form texts while adhering to linguistic structures like Rhetorical Structure Theory (RST). They can generate essays or articles with improved coherence and relevance through advanced reinforcement learning strategies that reward meaningful discourse motifs. These applications not only save time but also enhance creativity in professional writing environments.

Overall, the real-world implications of enhanced LLMs extend beyond mere automation; they foster deeper engagement and understanding across various sectors while addressing complex challenges inherent in language processing tasks.

Future Trends in LLM Development and Reasoning

The future of Large Language Models (LLMs) is poised for significant advancements, particularly in their reasoning capabilities. Research indicates that while increasing model size can enhance performance, overparameterization may hinder reasoning efficiency. Therefore, a balanced approach to scaling is essential. Innovations such as Structural Alignment are expected to play a pivotal role by aligning LLMs with human-like discourse structures, improving coherence in long-form text generation.

Key Areas of Focus

Future developments will likely emphasize hyperparameter optimization and the integration of complex knowledge graphs to refine reasoning further. Retrieval-augmented generation will also be crucial for multilingual applications, allowing models to use external knowledge effectively during inference. Additionally, reinforcement learning methods such as reinforcement learning from AI feedback (RLAIF) promise more stable training and better reward systems based on discourse motifs.

As researchers explore these avenues, we anticipate breakthroughs that not only improve the quality of generated content but also address ethical considerations surrounding AI-generated text. This multifaceted approach ensures that LLMs evolve into more reliable tools capable of producing coherent narratives across diverse languages and contexts while maintaining alignment with human communication standards.

In conclusion, exploring large language models (LLMs) reveals their transformative potential for text generation and reasoning. Understanding the fundamentals of LLMs matters because they now underpin applications across many industries. Reasoning is central: it makes generated content more coherent and relevant, and interactions more meaningful. Structural alignment stands out as a technique that improves LLM output by making it not only contextually appropriate but also better organized and easier to follow. With applications ranging from customer service automation to creative writing assistance, enhanced LLMs can significantly boost both productivity and creativity. Looking ahead, LLM development is likely to keep focusing on stronger reasoning, promising further advances in how machines understand and generate human-like text. Embracing these innovations will shape how we interact with technology in the years ahead.

FAQs on "Unlocking LLMs: Enhancing Reasoning and Text Generation with Structural Alignment"

1. What are Large Language Models (LLMs) and how do they work?

Large language models (LLMs) are algorithms designed to understand and generate human language. They analyze vast amounts of text data to learn patterns in language usage, which lets them predict the next word in a sentence or generate coherent paragraphs from a given prompt.
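
The next-word prediction described above can be illustrated with the simplest possible language model, a bigram counter. Real LLMs use neural networks over subword tokens and long contexts, so this is only a toy showing the core idea of predicting the next word from patterns in training text; the corpus and function names are made up for the example.

```python
from collections import Counter, defaultdict

def train_bigram(corpus):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        tokens = sentence.lower().split()
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts

def predict_next(counts, word):
    """Most frequent follower of `word`, or None if the word was never followed."""
    following = counts.get(word.lower())
    if not following:
        return None
    return following.most_common(1)[0][0]

model = train_bigram(["the model predicts the next word", "the model writes text"])
print(predict_next(model, "the"))   # model
print(predict_next(model, "next"))  # word
```

An LLM does the same kind of prediction, but conditions on the whole preceding context rather than a single previous word.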

2. Why is reasoning important in text generation?

Reasoning is crucial for generating meaningful and contextually appropriate responses. It allows LLMs to not only produce grammatically correct sentences but also ensure that the content makes logical sense, adheres to facts, and aligns with user intent.

3. What does structural alignment mean in the context of LLMs?

Structural alignment is a training method that aligns an LLM's output with human-like discourse structure. It uses frameworks such as Rhetorical Structure Theory (RST) to represent how the ideas in a text relate to one another, then uses reinforcement learning to reward the model for producing text whose organization resembles well-structured human writing.

4. How does structural alignment improve the performance of LLMs?

By rewarding output whose discourse structure resembles human writing, structural alignment helps LLMs stay coherent and well organized across long texts. This leads to higher-quality text generation that is more relevant and easier to follow.

5. What are some real-world applications of enhanced LLMs using structural alignment?

Enhanced LLMs utilizing structural alignment have numerous applications including advanced chatbots for customer service, automated content creation for marketing purposes, improved educational tools for personalized learning experiences, and sophisticated research assistants capable of synthesizing complex information efficiently.

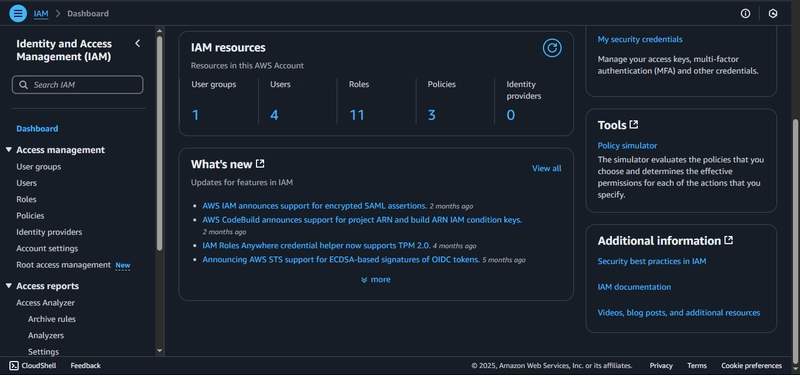
