Google to bring Ironwood TPU, Gemini 2.5 Flash, and more cutting-edge AI innovations

AI holds the power to improve lives, enhance productivity, and reimagine processes on a scale previously unimaginable, says Thomas Kurian, CEO, Google Cloud.

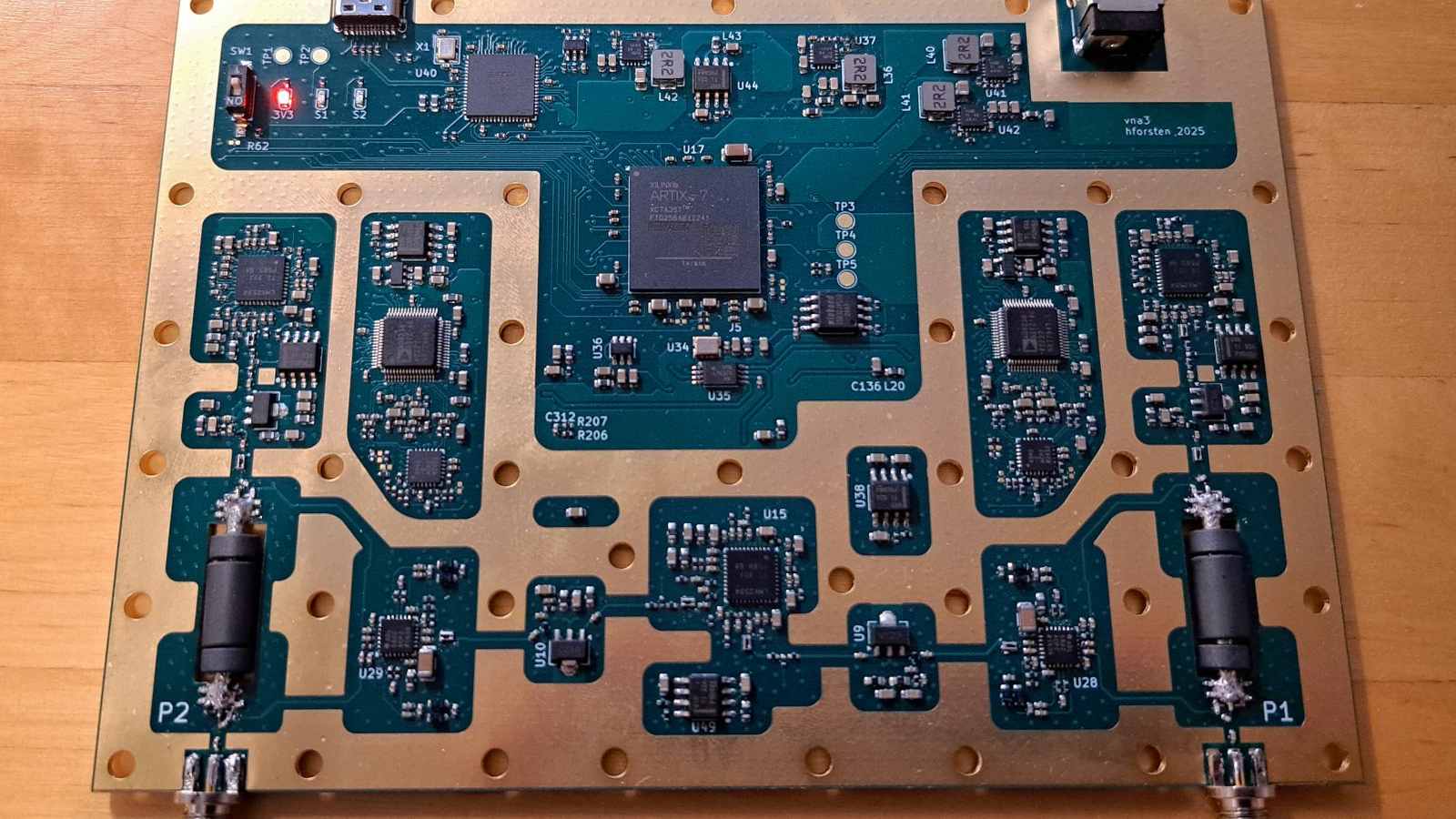

The tech giant has developed its seventh-generation TPU, Ironwood, designed to support the next phase of generative AI and its vast compute and communication demands.

The announcement came at Google Cloud Next ’25, the company’s annual flagship conference focused on cloud computing, AI, and emerging technologies.

TPUs, or tensor processing units, are application-specific integrated circuits (ASICs) developed by Google to accelerate machine learning workloads.

“Our seventh generation TPU, Ironwood, represents our largest and most powerful TPU to date, a more than 10x improvement from our most recent high-performance TPU,” said Thomas Kurian, CEO, Google Cloud, in a blog post on Google Cloud Next ’25.

He added that each Ironwood pod packs more than 9,000 chips and delivers 42.5 exaflops of compute, meeting the exponentially growing demands of thinking AI models like Gemini 2.5.

Chips per pod is the number of AI chips grouped together in a single unit, or pod. Exaflops per pod measures the pod’s total computing power; one exaflop equals a billion billion (10¹⁸) floating-point operations per second.
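The two per-pod figures also allow a rough back-of-the-envelope estimate of per-chip throughput. The sketch below uses only the numbers quoted above. Because the article says only "over 9,000" chips, dividing by 9,000 gives an upper bound on the average per-chip figure rather than an exact value, and the article does not say which numeric precision the 42.5-exaflop figure refers to.

```python
# Figures quoted in the article: "over 9,000" chips and 42.5 exaflops per pod.
EXAFLOP = 10**18    # one exaflop = 10^18 floating-point operations per second
PETAFLOP = 10**15   # one petaflop = 10^15 floating-point operations per second

pod_flops = 42.5 * EXAFLOP
min_chips = 9_000   # lower bound only; the exact chip count is not given here

# Dividing by the lower bound on chip count yields an upper bound on the
# average throughput each chip contributes.
max_per_chip = pod_flops / min_chips
print(f"at most ~{max_per_chip / PETAFLOP:.2f} petaflops per chip")
```

Running it prints `at most ~4.72 petaflops per chip`, which is simply 42.5 × 10¹⁸ divided by 9,000.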

Ironwood is the first TPU designed specifically for inference, said Amin Vahdat, VP & GM of ML, Systems, and Cloud AI, in a blog post. He highlighted that TPUs have powered Google’s most demanding AI training and serving workloads for more than a decade.

The seventh-generation TPU is purpose-built to power thinking, inferential AI models at scale, enabling Google and its customers to develop and deploy more sophisticated models.

Inference is the stage at which an already-trained model applies what it has learned to new inputs to produce predictions, decisions, or answers, without any further training.
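The difference between training and inference can be shown with a deliberately tiny model. In this minimal sketch, `WEIGHTS` and `BIAS` are hypothetical values assumed to come from an earlier training run; the `predict` function (also hypothetical) applies them to new inputs and never updates them. Production models are vastly larger, but inference likewise runs new inputs through fixed, already-learned parameters.

```python
# Hypothetical parameters assumed to come from an earlier training run.
# Inference reads them but never modifies them.
WEIGHTS = [0.8, -0.5]
BIAS = 0.1


def predict(features: list[float]) -> int:
    """Inference: a single forward pass through fixed parameters.

    There is no loss, no gradient and no weight update here; that work
    belongs to training, which has already happened.
    """
    score = sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS
    return 1 if score > 0 else 0


# New, unseen inputs go through the same frozen parameters.
print(predict([1.0, 0.5]))  # 0.8 - 0.25 + 0.1 = 0.65 -> 1
print(predict([0.0, 1.0]))  # -0.5 + 0.1 = -0.4 -> 0
```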

Gemini 2.5 Flash

Google also announced the Gemini 2.5 Flash model at Cloud Next.

Gemini 2.5 Flash is a workhorse model optimised specifically for low latency and cost efficiency. It will be available on Vertex AI, Google’s platform for building and managing AI applications and agents.

“Flash is ideal for everyday use cases like providing fast responses during high-volume customer interactions, where real-time summaries or quick access to documents are needed,” said Kurian.

He added that Gemini 2.5 Flash adjusts the depth of its reasoning to the complexity of each prompt, and that customers can tune its performance to fit their budgets.
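Google has not described here how Flash decides how much reasoning a prompt needs, so the following is only a hypothetical illustration of the idea of a capped reasoning budget. A crude complexity estimate (prompt length plus a few multi-step cue words, both assumptions) sets how many reasoning tokens to spend, and a customer-chosen ceiling caps it. The function name `reasoning_budget`, the cue words and all the numbers are invented for this example and do not reflect Gemini’s internal logic.

```python
# Hypothetical illustration only: the heuristic, cue words and token
# numbers are assumptions, not how Gemini 2.5 Flash actually works.
CUES = ("step", "why", "prove")


def reasoning_budget(prompt: str, max_budget: int) -> int:
    """Return a reasoning-token allowance for a prompt.

    Longer prompts and prompts containing multi-step cue words earn a
    larger allowance; the customer's max_budget is a hard ceiling.
    """
    text = prompt.lower()
    words = len(text.split())
    complexity = words + 50 * sum(text.count(cue) for cue in CUES)
    # Simple prompts get no extra reasoning at all.
    wanted = 0 if complexity < 20 else complexity * 10
    return min(wanted, max_budget)


print(reasoning_budget("What time is it?", 1024))
print(reasoning_budget("Explain step by step why the proof works", 1024))
```

In this sketch a short factual question gets no extra reasoning (the first call returns 0), while the multi-step request would ask for 1,580 tokens and is held at the 1,024-token ceiling. Raising the ceiling trades cost for depth, which is the kind of control Kurian describes.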

Gemini, Google’s most advanced family of AI models, recently gained Gemini 2.5, which the company calls its most intelligent AI model to date. Gemini 2.5 can reason before responding, which improves its performance.

Gemini 2.5 Pro, the first model in this family, is now available for public preview on Vertex AI. It’s designed to tackle the toughest tasks that require deep reasoning and coding expertise. It can perform deep data analysis, extract valuable insights from dense documents like legal contracts and medical records, and handle complex coding tasks by understanding entire codebases.

The model has a context window of up to one million tokens (words or word pieces), allowing it to process much longer texts or conversations at once without losing context.
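Word pieces, usually called tokens, are the units a context-window limit counts. The toy sketch below shows the idea with a greedy longest-match-first splitter over a tiny, hypothetical vocabulary. It is not Gemini’s actual tokenizer, and real vocabularies are far larger. The helper `fits_in_context` (also hypothetical) then compares the token count with a one-million-token window.

```python
CONTEXT_WINDOW = 1_000_000  # tokens, the figure quoted for Gemini 2.5 Pro

# Toy vocabulary, purely hypothetical; real tokenizers learn much larger ones.
VOCAB = {"un", "believ", "able", "token", "s", "the", "model", "read"}


def tokenize(word: str) -> list[str]:
    """Greedy longest-match-first split of one word into vocabulary pieces."""
    pieces, start = [], 0
    while start < len(word):
        end = len(word)
        # Shrink the candidate piece until it matches a vocabulary entry.
        while end > start and word[start:end] not in VOCAB:
            end -= 1
        if end == start:  # no vocabulary match: fall back to one character
            end = start + 1
        pieces.append(word[start:end])
        start = end
    return pieces


def fits_in_context(text: str) -> bool:
    """Count tokens across all words and compare with the window size."""
    n_tokens = sum(len(tokenize(w)) for w in text.lower().split())
    return n_tokens <= CONTEXT_WINDOW


print(tokenize("unbelievable"))  # ['un', 'believ', 'able']
print(tokenize("tokens"))        # ['token', 's']
```

One word can become several tokens, which is why a document’s token count usually exceeds its word count.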

AI agents

At the event, Google Cloud launched Agent2Agent, an open protocol developed with industry partners for secure communication between diverse AI agents across enterprise platforms.
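The core idea, agents built on different technology stacks exchanging requests in a shared, structured format, can be sketched in a few lines. This is a hypothetical illustration, not the Agent2Agent specification: the agent names, the `skills` field, and the `build_request` and `route` functions are invented for the example, and borrowing the JSON-RPC 2.0 envelope is an assumption made for familiarity. Because the request is plain JSON, the receiving agent need not share the sender’s language or framework.

```python
import json

# Hypothetical capability descriptions; field names are illustrative only
# and are not taken from the Agent2Agent schema.
AGENTS = {
    "invoice-agent": {"skills": ["extract_invoice_total"]},
    "calendar-agent": {"skills": ["schedule_meeting"]},
}


def build_request(task_id: str, skill: str, payload: dict) -> str:
    """Serialise a task request as JSON so any agent can parse it."""
    return json.dumps(
        {"jsonrpc": "2.0", "id": task_id, "method": skill, "params": payload}
    )


def route(request_json: str) -> str:
    """Return the name of an agent that advertises the requested skill."""
    request = json.loads(request_json)
    for name, card in AGENTS.items():
        if request["method"] in card["skills"]:
            return name
    raise LookupError(f"no agent offers {request['method']!r}")


msg = build_request("task-1", "schedule_meeting", {"when": "Friday 10:00"})
print(route(msg))  # calendar-agent
```

Keeping capability descriptions separate from any one agent’s implementation is what lets a coordinator discover and delegate to agents it did not build.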

AI agents are designed to break multifaceted problems into smaller, actionable steps, coordinate resources, and adapt as they go to improve outcomes over time.

“We are proud to be the first hyperscaler to create an open Agent2Agent protocol to help enterprises support multi-agent ecosystems, so agents can communicate with each other, regardless of the underlying technology,” Kurian stated.

More than 50 partners, including Accenture, Box, Deloitte, Salesforce, SAP, ServiceNow, and TCS, are actively contributing to defining this protocol, he added.

The company is also launching an AI Agent Marketplace, a dedicated section within Google Cloud Marketplace where customers can browse, purchase, and manage AI agents built by its partners.

Partners including Accenture, BigCommerce, Deloitte, Elastic, UiPath, Typeface, and VMware are offering agents through the AI Agent Marketplace. More agents are in the pipeline from partners including Cognizant, Slalom, and Wipro.

More innovations

Among other innovations, Google Distributed Cloud (GDC) is bringing Google’s models to on-premises environments.

Kurian noted that the firm has partnered with NVIDIA to bring Gemini to NVIDIA Blackwell systems, with Dell as a key partner, so the AI model can be used locally in air-gapped and connected environments.

NVIDIA Blackwell is a cutting-edge GPU architecture developed for AI and accelerated computing.

Air gapping means physically isolating a computer or network from other networks, including the internet, to prevent unauthorised access.

“This complements our GDC air-gapped product, which is now authorized for the US Government Secret and Top Secret levels, and on which Gemini is available, provides the highest levels of security and compliance,” said the Google Cloud chief.

The tech giant is also helping companies including Manipal Hospitals, L'Oréal Groupe, Reddit, and Samsung accelerate their technology transformations with cloud and generative AI.

“The opportunity presented by AI is unlike anything we have ever witnessed. It holds the power to improve lives, enhance productivity, and reimagine processes on a scale previously unimaginable,” Kurian remarked in the blog post.

Edited by Swetha Kannan
