QA - Best methods for getting a large amount of test data, generated from an API application, into event-consuming applications [closed]

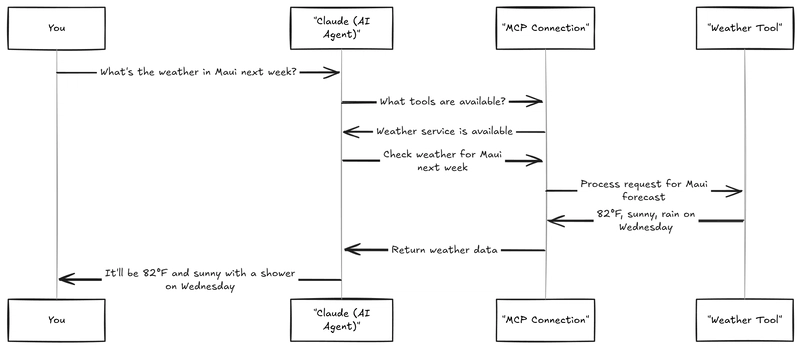

I have a Web API application with four endpoints, e.g. A, B, C and D. B, C and D each rely on a value returned in the response from A, so A has to be called first. B, C and D can then be called in any sequence. At the end of the workflow for each of B, C and D, an event is published.
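To make the ordering constraint concrete, here is a minimal sketch of one pass through the workflow. It is written in Python for brevity, and the same shape should carry over to a C# test application. Everything named here is hypothetical: the `send` callable stands in for the real HTTP client, and the `value` and `value_from_a` field names are placeholders for whatever A actually returns and B, C and D actually expect.

```python
import random
from typing import Callable, Dict, List, Optional

# Hypothetical transport: takes an endpoint name and a payload, returns the response body.
Send = Callable[[str, Dict], Dict]


def run_workflow(send: Send, payload: Dict, rng: Optional[random.Random] = None) -> List[str]:
    """Call A first, then B, C and D in a random order, passing A's value along."""
    rng = rng or random.Random()

    # A must happen first; the other endpoints depend on its response.
    response_a = send("A", payload)
    value = response_a["value"]  # assumed field name

    # B, C and D can run in any sequence, so shuffle them per workflow.
    order = ["B", "C", "D"]
    rng.shuffle(order)
    for endpoint in order:
        send(endpoint, {**payload, "value_from_a": value})

    return ["A"] + order


def make_recording_send(log: List) -> Send:
    """Stand-in for a real HTTP call: records each request and fakes A's response."""
    def send(endpoint: str, payload: Dict) -> Dict:
        log.append((endpoint, payload))
        return {"value": "abc-123"} if endpoint == "A" else {}
    return send
```

Passing a seeded `random.Random` makes a given sequence reproducible, which helps when a consuming application only misbehaves for one particular ordering.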

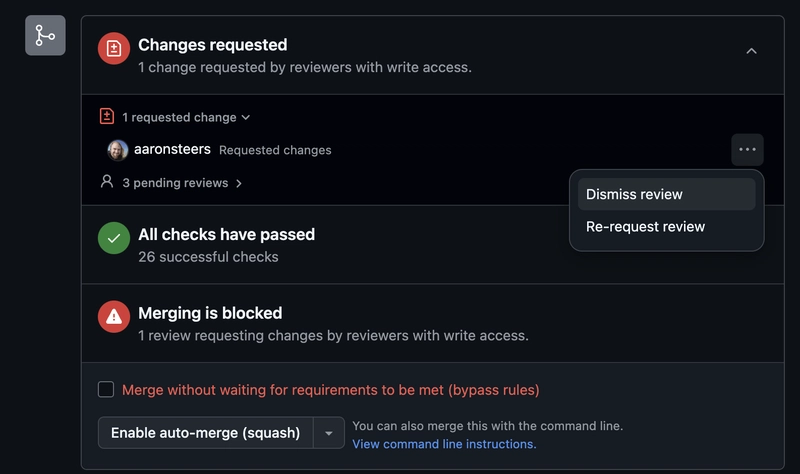

A number of applications consume these events for different purposes, each with its own application configuration. Each application is tested individually with acceptance tests (in addition to unit tests within the codebase): Postman is used for the APIs, and an in-house C# solution using NUnit is used for the event-consuming applications. There is a dedicated QA environment.

What's the best method to get a large amount of data into all these applications, starting from the API? I'm not really concerned with doing any kind of verification, as the functionality is already verified. This is more of an end-to-end question: the goal is to pump random data through the API, and logging/metrics will hopefully show if a consuming application isn't behaving as expected.

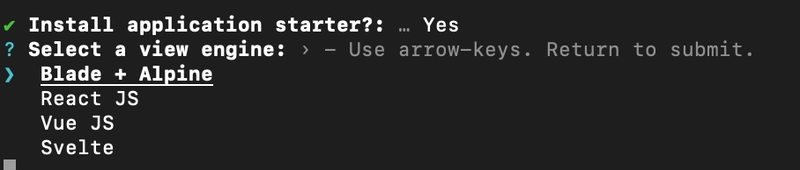

Is it easier to write an in-house C# test application that builds up each request and uses something like Faker to set random values and random sequences of requests, or to read from something like a CSV or Excel file, where there is more control over the values? Are there other tools out there that would do something similar?
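For illustration, the two options can sit behind the same interface, so the choice does not have to be made up front. The sketch below is in Python using only the standard library (a Faker-style library could replace the `random` calls), and the `customer_id` and `amount` fields are invented placeholders rather than anything from the real API.

```python
import csv
import io
import random
from typing import Dict, Iterator, TextIO


def random_payloads(count: int, seed: int) -> Iterator[Dict[str, str]]:
    """Generated data: broad coverage, reproducible because the seed is fixed."""
    rng = random.Random(seed)
    for _ in range(count):
        yield {
            "customer_id": str(rng.randint(1, 10_000)),  # hypothetical field
            "amount": f"{rng.uniform(1, 500):.2f}",      # hypothetical field
        }


def csv_payloads(source: TextIO) -> Iterator[Dict[str, str]]:
    """Curated data: every value is chosen by whoever maintains the file."""
    for row in csv.DictReader(source):
        yield dict(row)


# Both sources yield plain dicts, so the code that sends requests
# does not need to know which one it is reading from.
curated = io.StringIO("customer_id,amount\n7,12.50\n8,99.99\n")
payloads = list(csv_payloads(curated)) + list(random_payloads(3, seed=42))
```

Logging the seed alongside each run would let a random run that exposes a problem be replayed exactly, which recovers some of the control a file gives.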