Stop OOMs with Semaphores


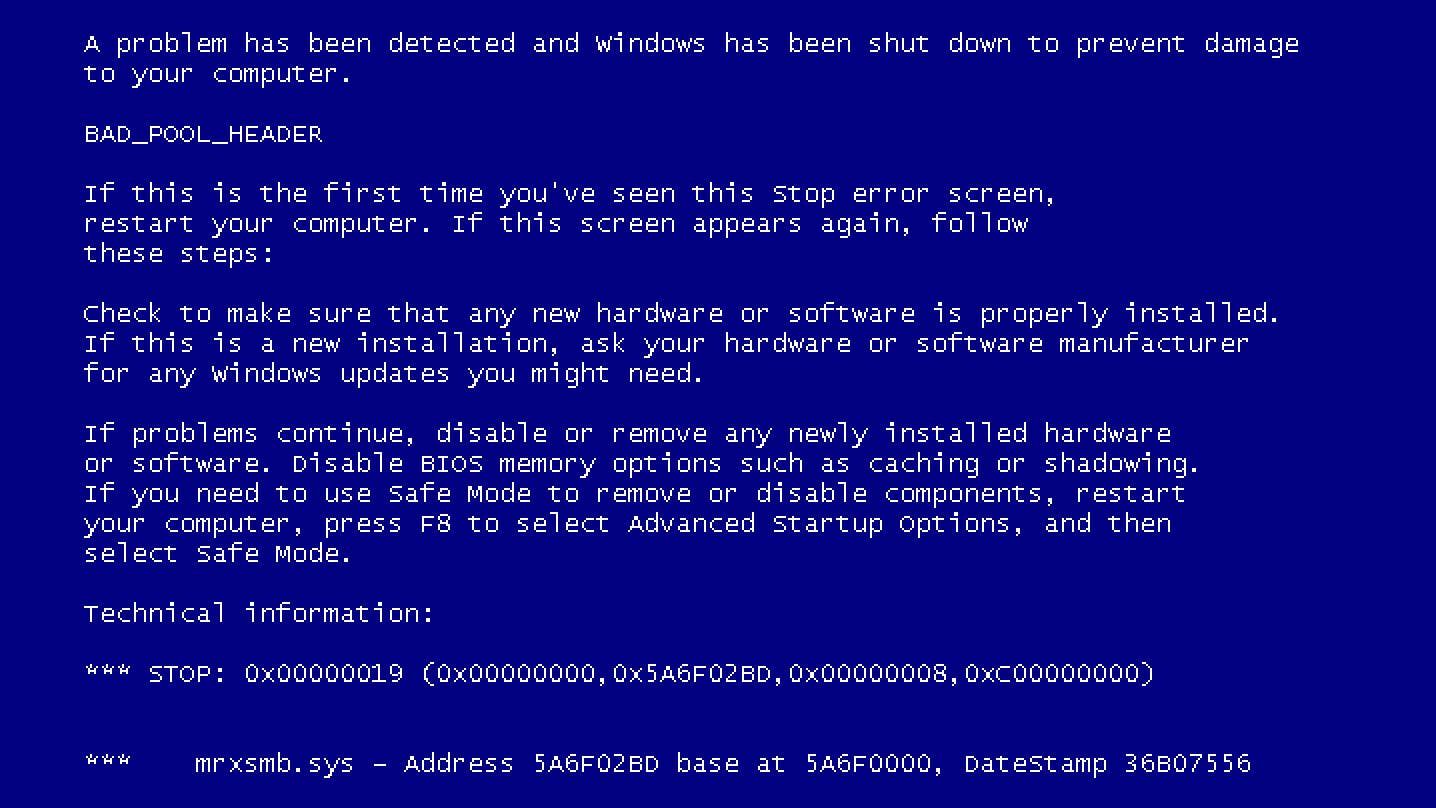

Go makes it easy to write concurrent code — just add go doSomething() and you're off. But if you're not careful, you can overwhelm your own service with too many goroutines. Here's how to avoid accidentally DDoSing yourself using a simple, effective semaphore.

The semaphore is a concurrency primitive that long predates Go, but it is exceptionally easy to implement with Go's buffered channels.

There are many use cases for semaphores in computer science, but one of the most practical in Go is limiting the number of goroutines your program spawns.
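Stripped to its core, the channel trick looks roughly like the sketch below. This is an illustration rather than the article's code: `runBounded` is a hypothetical helper. The channel's capacity is the number of slots. Sending takes a slot and blocks while every slot is taken, and receiving gives the slot back. The helper also records the highest number of goroutines it saw running at once.

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
	"time"
)

// runBounded (hypothetical) starts one goroutine per job but lets at most
// limit of them run at once, using a buffered channel as a counting
// semaphore. It returns the peak number of concurrently running goroutines.
func runBounded(jobs, limit int) int64 {
	sem := make(chan struct{}, limit) // capacity = number of slots
	var wg sync.WaitGroup
	var running, peak atomic.Int64

	for i := 0; i < jobs; i++ {
		sem <- struct{}{} // acquire: blocks while all slots are taken
		wg.Add(1)
		go func() {
			defer wg.Done()
			defer func() { <-sem }() // release the slot (runs before wg.Done)

			n := running.Add(1)
			// raise peak to n if n is larger, using compare-and-swap
			for {
				p := peak.Load()
				if n <= p || peak.CompareAndSwap(p, n) {
					break
				}
			}
			time.Sleep(time.Millisecond) // simulated work
			running.Add(-1)
		}()
	}
	wg.Wait()
	return peak.Load()
}

func main() {
	fmt.Println("peak concurrency:", runBounded(50, 3))
}
```

The goroutine decrements its counter before it releases its slot. A new goroutine can only start after some slot is released, so the reported peak can never exceed `limit`.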

Goroutines are cheap but not free. Unbounded goroutines can lead to degraded performance due to CPU contention, runaway memory usage (heap and stack), and even goroutine leaks — where goroutines silently keep running forever.
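A quick way to see that cost is to park many goroutines and look at the runtime's own counters. The sketch below uses a hypothetical helper, `spawnBlocked`, that mimics work piling up faster than it completes. Every parked goroutine still counts toward `runtime.NumGoroutine()` and still holds its own stack, which shows up in `MemStats.StackInuse`. The exact numbers depend on the Go version and platform, so none are quoted here.

```go
package main

import (
	"fmt"
	"runtime"
	"sync"
)

// spawnBlocked (hypothetical) starts n goroutines that all wait on one
// channel. It reports the live goroutine count and the stack memory in use
// while they are parked, and returns stop, which unblocks them and waits
// for them to exit.
func spawnBlocked(n int) (live int, stackBytes uint64, stop func()) {
	block := make(chan struct{})
	var started, done sync.WaitGroup
	started.Add(n)
	done.Add(n)
	for i := 0; i < n; i++ {
		go func() {
			defer done.Done()
			started.Done()
			<-block // park until stop is called
		}()
	}
	started.Wait() // every goroutine is now running or parked

	var ms runtime.MemStats
	runtime.ReadMemStats(&ms)
	stop = func() {
		close(block)
		done.Wait()
	}
	return runtime.NumGoroutine(), ms.StackInuse, stop
}

func main() {
	live, stack, stop := spawnBlocked(100_000)
	fmt.Printf("goroutines: %d, stack in use: %d KiB\n", live, stack/1024)
	stop()
}
```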

Example:

The following code snippet was adapted from go-chi: https://github.com/go-chi/chi. It creates a simple web server with one HTTP endpoint that processes large files uploaded as multipart requests. It uses a semaphore to limit global concurrency, ensuring the service never processes more than a fixed number of files at once, regardless of how many users hit the endpoint.

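```go
package main

import (
	"fmt"
	"log"
	"mime/multipart"
	"net/http"
	"os"
	"strconv"
	"sync"

	"github.com/go-chi/chi/v5"
	"github.com/go-chi/chi/v5/middleware"
)

func main() {
	maxGoroutinesEnv := os.Getenv("MAX_GOROUTINES")
	maxGoroutines, err := strconv.Atoi(maxGoroutinesEnv)
	if err != nil {
		// %w only has meaning in fmt.Errorf; use %v when logging
		log.Fatalf("failed to load MAX_GOROUTINES env var: %v", err)
	}

	// create one semaphore shared by every request
	sem := NewSemaphore(maxGoroutines)

	r := chi.NewRouter()
	r.Use(middleware.Logger)
	// file uploads arrive as POST requests
	r.Post("/", func(w http.ResponseWriter, r *http.Request) {
		processListOfLargeCustomerProvidedConfigs(w, r, sem)
	})

	log.Fatal(http.ListenAndServe(":3000", r))
}

func processListOfLargeCustomerProvidedConfigs(
	w http.ResponseWriter,
	r *http.Request,
	sem *Semaphore,
) {
	// keep up to 50 MiB of file parts in memory; the rest goes to temp files
	err := r.ParseMultipartForm(50 << 20)
	if err != nil {
		errMsg := fmt.Sprintf("could not parse multipart form: %v", err)
		http.Error(w, errMsg, http.StatusBadRequest)
		return
	}

	files := r.MultipartForm.File["configs"]
	if len(files) == 0 {
		http.Error(w, "no files in request", http.StatusBadRequest)
		return
	}

	var wg sync.WaitGroup
	for _, f := range files {
		// blocks if the semaphore is "full"
		sem.Acquire(1)
		wg.Add(1)
		go func(f *multipart.FileHeader) {
			defer wg.Done()
			defer sem.Release(1)
			// memory and cpu intensive task
			processFile(f)
		}(f)
	}

	// wait before returning: net/http removes the form's temp files
	// once the handler returns
	wg.Wait()
}
```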

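As you can see above, the loop acquires a slot before it starts each goroutine. If the semaphore is "full", the loop blocks until a running goroutine finishes and releases its slot, and then it continues automatically. Because every request shares the same semaphore, this bounds the number of files being processed across the whole service, not just within one request.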
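That blocking behavior assumes the semaphore has at least one slot. If `MAX_GOROUTINES` is `0`, the channel is unbuffered and nothing ever receives from it, so the first `Acquire` blocks forever. A negative value makes `make` panic. The sketch below validates the value before it reaches `NewSemaphore`. The name `parseMaxGoroutines` and the fallback-to-default behavior for an empty variable are assumptions, not part of the original example.

```go
package main

import (
	"fmt"
	"strconv"
)

// parseMaxGoroutines (hypothetical) turns a MAX_GOROUTINES value into a
// semaphore size. An empty value falls back to def; non-integers and values
// below 1 are rejected, because 0 slots would block Acquire forever and a
// negative capacity would make make() panic.
func parseMaxGoroutines(raw string, def int) (int, error) {
	if raw == "" {
		return def, nil
	}
	n, err := strconv.Atoi(raw)
	if err != nil {
		return 0, fmt.Errorf("MAX_GOROUTINES must be an integer: %w", err)
	}
	if n < 1 {
		return 0, fmt.Errorf("MAX_GOROUTINES must be at least 1, got %d", n)
	}
	return n, nil
}

func main() {
	for _, raw := range []string{"", "8", "0", "-2", "lots"} {
		n, err := parseMaxGoroutines(raw, 4)
		fmt.Printf("%q -> %d, %v\n", raw, n, err)
	}
}
```

In `main`, you could call it as `parseMaxGoroutines(os.Getenv("MAX_GOROUTINES"), runtime.NumCPU())`. Using the CPU count as the default is one reasonable choice for CPU-bound work, not a rule.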

A Minimal Semaphore Implementation:

Here’s the full implementation used in the example:

```go
// Semaphore limits how many holders can be active at the same time.
type Semaphore struct {
	c chan struct{}
}

func NewSemaphore(w int) *Semaphore {
	return &Semaphore{
		// create a buffered channel whose capacity is the
		// maximum number of slots the semaphore can hand out
		c: make(chan struct{}, w),
	}
}

// Acquire takes w slots, blocking until they are available.
// Ranging over an int (for range w) requires Go 1.22 or later.
func (s *Semaphore) Acquire(w int) {
	for range w {
		// Send an empty struct to the channel.
		// Blocks if the channel is full, meaning we've reached our concurrency limit.
		// struct{} is zero-sized, so the channel buffer stores no data.
		s.c <- struct{}{}
	}
}

// Release returns w slots to the semaphore.
func (s *Semaphore) Release(w int) {
	// pull the desired amount of work
	// out of the semaphore channel
	for range w {
		<-s.c
	}
}
```
