Running LLMs Locally: Using Ollama, LM Studio, and HuggingFace on a Budget

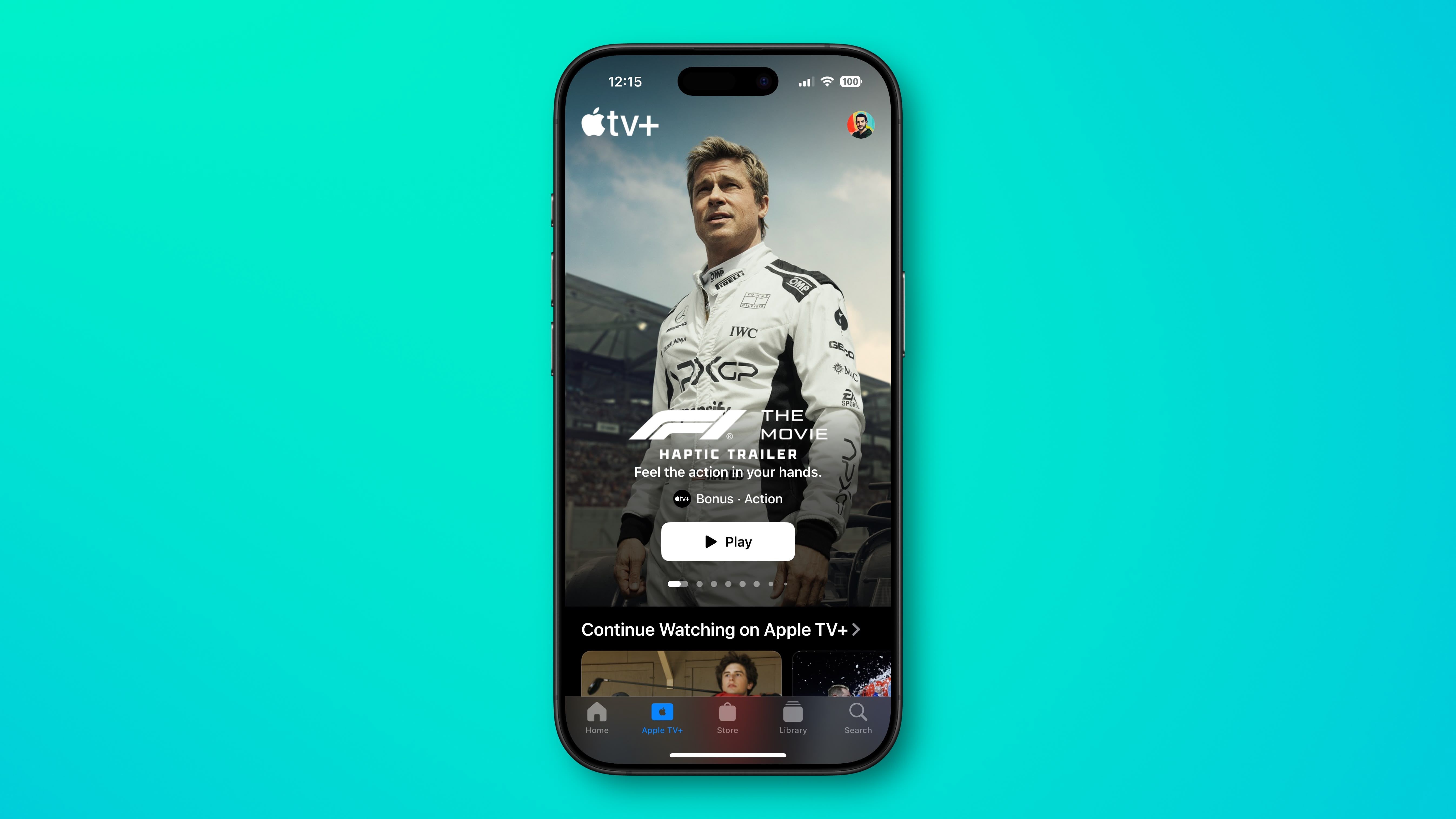

How to serve and fine-tune models like Mistral or LLaMA 3 on your own hardware.

With the rise of powerful open-weight models like Mistral, LLaMA 3, and Gemma, running large language models (LLMs) locally has become more accessible than ever, even on consumer-grade hardware. This guide covers: ✅ Best tools for local LLM inference (Ollama, LM …
