Python Fundamentals: api client


Building Robust API Clients in Python: A Production Deep Dive

Introduction

In late 2022, a cascading failure in our machine learning pipeline stemmed from a seemingly innocuous issue: a poorly handled rate limit in a third-party API client. The client, responsible for fetching feature data, wasn’t correctly parsing the Retry-After header, leading to aggressive retries that quickly exhausted our API quota. This triggered a chain reaction, halting model training and impacting downstream services. The incident highlighted a critical truth: API clients aren’t just glue code; they’re foundational components demanding rigorous engineering. This post dives into the architecture, implementation, and operational considerations for building production-grade API clients in Python.

What Is an "API Client" in Python?

An “API client” in Python is a module or set of modules designed to interact with a remote API, typically over HTTP. It encapsulates the complexities of request formatting, response parsing, authentication, error handling, and retry logic. While the standard library’s urllib.request provides basic functionality, a well-designed API client goes far beyond.

In practice, these clients often use asyncio for non-blocking I/O through libraries such as aiohttp or httpx. Python's type hints (PEP 484, PEP 526) are central to defining request and response schemas: they enable static analysis with mypy and make the code easier to read. Libraries like pydantic are frequently used to define these schemas, adding runtime validation and serialization/deserialization on top of the type hints. The core idea is abstraction: hide the underlying HTTP details and present a Pythonic interface to the API.

Real-World Use Cases

- FastAPI Request Handling: FastAPI applications often use API clients to proxy requests to upstream services. Because FastAPI serves requests on an asyncio event loop, the client should be asynchronous and have timeouts, so slow or unreliable upstream APIs neither block the loop nor tie up handlers indefinitely.

- Async Job Queues: Workers in task queues such as Celery or Dramatiq frequently call external APIs to trigger actions or retrieve data. A client with timeouts and retries (asynchronous, where the worker model supports it) keeps slow upstreams from stalling workers and dragging down queue throughput.

- Type-Safe Data Models: Using `pydantic` to define API schemas gives type-safe data models that reject unexpected data formats at the API boundary with a clear validation error, instead of letting them fail later in the pipeline. This is critical in data pipelines.

- CLI Tools: Command-line interfaces often rely on API clients to interact with remote services. A well-designed client simplifies the development of complex CLI applications.

- ML Preprocessing: Machine learning pipelines often require fetching data from external sources via APIs. A reliable client ensures data integrity and availability during model training and inference.

Integration with Python Tooling

Our standard pyproject.toml includes:

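```toml
[tool.mypy]
python_version = "3.11"
strict = true
ignore_missing_imports = true

[tool.pytest.ini_options]
addopts = "--cov=my_api_client --cov-report term-missing"
```

We use mypy in strict mode to enforce type safety. pytest reads its settings from the `[tool.pytest.ini_options]` table; the `--cov` options come from the pytest-cov plugin and list uncovered lines on every run. pydantic itself is not configured through pyproject.toml: in pydantic v2, each model's validator is by default built once, when the class is defined, and per-model options such as strictness go in `model_config`. We also run pre-commit hooks for formatting (black), linting (flake8), and type checking (mypy).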

Where we still depend on synchronous client libraries, we call them through `asyncio.to_thread`, which runs each call in a worker thread so it does not block the event loop. Logging is centralized with the structlog library, which emits structured (key-value) logs for debugging and monitoring.
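A minimal sketch of that bridge, assuming Python 3.9+ (when `asyncio.to_thread` was added). `legacy_sdk_fetch` is a hypothetical stand-in for a blocking call in a synchronous SDK, simulated here with `time.sleep`.

```python
import asyncio
import time


def legacy_sdk_fetch(item_id: int) -> dict:
    """Hypothetical blocking call from a synchronous client library."""
    time.sleep(0.2)  # simulated network latency that would otherwise block the loop
    return {"id": item_id, "status": "ok"}


async def fetch_all(item_ids: list[int]) -> list[dict]:
    # Each blocking call runs in the default thread pool executor, so the
    # event loop stays responsive and the calls overlap in time.
    return await asyncio.gather(
        *(asyncio.to_thread(legacy_sdk_fetch, i) for i in item_ids)
    )


start = time.perf_counter()
results = asyncio.run(fetch_all([1, 2, 3]))
elapsed = time.perf_counter() - start
print(results)
print(f"elapsed: {elapsed:.2f}s")
```

Because the three calls overlap in the thread pool, the elapsed time should be close to one call's latency rather than three. Threads help only with I/O-bound blocking; CPU-bound work in the SDK would still contend for the GIL.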

Code Examples & Patterns

Here's a simplified example using httpx and pydantic:

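```python
import logging

import httpx
from pydantic import BaseModel, Field

logger = logging.getLogger(__name__)


class User(BaseModel):
    id: int = Field(..., gt=0)
    name: str
    email: str


class APIClient:
    def __init__(self, base_url: str, api_key: str) -> None:
        self.base_url = base_url
        self.api_key = api_key
        # One AsyncClient per APIClient, so connections are pooled and reused
        self.client = httpx.AsyncClient(base_url=base_url)

    async def get_user(self, user_id: int) -> User:
        url = f"/users/{user_id}"
        headers = {"Authorization": f"Bearer {self.api_key}"}
        try:
            response = await self.client.get(url, headers=headers)
            # Raises httpx.HTTPStatusError for 4xx and 5xx responses
            response.raise_for_status()
            return User(**response.json())
        except httpx.HTTPStatusError as e:
            # Log the error; callers may retry depending on the status code
            logger.error("HTTP error: %s", e)
            raise
        except httpx.RequestError as e:
            # Network-level failure (DNS, refused connection, timeout, ...)
            logger.error("Request error: %s", e)
            raise

    async def aclose(self) -> None:
        # Release pooled connections when the client is no longer needed
        await self.client.aclose()

    async def __aenter__(self) -> "APIClient":
        return self

    async def __aexit__(self, *exc_info: object) -> None:
        # Allows `async with APIClient(...) as client:` to close the pool
        await self.aclose()
```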

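This demonstrates a basic client with error handling and type-safe data models. Passing `base_url` and `api_key` in through the constructor (a simple form of dependency injection) lets tests point the client at a stub server with fake credentials. The `response.raise_for_status()` call is crucial: httpx does not raise on 4xx or 5xx responses by default, so without it an error body would be handed straight to the `User` model.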

Failure Scenarios & Debugging

A common failure is incorrect error handling. We once had a client that silently ignored 429 (Too Many Requests) errors, leading to cascading failures. Debugging involved using pdb to step through the code during a simulated rate limit scenario. cProfile revealed that excessive logging was contributing to performance bottlenecks. Runtime assertions were added to validate API responses, catching unexpected data formats.

Here's an example of a traceback we encountered:

```
Traceback (most recent call last):
  File "main.py", line 25, in
    user = await client.get_user(1)
  File "api_client.py", line 20, in get_user
    return User(**response.json())
pydantic.ValidationError: 1 validation error for User
id
  value is less than 1 (type=value_error.less_than)
```

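The traceback shows that the `id` field in the API response failed the `gt=0` constraint on the `User` model. Because pydantic validates at the client boundary, the error names the offending field instead of surfacing later as a confusing failure in downstream code.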

Performance & Scalability

We benchmark API clients with `timeit` (timing `asyncio.run(...)` calls, since `timeit` itself is synchronous) and `memory_profiler`. Avoiding global state and reducing object allocations are the main optimization levers. An `asyncio.Semaphore` caps the number of concurrent requests so we don't overwhelm the API. For computationally intensive tasks such as complex data parsing, we consider C extensions via Cython. Connection pooling is essential for reducing latency; httpx provides it automatically, but only when a single `Client` or `AsyncClient` instance is reused across requests rather than created per call.

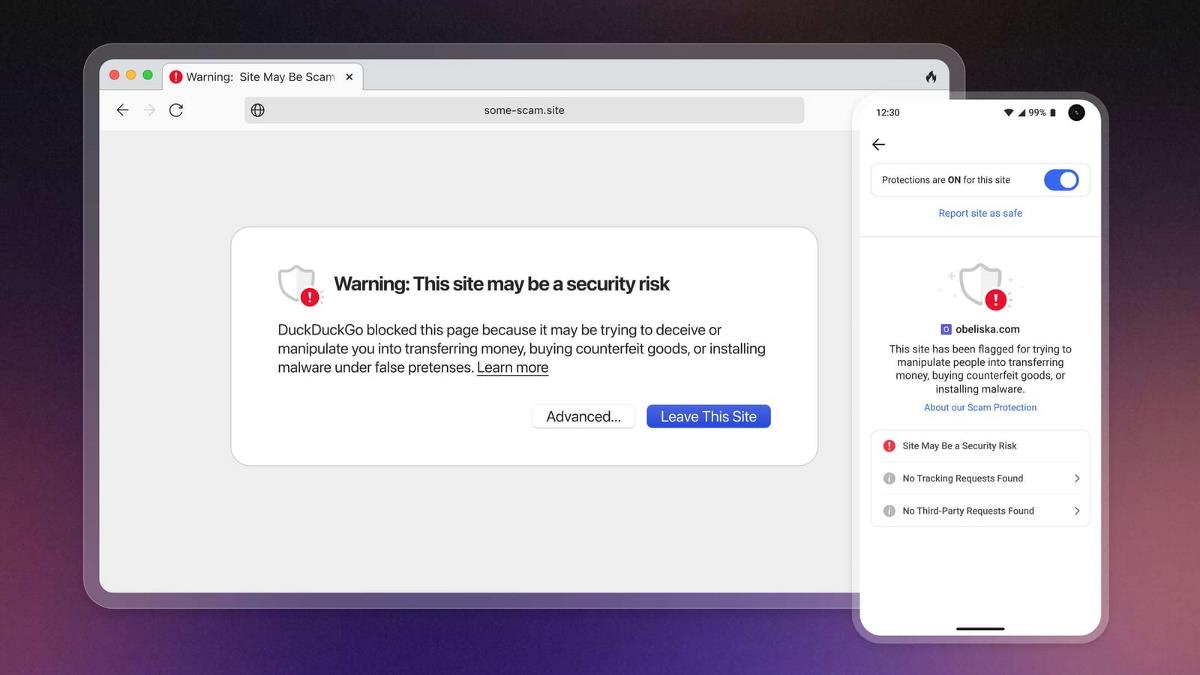
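A minimal sketch of capping in-flight requests with `asyncio.Semaphore`. `fake_request` is a hypothetical stand-in for a call such as `client.get(...)`, and the limit of 3 is an arbitrary assumption; the shared counter exists only to show that concurrency never exceeds the limit.

```python
import asyncio

MAX_IN_FLIGHT = 3  # assumed limit; tune it to the upstream API's quota


async def fake_request(i: int, state: dict) -> int:
    """Hypothetical stand-in for an HTTP call; records peak concurrency."""
    state["active"] += 1
    state["peak"] = max(state["peak"], state["active"])
    await asyncio.sleep(0.05)  # simulated network latency
    state["active"] -= 1
    return i


async def bounded(sem: asyncio.Semaphore, i: int, state: dict) -> int:
    # At most MAX_IN_FLIGHT coroutines hold the semaphore at once;
    # the rest wait here instead of firing more requests.
    async with sem:
        return await fake_request(i, state)


async def run_all(n: int) -> tuple[list[int], int]:
    sem = asyncio.Semaphore(MAX_IN_FLIGHT)
    state = {"active": 0, "peak": 0}
    results = await asyncio.gather(*(bounded(sem, i, state) for i in range(n)))
    return list(results), state["peak"]


results, peak = asyncio.run(run_all(10))
print(f"completed {len(results)} requests, peak concurrency {peak}")
# prints: completed 10 requests, peak concurrency 3
```

In a real client, the `async with sem:` block would wrap the `await client.get(...)` call. httpx can also cap pooled connections via `httpx.Limits(max_connections=...)`; the semaphore additionally bounds how much work is in flight at the application level.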

Security Considerations

Insecure deserialization is a major risk. Never trust data from external APIs. Always validate input and sanitize data before processing it. Avoid using eval() or similar functions to parse API responses. Use strong authentication mechanisms (e.g., OAuth 2.0) and protect API keys. Implement rate limiting to prevent abuse. Consider using a Web Application Firewall (WAF) to protect against common attacks.
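A standard-library sketch of two of these points: reading the API key from the environment instead of source code, and decoding responses with `json.loads` plus explicit shape checks rather than `eval()`. The environment variable name `MY_API_CLIENT_KEY` and the expected payload shape are hypothetical.

```python
import json
import os


class ConfigError(RuntimeError):
    """Raised when required configuration is missing."""


def load_api_key(env_var: str = "MY_API_CLIENT_KEY") -> str:
    # Keys live in the environment (or a secrets manager), never in the repo
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise ConfigError(f"{env_var} is not set")
    return key


def parse_user_payload(raw: bytes) -> dict[str, object]:
    """Decode untrusted JSON and check its shape before anything else uses it."""
    # json.loads only builds dicts, lists, strings, numbers, booleans and None;
    # unlike eval() it never executes code contained in the payload.
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    user_id = data.get("id")
    # bool is a subclass of int in Python, so reject it explicitly
    if not isinstance(user_id, int) or isinstance(user_id, bool) or user_id <= 0:
        raise ValueError("'id' must be a positive integer")
    name = data.get("name")
    if not isinstance(name, str) or not name:
        raise ValueError("'name' must be a non-empty string")
    # Return only the fields we checked; unknown keys are dropped
    return {"id": user_id, "name": name}
```

Callers can catch `ValueError` for both malformed JSON (`json.JSONDecodeError` is a subclass) and shape violations. In a pydantic-based client, the models play the same role as these manual checks.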

Testing, CI & Validation

We employ a layered testing strategy:

- Unit Tests: Mocking the `httpx` client to isolate the API client logic.

- Integration Tests: Testing against a staging API environment.

- Property-Based Tests (Hypothesis): Generating random API requests to uncover edge cases.

- Type Validation: `mypy` checks to ensure type safety.

Our CI pipeline uses tox to run tests against multiple Python versions. GitHub Actions automates the testing and deployment process. Pre-commit hooks enforce code style and type checking.

Common Pitfalls & Anti-Patterns

- Ignoring HTTP Status Codes: Failing to handle 4xx and 5xx errors correctly.

- Hardcoding API Keys: Storing API keys directly in the code.

- Lack of Retries: Not implementing retry logic for transient errors.

- Blocking Operations: Using synchronous API calls in an asynchronous context.

- Ignoring Rate Limits: Not respecting API rate limits.

- Insufficient Logging: Not logging enough information for debugging.
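For the "Lack of Retries" pitfall, a minimal sketch of retrying transient failures with capped exponential backoff and full jitter. `TransientError` and `flaky_call` are hypothetical; in an httpx-based client the transient set would typically be `httpx.TransportError` plus 429, 502, 503 and 504 responses, and a server-supplied `Retry-After` value, when present, should take precedence over the computed delay.

```python
import asyncio
import random
from collections.abc import Awaitable, Callable
from typing import TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for failures worth retrying (timeouts, 503s, ...)."""


async def retry_async(
    func: Callable[[], Awaitable[T]],
    attempts: int = 5,
    base_delay: float = 0.1,
    max_delay: float = 5.0,
) -> T:
    """Await func(), retrying TransientError with capped exponential backoff."""
    if attempts < 1:
        raise ValueError("attempts must be >= 1")
    for attempt in range(attempts):
        try:
            return await func()
        except TransientError:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last error
            # Full jitter: sleep a random time in [0, min(cap, base * 2**attempt)]
            delay = random.uniform(0, min(max_delay, base_delay * 2**attempt))
            await asyncio.sleep(delay)
    raise AssertionError("unreachable")  # keeps type checkers satisfied


calls = 0


async def flaky_call() -> str:
    """Fails twice, then succeeds, to exercise the retry loop."""
    global calls
    calls += 1
    if calls < 3:
        raise TransientError(f"attempt {calls} failed")
    return "ok"


result = asyncio.run(retry_async(flaky_call, base_delay=0.01))
print(result, calls)  # ok 3
```

Compared with a fixed doubling delay, full jitter spreads retries from many clients over time, so they don't hit a recovering API in synchronized waves.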

Best Practices & Architecture

- Type-Safety: Use `pydantic` or similar libraries to define API schemas.

- Separation of Concerns: Separate the API client logic from the application logic.

- Defensive Coding: Validate input and handle errors gracefully.

- Modularity: Break down the API client into smaller, reusable modules.

- Config Layering: Use environment variables and configuration files to manage API settings.

- Dependency Injection: Use dependency injection to improve testability.

- Automation: Automate testing, deployment, and monitoring.

- Reproducible Builds: Use Docker or similar tools to create reproducible builds.

- Documentation: Provide clear and concise documentation.

Conclusion

Building robust API clients is a critical aspect of modern Python development. By embracing type-safety, defensive coding, and rigorous testing, we can create clients that are reliable, scalable, and maintainable. Don't treat API clients as an afterthought; invest in their design and implementation to avoid costly production incidents. Start by refactoring legacy code to incorporate these best practices, measure performance, write comprehensive tests, and enforce linting and type checking. The investment will pay dividends in the long run.
