Anthropic Deploys Multiple Claude Agents for 'Research' Tool - Says Coding is Less Parallelizable

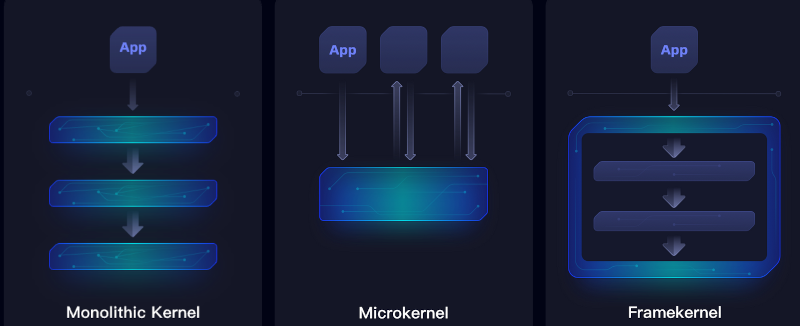

In April, Anthropic introduced a new AI trick: multiple Claude agents combine for a "Research" feature that can "search across both your internal work context and the web" (as well as Google Workspace "and any integrations..."). But a recent Anthropic blog post notes this feature "involves an agent that plans a research process based on user queries, and then uses tools to create parallel agents that search for information simultaneously," which brings challenges "in agent coordination, evaluation, and reliability.... The model must operate autonomously for many turns, making decisions about which directions to pursue based on intermediate findings."

> Multi-agent systems work mainly because they help spend enough tokens to solve the problem.... This finding validates our architecture that distributes work across agents with separate context windows to add more capacity for parallel reasoning. The latest Claude models act as large efficiency multipliers on token use, as upgrading to Claude Sonnet 4 is a larger performance gain than doubling the token budget on Claude Sonnet 3.7. Multi-agent architectures effectively scale token usage for tasks that exceed the limits of single agents.
>
> There is a downside: in practice, these architectures burn through tokens fast. In our data, agents typically use about 4× more tokens than chat interactions, and multi-agent systems use about 15× more tokens than chats. For economic viability, multi-agent systems require tasks where the value of the task is high enough to pay for the increased performance. Further, some domains that require all agents to share the same context or involve many dependencies between agents are not a good fit for multi-agent systems today. For instance, most coding tasks involve fewer truly parallelizable tasks than research, and LLM agents are not yet great at coordinating and delegating to other agents in real time.
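To make the token multipliers quoted above concrete, here is a rough back-of-the-envelope sketch in Python. Only the approximate 4x and 15x multipliers come from Anthropic's post; the baseline chat size, the per-token price, and the task value are hypothetical placeholders chosen purely for illustration.

```python
# Approximate multipliers quoted in the Anthropic post, relative to a chat.
AGENT_MULTIPLIER = 4
MULTI_AGENT_MULTIPLIER = 15


def estimated_tokens(chat_tokens: int, multiplier: int) -> int:
    """Scale a baseline chat token count by a usage multiplier."""
    return chat_tokens * multiplier


def estimated_cost(tokens: int, price_per_million: float) -> float:
    """Convert a token count to a cost, given a price per million tokens."""
    return tokens / 1_000_000 * price_per_million


def is_economically_viable(task_value: float, tokens: int,
                           price_per_million: float) -> bool:
    """A task is worth running only if its value exceeds the token cost."""
    return task_value > estimated_cost(tokens, price_per_million)


if __name__ == "__main__":
    baseline_chat_tokens = 2_000   # hypothetical size of one chat interaction
    price_per_million = 10.0       # hypothetical price, not a real rate
    task_value = 0.25              # hypothetical value of the finished task

    for label, mult in [("single agent", AGENT_MULTIPLIER),
                        ("multi-agent", MULTI_AGENT_MULTIPLIER)]:
        tokens = estimated_tokens(baseline_chat_tokens, mult)
        cost = estimated_cost(tokens, price_per_million)
        viable = is_economically_viable(task_value, tokens, price_per_million)
        print(f"{label}: {tokens} tokens, cost {cost:.2f}, viable: {viable}")
```

With these made-up numbers the single-agent run clears the bar and the multi-agent run does not, which is the trade-off the post describes: the extra tokens only pay off when the task is valuable enough.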
> We've found that multi-agent systems excel at valuable tasks that involve heavy parallelization, information that exceeds single context windows, and interfacing with numerous complex tools.

Thanks to Slashdot reader ZipNada for sharing the news.

Read more of this story at Slashdot.

