Popular Video AI Models Every Developer Should Know

Ever wondered how Netflix recommends the perfect movie trailer, how security cameras detect unusual activity, or how sports broadcasters create instant highlights? The secret lies in Video AI models. Video AI models enable automated analysis of complex visual data in real-time, enhancing efficiency, accuracy, and decision-making across sports analytics, surveillance, and content creation. With the explosion of video content (~500 hours of video are uploaded to YouTube every minute), video-centric AI applications are more critical than ever. These applications become possible only due to the underlying AI models allowing machines to analyze, interpret, and even predict events from videos. Video AI models enable advanced tasks like object detection, action recognition, and semantic segmentation, which traditional computer vision models would fall short of. In this blog, we’ll dive into some of the most popular Video AI models every developer should know, and how they’re shaping the future of video intelligence. YOLO (You Only Look Once): Real-Time Object Detection YOLO (You Only Look Once) is a real-time object detection model that processes an entire image in a single pass through its neural network. Unlike traditional methods like R-CNN or Fast R-CNN that rely on region proposals and multiple stages, YOLO treats detection as a regression problem, predicting bounding boxes and class probabilities directly. This makes YOLO exceptionally fast and ideal for real-time applications with great accuracy. Key Features and Benefits Real-time speed - Delivers predictions at high frame rates suitable for real-time object detection. Accuracy - Generates fewer false positives and effectively detects multiple objects in a single frame due to consideration of global context during training. Scalable and lightweight - Optimized versions of YOLO can be easily deployed on edge devices with limited computational power. Multi-scale prediction - Later versions of YOLO perform predictions at multiple scales, improving accuracy for small objects. YOLO v8: Latest Advances YOLO v8 offers an improved architecture as compared to previous models in terms of speed, and accuracy, and can perform object detection, classification, and segmentation tasks. Some key modifications are as follows. Basic architecture of YOLO v8 (Source) Anchor-free detection - The model directly predicts the center of an object instead of offsets, which helps improve the learning speed for custom datasets. Mosaic data augmentation - For better generalization, four images are mixed during training to provide variable locations, occlusions, etc. C2f module - The model’s backbone consists of a C2f module instead of C3, which helps speed up the training process with improved gradient flow. Decoupled head - The classification and regression tasks are no longer performed together to increase the model performance. Common Use Cases Surveillance: YOLO powers real-time crowd detection, intruder alerts, and suspicious activity monitoring in security cameras, enhancing public safety. Retail: Tasks like object counting, customer behavior analysis, and automated inventory tracking help retailers optimize stock management and improve the shopping experience. Sports: YOLO enables real-time tracking of players, balls, and equipment, providing performance analytics and enhancing live broadcasts. MoViNet: Efficient Action Recognition for Embedding Extraction MoViNet (Mobile Video Networks) is an advanced video recognition model for real-time action recognition tasks. MoViNet can stream videos for online inference while being computationally efficient and requiring minimal memory, as opposed to previous 2D CNN-based architectures, which were resource-intensive. MoViNet architecture for streaming eval (Source) MoViNet leverages techniques such as Neural Architecture Search (NAS) for generating 3D CNN architectures, stream buffering, and temporal ensembles of streaming MoViNets to improve accuracy and efficiency. This makes MoViNets optimized to run on edge devices such as smartphones and wearables, where low latency is critical. Key Features and Benefits Low latency - Optimized for real-time inference with low latency due to the stream buffer technique. High performance - Achieves competitive accuracy on various video recognition benchmarks. Scalability - MoViNet models are available in different variants, allowing developers to choose a model that suits their resource constraints and performance needs. MoViNet for Edge Computing MoViNet is ideally built for edge devices due to its low memory requirements, computational efficiency, and low latency. Techniques like stream buffering (processing video streams frame by frame, keeping memory footprint constant, and reducing processing time) and causal convolutions (sequentially processing video frames) allow on-the-fly de

Ever wondered how Netflix recommends the perfect movie trailer, how security cameras detect unusual activity, or how sports broadcasters create instant highlights? The secret lies in Video AI models. Video AI models enable automated analysis of complex visual data in real-time, enhancing efficiency, accuracy, and decision-making across sports analytics, surveillance, and content creation. With the explosion of video content (~500 hours of video are uploaded to YouTube every minute), video-centric AI applications are more critical than ever.

These applications become possible only due to the underlying AI models allowing machines to analyze, interpret, and even predict events from videos. Video AI models enable advanced tasks like object detection, action recognition, and semantic segmentation, which traditional computer vision models would fall short of. In this blog, we’ll dive into some of the most popular Video AI models every developer should know, and how they’re shaping the future of video intelligence.

YOLO (You Only Look Once): Real-Time Object Detection

YOLO (You Only Look Once) is a real-time object detection model that processes an entire image in a single pass through its neural network. Unlike traditional methods like R-CNN or Fast R-CNN that rely on region proposals and multiple stages, YOLO treats detection as a regression problem, predicting bounding boxes and class probabilities directly. This makes YOLO exceptionally fast and ideal for real-time applications with great accuracy.

Key Features and Benefits

Real-time speed - Delivers predictions at high frame rates suitable for real-time object detection.

Accuracy - Generates fewer false positives and effectively detects multiple objects in a single frame due to consideration of global context during training.

Scalable and lightweight - Optimized versions of YOLO can be easily deployed on edge devices with limited computational power.

Multi-scale prediction - Later versions of YOLO perform predictions at multiple scales, improving accuracy for small objects.

YOLO v8: Latest Advances

YOLO v8 offers an improved architecture as compared to previous models in terms of speed, and accuracy, and can perform object detection, classification, and segmentation tasks. Some key modifications are as follows.

Basic architecture of YOLO v8 (Source)

Anchor-free detection - The model directly predicts the center of an object instead of offsets, which helps improve the learning speed for custom datasets.

Mosaic data augmentation - For better generalization, four images are mixed during training to provide variable locations, occlusions, etc.

C2f module - The model’s backbone consists of a C2f module instead of C3, which helps speed up the training process with improved gradient flow.

Decoupled head - The classification and regression tasks are no longer performed together to increase the model performance.

Common Use Cases

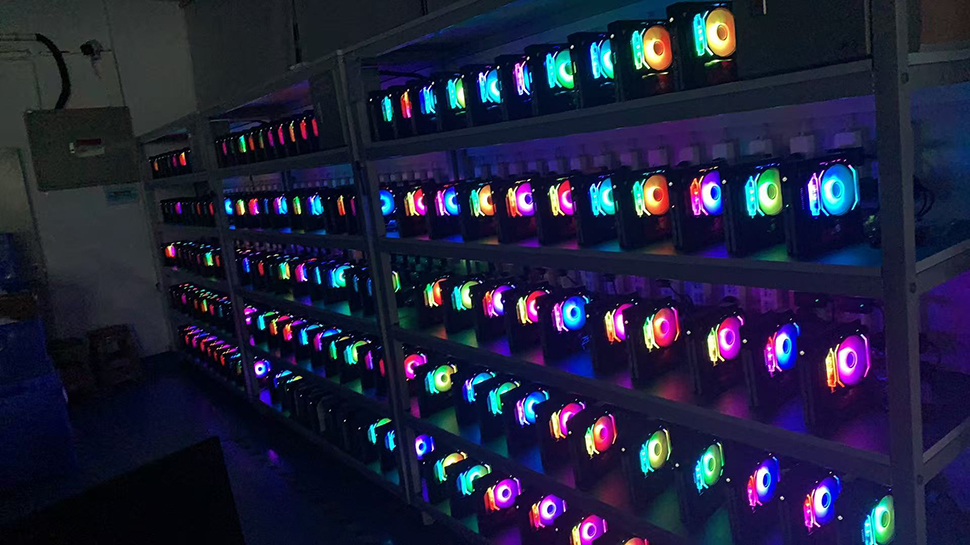

Surveillance: YOLO powers real-time crowd detection, intruder alerts, and suspicious activity monitoring in security cameras, enhancing public safety.

Retail: Tasks like object counting, customer behavior analysis, and automated inventory tracking help retailers optimize stock management and improve the shopping experience.

Sports: YOLO enables real-time tracking of players, balls, and equipment, providing performance analytics and enhancing live broadcasts.

MoViNet: Efficient Action Recognition for Embedding Extraction

MoViNet (Mobile Video Networks) is an advanced video recognition model for real-time action recognition tasks. MoViNet can stream videos for online inference while being computationally efficient and requiring minimal memory, as opposed to previous 2D CNN-based architectures, which were resource-intensive.

MoViNet architecture for streaming eval (Source)

MoViNet leverages techniques such as Neural Architecture Search (NAS) for generating 3D CNN architectures, stream buffering, and temporal ensembles of streaming MoViNets to improve accuracy and efficiency. This makes MoViNets optimized to run on edge devices such as smartphones and wearables, where low latency is critical.

Key Features and Benefits

Low latency - Optimized for real-time inference with low latency due to the stream buffer technique.

High performance - Achieves competitive accuracy on various video recognition benchmarks.

Scalability - MoViNet models are available in different variants, allowing developers to choose a model that suits their resource constraints and performance needs.

MoViNet for Edge Computing

MoViNet is ideally built for edge devices due to its low memory requirements, computational efficiency, and low latency. Techniques like stream buffering (processing video streams frame by frame, keeping memory footprint constant, and reducing processing time) and causal convolutions (sequentially processing video frames) allow on-the-fly detection of various actions which makes MoViNets suitable for uses in fitness trackers, sports analytics, and autonomous vehicles.

Common Use Cases

Sports Analytics - MoViNet can analyze player actions and movements, helping coaches and analysts track performance, strategy adherence, and injury risks in real-time.

Healthcare Monitoring - In physical therapy or elder care, MoViNet can analyze patient movements to ensure exercise compliance, track rehabilitation progress, and detect falls or unusual behavior.

Smart Homes - MoViNet enables activity recognition and gesture control, allowing users to intuitively interact with smart home devices or automate home security with anomaly recognition.

SlowFast: Temporal Modeling for Action Recognition

SlowFast is a two-stream convolutional neural network designed for action recognition. It treats spatial structures and temporal events separately, as not all spatiotemporal orientations change equally fast in a video. Hence, it processes video at two different frame rates:

Low frame rate and high frame rate in SlowFast networks (Source)

1) The Slow pathway operates at a low frame rate to capture spatial semantics (eg, objects or appearance).

2) The Fast pathway operates at a high frame rate, capturing rapid actions and fine temporal information (eg, clapping, waving, etc.).

By combining high spatial resolution from the Slow stream with fine-grained motion cues from the Fast stream, SlowFast models achieve state-of-the-art performance in complex action recognition tasks.

Key Features and Benefits

Dual-Stream Architecture - The slow and fast streams capture rich spatial information and temporal changes merged through lateral connection, making action recognition efficient.

Computational Efficiency - The reduced number of channels in the fast stream makes the model lightweight and computationally efficient.

Action Recognition Performance - The model executed state-of-the-art performance on Kinetics-400, Kinetics-600, Charades, and Ava datasets.

SlowFast’s Strengths in Complex Video Analysis

SlowFast network is particularly effective for complex action recognition tasks. The slow stream maintains a global understanding of the scenes over longer time spans while the fast stream precisely models rapid temporal changes. The fast stream has temporal convolutions in every block which helps to capture fine-grained temporal details. As a result, SlowFast can detect and recognize complex behaviors and actions.

Common Use Cases

Sports Analytics - SlowFast can be used to track player movements, capture team movement patterns, and for strategy analysis in matches.

Surveillance - SlowFast can help detect suspicious behaviors by analyzing both rapid movements and long-term patterns, making them ideal for real-time security monitoring.

Entertainment - SlowFast can classify action scenes in movies, enable automated content tagging, and offer personalized recommendations on entertainment platforms.

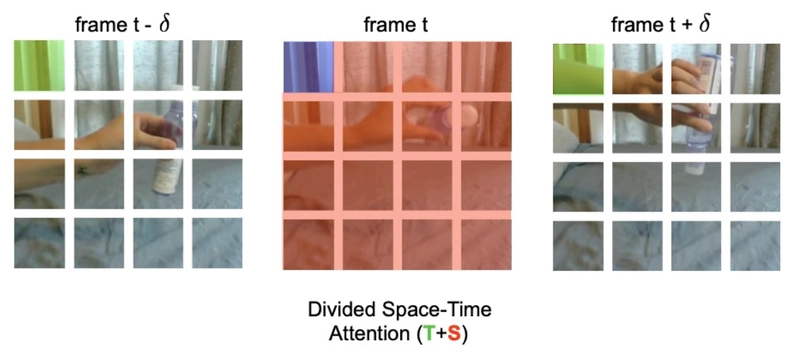

TimeSformer: Transformers for Video Understanding and Embedding

TimeSformer (Time-Space Transformer) is a video classification and action recognition model based purely on transformers, which are popularly used for Natural Language Processing tasks. The model uses self-attention mechanisms across both spatial and temporal dimensions, allowing efficient and accurate modeling of video data. Compared to modern 3D CNN models, TimeSformer is three times faster to train and requires less than one-tenth the amount of compute for inference, making it ideal for processing videos in real-time.

Divided space-time attention in TimeSformer architecture (Source)

Key Features and Benefits

Scalability - As expensive 3D convolutions are eliminated, large models can be trained on longer video clips (temporal extent of 102 seconds) enabling understanding of complex human actions.

Low Computational Cost - The model has less computational cost as the input video is processed in a small set of patches and the type of self-attention used does not do an exhaustive comparison of all patches saving time and resources.

Divided Space-Time Attention - The self-attention mechanism is split into two sub-parts - temporal attention and spatial attention - which increases the efficiency and accuracy of the model.

TimeSformer for Video Classification and Recognition

TimeSformer is a novel architecture based on transformers that helps to overcome the limitation of 3D convolutional filters for being unable to model space-time dependencies beyond their small receptive field. First, the input video is presented as a time-space sequence of image patches extracted from individual frames. These patches are processed through a self-attention mechanism (comparison of each patch to other patches) across both spatial and temporal dimensions. This makes TimeSformer perform video classification and recognition by capturing fine temporal details precisely and efficiently.

Common Use Cases

Action Recognition - TimeSformer works great to detect specific activities in videos, such as identifying different sports movements or human interactions in surveillance footage.

Video Classification - As TimeSformer can model long-range video sequences, it can categorize entire videos into genres or themes (e.g., comedy, sports, documentaries).

Content Moderation - TimeSformer can identify inappropriate or harmful content in videos for automated filtering on platforms like YouTube and TikTok.

CLIP (Contrastive Language-Image Pre-Training): Bridging Text and Video

CLIP (Contrastive Language-Image Pretraining) is a multimodal AI model developed by OpenAI that learns visual concepts through natural language prompts. Having been trained on large-scale internet data, it generalizes well to various tasks such as video classification, action recognition, and OCR. CLIP associates text and images in a shared embedding space through contrastive pretraining which is then used for zero-shot classification. CLIP can be further extended to video by processing frames as image inputs and aligning them with textual descriptions which makes it highly effective for multimodal search and retrieval.

Overview of CLIP architecture (Source)

Key Features and Benefits

Text-video alignment - CLIP can easily work with natural language to search within video content, e.g., ‘find all scenes with cats’.

Cost efficiency and performance - CLIP overcomes the need for costly datasets and aids good real-world performance with out-of-the-box predictions.

Efficiency and flexibility - CLIP learns from unfiltered, noisy, and varied data making, it highly efficient for a variety of tasks and is flexible to adapt to new tasks.

Versatility - CLIP works across various video tasks such as retrieval, captioning, and action recognition.

CLIP for Video Search and Retrieval

CLIP can be used for video search and retrieval by processing the video as individual frames and creating their embeddings along with text descriptions in a shared embedding space. With this, users can leverage a vector database like Milvus or Zilliz Cloud to simply search the video through natural language queries. For example, when searching for ‘all scenes with a beach’ in a video of Miami, all relevant clips can be retrieved that best match this description. Furthermore, CLIP can be combined with other AI models such as TimeSformer or SlowFast for enhanced analysis of motion dynamics and pave the way for advanced applications such as automated content tagging and video summarization.

Common Use Cases

Multimodal Search and Retrieval – Users can search for content within images or videos using natural language queries, making it useful for media libraries and stock footage platforms.

Automated Content Moderation – CLIP can detect inappropriate or harmful content in images or videos, helping with social media moderation and copyright enforcement.

Image and Video Captioning – CLIP can generate descriptive captions for images and videos, which is valuable for automating annotations or for enhancing content discovery.

I3D (Inflated 3D ConvNet): 3D Convolutions for Video Embeddings

I3D (Inflated 3D ConvNet) is a convolutional architecture introduced by DeepMind that extends traditional 2D Convolutional Neural Networks (CNNs) into 3D spatiotemporal models by inflating the pre-trained 2D CNN filters into 3D volumetric filters. 3D filters are created by adding an additional channel that allows treating a single image as a video during training. 3D convolutions enable the model to capture temporal dynamics for video understanding tasks better.

An example of inflated Inception-V1 architecture (left) and its inception submodule (right) (Source)

Key Features and Benefits

Inflated 3D Convolutions - Pre-trained 2D CNN weights are used for initialization to enhance training efficiency. 2D CNN filters are inflated to 3D by replicating them across time.

Large Scale Training on Kinetics Dataset - I3D has been pre-trained on the Kinetics dataset (human action recognition) making it robust for transfer learning.

Two-Stream Architecture - I3D also incorporates an optional two-stream architecture where the RGB stream processes the raw video frames for appearance features whereas the optical flow stream uses precomputed optical flow for motion cues to improve accuracy.

Common Use Cases

Human Action Recognition - I3D is great for action recognition tasks in surveillance, healthcare, or in sports analytics (eg: ‘running’, ‘playing soccer’, etc.)

Gesture Recognition - I3D can also detect hand gestures for sign language translation or AR/VR interactions.

Video Anomaly Detection - I3D can be leveraged to identify unusual events such as accidents or theft in security footage.

Conclusion

Video AI models are changing how we perceive video data by extracting as much relevant information as possible. They are transforming industries such as surveillance, sports analytics, and healthcare through various applications. In this blog, we discussed the most popular video AI models every developer should know to unlock new use cases and research. YOLO excels in object detection, MoViNet in efficient action recognition, and SlowFast in temporal modeling. TimeSformer leverages transformers for long-range video understanding, while CLIP bridges text and video for multimodal search, and I3D uses 3D convolutions for spatiotemporal modeling. Together, these cutting-edge models can empower the future of video intelligence.

![[The AI Show Episode 146]: Rise of “AI-First” Companies, AI Job Disruption, GPT-4o Update Gets Rolled Back, How Big Consulting Firms Use AI, and Meta AI App](https://www.marketingaiinstitute.com/hubfs/ep%20146%20cover.png)

![Ditching a Microsoft Job to Enter Startup Hell with Lonewolf Engineer Sam Crombie [Podcast #171]](https://cdn.hashnode.com/res/hashnode/image/upload/v1746753508177/0cd57f66-fdb0-4972-b285-1443a7db39fc.png?#)

.jpg?width=1920&height=1920&fit=bounds&quality=70&format=jpg&auto=webp#)

-Nintendo-Switch-2-Hands-On-Preview-Mario-Kart-World-Impressions-&-More!-00-10-30.png?width=1920&height=1920&fit=bounds&quality=70&format=jpg&auto=webp#)

-xl.jpg)

![New iPad 11 (A16) On Sale for Just $277.78! [Lowest Price Ever]](https://www.iclarified.com/images/news/97273/97273/97273-640.jpg)

![Apple Foldable iPhone to Feature New Display Tech, 19% Thinner Panel [Rumor]](https://www.iclarified.com/images/news/97271/97271/97271-640.jpg)