Introducing SmallRye LLM - Injecting Langchain4J AI Service for Jakarta EE and Microprofile developers.

If you're a Java developer who wants to build AI agents or interact with various Large Language Models (LLMs) in Java, you are probably already familiar with Langchain4J.

What is Langchain4J (simplified)?

LangChain4J is essentially a toolbox for Java developers that makes it easier to add advanced language AI capabilities (like chatbots or text generators) to their applications, without having to deal with a ton of complicated details.

Imagine you want to build a smart app that can answer questions, generate content, or chat like a human. Normally, to talk to an artificial intelligence model (such as ChatGPT or similar), you have to learn different interfaces and deal with lots of technical stuff. LangChain4J simplifies all of that by providing a set of ready-made tools and a standard way (a unified API) to interact with various AI providers and related services—all within Java.

Here’s a breakdown in simple terms:

Unified Interface: Instead of figuring out how each AI provider works, LangChain4J gives you one common interface to work with. This means if you want to switch from one AI service to another, you usually don’t have to rewrite your entire code.

Built-in Tools: It comes with many handy features. For example, it can manage conversation history (so your chatbot “remembers” what was said earlier), help format and manage prompts that are sent to the AI, and even assist with tasks like searching and storing text in a way that makes it easy for the AI to work with.

Easier Integration: Think of it like a connector or an adapter that plugs the powerful capabilities of modern AI models into your Java application without you needing to worry about the low-level details.

Ready-to-Use Examples: LangChain4J offers examples and patterns so you can quickly see how to use these AI features, whether you’re building a customer support chatbot, an educational tool, or a sophisticated search engine.

In short, if you’re a Java developer wanting to build an AI-powered app, LangChain4J gives you a smoother, simpler way to tap into the power of large language models without starting from scratch or getting tangled in the complex details of each individual AI service.

Langchain4J AI Services

Langchain4J introduced AI services, a high‑level API that abstracts and automates the many steps required to integrate large language models (LLMs) into a Java application. Instead of manually constructing prompts, handling context (or chat memory), formatting outputs, and managing tools or external data retrieval, you simply define a plain Java interface that represents the “service” you want to build. The framework then creates a proxy implementation of that interface, which automatically takes care of converting your method calls into the underlying low‑level operations needed to communicate with the LLM.

LangChain4J AI Services lets you sidestep all this complexity. You simply create an interface like this:

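```java
import dev.langchain4j.service.UserMessage;

interface Assistant {

    // {{it}} is replaced with the single method argument
    @UserMessage("Answer this question: {{it}}")
    String chat(String userMessage);
}
```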

Then, by calling a builder method on Langchain4J's AiServices class, you receive an object that implements this interface. When you invoke the chat method, the framework automatically:

- Converts your plain string into the proper LLM input (often wrapping it as a “user message”).

- Attaches any preset system instructions you may have provided (using annotations like @SystemMessage).

- Optionally manages and appends the conversation history if you have configured chat memory.

- Sends the composed prompt to the LLM.

- Parses and converts the output back into a Java type (in this case, a String).
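To make the proxy idea concrete, here is a minimal, dependency-free toy that mimics only the template-filling step. It is not Langchain4J's implementation: `PromptTemplate`, `ToyAssistant` and `ToyAiServices` are hypothetical names, and the "LLM" is a plain `Function<String, String>` that echoes its input. With the real library you would obtain the proxy from `AiServices` (for example `AiServices.create(Assistant.class, model)`).

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Proxy;
import java.util.function.Function;

// Toy illustration of an "AI service" proxy; all names here are hypothetical, not Langchain4J APIs.
class ToyAiServices {

    // Hypothetical stand-in for Langchain4J's @UserMessage annotation.
    @Retention(RetentionPolicy.RUNTIME)
    @Target(ElementType.METHOD)
    @interface PromptTemplate {
        String value();
    }

    // The "service" is only an interface; no implementation class is written by hand.
    interface ToyAssistant {
        @PromptTemplate("Answer this question: {{it}}")
        String chat(String userMessage);
    }

    // Returns a dynamic proxy that turns each single-argument method call into a prompt for "model".
    @SuppressWarnings("unchecked")
    static <T> T create(Class<T> serviceInterface, Function<String, String> model) {
        return (T) Proxy.newProxyInstance(
                serviceInterface.getClassLoader(),
                new Class<?>[] {serviceInterface},
                (proxy, method, args) -> {
                    if (method.getDeclaringClass() == Object.class || args == null || args.length != 1) {
                        // Object methods (toString, equals, hashCode) and multi-argument methods are out of scope.
                        throw new UnsupportedOperationException(method.getName());
                    }
                    String input = String.valueOf(args[0]);
                    PromptTemplate template = method.getAnnotation(PromptTemplate.class);
                    // Replace the {{it}} placeholder with the method argument, if a template is present.
                    String prompt = (template == null) ? input : template.value().replace("{{it}}", input);
                    // A real framework would also add system messages and chat memory, then parse the reply.
                    return model.apply(prompt);
                });
    }

    public static void main(String[] args) {
        // Fake "LLM" that simply echoes the prompt it receives.
        ToyAssistant assistant = create(ToyAssistant.class, prompt -> "ECHO: " + prompt);
        System.out.println(assistant.chat("What is CDI?"));
    }
}
```

Calling `chat("What is CDI?")` on the toy proxy sends "Answer this question: What is CDI?" to the fake model. System messages, chat memory and output parsing are deliberately left out.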

Langchain4J in enterprise Java

For enterprise Java developers, what makes building enterprise-ready applications in Java beautiful is the ability to create modular, loosely coupled applications in which component frameworks inject interfaces based on business requirements.

There are various enterprise Java frameworks that have Langchain4J integration to build AI‑powered applications (such as chatbots and content summarizers) with minimal configuration and boilerplate code.

- Langchain4J Spring: integration of the LangChain4J framework with the Spring ecosystem, typically Spring Boot.

- Quarkus Langchain4J: integration of LangChain4J with the Quarkus framework.

These frameworks leverage the capabilities of their respective platforms to abstract away the complexity of LLM interactions, so that developers can write robust, clean and modular AI Services.

But what about Jakarta EE? If you're familiar with Jakarta EE, you know it provides various frameworks in which interfaces are declared and then injected with minimal configuration, while the complexity of the underlying framework is abstracted away (Jakarta Persistence, or JPA, comes to mind).

Introducing Langchain4J Microprofile with SmallRye-LLM.

Langchain4J Microprofile (currently known as SmallRye LLM) is a lightweight Java library that brings Langchain4J AI services capabilities into the Jakarta EE, MicroProfile and Quarkus ecosystems. This means that it leverages familiar dependency injection (CDI), configuration via MicroProfile Config, and other MicroProfile standards.

The key features provided by Langchain4J Microprofile:

- Build with CDI at its core. With

@RegisterAIServiceannotation annotated on a Lanchain4J AI service interface, the AI service proxy becomes CDI discoverable bean ready for injection. There are 2 CDI service extensions provided that developers can select to make their AI service CDI discoverable: CDI portable extension or CDI Build Compatible Extension (introduced in CDI 4.0 and higher). -

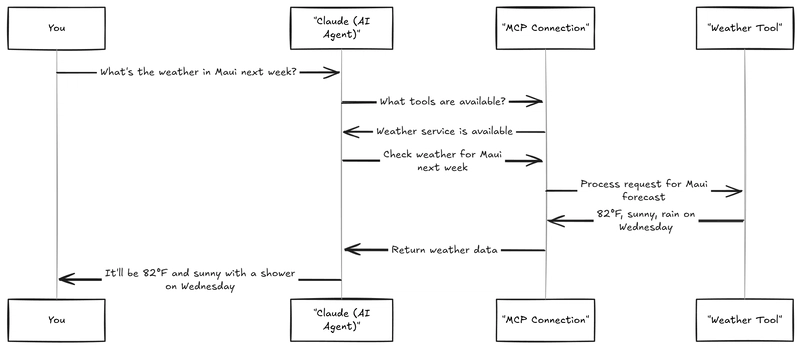

Langchain4J Microprofile config: Developers can benefit from the power of Microprofile Config to build fundamental elements of Langchain4J such as

ChatLanguageModel,ChatMessage,ChatMemory,ContentRetriever,ToolProvider(useful for Model Context Protocol, MCP), and more, without requiring developers to write builders to generate such elements. - Langchain4J Microprofile Fault Tolerance: Leveraging the power of Microprofile Fault Tolerance on your existing Lanchain4J AI services (such as

@Retry,@Timeout,@RateLimit,Fallback, etc). - Langchain4J Microprofile Telemetry: When enabled, developers can observer their LLM metrics (that follows the Semantic Conventions for GenAI Metrics), through Open Telemetry.
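In SmallRye LLM these policies are declared as annotations on the AI service and enforced by MicroProfile Fault Tolerance. Purely to show what `@Retry(maxRetries = 2)` combined with `@Fallback` means for an LLM call, here is a hand-rolled, dependency-free equivalent. It is a sketch under our own assumptions, not how MicroProfile Fault Tolerance is implemented (it has no delays, jitter or exception filtering), and `FaultToleranceSketch` and `callWithRetry` are hypothetical names.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Function;
import java.util.function.Supplier;

// Hypothetical helper: a hand-rolled analogue of @Retry(maxRetries = N) + @Fallback,
// not the MicroProfile Fault Tolerance implementation.
class FaultToleranceSketch {

    // Calls "action" once, then retries up to maxRetries more times; if every attempt fails,
    // returns the fallback result instead of propagating the last exception.
    static <T> T callWithRetry(Supplier<T> action, int maxRetries, Function<RuntimeException, T> fallback) {
        if (maxRetries < 0) {
            throw new IllegalArgumentException("maxRetries must be >= 0");
        }
        RuntimeException last = null;
        for (int attempt = 0; attempt <= maxRetries; attempt++) {
            try {
                return action.get();
            } catch (RuntimeException e) {
                last = e; // remember the failure and try again
            }
        }
        return fallback.apply(last);
    }

    public static void main(String[] args) {
        AtomicInteger calls = new AtomicInteger();
        // Simulated LLM call that fails twice (e.g. a timeout) and then succeeds.
        Supplier<String> flakyChat = () -> {
            if (calls.incrementAndGet() < 3) {
                throw new IllegalStateException("LLM timed out");
            }
            return "Here is your answer.";
        };
        System.out.println(callWithRetry(flakyChat, 2, e -> "Sorry, please try again later."));
        System.out.println("attempts: " + calls.get());
    }
}
```

With maxRetries = 2 the simulated call is attempted at most three times (the first call plus two retries), which mirrors how maxRetries counts retries after the initial attempt.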

Langchain4J Microprofile examples.

There are existing examples that showcase the Langchain4J Microprofile feature set. These examples are based on a simplified car booking application inspired by the "Java meets AI" talk by Lize Raes at Devoxx Belgium 2023, with additional work from Jean-François James. The original demo is by Dmytro Liubarskyi.

The Car Booking examples run on the following environments:

- GlassFish

- Payara

- Helidon (both with CDI Build Compatible Extension and CDI Portable Extension)

- Open Liberty

- WildFly

- Quarkus

Building your own Langchain4J AI Service.

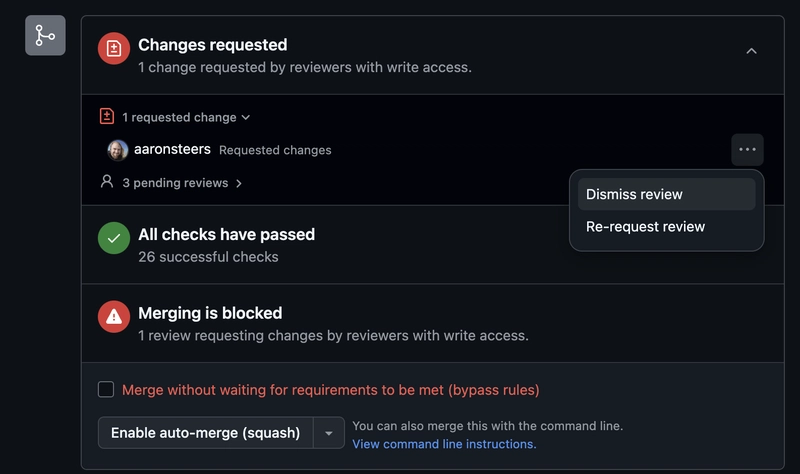

In this example, we'll build our own Assistant AI service (see the code above) using Langchain4J Microprofile. Make sure your project is a Maven project.

At the time of writing, the current release is 0.0.4, which supports Langchain4J 1.0.0-beta2 (the latest Langchain4J version at that time).

- Import the SmallRye-LLM Portable Extension into your project. This enables your AI service(s) to be registered for CDI injection.

- Import SmallRye-LLM Microprofile Config. This allows us to create Langchain4J objects using the MicroProfile Config specification.

- Annotate your Langchain4J AI service with `@RegisterAIService` and provide a name for your `ChatLanguageModel` (the CDI container uses this name to find the model; more about it later).

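```java
// The name must match the CDI name given to the model in microprofile-config.properties.
@RegisterAIService(chatLanguageModelName = "chat-model-openai")
interface Assistant {

    @UserMessage("Answer this question: {{it}}")
    String chat(String userMessage);
}
```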

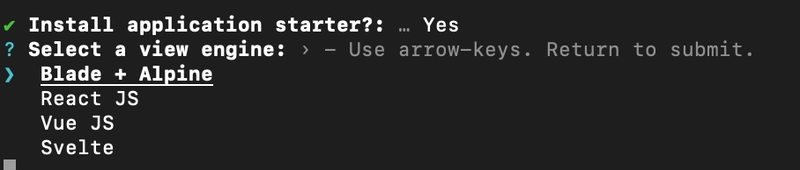

- Configure your `ChatLanguageModel` object in your `microprofile-config.properties` file (most applications keep this file in the `resources/META-INF` folder).

For this example, we'll use the AzureOpenAiChatModel. Langchain4J provides a Builder for each model, which lets you build a ChatLanguageModel instance for the LLM you select.

A typical way to create a ChatLanguageModel programmatically (using Ollama in this case):

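```java
import dev.langchain4j.model.chat.ChatLanguageModel;
import dev.langchain4j.model.ollama.OllamaChatModel;

import java.time.Duration;

// Illustrative wrapper class around the builder call.
class OllamaModelExample {

    private static final String MODEL = "mistral";
    private static final String BASE_URL = "http://localhost:11434";

    // Illustrative value: how long to wait for the model to respond.
    private static final Duration TIMEOUT = Duration.ofSeconds(60);

    static ChatLanguageModel createModel() {
        return OllamaChatModel.builder()
                .baseUrl(BASE_URL)   // local Ollama server
                .modelName(MODEL)
                .temperature(0.2)
                .timeout(TIMEOUT)
                .build();
    }
}
```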

With MicroProfile Config enabled, we can provide the same model through configuration instead.

This only works for objects for which Langchain4J provides a Builder; otherwise, building the object from configuration fails.

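Each builder property is configured with the following format, alongside a `smallrye.llm.plugin.<cdi-name>.class` entry that names the class to build:

`smallrye.llm.plugin.<cdi-name>.config.<builder-property-method-name>=<value>`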

For example, to have CDI create an AzureOpenAiChatModel internally through its Builder, we configure it as follows:

```properties
smallrye.llm.plugin.chat-model-openai.class=dev.langchain4j.model.azure.AzureOpenAiChatModel
smallrye.llm.plugin.chat-model-openai.config.api-key=${azure.openai.api.key}
smallrye.llm.plugin.chat-model-openai.config.endpoint=${azure.openai.endpoint}
smallrye.llm.plugin.chat-model-openai.config.service-version=2024-02-15-preview
smallrye.llm.plugin.chat-model-openai.config.deployment-name=${azure.openai.deployment.name}
smallrye.llm.plugin.chat-model-openai.config.temperature=0.1
smallrye.llm.plugin.chat-model-openai.config.topP=0.1
smallrye.llm.plugin.chat-model-openai.config.timeout=PT120S
smallrye.llm.plugin.chat-model-openai.config.max-retries=2
smallrye.llm.plugin.chat-model-openai.config.logRequestsAndResponses=true
```

The configuration must start with `smallrye.llm.plugin` for the CDI extension to detect the Langchain4J configuration. Next, we specify a CDI name for our `ChatLanguageModel`; in this case it's named `chat-model-openai`. This is the same name we provided on `@RegisterAIService`, which tells CDI to find the `chat-model-openai` `ChatLanguageModel` and use it when building the Assistant AI service.
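The naming convention can be illustrated with a small, dependency-free parser that groups the `smallrye.llm.plugin` entries by their CDI name and then resolves the name declared on an annotated interface. This is a sketch of the idea only, not SmallRye LLM's code: `PluginConfigSketch`, `PluginEntry` and `RegisterToyService` are hypothetical, names are assumed to contain no dots, and `${...}` placeholders would be left as-is here, whereas MicroProfile Config expands them.

```java
import java.io.IOException;
import java.io.StringReader;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.util.Map;
import java.util.Properties;
import java.util.TreeMap;

// Hypothetical sketch of name-based plugin configuration; not the SmallRye LLM implementation.
class PluginConfigSketch {

    static final String PREFIX = "smallrye.llm.plugin.";

    // One named plugin: the class to build plus its builder properties.
    static final class PluginEntry {
        String className;
        final Map<String, String> config = new TreeMap<>();
    }

    // Hypothetical stand-in for @RegisterAIService: it only carries the model name.
    @Retention(RetentionPolicy.RUNTIME)
    @interface RegisterToyService {
        String chatLanguageModelName();
    }

    @RegisterToyService(chatLanguageModelName = "chat-model-openai")
    interface Assistant {
        String chat(String userMessage);
    }

    // Groups <prefix><name>.class and <prefix><name>.config.<property> entries by <name>.
    // Assumption for this sketch: <name> itself contains no dots.
    static Map<String, PluginEntry> parse(Properties props) {
        Map<String, PluginEntry> plugins = new TreeMap<>();
        for (String key : props.stringPropertyNames()) {
            if (!key.startsWith(PREFIX)) {
                continue; // not a plugin entry, ignored
            }
            String rest = key.substring(PREFIX.length());
            int dot = rest.indexOf('.');
            if (dot < 0) {
                continue;
            }
            PluginEntry entry = plugins.computeIfAbsent(rest.substring(0, dot), n -> new PluginEntry());
            String suffix = rest.substring(dot + 1);
            if (suffix.equals("class")) {
                entry.className = props.getProperty(key);
            } else if (suffix.startsWith("config.")) {
                entry.config.put(suffix.substring("config.".length()), props.getProperty(key));
            }
        }
        return plugins;
    }

    // Looks up the plugin whose name matches the one declared on the service interface.
    static PluginEntry resolve(Class<?> service, Map<String, PluginEntry> plugins) {
        String name = service.getAnnotation(RegisterToyService.class).chatLanguageModelName();
        PluginEntry entry = plugins.get(name);
        if (entry == null) {
            throw new IllegalStateException("No plugin configured under the name " + name);
        }
        return entry;
    }

    public static void main(String[] args) throws IOException {
        Properties props = new Properties();
        props.load(new StringReader(String.join("\n",
                "smallrye.llm.plugin.chat-model-openai.class=dev.langchain4j.model.azure.AzureOpenAiChatModel",
                "smallrye.llm.plugin.chat-model-openai.config.temperature=0.1",
                "smallrye.llm.plugin.chat-model-openai.config.timeout=PT120S")));
        PluginEntry entry = resolve(Assistant.class, parse(props));
        System.out.println(entry.className + " " + entry.config);
    }
}
```

If the annotation named a model that is not configured, resolve fails fast, which is the kind of error a misspelled name between the annotation and the properties file would cause.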

The builder-property-method-name is case-sensitive and follows the method names as found on the object's Builder.
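Because each property name is used to find a method on the Builder, the mechanism can be pictured with plain reflection: look up a one-argument builder method whose name matches the key exactly, convert the string value to that method's parameter type, call it, and finally call build(). This is an assumption-laden illustration, not SmallRye LLM's implementation: `DemoChatModel`, its `Builder` and `BuilderConfigSketch` are hypothetical, only a few parameter types are converted, and the sketch does not handle kebab-case keys such as api-key from the configuration above.

```java
import java.lang.reflect.Method;
import java.time.Duration;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical sketch of configuring a builder by reflection; not the SmallRye LLM implementation.
class BuilderConfigSketch {

    // Hypothetical model class with a Langchain4J-style builder.
    static final class DemoChatModel {
        final String endpoint;
        final double temperature;
        final Duration timeout;
        final int maxRetries;

        private DemoChatModel(Builder b) {
            this.endpoint = b.endpoint;
            this.temperature = b.temperature;
            this.timeout = b.timeout;
            this.maxRetries = b.maxRetries;
        }

        static Builder builder() {
            return new Builder();
        }

        static final class Builder {
            private String endpoint;
            private double temperature;
            private Duration timeout;
            private int maxRetries;

            public Builder endpoint(String endpoint) { this.endpoint = endpoint; return this; }
            public Builder temperature(Double temperature) { this.temperature = temperature; return this; }
            public Builder timeout(Duration timeout) { this.timeout = timeout; return this; }
            public Builder maxRetries(Integer maxRetries) { this.maxRetries = maxRetries; return this; }
            public DemoChatModel build() { return new DemoChatModel(this); }
        }
    }

    // Converts a configuration string into the builder method's parameter type.
    static Object convert(String value, Class<?> type) {
        if (type == String.class) return value;
        if (type == Double.class || type == double.class) return Double.valueOf(value);
        if (type == Integer.class || type == int.class) return Integer.valueOf(value);
        if (type == Boolean.class || type == boolean.class) return Boolean.valueOf(value);
        if (type == Duration.class) return Duration.parse(value); // ISO-8601, e.g. PT120S
        throw new IllegalArgumentException("Unsupported type " + type);
    }

    // Calls builder.<key>(value) for every entry, matching method names case-sensitively, then build().
    static Object applyConfig(Object builder, Map<String, String> config) throws Exception {
        for (Map.Entry<String, String> e : config.entrySet()) {
            Method target = null;
            for (Method m : builder.getClass().getMethods()) {
                if (m.getName().equals(e.getKey()) && m.getParameterCount() == 1) {
                    target = m;
                    break;
                }
            }
            if (target == null) {
                throw new IllegalArgumentException("No builder method named " + e.getKey());
            }
            builder = target.invoke(builder, convert(e.getValue(), target.getParameterTypes()[0]));
        }
        return builder.getClass().getMethod("build").invoke(builder);
    }

    public static void main(String[] args) throws Exception {
        Map<String, String> config = new LinkedHashMap<>();
        config.put("endpoint", "https://example.invalid");
        config.put("temperature", "0.1");
        config.put("timeout", "PT120S");
        config.put("maxRetries", "2");
        DemoChatModel model = (DemoChatModel) applyConfig(DemoChatModel.builder(), config);
        System.out.println(model.temperature + " " + model.timeout + " " + model.maxRetries);
    }
}
```

A key spelled Temperature instead of temperature finds no builder method and is rejected, which is one way to picture why the property name has to match the Builder exactly.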

By default, all objects configured with MicroProfile Config are @ApplicationScoped, unless specified otherwise.

And that's it!
