"How we automated ServiceNow change approvals using GPT-4 and AWS APIs."

Introduction

Change management in IT operations is a critical yet time-consuming process. Traditionally, human reviewers assess change requests (CRs) for risks, compliance, and operational impact before approval. But with AI-powered Large Language Models (LLMs), we can automate approvals, reduce human bottlenecks, and accelerate deployments while maintaining security and compliance.

This article explores how AI-driven change management can transform approvals for cloud (AWS/Azure), networking, and CI/CD pipelines—using ServiceNow as the orchestration platform.

Why Automate Change Management?

✅ Faster Deployments – Reduce approval delays from hours/days to minutes.

✅ Consistency & Compliance – AI checks every change against the same policies (e.g., AWS Well-Architected Framework, PCI-DSS).

✅ Risk Reduction – LLMs compare each change against historical incidents to flag likely failures.

✅ Cost Efficiency – Fewer manual reviews = lower operational overhead.

Use Cases for AI-Powered Change Management

1. Cloud Infrastructure Changes (AWS/Azure)

- Auto-approving routine updates (e.g., scaling EC2/VM instances).

- Risk-checking Terraform/CloudFormation changes before deployment.

- Compliance validation (e.g., ensuring S3 buckets aren’t public).
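To make the S3 compliance check concrete, here is a minimal sketch. It assumes the agent already holds a bucket's Public Access Block settings in the shape boto3's `get_public_access_block` returns under `PublicAccessBlockConfiguration`. The function name and the sample configuration are made up for illustration.

```python
# The four flags in an S3 PublicAccessBlockConfiguration.
REQUIRED_FLAGS = (
    "BlockPublicAcls",
    "IgnorePublicAcls",
    "BlockPublicPolicy",
    "RestrictPublicBuckets",
)


def missing_public_access_blocks(config: dict) -> list[str]:
    """Return the flags that are absent or not set to True."""
    return [flag for flag in REQUIRED_FLAGS if config.get(flag) is not True]


# Hypothetical configuration proposed in a change request.
proposed = {
    "BlockPublicAcls": True,
    "IgnorePublicAcls": True,
    "BlockPublicPolicy": False,
    "RestrictPublicBuckets": True,
}

gaps = missing_public_access_blocks(proposed)
if gaps:
    print("Escalate: public access not fully blocked:", ", ".join(gaps))
else:
    print("Public access fully blocked")
```

A deterministic check like this can run before the LLM is consulted, so an obviously non-compliant change never reaches the auto-approval path.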

2. Networking & Security Policies

- Firewall rule reviews (e.g., detecting overly permissive rules).

- VPN/peering change approvals based on least-privilege principles.
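A firewall rule review could start with something as simple as the sketch below. The rule dictionary layout, the `SENSITIVE_PORTS` set, and the function name are all assumptions for illustration; real rules would come from your firewall or security-group API.

```python
import ipaddress

# Assumed set of ports that should never be open to the whole internet.
SENSITIVE_PORTS = {22, 3389, 3306, 5432}


def is_overly_permissive(rule: dict) -> bool:
    """Flag allow rules that expose a sensitive port to any address."""
    if rule["action"] != "allow":
        return False
    network = ipaddress.ip_network(rule["source_cidr"])
    open_to_world = network.prefixlen == 0  # matches 0.0.0.0/0 and ::/0
    ports = range(rule["from_port"], rule["to_port"] + 1)
    return open_to_world and any(p in SENSITIVE_PORTS for p in ports)


# Hypothetical rules from a change request.
rules = [
    {"id": "web-https", "action": "allow", "source_cidr": "0.0.0.0/0",
     "from_port": 443, "to_port": 443},
    {"id": "ssh-anywhere", "action": "allow", "source_cidr": "0.0.0.0/0",
     "from_port": 22, "to_port": 22},
    {"id": "db-internal", "action": "allow", "source_cidr": "10.0.0.0/16",
     "from_port": 5432, "to_port": 5432},
]

flagged = [r["id"] for r in rules if is_overly_permissive(r)]
print("Rules needing human review:", flagged)
```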

3. CI/CD Pipeline Modifications

- Validating deployment scripts (e.g., Jenkins/GitHub Actions).

- Detecting risky IaC (Infrastructure-as-Code) changes (e.g., unintended deletion of resources).
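For the IaC case, one way to catch unintended deletions is to inspect the machine-readable plan that `terraform show -json` produces, which lists each resource under `resource_changes` with its planned `actions`. The sketch below assumes that plan has already been exported. The resource addresses are invented and the function name is only illustrative.

```python
import json


def destructive_changes(plan: dict) -> list[str]:
    """Return addresses of resources the plan would delete or replace."""
    flagged = []
    for rc in plan.get("resource_changes", []):
        actions = rc.get("change", {}).get("actions", [])
        # A replacement shows up as ["delete", "create"] or ["create", "delete"].
        if "delete" in actions:
            flagged.append(rc["address"])
    return flagged


# Trimmed, hypothetical plan in the shape of `terraform show -json` output.
plan_json = """
{
  "resource_changes": [
    {"address": "aws_autoscaling_group.web", "change": {"actions": ["update"]}},
    {"address": "aws_db_instance.orders", "change": {"actions": ["delete", "create"]}},
    {"address": "aws_s3_bucket.logs", "change": {"actions": ["no-op"]}}
  ]
}
"""

flagged = destructive_changes(json.loads(plan_json))
print("Resources that would be destroyed:", flagged)
```

Any non-empty result is a reasonable trigger for human escalation, regardless of what the LLM concludes.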

4. Database & Storage Changes

- Schema change impact analysis (e.g., PostgreSQL/MySQL migrations).

- Storage class optimizations (e.g., moving S3 data to Glacier).

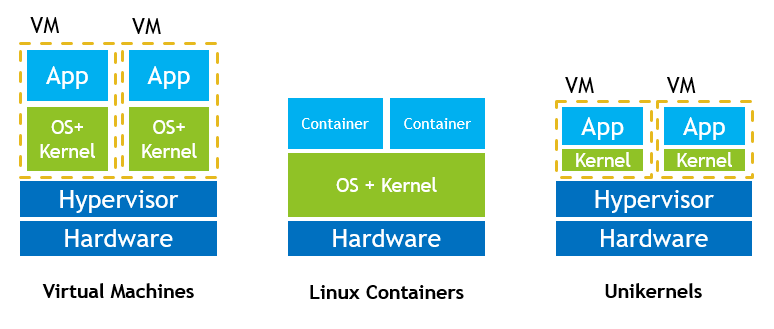

High-Level Solution Architecture

Here’s how an AI-driven change management system works in ServiceNow:

    graph TD
        A[Change Request in ServiceNow] --> B["AI Agent (LLM)"]
        B --> C{Automated Risk Assessment}
        C -->|Low Risk| D[Auto-Approved & Executed]
        C -->|Medium/High Risk| E[Human Escalation]
        B --> F["Check Cloud (AWS/Azure) APIs"]
        B --> G[Validate CI/CD Pipeline]
        B --> H[Review Network Configs]
        D --> I[Deployment via Terraform/Ansible]
        I --> J[Post-Change Validation & Logging]

Key Components:

- ServiceNow Change Module – Submits CRs to the AI agent.

- LLM (GPT-4/Claude/Llama 3) – Analyzes risk, compliance, and past incidents.

- Cloud APIs (AWS/Azure) – Fetches real-time configs for validation.

- CI/CD Integration – Checks pipeline scripts before execution.

- Approval Workflow – Auto-approves low-risk changes, escalates exceptions.
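The hand-off between the LLM and the approval workflow might look like the sketch below. It assumes the model has been prompted to reply with a JSON object containing `risk_level` and `reasoning`; that schema, the class, and the function names are assumptions, and no model is actually called here. Anything the parser cannot understand is treated as high risk, so a malformed reply never results in an auto-approval.

```python
import json
from dataclasses import dataclass

VALID_LEVELS = {"low", "medium", "high"}


@dataclass
class RiskAssessment:
    level: str
    reasoning: str


def parse_assessment(raw: str) -> RiskAssessment:
    """Parse the model's reply; treat anything malformed as high risk."""
    try:
        data = json.loads(raw)
        level = str(data["risk_level"]).lower()
        reasoning = str(data["reasoning"])
    except (json.JSONDecodeError, KeyError, TypeError):
        return RiskAssessment("high", "Unparseable model output")
    if level not in VALID_LEVELS:
        return RiskAssessment("high", f"Unknown risk level: {level}")
    return RiskAssessment(level, reasoning)


def route(assessment: RiskAssessment) -> str:
    """Only low-risk changes are auto-approved; everything else escalates."""
    return "auto_approve" if assessment.level == "low" else "escalate_to_human"


# Stand-in for a model reply (hypothetical content).
reply = '{"risk_level": "Low", "reasoning": "No recent failures for ASG scaling"}'
assessment = parse_assessment(reply)
print(route(assessment), "-", assessment.reasoning)
```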

Implementation Steps

- Ground the LLM in historical change data (approved/rejected CRs), either by fine-tuning or by retrieving similar past CRs at review time.

- Integrate with ServiceNow via APIs to fetch/submit CRs.

- Connect to Cloud APIs for real-time checks (e.g., AWS Config).

- Set up Guardrails – Ensure AI only approves within policy bounds.

- Monitor & Refine – Continuously improve AI accuracy with feedback.
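The guardrails step is the one most worth sketching, because it is what keeps the AI inside policy bounds. In the example below, a deterministic policy check sits in front of the auto-approval path and can only make the outcome stricter. The policy values, field names, and function names are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical policy bounds; real values would come from your change policy.
AUTO_APPROVABLE_TYPES = {"asg_scaling", "tag_update"}
PROTECTED_ENVIRONMENTS = {"prod-payments"}


@dataclass
class ChangeRequest:
    change_type: str
    environment: str
    in_freeze_window: bool


def guardrail_violations(cr: ChangeRequest) -> list[str]:
    """Return every policy bound the change request falls outside of."""
    violations = []
    if cr.change_type not in AUTO_APPROVABLE_TYPES:
        violations.append(f"change type '{cr.change_type}' is not auto-approvable")
    if cr.environment in PROTECTED_ENVIRONMENTS:
        violations.append(f"environment '{cr.environment}' requires human review")
    if cr.in_freeze_window:
        violations.append("change falls inside a freeze window")
    return violations


def final_decision(cr: ChangeRequest, llm_risk_level: str) -> str:
    """The LLM's 'low' rating only counts when no guardrail objects."""
    if llm_risk_level == "low" and not guardrail_violations(cr):
        return "auto_approve"
    return "escalate_to_human"


cr = ChangeRequest("asg_scaling", "prod-payments", in_freeze_window=False)
print(final_decision(cr, "low"), guardrail_violations(cr))
```

Keeping the guardrails outside the model means a prompt change or model upgrade cannot widen what gets auto-approved.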

Challenges & Mitigations

⚠ False Positives/Negatives → Start with low-risk changes, then expand.

⚠ Regulatory Compliance → Keep human oversight for critical systems.

⚠ Integration Complexity → Use pre-built ServiceNow AI connectors.

End-to-End AI-Powered Change Management Workflow

1. High-Level Architecture Diagram

    graph LR
        A["User Submits Change Request (ServiceNow)"] --> B["AI Agent (LLM) Evaluates Risk"]
        B --> C{Low Risk?}
        C -->|Yes| D[Auto-Approved & Executed]
        C -->|No| E[Human Reviewer]
        D --> F[Deploy via Terraform/Ansible]
        F --> G[Post-Execution Validation]
        G --> H[Log Results in ServiceNow]
        subgraph Hosting["AI Agent Hosting Options"]
            I["On-Prem LLM (e.g., Llama 3, Mistral)"]
            J["Cloud LLM (e.g., GPT-4, Bedrock)"]
            K["Hybrid (Private + Public AI)"]
        end
        subgraph Integration["Integration Layer"]
            L[AWS/Azure APIs]
            M["CI/CD Pipeline (Jenkins/GitHub Actions)"]
            N[Network Config DB]
        end
        B --> I
        B --> J
        B --> K
        B --> L
        B --> M
        B --> N

2. Detailed Workflow Steps

| Step | Process | AI Agent’s Role | Integration Points |
|---|---|---|---|
| 1. Change Request Submission | User submits CR in ServiceNow (e.g., "Increase AWS ASG capacity"). | – | ServiceNow API |
| 2. AI Agent Risk Assessment | LLM reviews historical incidents (similar past failures), compliance checks (e.g., AWS best practices), and impact analysis (dependencies, blast radius). | Generates risk score (Low/Medium/High) | AWS Config, Azure Policy, Jira/Splunk logs |
| 3. Approval Decision | Low risk → auto-approved; Medium/High → escalated to a human. | Provides reasoning (e.g., "No recent failures for ASG scaling") | ServiceNow Workflow |
| 4. Execution | Approved changes trigger Terraform Apply (IaC), Ansible Playbook (config mgmt), or Jenkins/GitHub Actions (CI/CD). | Monitors execution status | AWS CloudFormation, Azure DevOps |
| 5. Post-Change Validation | AI verifies that no downtime is detected (CloudWatch/New Relic) and that compliance is still met. | Logs results & suggests improvements | Prometheus, Datadog |
| 6. Feedback Loop | AI learns from false positives/negatives and human overrides. | Retrains model periodically | ServiceNow CMDB |
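The feedback loop in step 6 needs some way to count where the AI and reality disagreed. Here is a minimal sketch, assuming each closed change is logged with the AI's decision, the human decision (if any), and whether it later caused an incident. The record fields and category names are assumptions, not a ServiceNow schema.

```python
from collections import Counter


def feedback_summary(records: list[dict]) -> dict:
    """Share of changes where the AI missed a risk or escalated needlessly."""
    counts = Counter()
    for r in records:
        if r["ai_decision"] == "auto_approve" and r["caused_incident"]:
            counts["missed_risk"] += 1             # false negative
        elif r["ai_decision"] == "escalate" and r["human_decision"] == "approve":
            counts["unnecessary_escalation"] += 1  # false positive
        else:
            counts["correct"] += 1
    total = len(records)
    if total == 0:
        return {}
    names = ("missed_risk", "unnecessary_escalation", "correct")
    return {name: counts[name] / total for name in names}


# Hypothetical history of four closed change requests.
history = [
    {"ai_decision": "auto_approve", "human_decision": None, "caused_incident": False},
    {"ai_decision": "auto_approve", "human_decision": None, "caused_incident": True},
    {"ai_decision": "escalate", "human_decision": "approve", "caused_incident": False},
    {"ai_decision": "escalate", "human_decision": "reject", "caused_incident": False},
]

print(feedback_summary(history))
```

Tracking these two error rates separately matters: a missed risk is far more costly than an unnecessary escalation, so they should not be averaged into one accuracy number.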

3. Where is the AI Agent Hosted?

| Option | Pros | Cons | Best For |
|---|---|---|---|
| 1. Cloud LLM (OpenAI GPT-4, AWS Bedrock, Azure OpenAI) | Easy setup; high accuracy; scalable | Data privacy concerns; API costs | Companies using public cloud with low regulatory constraints |
| 2. On-Prem LLM (Llama 3, Mistral) | Full data control; no vendor lock-in; data stays in-house, which simplifies HIPAA/GDPR compliance | Requires GPU infra; maintenance overhead | Highly regulated industries (finance, healthcare) |
| 3. Hybrid (Private LLM + Public API Fallback) | Balances cost & compliance; uses cloud for complex queries | Integration complexity | Enterprises needing flexibility |
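For the hybrid option, the routing decision could be as simple as the sketch below: keep anything that looks sensitive on the private model, and only fall back to the public API for long, complex requests. The patterns, the length threshold, and the backend names are assumptions chosen for illustration, and a real deployment would need a much more careful sensitivity check.

```python
import re

# Assumed patterns for data that should not leave the private network.
SENSITIVE_PATTERNS = [
    re.compile(r"AKIA[0-9A-Z]{16}"),                # AWS access key ID format
    re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"),     # IPv4 addresses
    re.compile(r"password\s*[:=]", re.IGNORECASE),  # inline credentials
]


def choose_backend(cr_text: str, max_private_chars: int = 2000) -> str:
    """Pick a backend: sensitive text stays private, long text may go public."""
    if any(p.search(cr_text) for p in SENSITIVE_PATTERNS):
        return "private_llm"
    if len(cr_text) > max_private_chars:
        return "public_api"  # fallback for complex requests (length is a crude proxy)
    return "private_llm"


print(choose_backend("Increase AWS ASG capacity from 4 to 6 instances"))
print(choose_backend("Allow 10.1.2.3 through the edge firewall"))
```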

4. Key Integration Points

- ServiceNow API → CR submission/approval status.

- AWS/Azure APIs → Real-time cloud config checks.

- CI/CD Tools → Pre-deployment validation (e.g., Jenkins plugins).

- Monitoring Tools → Post-change health checks (New Relic, Splunk).
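On the ServiceNow side, the agent can record its reasoning on the CR through the REST Table API. The sketch below only builds the request and does not send it. The instance name, `sys_id`, and service account are placeholders, and writing the note to the `work_notes` field is an assumption about how you would want to log the assessment.

```python
import base64
import json
import urllib.request


def build_work_note_request(instance: str, sys_id: str, note: str,
                            user: str, password: str) -> urllib.request.Request:
    """Build (but do not send) a Table API PATCH adding a work note to a CR."""
    url = (f"https://{instance}.service-now.com"
           f"/api/now/table/change_request/{sys_id}")
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        url,
        data=json.dumps({"work_notes": note}).encode(),
        method="PATCH",
        headers={
            "Authorization": f"Basic {token}",
            "Content-Type": "application/json",
            "Accept": "application/json",
        },
    )


req = build_work_note_request(
    instance="example",                         # hypothetical instance name
    sys_id="0123456789abcdef0123456789abcdef",  # placeholder sys_id
    note="AI assessment: low risk. No recent failures for ASG scaling.",
    user="svc_ai_agent",                        # hypothetical service account
    password="change-me",
)
print(req.get_method(), req.full_url)
# Sending is left out on purpose; urllib.request.urlopen(req) would perform the call.
```

In practice you would likely swap basic auth for OAuth and pull credentials from a secrets manager rather than passing them as arguments.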

Final Thoughts

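This workflow is designed to take most routine reviews off human reviewers (roughly 70-80%, a figure worth verifying in your own pilot) while keeping critical changes human-audited. The AI agent can be hosted in the cloud, on-prem, or in a hybrid setup, depending on security needs.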

Conclusion

AI-powered change management reduces manual work, speeds up deployments, and minimizes risks. By integrating LLMs with ServiceNow, enterprises can automate approvals for cloud, DevOps, and networking—while keeping humans in the loop for critical decisions.

Next Steps?

- Pilot AI approvals for non-critical changes.

- Measure time/cost savings vs. manual reviews.

- Scale to more complex workflows.

What’s your take? Have you tried AI for IT change management? Let’s discuss in the comments!
