Enigmata’s Multi-Stage and Mix-Training Reinforcement Learning Recipe Drives Breakthrough Performance in LLM Puzzle Reasoning

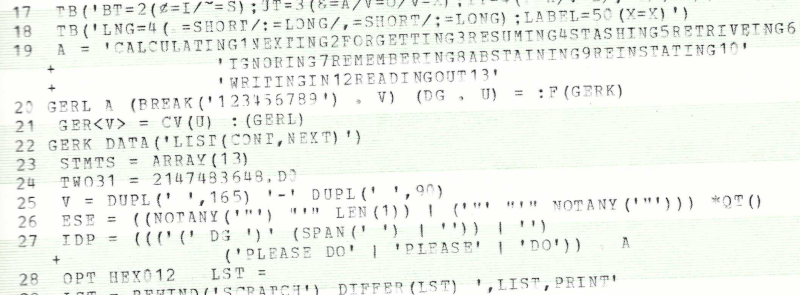

Large Reasoning Models (LRMs), trained from LLMs using reinforcement learning (RL), have demonstrated strong performance on complex reasoning tasks, including mathematics, STEM, and coding. However, existing LRMs still struggle with many puzzle tasks that require purely logical reasoning, even though humans find such puzzles easy and obvious. Current work on puzzles focuses only on designing evaluation benchmarks and lacks the training methods and resources that modern LLMs need to tackle this challenge. Existing puzzle datasets lack diversity and scalability, covering limited puzzle types with little control over generation or difficulty. Moreover, given the success of the “LLM+RLVR” paradigm, it has become crucial to obtain large, diverse, and challenging sets of verifiable puzzle prompts for training agents.

Reinforcement Learning with Verifiable Rewards (RLVR) has emerged as a key method for improving models’ reasoning capabilities. It removes the need for reward models by assigning rewards directly from objectively verifiable answers. Puzzles are particularly well suited to RLVR, yet most prior RLVR research has overlooked their potential for delivering effective reward signals. Existing LLM puzzle benchmarks evaluate different types of reasoning, including abstract, deductive, and compositional reasoning. A few benchmarks support scalable generation and difficulty control but lack puzzle diversity. Efforts to improve LLMs’ puzzle-solving abilities mainly fall into two categories: tool integration and RLVR.
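To make the idea of a verifiable reward concrete, here is a minimal sketch in Python. It is not taken from the Enigmata codebase: the function names and the toy puzzle (find two positive integers whose product is 21 and whose sum is 10) are hypothetical, chosen only to show how a rule-based check can stand in for a learned reward model.

```python
from typing import Callable


def verifiable_reward(response: str, verify: Callable[[str], bool]) -> float:
    """Binary reward: 1.0 if the rule-based check accepts the response, else 0.0."""
    return 1.0 if verify(response) else 0.0


def check_factor_pair(response: str) -> bool:
    """Hypothetical toy puzzle: two positive integers with product 21 and sum 10.

    The check only inspects the final answer, so any correct pair is accepted
    regardless of how the model reasoned its way there.
    """
    try:
        a, b = (int(token) for token in response.split())
    except ValueError:
        # Malformed output (non-integers, wrong number of tokens) earns no reward.
        return False
    return a > 0 and b > 0 and a * b == 21 and a + b == 10


if __name__ == "__main__":
    for candidate in ["3 7", "7 3", "2 8", "no idea"]:
        print(candidate, verifiable_reward(candidate, check_factor_pair))
```

Because the check is a deterministic rule, the reward cannot be gamed by persuasive but wrong reasoning, which is the property that makes puzzles attractive for RLVR.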

Researchers from ByteDance Seed, Fudan University, Tsinghua University, Nanjing University, and Shanghai Jiao Tong University have proposed Enigmata, the first comprehensive toolkit designed to improve the puzzle reasoning skills of LLMs. It contains 36 tasks across seven categories, each featuring a generator that produces unlimited examples with controllable difficulty and a rule-based verifier for automatic evaluation. The researchers further developed Enigmata-Eval as a rigorous benchmark and created optimized multi-task RLVR strategies. When used to train larger models such as Seed1.5-Thinking, puzzle data from Enigmata further improves state-of-the-art performance on advanced math and STEM reasoning benchmarks such as AIME, BeyondAIME, and GPQA, which shows the generalization benefits of Enigmata.
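The generator-plus-verifier pairing can be illustrated with a small Python sketch. Everything here is an assumption made for illustration: the `PuzzleInstance` class, the Caesar-cipher task, and the rule that difficulty scales message length are hypothetical and are not Enigmata's actual tasks or API.

```python
import random
import string
from dataclasses import dataclass


@dataclass
class PuzzleInstance:
    prompt: str
    answer: str


def generate_caesar(difficulty: int, seed: int) -> PuzzleInstance:
    """Hypothetical generator: a higher difficulty yields a longer message."""
    rng = random.Random(seed)          # seeded, so instances are reproducible
    length = 4 * difficulty            # assumed difficulty knob
    plaintext = "".join(rng.choice(string.ascii_lowercase) for _ in range(length))
    shift = rng.randint(1, 25)
    ciphertext = "".join(
        chr((ord(ch) - ord("a") + shift) % 26 + ord("a")) for ch in plaintext
    )
    prompt = f"Decrypt the Caesar cipher '{ciphertext}' (shift {shift})."
    return PuzzleInstance(prompt=prompt, answer=plaintext)


def verify(instance: PuzzleInstance, response: str) -> bool:
    """Rule-based verifier: exact match after trimming whitespace and case."""
    return response.strip().lower() == instance.answer


if __name__ == "__main__":
    puzzle = generate_caesar(difficulty=2, seed=0)
    print(puzzle.prompt)
    print(verify(puzzle, puzzle.answer))
```

Since the generator is driven by a seed and a difficulty parameter, new instances can be produced on demand, and the verifier can score any model output without human labeling.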

Enigmata-Data comprises 36 puzzle tasks organized into seven primary categories: Crypto, Arithmetic, Logic, Grid, Graph, Search, and Sequential Puzzle. This makes it the only dataset that combines multiple task categories with scalability, automatic verification, and public availability. The data construction follows a three-phase pipeline: Tasks Collection and Design, Auto-Generator and Verifier Development, and Sliding Difficulty Control. Enigmata-Eval is then built by systematically sampling from the broader dataset, aiming for 50 instances per difficulty level for each task. The final evaluation set contains 4,758 puzzle instances rather than the theoretical maximum of 5,400, because some tasks inherently generate fewer than 50 instances at a given difficulty level.
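The evaluation-set figures can be sanity-checked with a little arithmetic. The article does not state the number of difficulty levels, but a maximum of 5,400 with 36 tasks and 50 instances per level is consistent with three levels per task. The sketch below works that out and shows one plausible way a capped sampler could fall short of the maximum; the `sample_eval` function and the pool layout are hypothetical, not the authors' procedure.

```python
import random

NUM_TASKS = 36
PER_LEVEL = 50
THEORETICAL_MAX = 5_400
ACTUAL_SIZE = 4_758

# Inferred, not stated in the article: 5,400 / (36 * 50) = 3 difficulty levels.
levels_per_task = THEORETICAL_MAX // (NUM_TASKS * PER_LEVEL)
shortfall = THEORETICAL_MAX - ACTUAL_SIZE


def sample_eval(pool: dict, per_level: int = PER_LEVEL, seed: int = 0) -> dict:
    """Sample up to `per_level` instances for each (task, level) key.

    Keys whose pool holds fewer than `per_level` instances contribute
    everything they have, which is how the total can end up below the maximum.
    """
    rng = random.Random(seed)
    return {
        key: rng.sample(instances, min(per_level, len(instances)))
        for key, instances in pool.items()
    }


if __name__ == "__main__":
    print(levels_per_task, shortfall)
    toy_pool = {("task_a", "easy"): list(range(100)), ("task_b", "hard"): list(range(20))}
    print({key: len(chosen) for key, chosen in sample_eval(toy_pool).items()})
```

Under these assumptions, the gap between the maximum and the actual size is 642 instances, spread across whichever task and difficulty combinations could not supply a full 50.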

With 32B parameters, the proposed model, Qwen2.5-32B-Enigmata, outperforms most public models on Enigmata-Eval, showing the effectiveness of the dataset and training recipe. The model also stands out on the challenging ARC-AGI benchmark, surpassing strong reasoning models such as Gemini 2.5 Pro, o3-mini, and o1. It performs especially well in structured reasoning categories, with particularly strong results on Crypto, Arithmetic, and Logic tasks, which suggests it has developed effective rule-based reasoning capabilities. It is competitive on search tasks that require strategic exploration and planning. Across categories, Crypto and Arithmetic tasks tend to yield the highest accuracy, while spatial and sequential tasks remain more difficult.

In conclusion, the researchers introduce Enigmata, a comprehensive suite for equipping LLMs with advanced puzzle reasoning that integrates seamlessly with RL using verifiable rule-based rewards. The trained Enigmata-Model shows superior performance and robust generalization through RLVR training. Experiments reveal that when applied to larger models such as Seed1.5-Thinking (20B activated and 200B total parameters), synthetic puzzle data brings additional gains in other domains, including mathematics and STEM reasoning, on top of already state-of-the-art models. Enigmata provides a solid foundation for the research community to advance reasoning model development, offering a unified framework that bridges logical puzzle-solving with broader reasoning capabilities in LLMs.

Check out the Paper, GitHub Page and Project Page. All credit for this research goes to the researchers of this project.

The post Enigmata’s Multi-Stage and Mix-Training Reinforcement Learning Recipe Drives Breakthrough Performance in LLM Puzzle Reasoning appeared first on MarkTechPost.
