Built for Brains: Why AI Loves Linux

Ever wonder why nearly every major AI project runs on Linux? It's not coincidence or just developer preference. There's a fundamental compatibility between Linux's architecture and the demands of modern artificial intelligence. Whether you're running massive language models or deploying computer vision at the edge, Linux is the brain making it all possible.

In this article, we look into the symbiotic relationship between Linux and AI. If you're just starting your Linux journey or are curious about how operating systems impact machine learning, this exploration might change how you view your development environment.

What You'll Learn

- Beyond Open Source: Technical Advantages Linux brings to AI

- The perfect environment for AI tools and frameworks

- Why AI performance needs Linux (GPUs and drivers)

- Scalable Operations: From Laptop to Cluster

- Performance Optimization: Linux's Resource Management Edge

- Career Implications: Skills That Transfer

- Starting Your Linux + AI Journey

- Conclusion: Not Just an Option, But a Necessity

1. Beyond Open Source: Technical Advantages Linux brings to AI

While Linux's open-source nature aligns with AI's collaborative ethos, the technical advantages run deeper. Linux gives AI developers fine-grained control over:

- Memory management: Critical when training models that push RAM limits.

- I/O optimization: For handling massive datasets efficiently.

- Scheduler customization: To maximize CPU/GPU utilization during training.
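To make the memory and scheduler points above concrete, here is a minimal sketch of how a Python script might inspect available memory and pin itself to specific CPU cores on Linux. The helper names (`parse_meminfo`, `available_gib`, `pin_to_cpus`) are illustrative and not part of any library; the sketch assumes a kernel that exposes `MemAvailable` in `/proc/meminfo`.

```python
import os


def parse_meminfo(text: str) -> dict[str, int]:
    """Parse /proc/meminfo-style text into {field: value}.

    Most values are reported in kB; a few (such as HugePages_Total)
    are plain counts with no unit.
    """
    info = {}
    for line in text.splitlines():
        key, sep, rest = line.partition(":")
        if not sep:
            continue
        parts = rest.split()
        if parts and parts[0].isdigit():
            info[key.strip()] = int(parts[0])
    return info


def available_gib(meminfo: dict[str, int]) -> float:
    """Convert the MemAvailable field (kB) to GiB."""
    return meminfo["MemAvailable"] / (1024 ** 2)


def pin_to_cpus(cpus: set[int]) -> set[int]:
    """Restrict the current process to the given CPU cores (Linux only)."""
    os.sched_setaffinity(0, cpus)
    return os.sched_getaffinity(0)


if __name__ == "__main__":
    # Only touch /proc and the affinity API where they exist.
    if os.path.exists("/proc/meminfo") and hasattr(os, "sched_getaffinity"):
        with open("/proc/meminfo") as fh:
            mem = parse_meminfo(fh.read())
        print(f"Available memory: {available_gib(mem):.1f} GiB")
        print("Allowed CPUs:", sorted(os.sched_getaffinity(0)))
```

Calling `pin_to_cpus({0, 1})` before starting a data-loading loop would keep that process on cores 0 and 1. Whether pinning actually helps depends on the workload, so treat it as something to measure rather than assume.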

AI moves fast. Linux was built for fast-moving innovation. Because Linux is open source, developers can build, tweak, and optimize their environments freely. There are no licensing bottlenecks, no waiting on a vendor to roll out support for a new Python version, and no hidden system processes draining performance.

The AI community values transparency and collaboration, two things that are baked into Linux’s DNA.

2. The perfect environment for AI tools and frameworks

Let’s talk tooling.

Major AI frameworks like TensorFlow, PyTorch, Hugging Face Transformers, and OpenCV are developed and tested primarily on Linux. If you're training or deploying models, chances are your documentation, community help, and packages are optimized for Linux first.

On top of that, Linux offers better support for package management, Python environments, and developer-first workflows. Setting up Anaconda, Jupyter, or virtual environments is smoother and more consistent.
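As a small illustration of the virtual-environment workflow mentioned above, the sketch below uses Python's standard `venv` module to create an isolated environment programmatically. The function name `create_env` and the `.venv-demo` directory in the usage note are assumptions made for this example.

```python
import sys
import venv
from pathlib import Path


def create_env(env_dir: Path, with_pip: bool = True) -> Path:
    """Create a virtual environment and return the path to its interpreter."""
    venv.create(env_dir, with_pip=with_pip)
    # The interpreter lives in bin/ on Linux and macOS, Scripts/ on Windows.
    if sys.platform == "win32":
        return env_dir / "Scripts" / "python.exe"
    return env_dir / "bin" / "python"
```

For example, `create_env(Path(".venv-demo"))` would create the environment in the current directory, and the returned interpreter path can then be passed to `subprocess.run` to install packages with `-m pip install ...`.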

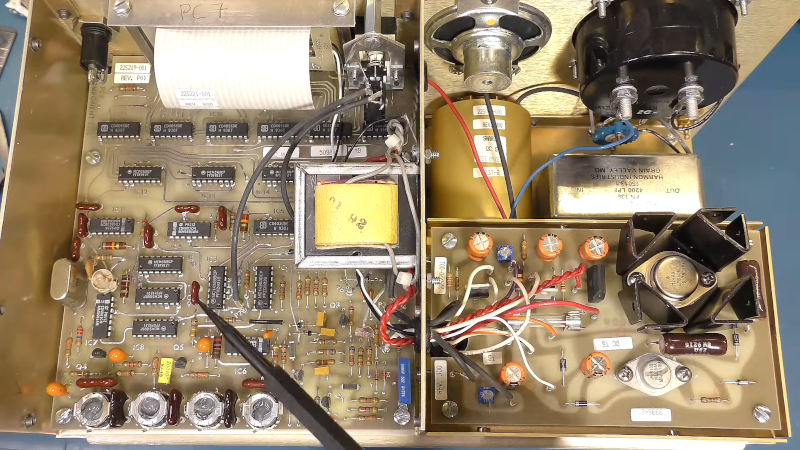

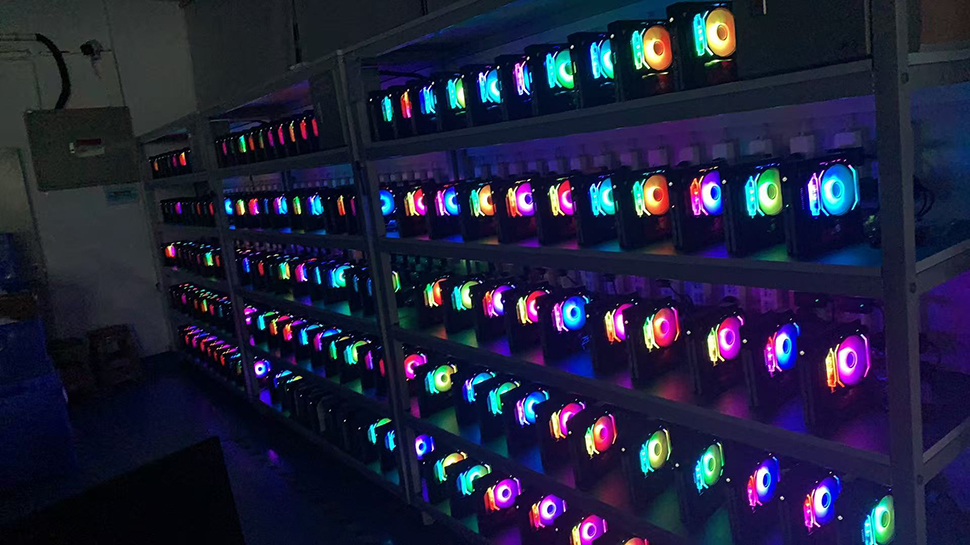

3. Why AI performance needs Linux (GPUs and drivers)

High-performance AI requires serious GPU acceleration, and Linux leads here too. CUDA (NVIDIA’s GPU computing toolkit) is a first-class citizen on Linux, which is where most large-scale training runs. When training large language models or computer vision models, using the right drivers and kernel modules can be the difference between minutes and hours.

Linux gives engineers more control over GPU allocation, driver compatibility, and low-level optimizations. That’s one reason cloud providers like AWS, GCP, and Azure all offer Linux-based AI-optimized VM instances.

Linux excels at hardware acceleration because:

- Direct kernel access: NVIDIA's CUDA drivers integrate more deeply with Linux.

- Lower overhead: Fewer system resources consumed by the OS itself.

- Fine-grained control: Ability to allocate specific GPUs to specific workloads.

This translates to real performance gains.
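One common way to allocate specific GPUs to specific workloads is the `CUDA_VISIBLE_DEVICES` environment variable, which the CUDA runtime reads to decide which devices a process can see. The sketch below shows the idea; the helpers `gpu_env` and `launch` are hypothetical names, and the demo child process only prints the variable, so it runs on machines without a GPU.

```python
import os
import subprocess
import sys


def gpu_env(gpu_ids, base_env=None):
    """Return a copy of the environment that exposes only the given GPU indices."""
    env = dict(os.environ if base_env is None else base_env)
    env["CUDA_VISIBLE_DEVICES"] = ",".join(str(i) for i in gpu_ids)
    return env


def launch(cmd, gpu_ids):
    """Start a child process that can only see the selected GPUs."""
    return subprocess.Popen(cmd, env=gpu_env(gpu_ids))


if __name__ == "__main__":
    # Stand-in for a real training command: just echo what the child sees.
    child = [sys.executable, "-c",
             "import os; print(os.environ['CUDA_VISIBLE_DEVICES'])"]
    launch(child, [0]).wait()
    launch(child, [1, 2]).wait()
```

In a real setup, the child command would be a training script, and each job would see only its assigned devices, renumbered from zero inside the process.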

4. Scalable Operations: From Laptop to Cluster

Modern AI doesn't stay on one machine; it scales across clusters. Linux dominates here through:

- Containerization: Docker and Kubernetes were built for Linux first.

- Orchestration: Tools like Kubeflow and MLflow work seamlessly on Linux.

- Resource sharing: Better multi-user environment for teams sharing compute.

If you're deploying models with TensorFlow Serving, running inference on the edge, or using ML pipelines in CI/CD workflows, Linux brings the stability and customization needed to manage AI in real-world environments.
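To give a feel for the containerization point, here is a rough sketch that assembles a `docker run` command with GPU access. The `--gpus` flag is a real Docker option (it needs the NVIDIA Container Toolkit on the host), but the image name `my-training-image:latest`, the script `train.py`, and the helper `docker_gpu_command` are placeholders invented for this example. The code only builds and prints the command; it does not run Docker.

```python
import shlex


def docker_gpu_command(image, command, gpus="all", volumes=None):
    """Build an argument list for running a containerized job with GPU access."""
    args = ["docker", "run", "--rm", "--gpus", gpus]
    # Mount host directories (for example, datasets) into the container.
    for host_path, container_path in (volumes or {}).items():
        args += ["-v", f"{host_path}:{container_path}"]
    return args + [image] + list(command)


if __name__ == "__main__":
    cmd = docker_gpu_command(
        "my-training-image:latest",      # hypothetical image name
        ["python", "train.py"],          # hypothetical entry point
        volumes={"/data": "/workspace/data"},
    )
    print(shlex.join(cmd))
```

Keeping the command as a list makes it easy to pass straight to `subprocess.run` later, and the same image can move from a laptop to a cluster node unchanged.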

5. Performance Optimization: Linux's Resource Management Edge

When squeezing every ounce of performance matters (and in AI, it always does), Linux offers:

- Transparent resource monitoring: See exactly where bottlenecks occur.

- Minimal background processes: More resources for your models.

- Dynamic configuration: Adjust system parameters without rebooting.
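The monitoring and dynamic-configuration points above rest on Linux exposing system state as plain files. As a minimal sketch, the code below reads the load average from `/proc/loadavg` and a kernel parameter from `/proc/sys`. The helper names are illustrative, and `vm.swappiness` is just one example of a runtime-tunable setting.

```python
from pathlib import Path

PROC_SYS = Path("/proc/sys")


def sysctl_path(name: str) -> Path:
    """Map a dotted sysctl name such as 'vm.swappiness' to its /proc/sys file."""
    return PROC_SYS.joinpath(*name.split("."))


def read_sysctl(name: str) -> str:
    """Read the current value of a kernel parameter."""
    return sysctl_path(name).read_text().strip()


def parse_loadavg(text: str) -> tuple[float, float, float]:
    """Extract the 1, 5, and 15 minute load averages from /proc/loadavg text."""
    one, five, fifteen = (float(x) for x in text.split()[:3])
    return one, five, fifteen


if __name__ == "__main__":
    loadavg = Path("/proc/loadavg")
    if loadavg.exists():
        print("Load averages:", parse_loadavg(loadavg.read_text()))
    if sysctl_path("vm.swappiness").exists():
        print("vm.swappiness =", read_sysctl("vm.swappiness"))
```

Changing a parameter works the same way in reverse: writing a new value to the file as root (or using `sysctl -w`) takes effect immediately, with no reboot.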

6. Career Implications: Skills That Transfer

Understanding Linux isn't just about being comfortable with a command line. It's about developing a mental model of how AI systems function at scale:

- Debugging skills: Trace issues across the entire stack, from hardware to model.

- Deployment expertise: Move from notebook to production confidently.

- Performance optimization: Make informed decisions about infrastructure.

- MLOps capabilities: Bridge the gap between research and engineering.

The Linux advantage manifests most clearly in production environments:

- Edge deployment: Linux powers everything from Raspberry Pi computer vision to Tesla's self-driving systems.

- Model serving: TensorFlow Serving, Triton Inference Server, and other tools expect Linux environments.

- Inference optimization: From kernel tweaks to compiler optimizations, Linux enables peak performance.

7. Starting Your Linux + AI Journey

If you're convinced but unsure where to begin:

- Set up a dual-boot system or dedicated Linux VM for ML work.

- Learn basic bash scripting for data preprocessing and automation.

- Explore containerization with Docker to make environments reproducible.

- Experiment with GPU monitoring tools like `nvidia-smi` and `nvtop`.
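Once you're comfortable with `nvidia-smi` on the command line, a natural next step is reading its output from a script. The sketch below uses the tool's CSV query mode (`--query-gpu` with `--format=csv,noheader,nounits`). The function names are made up for this example, and the code returns an empty list when `nvidia-smi` is missing or fails.

```python
import csv
import io
import shutil
import subprocess

FIELDS = ["index", "name", "utilization.gpu", "memory.used", "memory.total"]


def parse_gpu_csv(text: str) -> list[dict[str, str]]:
    """Turn nvidia-smi CSV output into one dict per GPU (values kept as strings)."""
    rows = []
    for row in csv.reader(io.StringIO(text), skipinitialspace=True):
        if len(row) != len(FIELDS):
            continue  # skip blank or malformed lines
        rows.append(dict(zip(FIELDS, (cell.strip() for cell in row))))
    return rows


def query_gpus() -> list[dict[str, str]]:
    """Query nvidia-smi if it is installed; return [] otherwise."""
    if shutil.which("nvidia-smi") is None:
        return []
    try:
        result = subprocess.run(
            ["nvidia-smi",
             f"--query-gpu={','.join(FIELDS)}",
             "--format=csv,noheader,nounits"],
            capture_output=True, text=True, check=True,
        )
    except subprocess.CalledProcessError:
        return []  # tool present but no working driver
    return parse_gpu_csv(result.stdout)


if __name__ == "__main__":
    for gpu in query_gpus():
        print(f"GPU {gpu['index']} ({gpu['name']}): "
              f"{gpu['utilization.gpu']}% util, "
              f"{gpu['memory.used']}/{gpu['memory.total']} MiB")
```

Values are kept as strings because some fields can come back as `[N/A]` on certain hardware; convert them to numbers only after checking.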

8. Conclusion: Not Just an Option, But a Necessity

Linux isn't just one choice among many for AI work. It's increasingly the only practical option for serious development and deployment. From research breakthroughs to production systems serving billions of predictions, Linux's flexibility, performance, and ecosystem make it indispensable.

That's why AI loves Linux.

What's your experience with Linux in AI workflows? Have you noticed performance differences between operating systems? Let's discuss in the comments! You can also follow me on Dev.to and connect with me on LinkedIn.

#30DaysLinuxChallenge #CloudWhistler #RedHat #DeepLearning #DevOps #Linux #OpenSource #DataScience #Womenwhobuild #MachineLearning #AI #LinuxForAI #Ansible #OpenShift #SysAdmin #MLOps #CloudEngineer
