Exploring a Python Project on GitHub

Exploring Multimodal Conversational AI in the Medical Domain with RAG and LLaVA

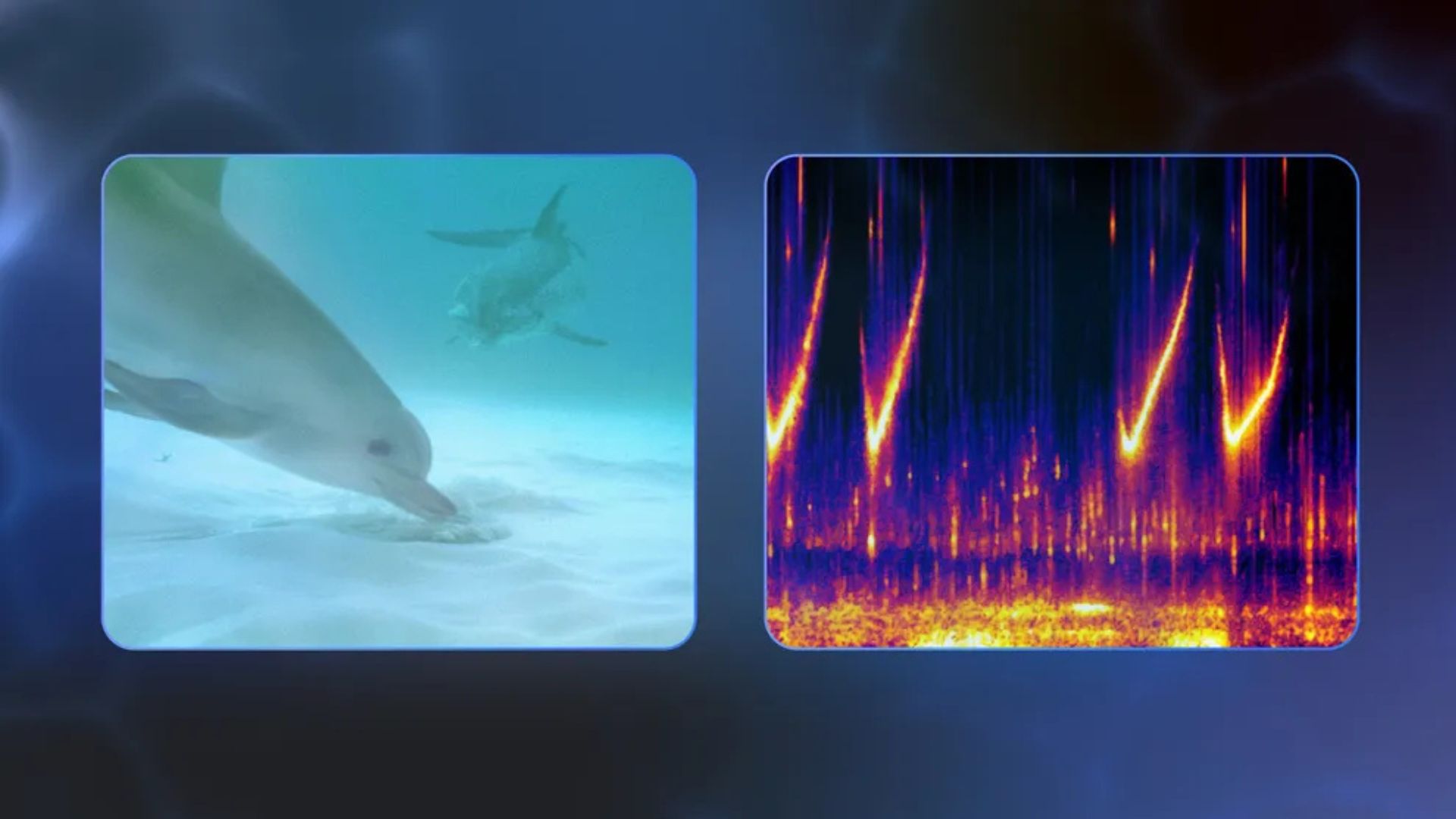

In healthcare, quick access to accurate medical information is crucial for quality patient care. With recent advances in AI, conversational systems are increasingly used to answer medical questions. This GitHub repo presents a capstone project that builds a multimodal conversational AI system able to answer medical queries using both text and images.
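The post does not say how the system decides which model handles a given query. One simple possibility is to send queries that include an image to LLaVA and text-only queries to the RAG pipeline. This is an assumption, not the repo's confirmed logic, and `MedicalQuery` and `route_query` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class MedicalQuery:
    """A user query: free text plus an optional path to an image such as a chest X-ray."""
    text: str
    image_path: Optional[str] = None


def route_query(query: MedicalQuery) -> str:
    """Pick a backend for the query (hypothetical routing rule).

    Queries that include an image go to the vision-language model (LLaVA);
    text-only queries go to the retrieval-augmented generation (RAG) pipeline.
    """
    if query.image_path:
        return "llava"
    return "rag"


if __name__ == "__main__":
    print(route_query(MedicalQuery("What are common symptoms of pneumonia?")))  # rag
    print(route_query(MedicalQuery("Is there an effusion?", "xray_001.png")))  # llava
```

A real system might also send image queries through retrieval first, so that LLaVA receives supporting text alongside the X-ray.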

Key Components of the Repo

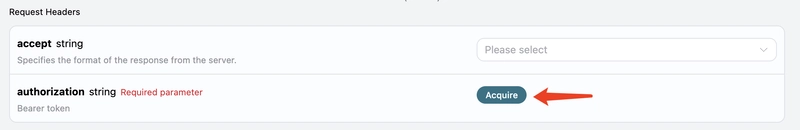

README.md: The README file provides an overview of the project, detailing the use of Retrieval-Augmented Generation (RAG) for text-based medical knowledge retrieval and LLaVA (Large Language and Vision Assistant) for analyzing chest X-rays.

environment.yml: This file contains the project's environment configuration, specifying the necessary dependencies such as Python, PyTorch, Transformers, FastAPI, and more.

new_temp.py: This source code file loads a LLaVA model for conditional generation of medical text.

requirements.txt: Lists additional dependencies and libraries essential for the project, including data handling, API, and backend components.

setup_project.ps1: This script defines the project structure with directories for data, source code, and preprocessing scripts.

temp.py: Another source code file that loads a LLaVA model and processor for text generation.
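To make the README's RAG component concrete, here is a minimal sketch of the retrieval step only. It ranks passages by bag-of-words cosine similarity to the query. This technique is a stand-in chosen for illustration; RAG pipelines typically use learned embeddings and a vector index instead. The passages, stopword list, and function names are hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical stopword list: common words that would otherwise dominate the overlap.
STOPWORDS = {"a", "an", "and", "are", "can", "in", "is", "of", "the", "to", "what", "with"}


def tokenize(text: str) -> list[str]:
    """Lowercase the text, split it into word tokens, and drop stopwords."""
    return [t for t in re.findall(r"[a-z0-9]+", text.lower()) if t not in STOPWORDS]


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, passages: list[str], k: int = 2) -> list[str]:
    """Return the k passages most similar to the query, best match first."""
    q = Counter(tokenize(query))
    ranked = sorted(passages, key=lambda p: cosine(q, Counter(tokenize(p))), reverse=True)
    return ranked[:k]


# Hypothetical knowledge-base passages, for illustration only.
PASSAGES = [
    "Pneumonia often presents with fever, cough, and shortness of breath.",
    "A pleural effusion is fluid between the lung and the chest wall.",
    "Pneumothorax is air in the pleural space and can cause sudden chest pain.",
]

if __name__ == "__main__":
    print(retrieve("What are the symptoms of pneumonia?", PASSAGES, k=1))
```

The top passages would then be placed in the language model's prompt as context. That generation step is not shown here.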

Code Snippets

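The post illustrates `new_temp.py` with simplified pseudocode (`LLaVA()` and `generate_text()`), which does not correspond to the Hugging Face Transformers API. Assuming the script uses Transformers and the public `llava-hf/llava-1.5-7b-hf` checkpoint, its model-loading step would look roughly like this:

```python
# Load a LLaVA model for conditional generation.
# Assumptions: Hugging Face Transformers and the llava-hf/llava-1.5-7b-hf checkpoint.
from transformers import LlavaForConditionalGeneration

model = LlavaForConditionalGeneration.from_pretrained("llava-hf/llava-1.5-7b-hf")
model.eval()  # inference mode; generating text also requires a processor to build inputs

print(type(model).__name__)  # LlavaForConditionalGeneration
```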

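The post's `temp.py` snippet adds a processor, but `LLaVA()` and `Processor()` are pseudocode rather than Transformers classes. A runnable version, assuming Hugging Face Transformers, the `llava-hf/llava-1.5-7b-hf` checkpoint, and a hypothetical local image `chest_xray.png`, might look like this:

```python
# Load a LLaVA model and its processor, then generate text about an image.
# Assumptions: Hugging Face Transformers, the llava-hf/llava-1.5-7b-hf checkpoint,
# and a local image file named chest_xray.png (hypothetical).
from PIL import Image
from transformers import AutoProcessor, LlavaForConditionalGeneration

model_id = "llava-hf/llava-1.5-7b-hf"
model = LlavaForConditionalGeneration.from_pretrained(model_id)
processor = AutoProcessor.from_pretrained(model_id)

image = Image.open("chest_xray.png").convert("RGB")  # X-rays are often grayscale
prompt = "USER: <image>\nDescribe this chest X-ray. ASSISTANT:"
inputs = processor(images=image, text=prompt, return_tensors="pt")
output_ids = model.generate(**inputs, max_new_tokens=100)
print(processor.decode(output_ids[0], skip_special_tokens=True))
```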
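When both a chest X-ray and retrieved text passages are available, they need to be combined into a single prompt. The sketch below assumes the `USER: <image>\n... ASSISTANT:` template used by the llava-hf LLaVA-1.5 checkpoints. Other LLaVA variants use different templates, and recent Transformers releases can also build prompts with `processor.apply_chat_template`. The function name and the "Context:" layout are hypothetical choices, not taken from the repo.

```python
from typing import Optional, Sequence


def build_llava_prompt(question: str, context: Optional[Sequence[str]] = None,
                       with_image: bool = True) -> str:
    """Format a single-turn prompt in the LLaVA-1.5 'USER: ... ASSISTANT:' style.

    The '<image>' placeholder marks where the processor inserts image features.
    Retrieved text passages (for example, from a RAG step) are optional.
    """
    parts = []
    if with_image:
        parts.append("<image>")
    if context:
        parts.append("Context:\n" + "\n".join(f"- {c}" for c in context))
    parts.append(question)
    return "USER: " + "\n".join(parts) + " ASSISTANT:"


if __name__ == "__main__":
    print(build_llava_prompt("Is there a pleural effusion?"))
    # USER: <image>
    # Is there a pleural effusion? ASSISTANT:
```

The resulting string is what would be passed as `text=` to the processor, together with the image.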

Example Usage

To run the project and generate medical text, follow these steps:

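- Clone the repo and enter its directory: `git clone https://github.com/yourusername/capstone-project.git`, then `cd capstone-project`.
- Set up the project environment with `conda env create -f environment.yml`, then activate it with `conda activate` followed by the environment name defined in `environment.yml`.
- Run `python new_temp.py` to generate medical text using the LLaVA model.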
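Before running `new_temp.py`, it can help to confirm that the active environment provides the main dependencies the post attributes to `environment.yml`. This sketch checks their import names with `importlib.util.find_spec`. The mapping of PyTorch to `torch`, Transformers to `transformers`, and FastAPI to `fastapi` is standard, but the list itself is an assumption and may not cover the repo's full dependency set.

```python
import importlib.util

# Import names for dependencies the post says environment.yml provides (assumed list).
REQUIRED_MODULES = ("torch", "transformers", "fastapi")


def missing_modules(names=REQUIRED_MODULES) -> list:
    """Return the top-level modules that cannot be found in the active Python environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules()
    if missing:
        print("Missing packages:", ", ".join(missing))
    else:
        print("Environment looks ready; run: python new_temp.py")
```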

Conclusion

Building a multimodal conversational AI system for the medical domain is challenging but rewarding. By combining text and images, this project aims to answer medical queries accurately and efficiently: RAG retrieves text-based medical knowledge, while LLaVA interprets chest X-rays, so the system can draw on both sources when answering.

The project has not yet drawn much attention, but it shows how retrieval and vision-language models could be combined to handle medical queries. As the technology matures, AI is likely to play a growing role in improving patient care and outcomes.
