Uh-Uh, Not Guilty

Who will take the blame for AI mistakes, and what can you do about it? The post Uh-Uh, Not Guilty appeared first on Towards Data Science.

They had it coming, they had it coming all along.

I didn’t do it.

But if I’d done it, how could you tell me that I was wrong?

And the part of the song I found interesting was the reframing of their violent actions through their moral lens: “It was a murder, but not a crime.”

In short, the musical tells a story of greed, murder, injustice, and blame-shifting plots that unfold in a world where truth is manipulated by media, clever lawyers, and public fascination with scandal.

As a mere observer in the audience, it is easy to fall for their stories, each told through the eyes of a victim who was merely responding to an intolerable situation.

Logically, there is a scientific explanation for why blame-shifting feels satisfying. Attributing negative events to external causes (other people or situations) activates brain regions associated with reward processing. If it feels good, it reinforces the behaviour and makes it more automatic.

This play of blame-shifting is now staged in the theatre of life, where humans can start blaming LLM-powered tools for their poor decisions and life outcomes, probably pulling out the argument of…

Artistic differences

Knowing how the differences (artistic or not) lead us to justify our bad acts and shift blame to others, it’s only common sense to assume we will do the same to AI and the models behind it.

When searching for a responsible party for AI-related failures, one paper, “It’s the AI’s fault, not mine,” reveals a pattern in how humans attribute blame depending on who’s involved and how they’re involved.

The research explored two key questions:

- (1) Would we blame AI more if we saw it as having human-like qualities?

- (2) And would this conveniently reduce blame for human stakeholders (programmers, teams, company, governments)?

Through three studies conducted in early 2022, before the “official” start of the generative AI era, when these models reached the public through a user interface, the research examined how humans distribute blame when AI systems commit moral transgressions, such as displaying racial bias, exposing children to inappropriate content, or unfairly distributing medical resources. It found the following:

When AI was portrayed with more human-like mental capacities, participants were more willing to point fingers at the AI system for moral failures.

Other findings were that not all human agents got off the hook equally:

- Companies benefited more from this blame-shifting game, receiving less blame when AI seemed more human-like.

- Meanwhile, AI programmers, teams, and government regulators didn’t experience reduced blame regardless of how mind-like the AI appeared.

And probably the most important discovery:

Across all scenarios, AI consistently received a smaller percentage of blame compared to human agents, and the AI programmer or the AI team shouldered the heaviest blame burden.

How were these findings explained?

The research suggested it’s about perceived roles and structural clarity:

- Companies with their “complex and often opaque structures” benefit from reduced blame when AI appears more human-like. They can more easily distance themselves from AI mishaps and shift blame to the seemingly autonomous AI system.

- Programmers with their direct technical involvement in creating the AI features remained firmly accountable regardless of AI anthropomorphisation. Their “fingerprints” on the system’s decision-making architecture make it nearly impossible for them to claim “the AI acted independently.”

- Government entities with their regulatory oversight roles maintained steady (though lower overall) blame levels, as their responsibilities for monitoring AI systems remained clear regardless of how human-like the AI appeared.

This “moral scapegoating” suggests corporate accountability might increasingly dissolve as AI systems appear more autonomous and human-like.

You would now say, this is…

All that jazz

Scapegoating and blaming others occur when the stakes are high, and the media usually likes to run a big headline with a villain:

- “New York lawyers sanctioned for using fake ChatGPT cases in legal brief”

- “Cursor AI Chatbot Invents Fake Policy, Sparks Developer Outrage”

- “Greek Woman Divorces Husband After ChatGPT ‘Predicted’ He Would Cheat on Her!”

Reading these headlines, you can instantly blame the end-user or developer for not knowing how the new tools (yes, tools!) are built and how they should be used, implemented or tested. But none of this helps when the damage is already done and someone needs to be held accountable for it.

Speaking of accountability, I can’t skip the EU AI Act and its regulatory framework, which puts AI providers, deployers and importers on the hook by stating that:

“Users (deployers) of high-risk AI systems have some obligations, though less than providers (developers).”

Among other things, the Act defines different classes of AI systems and categorises as high-risk those used in critical areas like hiring, essential services, law enforcement, migration, justice administration, and democratic processes.

For these systems, providers must implement a risk-management system that identifies, analyses, and mitigates risks throughout the AI system’s lifecycle.

This extends into a mandatory quality management system covering regulatory compliance, design processes, development practices, testing procedures, data management, and post-market monitoring. It must include “an accountability framework setting out the responsibilities of management and other staff.”

On the other side, deployers of high-risk AI systems need to implement appropriate technical measures, ensure human oversight, monitor system performance, and, in certain cases, conduct fundamental rights impact assessments.

To sweeten this up, non-compliance can result in fines of up to €35 million or 7% of global annual turnover.
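To get a feel for what that ceiling means in practice, here is a minimal sketch of the arithmetic. It assumes, as I read the Act, that for companies the applicable ceiling is whichever of the two amounts is higher, and that this top tier is reserved for the most serious infringements. Both points are assumptions to check against the regulation itself, and the function name `max_fine_eur` is purely illustrative.

```python
FIXED_CAP_EUR = 35_000_000   # the fixed ceiling quoted above
TURNOVER_SHARE_PERCENT = 7   # the turnover-based ceiling quoted above


def max_fine_eur(global_annual_turnover_eur: int) -> int:
    """Upper bound of the fine in whole euros.

    Assumption: the higher of the two ceilings applies.
    Integer arithmetic is used to avoid floating-point rounding.
    """
    turnover_cap = global_annual_turnover_eur * TURNOVER_SHARE_PERCENT // 100
    return max(FIXED_CAP_EUR, turnover_cap)


# A company with EUR 100 million turnover: 7% is EUR 7 million,
# so the fixed EUR 35 million ceiling is the larger of the two.
small = max_fine_eur(100_000_000)

# A company with EUR 1 billion turnover: 7% is EUR 70 million,
# which exceeds the fixed ceiling.
large = max_fine_eur(1_000_000_000)
```

Under these assumptions the two ceilings meet at a turnover of €500 million; above that, the percentage is the number that matters.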

Maybe you now think, “I’m off the hook… I am only the end-user, and all this is none of my concern”, but let me remind you of the headlines above, where no lawyer could razzle-dazzle a judge into believing in the innocence of someone who leveraged AI in a work situation that seriously affected other parties.

Now that we’ve clarified this, let’s discuss how everyone can contribute to the AI responsibility circle.

When you’re good to AI, AI’s good to you

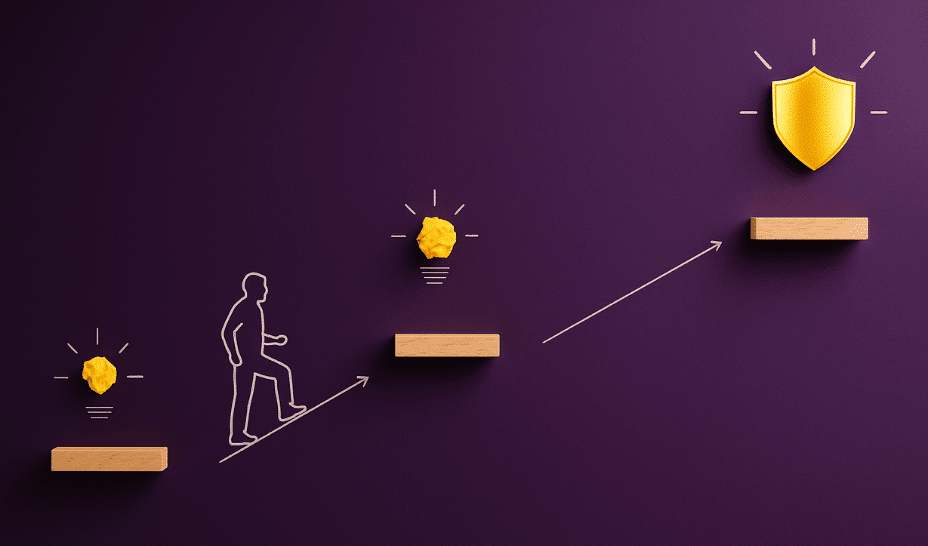

True responsibility in the AI pipeline requires personal commitment from everyone involved, and with this, the best you can do is:

- Educate yourself on AI: Instead of blindly relying on AI tools, learn first how they are built and which tasks they can solve. You, too, can classify your tasks into different criticalities and understand where it is crucial to have humans deliver them, and where AI can step in with human-in-the-loop, or independently.

- Build a testing system: Create personal checklists for cross-checking AI outputs against other sources before acting on them. A good approach is to use more than one testing technique and more than one human tester. (What can I say, blame the good development practices.)

- Question the outputs (always, even with the testing system): Before accepting AI recommendations, ask “How confident am I in this output?” and “What’s the worst that could happen if this is wrong and who will be affected?”

- Document your process: Keep records of how you used AI tools, what inputs you provided, and what decisions you made based on the outputs. If you did everything by the book and followed processes, documentation in the AI-supported decision-making process will be a critical piece of evidence.

- Speak up about concerns: If you notice problematic patterns in the AI tools you use, report them to the relevant human agents. Staying quiet about malfunctioning AI systems is not a good strategy, even if you caused part of the problem; reacting in time and taking responsibility is the long-term road to success.
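If you like your checklists executable, the habits above can be sketched as a small personal log. This is only one possible shape, not a prescribed format: the record fields, the three criticality levels, and the reviewer thresholds in `REQUIRED_REVIEWERS` are all my own assumptions, and the tool name in the example is hypothetical.

```python
import json
from dataclasses import dataclass, asdict, field
from datetime import datetime, timezone
from enum import Enum


class Criticality(str, Enum):
    LOW = "low"        # AI may work independently
    MEDIUM = "medium"  # AI with a human in the loop
    HIGH = "high"      # humans deliver, AI only assists


# Assumed thresholds: how many human reviewers each level needs.
REQUIRED_REVIEWERS = {
    Criticality.LOW: 0,
    Criticality.MEDIUM: 1,
    Criticality.HIGH: 2,
}


@dataclass
class AIDecisionRecord:
    tool: str
    task: str
    criticality: Criticality
    prompt: str
    output_summary: str
    cross_checked_against: list[str] = field(default_factory=list)
    reviewed_by: list[str] = field(default_factory=list)
    decision: str = ""
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def open_issues(record: AIDecisionRecord) -> list[str]:
    """Return the checklist items this record does not yet satisfy."""
    issues = []
    if not record.cross_checked_against:
        issues.append("output not cross-checked against another source")
    needed = REQUIRED_REVIEWERS[record.criticality]
    if len(record.reviewed_by) < needed:
        issues.append(f"needs at least {needed} human reviewer(s)")
    if not record.decision:
        issues.append("final decision not documented")
    return issues


def to_log_line(record: AIDecisionRecord) -> str:
    """Serialise one record as a JSON line for an append-only log file."""
    return json.dumps(asdict(record), ensure_ascii=False)


# Example: a high-criticality task with only one reviewer so far.
record = AIDecisionRecord(
    tool="hypothetical-chat-assistant",
    task="collect precedents for a legal brief",
    criticality=Criticality.HIGH,
    prompt="List court cases relevant to the dispute.",
    output_summary="Six cases returned, none verified yet",
    reviewed_by=["associate"],
)
for issue in open_issues(record):
    print("TODO:", issue)
```

Appending `to_log_line(record)` to a file each time you act on an AI output gives you the record of inputs, checks and decisions that the list above asks for, and an empty `open_issues` result is a reasonable gate before you act.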

Finally, I recommend familiarising yourself with the regulations to understand your rights alongside responsibilities. No framework can change the fact that AI decisions carry human fingerprints and that humans will consider other humans, not the tools, responsible for AI mistakes.

Unlike the fictional murderesses of the Chicago musical who danced their way through blame, in real AI failures, the evidence trail won’t disappear with a smart lawyer and superficial story.

Thank You for Reading!

If you found this post valuable, feel free to share it with your network.