Why Are Convolutional Neural Networks Great For Images?

How data symmetry informs neural network architectures

The post Why Are Convolutional Neural Networks Great For Images? appeared first on Towards Data Science.

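In principle, a simple feed-forward neural network could do the trick. According to the Universal Approximation Theorem, a network with a single hidden layer can approximate any continuous function on a bounded input domain arbitrarily well. Practical issues aside, such as the number of neurons in this hidden layer growing enormously large, we would not need other network architectures.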

It is challenging to estimate how many network architectures have been developed.

When you open the popular AI model platform Hugging Face today, you will find more than one million pretrained models. Depending on the task, you will use different architectures, for example transformers for natural language processing and convolutional networks for image classification.

So, why do we need so many neural network architectures?

In this post, I want to offer an answer to this question from a physics perspective: it is the structure in the data that inspires novel neural network architectures.

Symmetry and invariance

Physicists love symmetry. The fundamental laws of physics employ symmetries, such as the fact that the motion of a particle can be described by the same equations, regardless of where it finds itself in time and space.

Symmetry always implies invariance with respect to some transformation. Ice crystals are an example of translational invariance: their smaller structures look the same, regardless of where they appear in the larger context.
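In symbols, one way to state translational invariance is sketched below. The notation is introduced here only for illustration: $x$ is an image (or any field) defined on positions $\mathbf{r}$, $T_{\mathbf{a}}$ shifts it by a vector $\mathbf{a}$, and $f$ is the quantity we care about, such as a class label.

$$
(T_{\mathbf{a}} x)(\mathbf{r}) = x(\mathbf{r} - \mathbf{a}),
\qquad
f(T_{\mathbf{a}} x) = f(x) \quad \text{for every shift } \mathbf{a}.
$$

For image classification, $f$ would be the predicted label, which should not change when the object moves within the image.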

Exploiting symmetries: convolutional neural networks

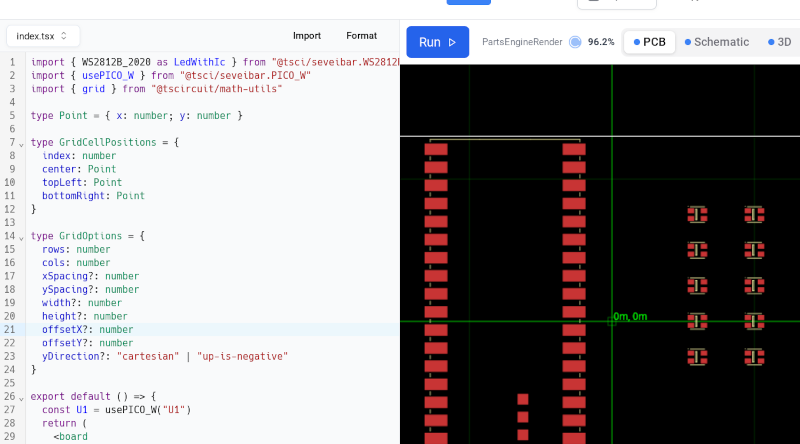

If you already know that a certain symmetry persists in your data, you can exploit this fact to simplify your neural network architecture.

Let’s explain this with the example of image classification. The panel shows three scenes containing a goldfish. The fish can show up anywhere within the image, but the image should always be classified as “goldfish”.

A feed-forward neural network could certainly achieve this, given sufficient training data.

This network architecture requires a flattened input image: the 2D grid of pixels is unrolled into a single 1D vector. Weights are then assigned between each input-layer neuron (representing one pixel in the image) and each hidden-layer neuron, and likewise between each hidden-layer neuron and each output-layer neuron.
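The fully connected setup can be sketched in plain Python as follows. This is a minimal illustration, not the article's code: the helper names, the tiny 2 × 2 example, and the hypothetical 224 × 224 RGB image with 1,000 hidden neurons and 10 classes are all assumptions chosen to show how the parameter count scales with the number of pixels. The count also includes one bias per neuron, which most frameworks add by default.

```python
def flatten(image):
    """Turn a 2D list of pixel values (rows x columns) into a 1D list."""
    return [pixel for row in image for pixel in row]


def dense_forward(x, weights, biases):
    """Compute y_j = sum_i W[j][i] * x[i] + b[j] for every output neuron j."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, biases)]


def count_dense_params(n_inputs, n_hidden, n_outputs):
    """Weights + biases for the input->hidden and hidden->output connections."""
    return (n_inputs * n_hidden + n_hidden) + (n_hidden * n_outputs + n_outputs)


# Tiny example: a 2x2 image, flattened to 4 input neurons, feeding 3 hidden neurons.
image = [[1.0, 2.0],
         [3.0, 4.0]]
x = flatten(image)                      # [1.0, 2.0, 3.0, 4.0]
W = [[0.1, 0.0, 0.0, 0.0],              # one row of weights per hidden neuron,
     [0.0, 0.1, 0.0, 0.0],              # one column per input pixel
     [0.0, 0.0, 0.0, 0.1]]
b = [0.0, 0.0, 0.0]
hidden = dense_forward(x, W, b)         # approximately [0.1, 0.2, 0.4]

# Hypothetical sizes: 224x224 RGB input, 1,000 hidden neurons, 10 output classes.
n_params = count_dense_params(224 * 224 * 3, 1000, 10)
print(n_params)  # 150539010
```

Almost all of those roughly 150 million parameters sit between the input and hidden layers, so doubling the image side length roughly quadruples the count.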

Along with this architecture, the panel shows a “flattened” version of the three goldfish images from above. Do they still look alike to you?

By flattening the image, we have introduced two problems:

- Images that contain a similar object no longer look alike once they are flattened.

- For high-resolution images, we need to train a lot of weights connecting the input layer and the hidden layer.
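The first problem can be made concrete with a toy example. In this minimal sketch (plain Python, hypothetical data), the same 2 × 2 “object” is placed at two positions in an 8 × 8 image. After flattening, the two vectors share no active input neurons, so a fully connected layer sees two unrelated input patterns and would have to learn the object separately at each location.

```python
def make_image(size, top, left, obj_size=2):
    """Return a size x size image of zeros with a square 'object' of ones."""
    image = [[0] * size for _ in range(size)]
    for r in range(top, top + obj_size):
        for c in range(left, left + obj_size):
            image[r][c] = 1
    return image


def flatten(image):
    """Unroll a 2D image row by row into a 1D list."""
    return [p for row in image for p in row]


def dot(a, b):
    """Overlap between two flattened images (number of shared 'on' pixels here)."""
    return sum(x * y for x, y in zip(a, b))


fish_a = flatten(make_image(8, top=1, left=1))  # object near the top-left
fish_b = flatten(make_image(8, top=5, left=5))  # same object near the bottom-right

print(dot(fish_a, fish_a))  # 4: a flattened image fully overlaps with itself
print(dot(fish_a, fish_b))  # 0: the two flattened images do not overlap at all
```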

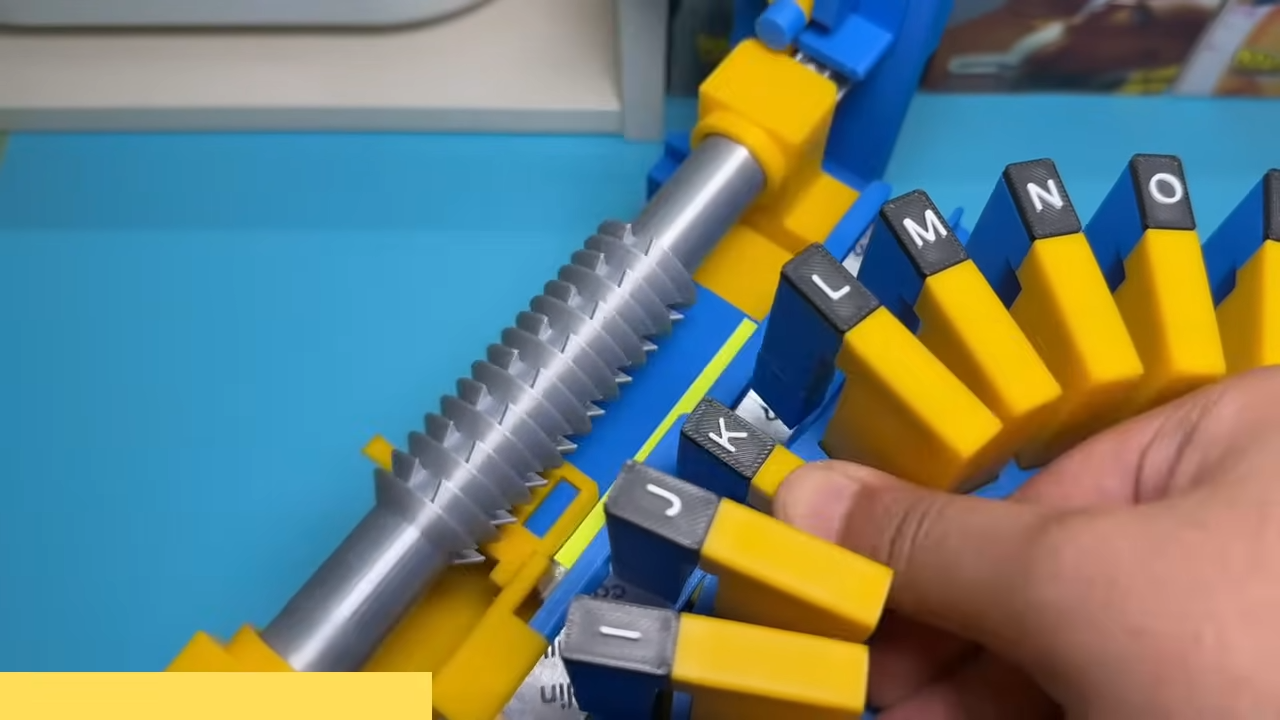

Convolutional networks, on the other hand, work with kernels: small grids of weights, typically between 3 × 3 and 7 × 7 pixels in size, whose values are learned during training.

The kernel slides across the image like a raster scan, computing a weighted sum of the pixels beneath it at each position. A convolutional layer usually has several kernels, allowing each kernel to focus on different aspects of the image.
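The sliding-window computation can be sketched in plain Python. This is a simplified sketch under stated assumptions: a single-channel image, stride 1, no padding, and no bias. Like most deep learning libraries, it computes a cross-correlation (the kernel is not flipped). The two kernels are hypothetical values picked so the output is easy to check by hand; in a real network they would be learned.

```python
def conv2d(image, kernel):
    """Slide `kernel` over `image` and return the map of weighted sums."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the kh x kw patch whose top-left corner is (i, j).
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output


def conv_layer(image, kernels):
    """Apply every kernel to the image, producing one feature map per kernel."""
    return [conv2d(image, k) for k in kernels]


image = [[1, 2, 3, 0],
         [4, 5, 6, 0],
         [7, 8, 9, 0],
         [0, 0, 0, 0]]
kernels = [
    [[1, 0], [0, 0]],  # copies the top-left pixel of each 2x2 patch
    [[1, 1], [1, 1]],  # sums each 2x2 patch
]
feature_maps = conv_layer(image, kernels)
print(feature_maps[1][0])  # [12, 16, 9]
```

Note that the same few kernel weights are reused at every position, which is why the layer's size does not depend on the image resolution.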

For example, one kernel might pick up on horizontal lines in the image, while another might pick up on convex curves.
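As a hand-built illustration of this idea, the sketch below applies two hypothetical 3 × 3 kernels to a 5 × 5 image containing a single horizontal line. The kernel values are picked by hand for clarity; a trained CNN learns its own values, which need not look this tidy. The “horizontal” kernel responds strongly along the line, while the “vertical” kernel gives zero everywhere.

```python
# Hand-picked kernels for illustration only; a trained CNN learns its own values.
HORIZONTAL = [[-1, -1, -1],
              [ 2,  2,  2],
              [-1, -1, -1]]
VERTICAL = [[-1, 2, -1],
            [-1, 2, -1],
            [-1, 2, -1]]


def conv2d(image, kernel):
    """Valid (no padding), stride-1 cross-correlation of a 2D list with a kernel."""
    kh, kw = len(kernel), len(kernel[0])
    return [[sum(image[i + di][j + dj] * kernel[di][dj]
                 for di in range(kh) for dj in range(kw))
             for j in range(len(image[0]) - kw + 1)]
            for i in range(len(image) - kh + 1)]


# A 5x5 image containing a single horizontal line in the middle row.
image = [[0, 0, 0, 0, 0],
         [0, 0, 0, 0, 0],
         [1, 1, 1, 1, 1],
         [0, 0, 0, 0, 0],
         [0, 0, 0, 0, 0]]

h_map = conv2d(image, HORIZONTAL)
v_map = conv2d(image, VERTICAL)
print(h_map)  # [[-3, -3, -3], [6, 6, 6], [-3, -3, -3]]
print(v_map)  # [[0, 0, 0], [0, 0, 0], [0, 0, 0]]
```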

Convolutional neural networks preserve the spatial arrangement of pixels and are well suited to learning localized structures. Convolutional layers can be stacked to create deep networks, and in conjunction with pooling layers, they can learn high-level features.
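How convolution and pooling together handle the goldfish problem can be sketched with toy data. This minimal example uses a hypothetical 2 × 2 “detector” kernel and a simplified global max pooling over the whole feature map (many real CNNs instead stack several local pooling layers). As the object moves, the peak in the feature map moves with it, but the pooled value stays the same, so anything computed from the pooled value no longer depends on the object's position.

```python
def conv2d(image, kernel):
    """Valid (no padding), stride-1 cross-correlation of a 2D list with a kernel."""
    kh, kw = len(kernel), len(kernel[0])
    return [[sum(image[i + di][j + dj] * kernel[di][dj]
                 for di in range(kh) for dj in range(kw))
             for j in range(len(image[0]) - kw + 1)]
            for i in range(len(image) - kh + 1)]


def make_image(size, top, left):
    """Hypothetical toy image: a 2x2 block of ones (the 'goldfish') on zeros."""
    image = [[0] * size for _ in range(size)]
    for r in range(top, top + 2):
        for c in range(left, left + 2):
            image[r][c] = 1
    return image


def global_max_pool(fmap):
    """Return the strongest response and the (row, col) where it occurs."""
    return max((value, (i, j))
               for i, row in enumerate(fmap)
               for j, value in enumerate(row))


DETECTOR = [[1, 1],
            [1, 1]]  # responds most strongly to a 2x2 block of ones

positions = [(0, 0), (3, 4), (6, 6)]
results = [global_max_pool(conv2d(make_image(8, top, left), DETECTOR))
           for top, left in positions]
for (top, left), (value, where) in zip(positions, results):
    # The peak location tracks the object; the pooled value does not change.
    print(f"object at {(top, left)}: peak at {where}, pooled value {value}")
```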

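The resulting networks are considerably smaller than a fully connected neural network would be. A convolutional layer only requires kernel_size × kernel_size × n_channels × n_kernels trainable weights (plus one bias per kernel), where n_channels is the number of input channels, for example 3 for an RGB image. Crucially, this number does not depend on the image resolution.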
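The parameter counts can be compared in a few lines. This sketch assumes one weight per kernel entry per input channel plus one bias per kernel; with a single input channel and no bias, the convolutional count reduces to kernel_size × kernel_size × n_kernels. The comparison setup (16 kernels of size 3 × 3 versus a fully connected layer with 16 hidden neurons on a grayscale image) is hypothetical.

```python
def conv_params(kernel_size, in_channels, n_kernels, bias=True):
    """Trainable parameters of one convolutional layer with square kernels."""
    weights = kernel_size * kernel_size * in_channels * n_kernels
    return weights + (n_kernels if bias else 0)


def dense_params(n_inputs, n_outputs, bias=True):
    """Trainable parameters of one fully connected layer."""
    return n_inputs * n_outputs + (n_outputs if bias else 0)


# Hypothetical comparison: 16 3x3 kernels vs. 16 hidden neurons on a grayscale image.
for side in (32, 224, 1024):
    n_pixels = side * side
    print(f"{side}x{side}: conv={conv_params(3, 1, 16)}, "
          f"dense={dense_params(n_pixels, 16)}")
```

The convolutional count stays at 160 for every resolution, while the dense count grows with the number of pixels (802,832 at 224 × 224). For reference, PyTorch's `nn.Conv2d(1, 16, 3)` stores a `weight` tensor of shape (16, 1, 3, 3) and a `bias` of length 16, which is the same 160 parameters.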

You can save memory and computational budget, all by exploiting the fact that your object may be located anywhere within your image!

Other, more advanced deep learning architectures that exploit symmetries include graph neural networks and physics-informed neural networks.

Summary

Convolutional neural networks work great with images because they preserve the local structure of your image and reuse the same kernels at every position, exploiting translational symmetry. Instead of flattening all the pixels, which scrambles their spatial relationships, kernels with learnable parameters pick up on local features wherever they occur.

Further reading

- Universal Approximation Theorem

- Documentation for convolutional layers in Torch
